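The pyVmomi VMware automation library is available on the NSX Advanced Load Balancer Controller for use by ControlScripts. pyVmomi is the Python SDK for the VMware vSphere API and supports managing ESX, ESXi, and vCenter. A common use of this automation is autoscaling of back-end pool servers. The steps in this topic scale pool servers in and out automatically according to an autoscale policy.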

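Configuring Pool Server Autoscaling in VMware Cloud

To configure scaling for a server pool, the following configuration is required. All of the configuration steps below must be performed in the same tenant as the pool.

Create the ControlScripts

Define the autoscale alerts

Attach the autoscale alerts to the server pool's autoscale policy

Create scale-out and scale-in alerts that are triggered by the Server Autoscale Out and Server Autoscale In events and run the relevant ControlScript.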

Step 1: Create the ControlScripts

The following ControlScripts need to be created:

Scale-Out Action

#!/usr/bin/python
import sys
from avi.vmware_ops.vmware_scale import scale_out

vmware_settings = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'cluster_name': 'Cluster1',
    'template_name': 'web-app-template',
    'template_folder_name': 'Datacenter1/WebApp/Template',
    'customization_spec_name': 'web-app-centos-dhcp',
    'vm_folder_name': 'Datacenter1/WebApp/Servers',
    'resource_pool_name': None,
    'port_group': 'WebApp'}

scale_out(vmware_settings, *sys.argv)

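The vmware_settings parameters are as follows:

vcenter – IP address or host name of vCenter.

user – User name for vCenter access.

password – Password for vCenter access.

cluster_name – vCenter cluster name.

template_folder_name – Folder containing the template VM, for example Datacenter1/Folder1/Subfolder1, or None to search all folders (specifying a folder is more efficient when there are many VMs).

template_name – Name of the template VM.

vm_folder_name – Name of the folder in which to place the new VM (or None to use the same folder as the template).

customization_spec_name – Name of the customization specification to use. The specification must use DHCP to assign IP addresses to the newly created VMs.

resource_pool_name – Name of the VMware resource pool, or None if there is no specific resource pool (the VM is assigned to the default "hidden" resource pool).

port_group – Name of the port group containing the pool member IP (useful when the VM has multiple vNICs), or None to use the IP from the first vNIC.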
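The parameter list above can be checked before a ControlScript is saved. The following is a minimal sketch, not part of the Avi SDK: `check_settings`, `REQUIRED_KEYS`, and `OPTIONAL_KEYS` are hypothetical names, and the split between required and optional keys is an assumption based on which settings the utility script in this topic reads with `[]` rather than `.get()`.

```python
# Hypothetical helper, not part of the Avi SDK: reports problems in a
# vmware_settings dictionary before it is handed to scale_out().
REQUIRED_KEYS = ('vcenter', 'user', 'password', 'cluster_name',
                 'template_name', 'customization_spec_name')
OPTIONAL_KEYS = ('template_folder_name', 'vm_folder_name',
                 'resource_pool_name', 'port_group')


def check_settings(settings):
    """Return a list of problems found in a vmware_settings dictionary."""
    problems = []
    for key in REQUIRED_KEYS:
        # A required setting must be present and non-empty.
        if not settings.get(key):
            problems.append('missing required setting: %s' % key)
    for key in settings:
        # Catch misspelled keys, which would otherwise be ignored silently.
        if key not in REQUIRED_KEYS + OPTIONAL_KEYS:
            problems.append('unknown setting: %s' % key)
    return problems


example = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'cluster_name': 'Cluster1',
    'template_name': 'web-app-template',
    'customization_spec_name': 'web-app-centos-dhcp',
    'resource_pool_name': None,  # None selects the default resource pool
}
print(check_settings(example))
```

Optional settings may be omitted or set to None; only the six required keys must carry a value.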

Scale-In Action

#!/usr/bin/python
import sys
# The scale-in script must import scale_in, the function it calls below.
from avi.vmware_ops.vmware_scale import scale_in

vmware_settings = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'vm_folder_name': 'Datacenter1/WebApp/Servers'}

scale_in(vmware_settings, *sys.argv)
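Both ControlScripts pass `sys.argv` to the scaling function, and the utility script in this topic decodes the first argument after the script name as JSON. The sketch below shows only that decoding step. `load_alert_info` is a hypothetical name, and the payload is a made-up placeholder, not the real alert format.

```python
import json


def load_alert_info(argv):
    """Decode the alert details passed as the first command-line argument."""
    if len(argv) < 2:
        raise ValueError('expected alert details as the first argument')
    return json.loads(argv[1])


# Simulated argument vector; the JSON content is a placeholder only.
fake_argv = ['scale_in.py', '{"name": "example-alert"}']
alert_info = load_alert_info(fake_argv)
print(alert_info['name'])
```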

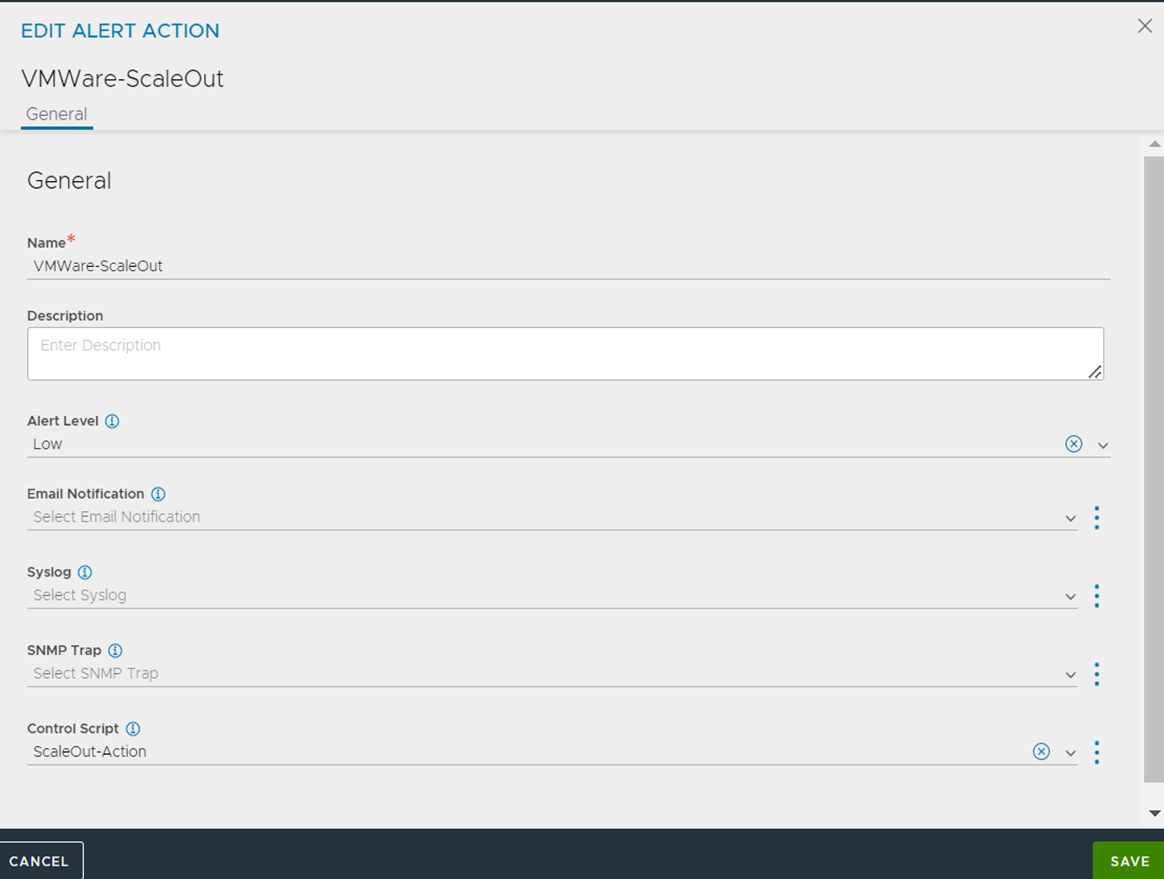

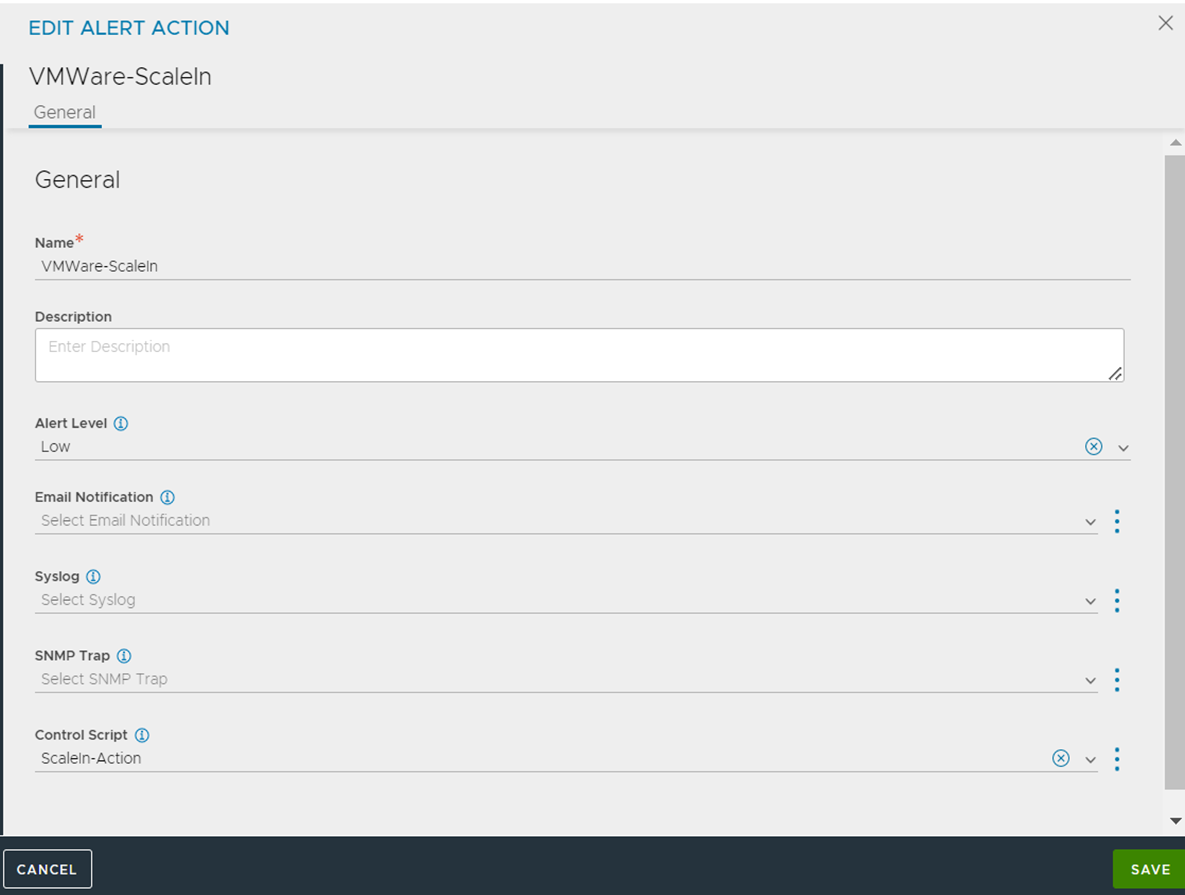

Step 2: Define the Autoscale Alerts

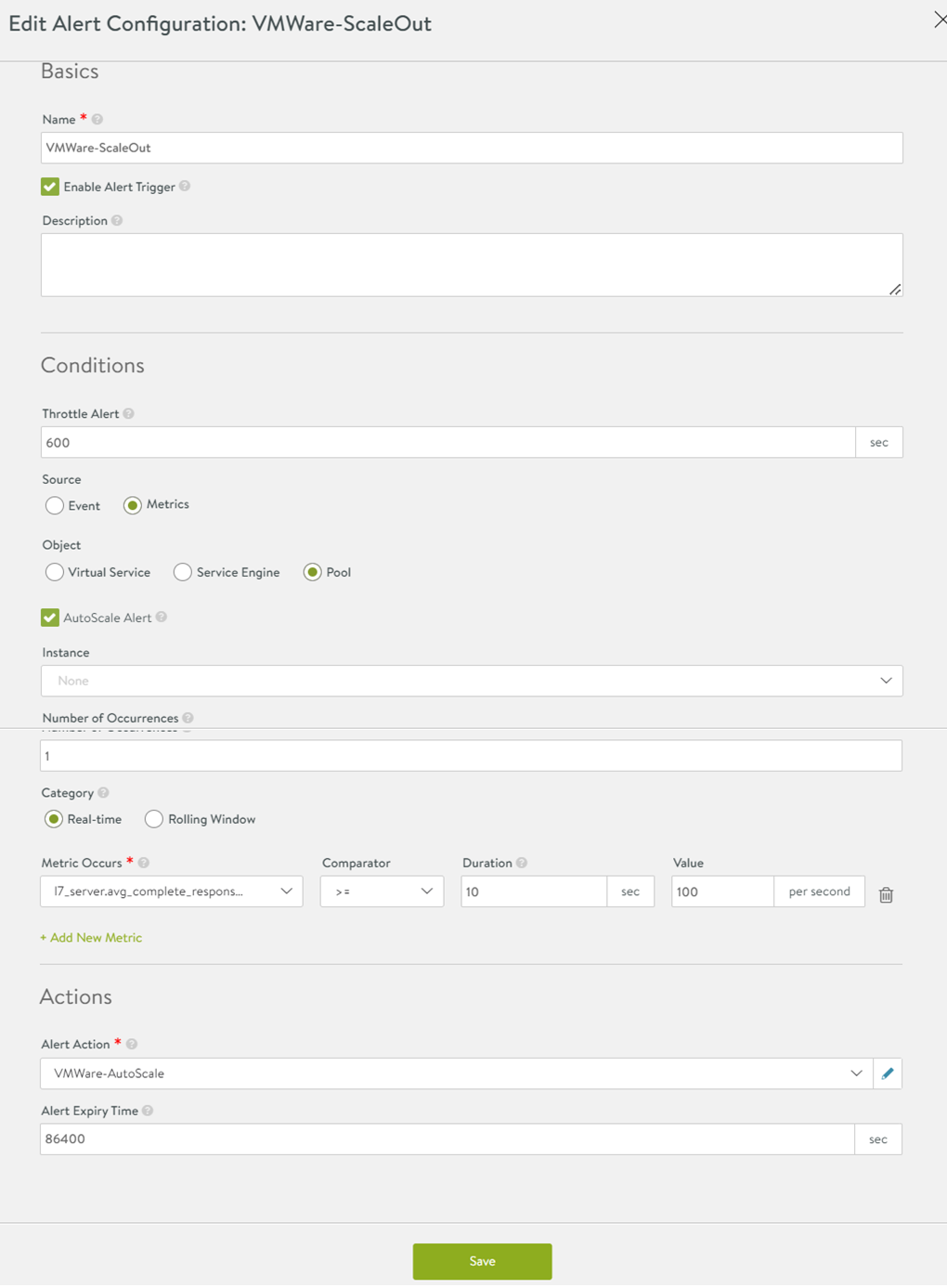

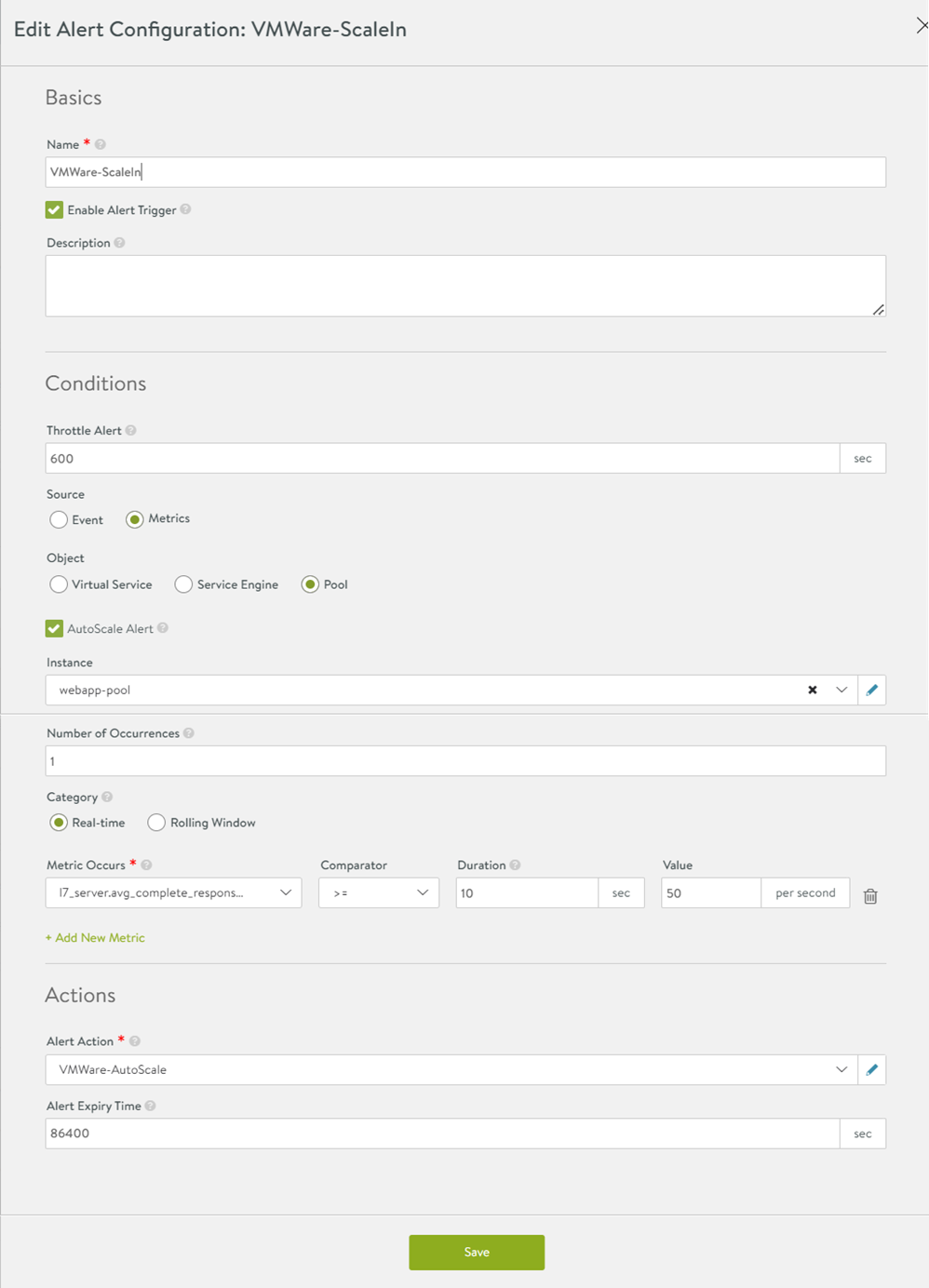

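These alerts define the metric trigger thresholds on which server autoscale decisions are made. The alerts do not run the scale-in or scale-out ControlScripts directly. Instead, they are used in the autoscale policy, together with the minimum and maximum server parameters and the number of servers to add or remove, to generate Server Autoscale Out or Server Autoscale In events. For information about the autoscale events, see Step 3.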
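The interaction between the step size and the minimum and maximum server limits can be pictured with a short sketch. This is an illustration only, not the Controller's actual decision logic; `next_server_count` and its parameters are hypothetical names.

```python
def next_server_count(current, step, min_servers, max_servers, scale_out):
    """Return the target pool size after one scaling action (illustrative)."""
    # Move by the configured step in the requested direction.
    target = current + step if scale_out else current - step
    # Keep the result inside the configured minimum and maximum.
    return max(min_servers, min(max_servers, target))


print(next_server_count(3, 2, 1, 4, scale_out=True))   # capped at the maximum: 4
print(next_server_count(2, 2, 1, 4, scale_out=False))  # held at the minimum: 1
```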

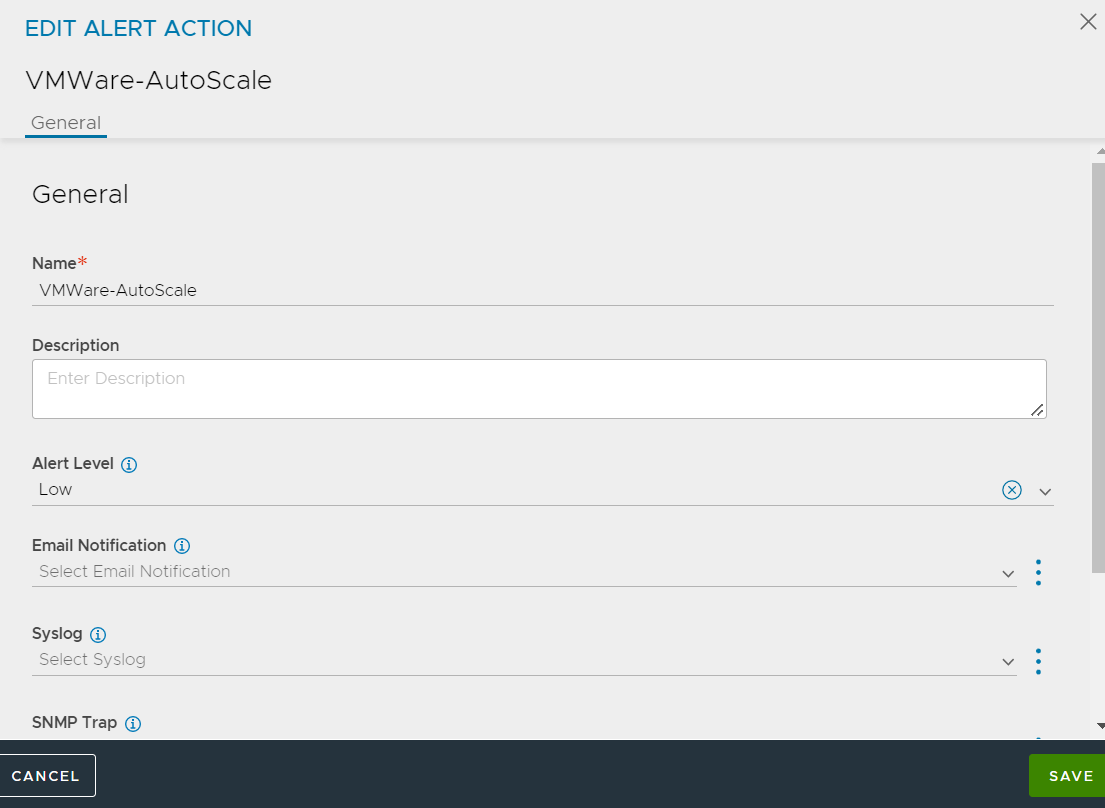

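The actions taken on these alerts must not run the ControlScripts above, but they can be set to generate informational emails, syslog messages, traps, and so on. For example: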

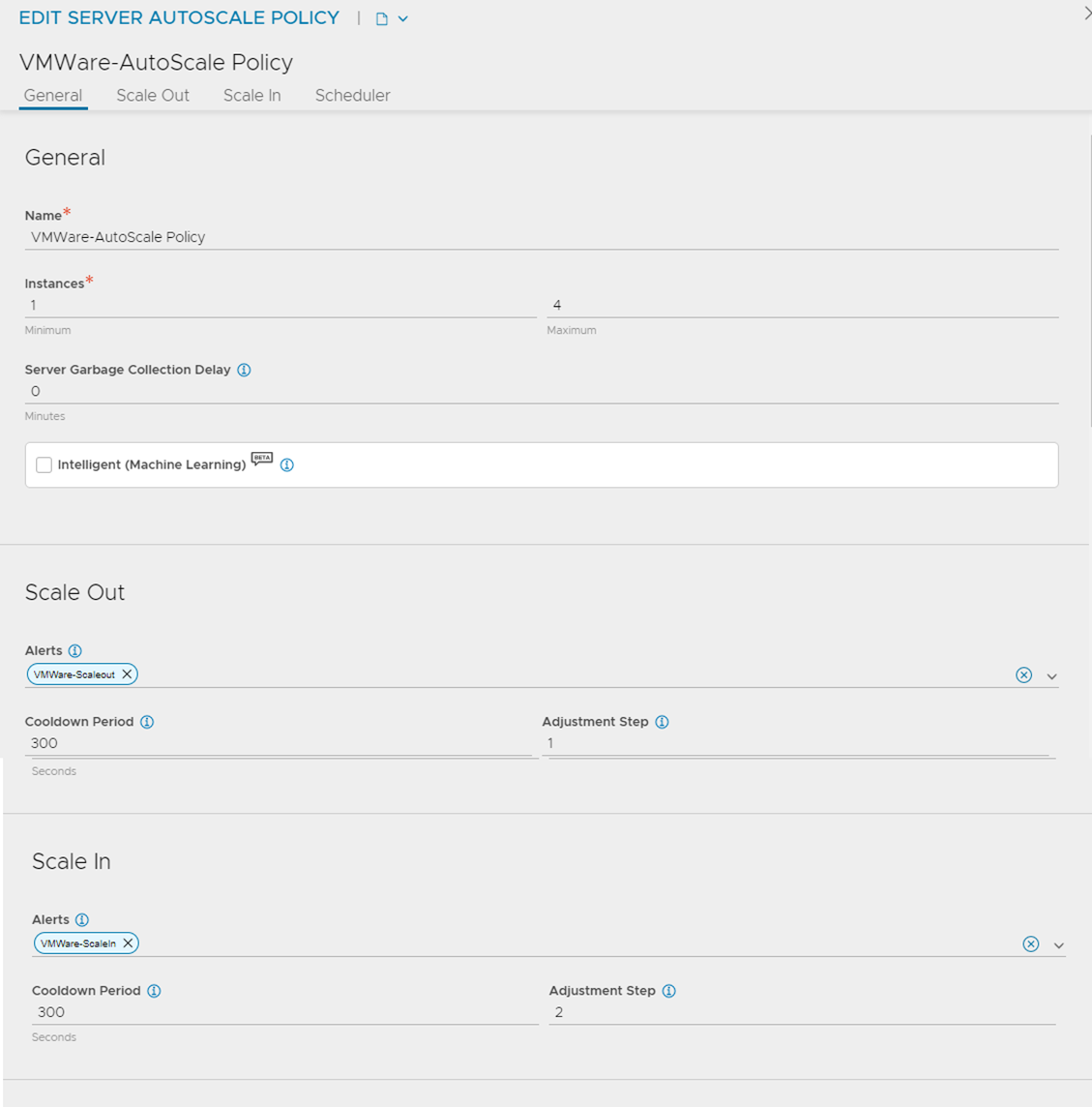

Step 3: Attach the Autoscale Alerts to the Server Pool's Autoscale Policy

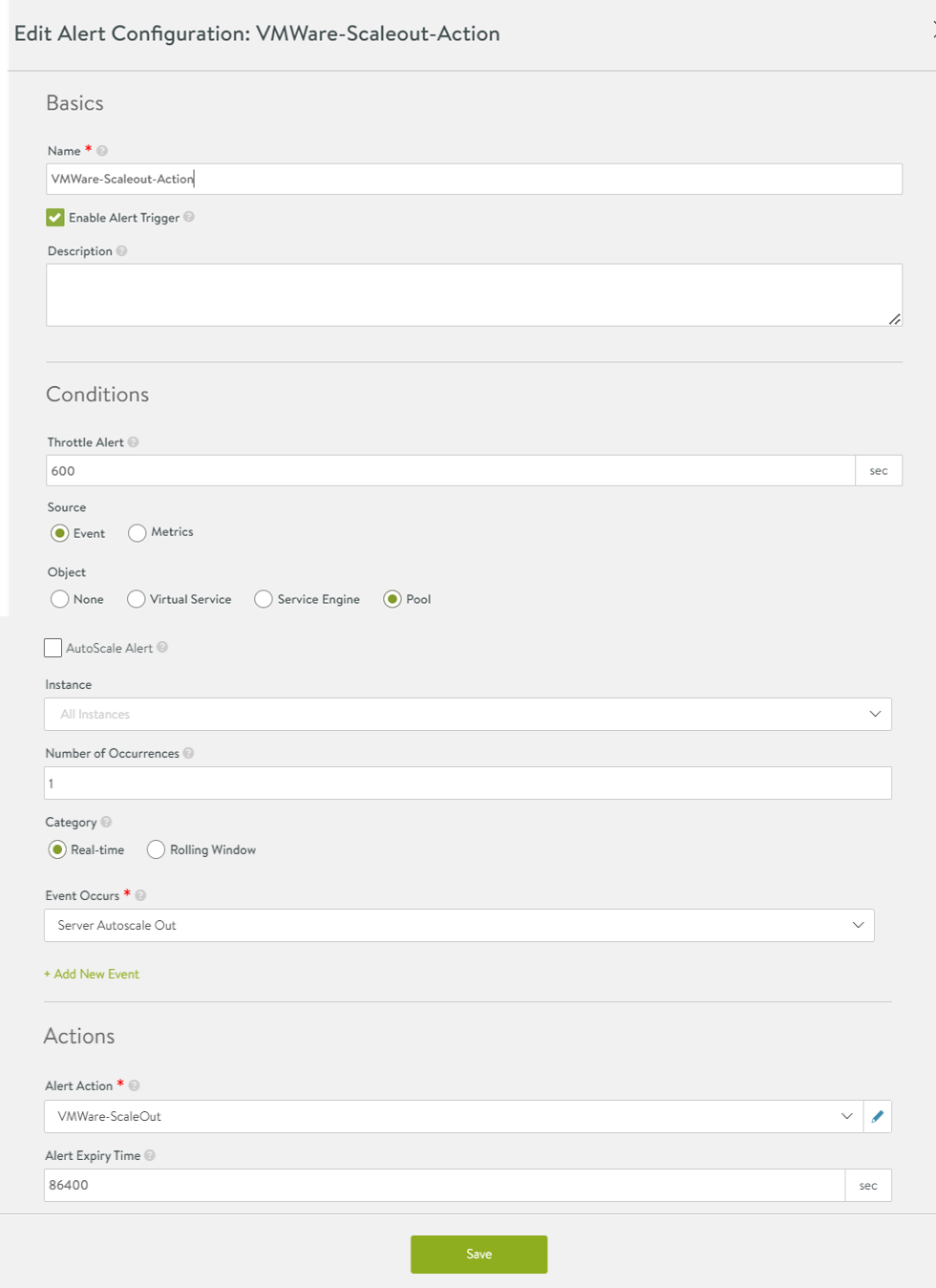

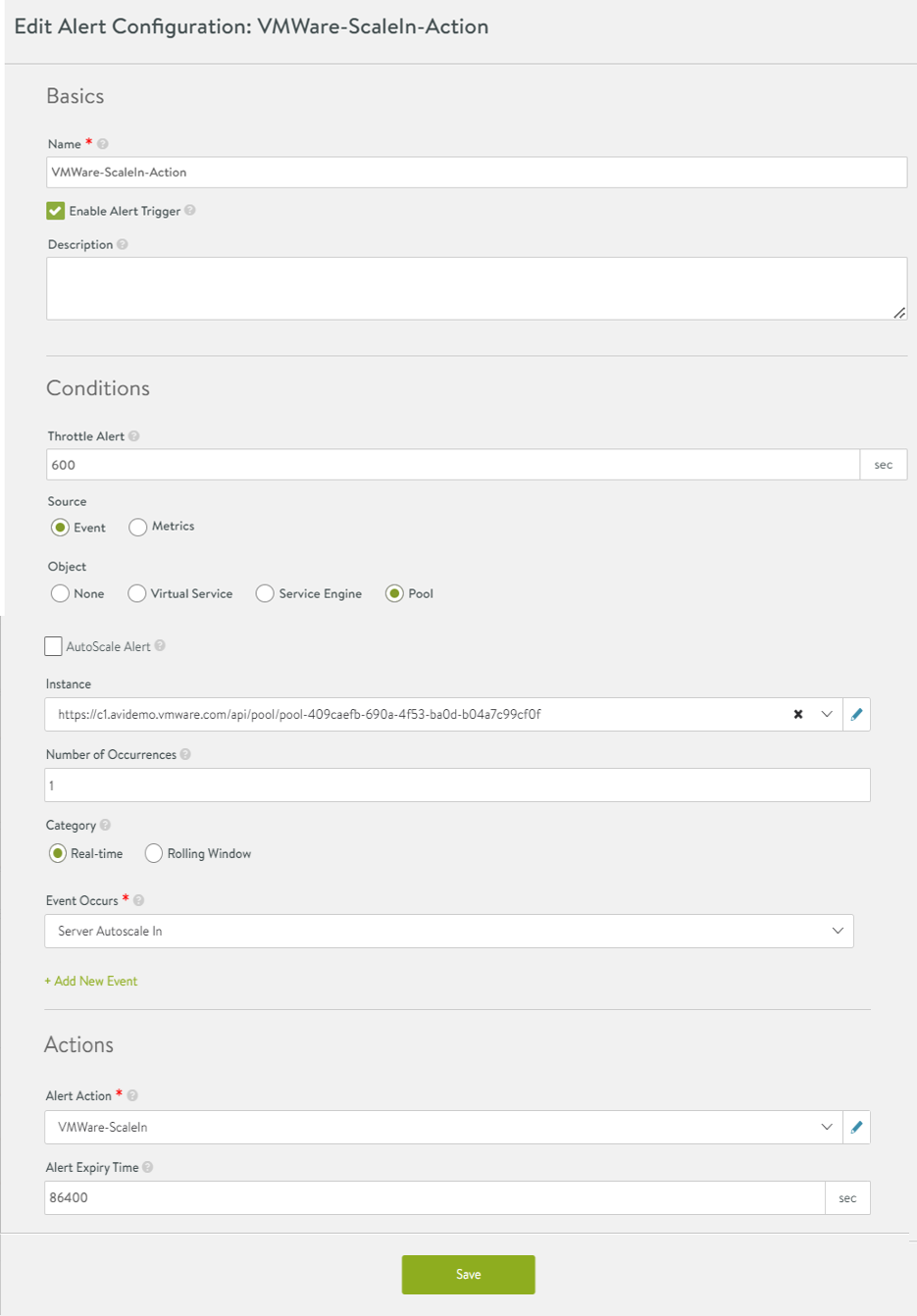

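Step 4: Create Event-Triggered Scale-Out and Scale-In Alerts to Run the ControlScripts

Python Utility Scripts

The following Python utility scripts must be copied to the Controller so that the ControlScripts can use them. Create a directory named vmware_scale under /opt/avi/python/lib and create the following two files in it (sudo is required):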

vmutils.py

from pyVmomi import vim
from pyVim.connect import SmartConnectNoSSL, Disconnect
import time


def _get_obj(content, vimtype, name, folder=None):
    """
    Get the vsphere object associated with a given text name
    """
    obj = None
    container = content.viewManager.CreateContainerView(
        folder or content.rootFolder, vimtype, True)
    for c in container.view:
        if c.name == name:
            obj = c
            break
    return obj

def _get_child_folder(parent_folder, folder_name):
    obj = None
    for folder in parent_folder.childEntity:
        if folder.name == folder_name:
            if isinstance(folder, vim.Datacenter):
                obj = folder.vmFolder
            elif isinstance(folder, vim.Folder):
                obj = folder
            else:
                obj = None
            break
    return obj


def get_folder(si, name):
    subfolders = name.split('/')
    parent_folder = si.RetrieveContent().rootFolder
    for subfolder in subfolders:
        parent_folder = _get_child_folder(parent_folder, subfolder)
        if not parent_folder:
            break
    return parent_folder

def get_vm_by_name(si, name, folder=None):
    """
    Find a virtual machine by its name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.VirtualMachine], name,
                    folder)


def get_resource_pool(si, name, folder=None):
    """
    Find a resource pool by its name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.ResourcePool], name,
                    folder)


def get_cluster(si, name, folder=None):
    """
    Find a cluster by its name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.ComputeResource], name,
                    folder)

def wait_for_task(task, timeout=300):
    """
    Wait for a task to complete

    Returns (True, result) on success, (False, None) on error and
    (None, None) if the task did not finish within the timeout.
    """
    timeout_time = time.time() + timeout
    while time.time() < timeout_time:
        if task.info.state == 'success':
            return (True, task.info.result)
        if task.info.state == 'error':
            return (False, None)
        time.sleep(1)
    return (None, None)


def wait_for_vm_status(vm, condition, timeout=300):
    # Poll until condition(vm) is true; returns False if the timeout expires.
    timeout_time = time.time() + timeout
    timedout = True
    while timedout and time.time() < timeout_time:
        if (condition(vm)):
            timedout = False
        else:
            time.sleep(3)
    return not timedout

def net_info_available(vm):
    return (vm.runtime.powerState == vim.VirtualMachinePowerState.poweredOn
            and
            vm.guest.toolsStatus == vim.vm.GuestInfo.ToolsStatus.toolsOk
            and
            vm.guest.net)


class vcenter_session:
    def __enter__(self):
        return self.session

    def __init__(self, host, user, pwd):
        session = SmartConnectNoSSL(host=host, user=user, pwd=pwd)
        self.session = session

    def __exit__(self, type, value, traceback):
        if self.session:
            Disconnect(self.session)
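The three-valued result of wait_for_task (True for success, False for error, None for timeout) is what lets the callers tell a failed clone from one that is merely slow. The sketch below reproduces that polling pattern against a stand-in object so it can be run without vCenter. `FakeTask`, `FakeInfo`, and `poll_task` are hypothetical names, and the short intervals are chosen only to keep the demonstration fast.

```python
import time


class FakeInfo(object):
    """Minimal stand-in for the info object of a vSphere task."""
    def __init__(self):
        self.state = 'running'
        self.result = None


class FakeTask(object):
    """Stand-in task that reports success after a given number of polls."""
    def __init__(self, polls_until_done):
        self._remaining = polls_until_done
        self._info = FakeInfo()

    @property
    def info(self):
        if self._remaining > 0:
            self._remaining -= 1
        else:
            self._info.state = 'success'
            self._info.result = 'vm-123'
        return self._info


def poll_task(task, timeout=5, interval=0.01):
    """Poll a task; return (True, result), (False, None) or (None, None)."""
    deadline = time.time() + timeout
    while time.time() < deadline:
        info = task.info  # read the task state once per iteration
        if info.state == 'success':
            return (True, info.result)
        if info.state == 'error':
            return (False, None)
        time.sleep(interval)
    return (None, None)  # timed out


print(poll_task(FakeTask(3)))
print(poll_task(FakeTask(10 ** 6), timeout=0.05))
```

The first call finishes once the stand-in task reports success; the second runs out of time and returns the timeout result.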

vmware_scale.py

from __future__ import print_function
from pyVmomi import vim
import vmutils
from avi.sdk.samples.autoscale.samplescaleout import scaleout_params
import uuid
from avi.sdk.avi_api import ApiSession
import json
import os


def getAviApiSession(tenant='admin', api_version=None):
    """
    Create local session to avi controller
    """
    token = os.environ.get('API_TOKEN')
    user = os.environ.get('USER')
    tenant = os.environ.get('TENANT')
    api = ApiSession.get_session("localhost", user, token=token,
                                 tenant=tenant, api_version=api_version)
    return api, tenant

def do_scale_in(vmware_settings, instance_names):
    """
    Perform scale in of pool.

    vmware_settings:
        vcenter: IP address or hostname of vCenter
        user: Username for vCenter access
        password: Password for vCenter access
        vm_folder_name: Folder containing VMs (or None for same as
            template)
    instance_names: Names of VMs to destroy
    """
    with vmutils.vcenter_session(host=vmware_settings['vcenter'],
                                 user=vmware_settings['user'],
                                 pwd=vmware_settings['password']) as session:
        vm_folder_name = vmware_settings.get('vm_folder_name', None)
        if vm_folder_name:
            vm_folder = vmutils.get_folder(session, vm_folder_name)
        else:
            vm_folder = None

        for instance_name in instance_names:
            vm = vmutils.get_vm_by_name(session, instance_name, vm_folder)
            if vm:
                print('Powering off VM %s...' % instance_name)
                power_off_task = vm.PowerOffVM_Task()
                (power_off_task_status,
                 power_off_task_result) = vmutils.wait_for_task(
                    power_off_task)
                if power_off_task_status:
                    print('Deleting VM %s...' % instance_name)
                    destroy_task = vm.Destroy_Task()
                    (destroy_task_status,
                     destroy_task_result) = vmutils.wait_for_task(
                        destroy_task)
                    if destroy_task_status:
                        print('VM %s deleted!' % instance_name)
                    else:
                        print('VM %s deletion failed!' % instance_name)
                else:
                    print('Unable to power off VM %s!' % instance_name)
            else:
                print('Unable to find VM %s!' % instance_name)

def do_scale_out(vmware_settings, pool_name, num_scaleout):
    """
    Perform scale out of pool.

    vmware_settings:
        vcenter: IP address or hostname of vCenter
        user: Username for vCenter access
        password: Password for vCenter access
        cluster_name: vCenter cluster name
        template_folder_name: Folder containing template VM, e.g.
            'Datacenter1/Folder1/Subfolder1' or None to search all
        template_name: Name of template VM
        vm_folder_name: Folder to place new VM (or None for same as
            template)
        customization_spec_name: Name of a customization spec to use
        resource_pool_name: Name of VMWare Resource Pool or None for default
        port_group: Name of port group containing pool member IP
    pool_name: Name of the pool
    num_scaleout: Number of new instances
    """
    new_instances = []

    with vmutils.vcenter_session(host=vmware_settings['vcenter'],
                                 user=vmware_settings['user'],
                                 pwd=vmware_settings['password']) as session:
        template_folder_name = vmware_settings.get('template_folder_name',
                                                   None)
        template_name = vmware_settings['template_name']
        if template_folder_name:
            template_folder = vmutils.get_folder(session,
                                                 template_folder_name)
            template_vm = vmutils.get_vm_by_name(
                session, template_name,
                template_folder)
        else:
            template_vm = vmutils.get_vm_by_name(
                session, template_name)

        vm_folder_name = vmware_settings.get('vm_folder_name', None)
        if vm_folder_name:
            vm_folder = vmutils.get_folder(session, vm_folder_name)
        else:
            vm_folder = template_vm.parent

        csm = session.RetrieveContent().customizationSpecManager
        customization_spec = csm.GetCustomizationSpec(
            name=vmware_settings['customization_spec_name'])
        cluster = vmutils.get_cluster(session,
                                      vmware_settings['cluster_name'])
        resource_pool_name = vmware_settings.get('resource_pool_name', None)
        if resource_pool_name:
            resource_pool = vmutils.get_resource_pool(session,
                                                      resource_pool_name)
        else:
            resource_pool = cluster.resourcePool

        relocate_spec = vim.vm.RelocateSpec(pool=resource_pool)
        clone_spec = vim.vm.CloneSpec(powerOn=True, template=False,
                                      location=relocate_spec,
                                      customization=customization_spec.spec)
        port_group = vmware_settings.get('port_group', None)

        clone_tasks = []
        for instance in range(num_scaleout):
            new_vm_name = '%s-%s' % (pool_name, str(uuid.uuid4()))
            print('Initiating clone of %s to %s' % (template_name,
                                                    new_vm_name))
            clone_task = template_vm.Clone(name=new_vm_name,
                                           folder=vm_folder,
                                           spec=clone_spec)
            print('Task %s created.' % clone_task.info.key)
            clone_tasks.append(clone_task)

        for clone_task in clone_tasks:
            print('Waiting for %s...' % clone_task.info.key)
            clone_task_status, clone_vm = vmutils.wait_for_task(clone_task,
                                                                timeout=600)
            ip_address = None
            if clone_vm:
                print('Waiting for VM %s to be ready...' % clone_vm.name)
                if vmutils.wait_for_vm_status(
                        clone_vm,
                        condition=vmutils.net_info_available,
                        timeout=600):
                    print('Getting IP address from VM %s' % clone_vm.name)
                    for nic in clone_vm.guest.net:
                        if port_group is None or nic.network == port_group:
                            for ip in nic.ipAddress:
                                if '.' in ip:
                                    ip_address = ip
                                    break
                        if ip_address:
                            break
                else:
                    print('Timed out waiting for VM %s!' % clone_vm.name)

                if not ip_address:
                    print('Could not get IP for VM %s!' % clone_vm.name)
                    power_off_task = clone_vm.PowerOffVM_Task()
                    (power_off_task_status,
                     power_off_task_result) = vmutils.wait_for_task(
                        power_off_task)
                    if power_off_task_status:
                        destroy_task = clone_vm.Destroy_Task()
                else:
                    print('New VM %s with IP %s' % (clone_vm.name,
                                                    ip_address))
                    new_instances.append((clone_vm.name, ip_address))
            elif clone_task_status is None:
                print('Clone task %s timed out!' % clone_task.info.key)

    return new_instances

def scale_out(vmware_settings, *args):
    alert_info = json.loads(args[1])
    api, tenant = getAviApiSession()
    (pool_name, pool_uuid,
     pool_obj, num_scaleout) = scaleout_params('scaleout',
                                               alert_info,
                                               api=api,
                                               tenant=tenant)
    print('Scaling out pool %s by %d...' % (pool_name, num_scaleout))

    new_instances = do_scale_out(vmware_settings,
                                 pool_name, num_scaleout)

    # Get pool object again in case it has been modified
    pool_obj = api.get('pool/%s' % pool_uuid, tenant=tenant).json()
    new_servers = pool_obj.get('servers', [])

    for new_instance in new_instances:
        new_server = {
            'ip': {'addr': new_instance[1], 'type': 'V4'},
            'hostname': new_instance[0]}
        new_servers.append(new_server)
    pool_obj['servers'] = new_servers
    # Print the full list; new_server is undefined if no VM was created.
    print('Updating pool with %s' % new_servers)
    resp = api.put('pool/%s' % pool_uuid, data=json.dumps(pool_obj))
    print('API status: %d' % resp.status_code)

def scale_in(vmware_settings, *args):
    alert_info = json.loads(args[1])
    api, tenant = getAviApiSession()
    (pool_name, pool_uuid,
     pool_obj, num_scaleout) = scaleout_params('scalein',
                                               alert_info,
                                               api=api,
                                               tenant=tenant)

    remove_instances = [instance['hostname'] for instance in
                        pool_obj['servers'][-num_scaleout:]]
    pool_obj['servers'] = pool_obj['servers'][:-num_scaleout]
    print('Scaling in pool %s by %d...' % (pool_name, num_scaleout))
    resp = api.put('pool/%s' % pool_uuid, data=json.dumps(pool_obj))
    print('API status: %d' % resp.status_code)

    do_scale_in(vmware_settings, remove_instances)
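The pool updates performed by scale_out and scale_in come down to list operations on the `servers` field of the pool object. The sketch below isolates them on a plain dictionary so they can be tried without a Controller. `add_servers` and `remove_servers` are hypothetical names, and the pool content is example data.

```python
def add_servers(pool_obj, new_instances):
    """Append (vm_name, ip) pairs to the pool's server list."""
    servers = pool_obj.get('servers', [])
    for name, ip in new_instances:
        servers.append({'ip': {'addr': ip, 'type': 'V4'}, 'hostname': name})
    pool_obj['servers'] = servers
    return pool_obj


def remove_servers(pool_obj, count):
    """Drop the last `count` servers and return their host names."""
    servers = pool_obj.get('servers', [])
    if count <= 0:
        # Guard: servers[-0:] is the whole list and servers[:-0] is empty,
        # so a count of zero must not reach the slices below.
        return []
    removed = [server['hostname'] for server in servers[-count:]]
    pool_obj['servers'] = servers[:-count]
    return removed


pool = {'name': 'web-pool', 'servers': []}
add_servers(pool, [('web-pool-a', '10.0.0.11'), ('web-pool-b', '10.0.0.12')])
removed = remove_servers(pool, 1)
print(removed)
print([server['hostname'] for server in pool['servers']])
```

Because the most recently added servers sit at the end of the list, removing from the tail retires the newest VMs first. The guard for a zero count is an addition in this sketch; the listing above slices without it.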