With variables and expressions, you can use input parameters and output parameters with your pipeline tasks. The parameters you enter bind your pipeline task to one or more variables, expressions, or conditions, and determine the pipeline behavior when it runs.

Pipelines can run simple or complex software delivery solutions

When you bind pipeline tasks together, you can include default and complex expressions. As a result, your pipeline can run simple or complex software delivery solutions.

To create the parameters in your pipeline, click the Input or Output tab, and add a variable by entering the dollar sign $ and an expression. For example, this parameter is used as a task input that calls a URL: ${Stage0.Task3.input.URL}.

The format for variable bindings uses two syntax components: scopes and keys. The SCOPE defines the context, such as input or output, and the KEY defines the details. In the parameter example ${Stage0.Task3.input.URL}, input is the SCOPE and URL is the KEY.

Output properties of any task can resolve to any number of nested levels of variable binding.

To learn more about using variable bindings in pipelines, see How do I use variable bindings in Automation Pipelines pipelines.

Using dollar expressions with scopes and keys to bind pipeline tasks

You can bind pipeline tasks together by using expressions in dollar sign variables. You enter expressions as ${SCOPE.KEY.<PATH>}.

In each expression, SCOPE is the context that Automation Pipelines uses to determine the behavior of a pipeline task. Within that scope, Automation Pipelines looks for the KEY, which defines the detail for the action that the task takes. When the value of KEY is a nested object, you can provide an optional PATH to reach a value inside it.
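To illustrate how an optional PATH walks into a nested KEY value, here is a minimal Python sketch of a resolver. It is a hypothetical helper, not the Automation Pipelines implementation. It assumes that the execution context is a nested dictionary laid out as stage, task, then input or output, and that list elements are addressed with `[n]`, as in `${MY_STAGE.MY_TASK.input.process[0].path}`. The context values are invented for the example.

```python
import re
from typing import Any

# One path segment: a key name, optionally followed by list indexes such as [0].
_SEGMENT = re.compile(r"^(?P<name>[A-Za-z_]\w*)(?P<indexes>(?:\[\d+\])*)$")
_INDEX = re.compile(r"\[(\d+)\]")


def resolve(expression: str, context: dict[str, Any]) -> Any:
    """Resolve ${SCOPE.KEY.PATH} by walking a nested dictionary (illustrative only)."""
    if not (expression.startswith("${") and expression.endswith("}")):
        raise ValueError(f"not a dollar expression: {expression!r}")
    value: Any = context
    for segment in expression[2:-1].split("."):
        match = _SEGMENT.match(segment)
        if match is None:
            raise ValueError(f"unsupported path segment: {segment!r}")
        value = value[match.group("name")]  # step into a key
        for index in _INDEX.findall(match.group("indexes")):
            value = value[int(index)]  # step into a list element
    return value


# Hypothetical execution context for a CI task named MY_TASK in MY_STAGE.
context = {
    "MY_STAGE": {
        "MY_TASK": {
            "input": {"process": [{"path": "reports/junit"}]},
            "output": {"exports": {"myvar": "42"}},
        }
    }
}

print(resolve("${MY_STAGE.MY_TASK.input.process[0].path}", context))  # reports/junit
print(resolve("${MY_STAGE.MY_TASK.output.exports.myvar}", context))  # 42
```

Stopping at a shorter path, such as `${MY_STAGE.MY_TASK.output.exports}`, returns the whole nested object. This matches the "Refer to all exports" pattern in the task tables.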

These examples describe SCOPE and KEY, and show you how you can use them in your pipeline.

Table 1.

Using SCOPE and KEY

| SCOPE |

Purpose of expression and example |

KEY |

How to use SCOPE and KEY in your pipeline |

| input |

Input properties of a pipeline: ${input.input1}

|

Name of the input property |

To refer to the input property of a pipeline in a task, use this format:

tasks:
  mytask:
    type: REST
    input:
      url: ${input.url}
      action: get
input:
  url: https://www.vmware.com

|

| output |

Output properties of a pipeline: ${output.output1}

|

Name of the output property |

To refer to an output property for sending a notification, use this format:

notifications:
  email:
  - endpoint: MyEmailEndpoint
    subject: "Deployment Successful"
    event: COMPLETED
    to:
    - [email protected]
    body: |
      Pipeline deployed the service successfully. Refer to ${output.serviceURL}

|

| task input |

Input to a task: ${MY_STAGE.MY_TASK.input.SOMETHING}

|

Indicates the input of a task in a notification |

When a Jenkins job starts, a notification can refer to the name of the job that the task input triggered. To send that notification, use this format:

notifications:
  email:
  - endpoint: MyEmailEndpoint
    stage: MY_STAGE
    task: MY_TASK
    subject: "Build Started"
    event: STARTED
    to:
    - [email protected]
    body: |
      Jenkins job ${MY_STAGE.MY_TASK.input.job} started for commit id ${input.COMMITID}.

|

| task output |

Output of a task: ${MY_STAGE.MY_TASK.output.SOMETHING}

|

Indicates the output of a task in a subsequent task |

To refer to the output of pipeline task 1 in task 2, use this format:

taskOrder:
- task1
- task2
tasks:
  task1:
    type: REST
    input:
      action: get
      url: https://www.example.org/api/status
  task2:
    type: REST
    input:
      action: post
      url: https://status.internal.example.org/api/activity
      payload: ${MY_STAGE.task1.output.responseBody}

|

| var |

Variable: ${var.myVariable}

|

Refer to a variable in an endpoint |

To refer to a secret variable in an endpoint for a password, use this format:

---
project: MyProject
kind: ENDPOINT
name: MyJenkinsServer
type: jenkins
properties:
  url: https://jenkins.example.com
  username: jenkinsUser
  password: ${var.jenkinsPassword}

|

| var |

Variable: ${var.myVariable}

|

Refer to a variable in a pipeline |

To refer to a variable in a pipeline URL, use this format:

tasks:
  task1:
    type: REST
    input:
      action: get
      url: ${var.MY_SERVER_URL}

|

| task status |

Status of a task: ${MY_STAGE.MY_TASK.status}

Status message of a task: ${MY_STAGE.MY_TASK.statusMessage}

|

|

|

| stage status |

Status of a stage: ${MY_STAGE.status}

Status message of a stage: ${MY_STAGE.statusMessage}

|

|

|
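The task output row above chains two REST tasks: task2 posts the responseBody of task1. The following Python sketch mimics that flow to show when the binding gets its value. The task runner and the response text are hypothetical stand-ins, and no HTTP calls are made. The evaluation model is also an assumption for illustration: a task's inputs are resolved just before it runs, using outputs of tasks that already finished.

```python
import re
from typing import Any, Callable

# Matches ${STAGE.TASK.output.KEY} bindings (a single KEY level, for brevity).
_BINDING = re.compile(r"\$\{(\w+)\.(\w+)\.output\.(\w+)\}")

Outputs = dict[tuple[str, str], dict[str, Any]]


def resolve_inputs(inputs: dict[str, str], outputs: Outputs) -> dict[str, str]:
    """Substitute output bindings that refer to tasks that already ran."""
    def lookup(match: re.Match) -> str:
        stage, task, key = match.groups()
        return str(outputs[(stage, task)][key])  # KeyError if the task has not run

    return {name: _BINDING.sub(lookup, value) for name, value in inputs.items()}


def run_stage(stage: str, task_order: list[str], tasks: dict[str, dict[str, Any]],
              runner: Callable[[dict[str, str]], dict[str, Any]]) -> Outputs:
    outputs: Outputs = {}
    for task in task_order:  # follows taskOrder: task1, then task2
        inputs = resolve_inputs(tasks[task]["input"], outputs)
        outputs[(stage, task)] = runner(inputs)
    return outputs


def fake_rest(inputs: dict[str, str]) -> dict[str, Any]:
    # Hypothetical stand-in for a REST task: GET returns a fixed body, POST echoes the payload.
    if inputs["action"] == "get":
        return {"responseCode": 200, "responseBody": '{"state": "green"}'}
    return {"responseCode": 201, "responseBody": inputs["payload"]}


tasks = {
    "task1": {"input": {"action": "get", "url": "https://www.example.org/api/status"}},
    "task2": {"input": {"action": "post",
                        "url": "https://status.internal.example.org/api/activity",
                        "payload": "${MY_STAGE.task1.output.responseBody}"}},
}
outputs = run_stage("MY_STAGE", ["task1", "task2"], tasks, fake_rest)
print(outputs[("MY_STAGE", "task2")]["responseBody"])  # {"state": "green"}
```

Reversing taskOrder in this sketch makes the lookup fail, because task2 would refer to an output that does not exist yet.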

Default Expressions

You can use variables with expressions in your pipeline. This summary lists the default expressions that you can use.

| Expression | Description |
|---|---|
| ${comments} | Comments provided at the pipeline execution request. |
| ${duration} | Duration of the pipeline execution. |
| ${endTime} | End time of the pipeline execution in UTC, if the execution has concluded. |
| ${executedOn} | Starting time of the pipeline execution in UTC. Same as ${startTime}. |
| ${executionId} | ID of the pipeline execution. |
| ${executionUrl} | URL that navigates to the pipeline execution in the user interface. |
| ${name} | Name of the pipeline. |
| ${requestBy} | Name of the user who requested the execution. |
| ${stageName} | Name of the current stage, when used in the scope of a stage. |
| ${startTime} | Starting time of the pipeline execution in UTC. |
| ${status} | Status of the execution. |
| ${statusMessage} | Status message of the pipeline execution. |
| ${taskName} | Name of the current task, when used at a task input or notification. |
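A notification body can mix default expressions with plain text. The following Python sketch shows the substitution idea using hypothetical values. It handles only the single-word default expressions from the table above and leaves any other expression untouched. That behavior is an assumption for illustration, not documented product behavior.

```python
import re

# Hypothetical values for a few default expressions. In a real execution,
# Automation Pipelines supplies these.
defaults = {
    "name": "MyPipeline",
    "executionId": "exec-0001",
    "status": "COMPLETED",
}

# A default expression is a single word inside ${...}, such as ${name}.
_DEFAULT_EXPR = re.compile(r"\$\{(\w+)\}")


def render(template: str, values: dict[str, str]) -> str:
    """Replace ${word} with values[word]; leave unknown or dotted expressions as-is."""
    return _DEFAULT_EXPR.sub(lambda m: values.get(m.group(1), m.group(0)), template)


body = "Pipeline ${name} finished with status ${status} (${executionId}). See ${output.serviceURL}"
print(render(body, defaults))
```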

Using SCOPE and KEY in pipeline tasks

You can use expressions with any of the supported pipeline tasks. These examples show you how to define the SCOPE and KEY, and confirm the syntax. The code examples use MY_STAGE and MY_TASK as the pipeline stage and task names.

To find out more about available tasks, see What types of tasks are available in Automation Pipelines.

Table 2.

Gating tasks

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| User Operation |

|

Input |

summary: Summary of the request for the User Operation

description: Description of the request for the User Operation

approvers: List of approver email addresses. Each entry can be a variable. Separate multiple email addresses with a comma or a semicolon.

approverGroups: List of approver group addresses for the platform and identity

sendemail: When set to true, sends an email notification on request or response

expirationInDays: Number of days before the request expires

|

${MY_STAGE.MY_TASK.input.summary}

${MY_STAGE.MY_TASK.input.description}

${MY_STAGE.MY_TASK.input.approvers}

${MY_STAGE.MY_TASK.input.approverGroups}

${MY_STAGE.MY_TASK.input.sendemail}

${MY_STAGE.MY_TASK.input.expirationInDays}

|

|

Output |

index: Six-digit hexadecimal string that represents the request

respondedBy: Account name of the person who approved or rejected the User Operation

respondedByEmail: Email address of the person who responded

comments: Comments provided with the response

|

${MY_STAGE.MY_TASK.output.index}

${MY_STAGE.MY_TASK.output.respondedBy}

${MY_STAGE.MY_TASK.output.respondedByEmail}

${MY_STAGE.MY_TASK.output.comments}

|

| Condition |

|

|

Input |

condition: Condition to evaluate. When the condition evaluates to true, the task completes. Any other result fails the task.

|

${MY_STAGE.MY_TASK.input.condition}

|

|

Output |

result: Result upon evaluation

|

${MY_STAGE.MY_TASK.output.response}

|

Table 3.

Pipeline tasks

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| Pipeline |

|

Input |

name: Name of the pipeline to run

inputProperties: Input properties to pass to the nested pipeline execution

|

${MY_STAGE.MY_TASK.input.name}

${MY_STAGE.MY_TASK.input.inputProperties} # Refer to all properties

${MY_STAGE.MY_TASK.input.inputProperties.input1} # Refer to value of input1

|

|

Output |

executionStatus: Status of the pipeline execution

executionIndex: Index of the pipeline execution

outputProperties: Output properties of a pipeline execution

|

${MY_STAGE.MY_TASK.output.executionStatus}

${MY_STAGE.MY_TASK.output.executionIndex}

${MY_STAGE.MY_TASK.output.outputProperties} # Refer to all properties

${MY_STAGE.MY_TASK.output.outputProperties.output1} # Refer to value of output1

|

Table 4.

Automate continuous integration tasks

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| CI |

|

Input |

steps: A set of strings, which represent commands to run

export: Environment variables to preserve after running the steps

artifacts: Paths of artifacts to preserve in the shared path

process: Set of configuration elements for JUnit, JaCoCo, Checkstyle, and FindBugs processing

|

${MY_STAGE.MY_TASK.input.steps}

${MY_STAGE.MY_TASK.input.export}

${MY_STAGE.MY_TASK.input.artifacts}

${MY_STAGE.MY_TASK.input.process}

${MY_STAGE.MY_TASK.input.process[0].path} # Refer to path of the first configuration

|

|

Output |

exports: Key-value pairs that represent the environment variables exported through the input export

artifacts: Paths of the successfully preserved artifacts

processResponse: Set of processed results for the input process

|

${MY_STAGE.MY_TASK.output.exports} # Refer to all exports

${MY_STAGE.MY_TASK.output.exports.myvar} # Refer to value of myvar

${MY_STAGE.MY_TASK.output.artifacts}

${MY_STAGE.MY_TASK.output.processResponse}

${MY_STAGE.MY_TASK.output.processResponse[0].result} # Result of the first process configuration

|

| Custom |

|

Input |

name: Name of the custom integration

version: A version of the custom integration, released or deprecated

properties: Properties to send to the custom integration

|

${MY_STAGE.MY_TASK.input.name}

${MY_STAGE.MY_TASK.input.version}

${MY_STAGE.MY_TASK.input.properties} # Refer to all properties

${MY_STAGE.MY_TASK.input.properties.property1} # Refer to value of property1

|

|

Output |

properties: Output properties from the custom integration response

|

${MY_STAGE.MY_TASK.output.properties} # Refer to all properties

${MY_STAGE.MY_TASK.output.properties.property1} # Refer to value of property1

|

Table 5.

Automate continuous deployment tasks: Cloud template

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| Cloud template |

|

|

Input |

action: One of createDeployment, updateDeployment, deleteDeployment, rollbackDeployment

blueprintInputParams: Used for the create deployment and update deployment actions

allowDestroy: Machines can be destroyed in the update deployment process.

CREATE_DEPLOYMENT

blueprintName: Name of the cloud template

blueprintVersion: Version of the cloud template

OR

fileUrl: URL of the remote cloud template YAML, after selecting a GIT server.

UPDATE_DEPLOYMENT

Any of these combinations:

blueprintName: Name of the cloud template

blueprintVersion: Version of the cloud template

OR

fileUrl: URL of the remote cloud template YAML, after selecting a GIT server.

------

deploymentId: ID of the deployment

OR

deploymentName: Name of the deployment

------

DELETE_DEPLOYMENT

deploymentId: ID of the deployment

OR

deploymentName: Name of the deployment

ROLLBACK_DEPLOYMENT

Any of these combinations:

deploymentId: ID of the deployment

OR

deploymentName: Name of the deployment

------

blueprintName: Name of the cloud template

rollbackVersion: Version to roll back to |

|

|

Output |

|

Parameters that can bind to other tasks or to the output of a pipeline:

- Deployment Name can be accessed as ${Stage0.Task0.output.deploymentName}

- Deployment Id can be accessed as ${Stage0.Task0.output.deploymentId}

- Deployment Details is a complex object, and internal details can be accessed by using the JSON results.

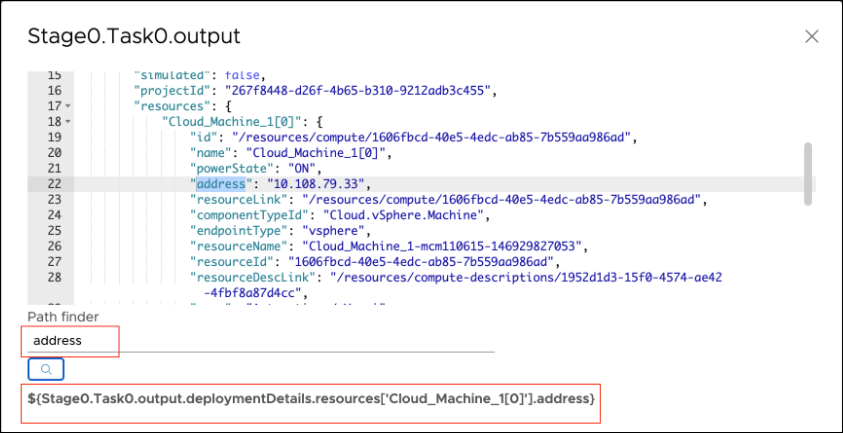

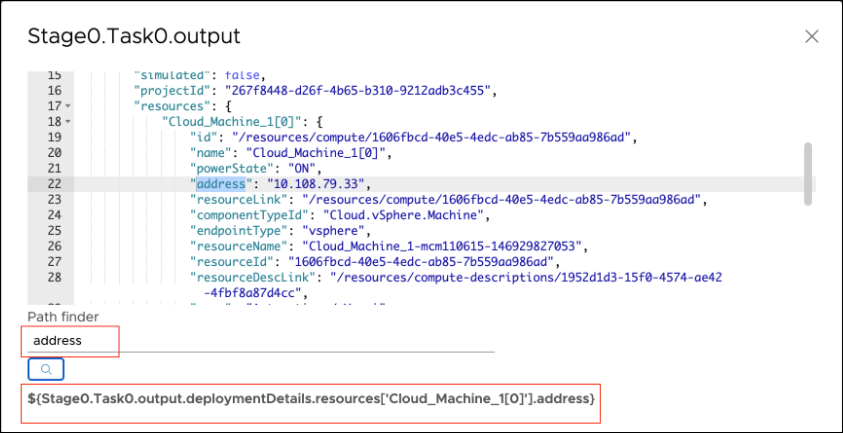

To access any property, use the dot operator to follow the JSON hierarchy. For example, to access the address of resource Cloud_Machine_1[0], the $ binding is: ${Stage0.Task0.output.deploymentDetails.resources['Cloud_Machine_1[0]'].address} Similarly, for the flavor, the $ binding is: ${Stage0.Task0.output.deploymentDetails.resources['Cloud_Machine_1[0]'].flavor} In the Automation Pipelines user interface, you can obtain the $ bindings for any property.

- In the task output property area, click VIEW OUTPUT JSON.

- To find the $ binding, enter any property.

- Click the search icon, which displays the corresponding $ binding.

|

Example JSON output, showing a sample deployment details object:

{
  "id": "6a031f92-d0fa-42c8-bc9e-3b260ee2f65b",
  "name": "deployment_6a031f92-d0fa-42c8-bc9e-3b260ee2f65b",
  "description": "Pipeline Service triggered operation",
  "orgId": "434f6917-4e34-4537-b6c0-3bf3638a71bc",
  "blueprintId": "8d1dd801-3a32-4f3b-adde-27f8163dfe6f",
  "blueprintVersion": "1",
  "createdAt": "2020-08-27T13:50:24.546215Z",
  "createdBy": "[email protected]",
  "lastUpdatedAt": "2020-08-27T13:52:50.674957Z",
  "lastUpdatedBy": "[email protected]",
  "inputs": {},
  "simulated": false,
  "projectId": "267f8448-d26f-4b65-b310-9212adb3c455",
  "resources": {
    "Cloud_Machine_1[0]": {
      "id": "/resources/compute/1606fbcd-40e5-4edc-ab85-7b559aa986ad",
      "name": "Cloud_Machine_1[0]",
      "powerState": "ON",
      "address": "10.108.79.33",
      "resourceLink": "/resources/compute/1606fbcd-40e5-4edc-ab85-7b559aa986ad",
      "componentTypeId": "Cloud.vSphere.Machine",
      "endpointType": "vsphere",
      "resourceName": "Cloud_Machine_1-mcm110615-146929827053",
      "resourceId": "1606fbcd-40e5-4edc-ab85-7b559aa986ad",
      "resourceDescLink": "/resources/compute-descriptions/1952d1d3-15f0-4574-ae42-4fbf8a87d4cc",
      "zone": "Automation / Vms",
      "countIndex": "0",
      "image": "ubuntu",
      "count": "1",
      "flavor": "small",
      "region": "MYBU",
      "_clusterAllocationSize": "1",
      "osType": "LINUX",
      "componentType": "Cloud.vSphere.Machine",
      "account": "bha"
    }
  },
  "status": "CREATE_SUCCESSFUL",
  "deploymentURI": "https://api.yourenv.com/automation-ui/#/deployment-ui;ash=/deployment/6a031f92-d0fa-42c8-bc9e-3b260ee2f65b"
}
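The resource name Cloud_Machine_1[0] itself contains square brackets, so the deploymentDetails bindings quote it inside ['...'] instead of using the dot operator. The following Python sketch shows one way to split the part of such a binding after deploymentDetails into keys and walk it through the sample JSON. The tokenizer is a hypothetical illustration, not the parser that Automation Pipelines uses. The JSON is trimmed to the fields that the example needs.

```python
import json
import re
from typing import Any

# A path token is either a quoted key in ['...'] or a (possibly dot-prefixed) name.
_TOKEN = re.compile(r"\['([^']*)'\]|\.?([A-Za-z_]\w*)")


def split_path(path: str) -> list[str]:
    """Split a.b['c[0]'].d into ['a', 'b', 'c[0]', 'd']."""
    keys: list[str] = []
    pos = 0
    while pos < len(path):
        match = _TOKEN.match(path, pos)
        if match is None:
            raise ValueError(f"cannot parse {path!r} at position {pos}")
        keys.append(match.group(1) if match.group(1) is not None else match.group(2))
        pos = match.end()
    return keys


def lookup(document: Any, path: str) -> Any:
    value = document
    for key in split_path(path):
        value = value[key]
    return value


# Trimmed copy of the sample deployment details object.
deployment_details = json.loads("""
{
  "resources": {
    "Cloud_Machine_1[0]": {"address": "10.108.79.33", "flavor": "small"}
  },
  "status": "CREATE_SUCCESSFUL"
}
""")

print(lookup(deployment_details, "resources['Cloud_Machine_1[0]'].address"))  # 10.108.79.33
print(lookup(deployment_details, "resources['Cloud_Machine_1[0]'].flavor"))  # small
```

A plain dotted path would split Cloud_Machine_1[0] into a name and a list index. The quoted form keeps the bracketed name together as one dictionary key.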

Table 6.

Automate continuous deployment tasks: Kubernetes

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| Kubernetes |

|

Input |

action: One of GET, CREATE, APPLY, DELETE, ROLLBACK

timeout: Overall timeout for any action

filterByLabel: Additional label to filter on for action GET, using the K8S labelSelector

GET, CREATE, DELETE, APPLY

yaml: Inline YAML to process and send to Kubernetes

parameters: KEY, VALUE pair - Replace $$KEY with VALUE in the in-line YAML input area

filePath: Relative path from the SCM Git endpoint, if provided, from which to fetch the YAML

scmConstants: KEY, VALUE pair - Replace $${KEY} with VALUE in the YAML fetched over SCM.

continueOnConflict: When set to true, if a resource is already present, the task continues.

ROLLBACK

resourceType: Resource type to roll back

resourceName: Resource name to roll back

namespace: Namespace where the rollback must be performed

revision: Revision to roll back to |

${MY_STAGE.MY_TASK.input.action} # Determines the action to perform.

${MY_STAGE.MY_TASK.input.timeout}

${MY_STAGE.MY_TASK.input.filterByLabel}

${MY_STAGE.MY_TASK.input.yaml}

${MY_STAGE.MY_TASK.input.parameters}

${MY_STAGE.MY_TASK.input.filePath}

${MY_STAGE.MY_TASK.input.scmConstants}

${MY_STAGE.MY_TASK.input.continueOnConflict}

${MY_STAGE.MY_TASK.input.resourceType}

${MY_STAGE.MY_TASK.input.resourceName}

${MY_STAGE.MY_TASK.input.namespace}

${MY_STAGE.MY_TASK.input.revision}

|

|

Output |

response: Captures the entire response

response.<RESOURCE>: RESOURCE corresponds to one of configMaps, deployments, endpoints, ingresses, jobs, namespaces, pods, replicaSets, replicationControllers, secrets, services, statefulSets, nodes, or loadBalancers.

response.<RESOURCE>.<KEY>: The key corresponds to one of apiVersion, kind, metadata, spec

|

${MY_STAGE.MY_TASK.output.response}

${MY_STAGE.MY_TASK.output.response.}

|

Table 7.

Integrate development, test, and deployment applications

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| Bamboo |

|

Input |

plan: Name of the plan

planKey: Plan key

variables: Variables to be passed to the plan

parameters: Parameters to be passed to the plan

|

${MY_STAGE.MY_TASK.input.plan}

${MY_STAGE.MY_TASK.input.planKey}

${MY_STAGE.MY_TASK.input.variables}

${MY_STAGE.MY_TASK.input.parameters} # Refer to all parameters

${MY_STAGE.MY_TASK.input.parameters.param1} # Refer to value of param1

|

|

Output |

resultUrl: URL of the resulting build

buildResultKey: Key of the resulting build

buildNumber: Build number

buildTestSummary: Summary of the tests that ran

successfulTestCount: Number of tests that passed

failedTestCount: Number of tests that failed

skippedTestCount: Number of tests that were skipped

artifacts: Artifacts from the build

|

${MY_STAGE.MY_TASK.output.resultUrl}

${MY_STAGE.MY_TASK.output.buildResultKey}

${MY_STAGE.MY_TASK.output.buildNumber}

${MY_STAGE.MY_TASK.output.buildTestSummary} # Refer to all results

${MY_STAGE.MY_TASK.output.successfulTestCount} # Refer to the specific test count

${MY_STAGE.MY_TASK.output.artifacts}

|

| Jenkins |

|

Input |

job: Name of the Jenkins job

parameters: Parameters to be passed to the job

|

${MY_STAGE.MY_TASK.input.job}

${MY_STAGE.MY_TASK.input.parameters} # Refer to all parameters

${MY_STAGE.MY_TASK.input.parameters.param1} # Refer to value of a parameter

|

|

Output |

job: Name of the Jenkins job

jobId: ID of the resulting job, such as 1234

jobStatus: Status in Jenkins

jobResults: Collection of test and code coverage results

jobUrl: URL of the resulting job run

|

${MY_STAGE.MY_TASK.output.job}

${MY_STAGE.MY_TASK.output.jobId}

${MY_STAGE.MY_TASK.output.jobStatus}

${MY_STAGE.MY_TASK.output.jobResults} # Refer to all results

${MY_STAGE.MY_TASK.output.jobResults.junitResponse} # Refer to JUnit results

${MY_STAGE.MY_TASK.output.jobResults.jacocoRespose} # Refer to JaCoCo results

${MY_STAGE.MY_TASK.output.jobUrl}

|

| TFS |

|

Input |

projectCollection: Project collection from TFS

teamProject: Selected project from the available collection

buildDefinitionId: ID of the build definition to run

|

${MY_STAGE.MY_TASK.input.projectCollection}

${MY_STAGE.MY_TASK.input.teamProject}

${MY_STAGE.MY_TASK.input.buildDefinitionId}

|

|

Output |

buildId: Resulting build ID

buildUrl: URL to visit the build summary

logUrl: URL to visit for logs

dropLocation: Drop location of artifacts, if any

|

${MY_STAGE.MY_TASK.output.buildId}

${MY_STAGE.MY_TASK.output.buildUrl}

${MY_STAGE.MY_TASK.output.logUrl}

${MY_STAGE.MY_TASK.output.dropLocation}

|

| vRO |

|

Input |

workflowId: ID of the workflow to be run

parameters: Parameters to be passed to the workflow

|

${MY_STAGE.MY_TASK.input.workflowId}

${MY_STAGE.MY_TASK.input.parameters}

|

|

Output |

workflowExecutionId: ID of the workflow execution

properties: Output properties from the workflow execution

|

${MY_STAGE.MY_TASK.output.workflowExecutionId}

${MY_STAGE.MY_TASK.output.properties}

|

Table 8.

Integrate other applications through an API

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| REST |

|

Input |

url: URL to call

action: HTTP method to use

headers: HTTP headers to pass

payload: Request payload

fingerprint: Certificate fingerprint to match when the URL uses https

allowAllCerts: When set to true, accepts any certificate when the URL uses https

|

${MY_STAGE.MY_TASK.input.url}

${MY_STAGE.MY_TASK.input.action}

${MY_STAGE.MY_TASK.input.headers}

${MY_STAGE.MY_TASK.input.payload}

${MY_STAGE.MY_TASK.input.fingerprint}

${MY_STAGE.MY_TASK.input.allowAllCerts}

|

|

Output |

responseCode: HTTP response code

responseHeaders: HTTP response headers

responseBody: Response received, in string format

responseJson: Traversable response if the content-type is application/json

|

${MY_STAGE.MY_TASK.output.responseCode}

${MY_STAGE.MY_TASK.output.responseHeaders}

${MY_STAGE.MY_TASK.output.responseHeaders.header1} # Refer to response header 'header1'

${MY_STAGE.MY_TASK.output.responseBody}

${MY_STAGE.MY_TASK.output.responseJson} # Refer to response as JSON

${MY_STAGE.MY_TASK.output.responseJson.a.b.c} # Refer to nested object following the a.b.c JSON path in response

|

| Poll |

|

Input |

url: URL to call

headers: HTTP headers to pass

exitCriteria: Criteria that determine whether the task succeeds or fails. A key-value pair of 'success' → Expression, 'failure' → Expression

pollCount: Number of iterations to perform. An Automation Pipelines administrator can set the poll count to a maximum of 10000.

pollIntervalSeconds: Number of seconds to wait between each iteration. The poll interval must be greater than or equal to 60 seconds.

ignoreFailure: When set to true, ignores intermediate response failures

fingerprint: Certificate fingerprint to match when the URL uses https

allowAllCerts: When set to true, accepts any certificate when the URL uses https

|

${MY_STAGE.MY_TASK.input.url}

${MY_STAGE.MY_TASK.input.headers}

${MY_STAGE.MY_TASK.input.exitCriteria}

${MY_STAGE.MY_TASK.input.pollCount}

${MY_STAGE.MY_TASK.input.pollIntervalSeconds}

${MY_STAGE.MY_TASK.input.ignoreFailure}

${MY_STAGE.MY_TASK.input.fingerprint}

${MY_STAGE.MY_TASK.input.allowAllCerts}

|

|

Output |

responseCode: HTTP response code

responseBody: Response received, in string format

responseJson: Traversable response if the content-type is application/json

|

${MY_STAGE.MY_TASK.output.responseCode}

${MY_STAGE.MY_TASK.output.responseBody}

${MY_STAGE.MY_TASK.output.responseJson} # Refer to response as JSON

|
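The Poll task inputs work together. The task calls url up to pollCount times and waits pollIntervalSeconds between calls. It stops when exitCriteria reports success or failure. The Python sketch below models that loop using the limits from the table, treating 10000 as the pollCount cap and 60 seconds as the minimum interval. It is a hypothetical model, not the product's implementation. The criteria are Python predicates instead of Automation Pipelines expressions, and the HTTP call and the sleep function are injected so that the example runs instantly. Failing the task when the polls run out without a match is also an assumption.

```python
from dataclasses import dataclass
from typing import Any, Callable

MAX_POLL_COUNT = 10000  # upper limit stated for pollCount
MIN_POLL_INTERVAL_SECONDS = 60  # lower limit stated for pollIntervalSeconds


@dataclass
class PollInput:
    poll_count: int
    poll_interval_seconds: int
    success: Callable[[dict[str, Any]], bool]  # stands in for exitCriteria 'success'
    failure: Callable[[dict[str, Any]], bool]  # stands in for exitCriteria 'failure'

    def validate(self) -> None:
        # Assumption: at least one call is required.
        if not 1 <= self.poll_count <= MAX_POLL_COUNT:
            raise ValueError(f"pollCount must be between 1 and {MAX_POLL_COUNT}")
        if self.poll_interval_seconds < MIN_POLL_INTERVAL_SECONDS:
            raise ValueError(f"pollIntervalSeconds must be at least {MIN_POLL_INTERVAL_SECONDS}")


def poll(cfg: PollInput, call: Callable[[], dict[str, Any]],
         sleep: Callable[[float], None]) -> tuple[str, int]:
    """Return ('SUCCESS' or 'FAILURE', number of calls made)."""
    cfg.validate()
    for attempt in range(1, cfg.poll_count + 1):
        response = call()
        if cfg.success(response):
            return "SUCCESS", attempt
        if cfg.failure(response):
            return "FAILURE", attempt
        if attempt < cfg.poll_count:
            sleep(cfg.poll_interval_seconds)  # no wait after the last call
    return "FAILURE", cfg.poll_count  # assumption: running out of polls fails the task


# Usage with a fake endpoint that reports "done" on the third call.
responses = iter([{"state": "running"}, {"state": "running"}, {"state": "done"}])
waits: list[float] = []
cfg = PollInput(
    poll_count=5,
    poll_interval_seconds=60,
    success=lambda r: r["state"] == "done",
    failure=lambda r: r["state"] == "error",
)
print(poll(cfg, call=lambda: next(responses), sleep=waits.append))  # ('SUCCESS', 3)
print(waits)  # [60, 60]
```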

Table 9.

Run remote and user-defined scripts

| Task |

Scope |

Key |

How to use SCOPE and KEY in the task |

| PowerShell To run a PowerShell task, you must:

|

|

Input |

host: IP address or hostname of the machine

username: User name to use to connect

password: Password to use to connect

useTLS: When set to true, attempts an https connection

trustCert: When set to true, trusts self-signed certificates

script: Script to run

workingDirectory: Directory path to switch to before running the script

environmentVariables: Key-value pairs of environment variables to set

arguments: Arguments to pass to the script

|

${MY_STAGE.MY_TASK.input.host}

${MY_STAGE.MY_TASK.input.username}

${MY_STAGE.MY_TASK.input.password}

${MY_STAGE.MY_TASK.input.useTLS}

${MY_STAGE.MY_TASK.input.trustCert}

${MY_STAGE.MY_TASK.input.script}

${MY_STAGE.MY_TASK.input.workingDirectory}

${MY_STAGE.MY_TASK.input.environmentVariables}

${MY_STAGE.MY_TASK.input.arguments}

|

|

Output |

response: Content of the file $SCRIPT_RESPONSE_FILE

responseFilePath: Value of $SCRIPT_RESPONSE_FILE

exitCode: Process exit code

logFilePath: Path to file containing stdout

errorFilePath: Path to file containing stderr

|

${MY_STAGE.MY_TASK.output.response}

${MY_STAGE.MY_TASK.output.responseFilePath}

${MY_STAGE.MY_TASK.output.exitCode}

${MY_STAGE.MY_TASK.output.logFilePath}

${MY_STAGE.MY_TASK.output.errorFilePath}

|

| SSH |

|

Input |

host: IP address or hostname of the machine

username: User name to use to connect

password: Password to use to connect (alternatively, use privateKey)

privateKey: Private key to use to connect

passphrase: Optional passphrase to unlock privateKey

script: Script to run

workingDirectory: Directory path to switch to before running the script

environmentVariables: Key-value pairs of environment variables to set

|

${MY_STAGE.MY_TASK.input.host}

${MY_STAGE.MY_TASK.input.username}

${MY_STAGE.MY_TASK.input.password}

${MY_STAGE.MY_TASK.input.privateKey}

${MY_STAGE.MY_TASK.input.passphrase}

${MY_STAGE.MY_TASK.input.script}

${MY_STAGE.MY_TASK.input.workingDirectory}

${MY_STAGE.MY_TASK.input.environmentVariables}

|

|

Output |

response: Content of the file $SCRIPT_RESPONSE_FILE

responseFilePath: Value of $SCRIPT_RESPONSE_FILE

exitCode: Process exit code

logFilePath: Path to file containing stdout

errorFilePath: Path to file containing stderr

|

${MY_STAGE.MY_TASK.output.response}

${MY_STAGE.MY_TASK.output.responseFilePath}

${MY_STAGE.MY_TASK.output.exitCode}

${MY_STAGE.MY_TASK.output.logFilePath}

${MY_STAGE.MY_TASK.output.errorFilePath}

|
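For the PowerShell and SSH tasks, output.response holds whatever the script writes to the file named by $SCRIPT_RESPONSE_FILE, and output.responseFilePath holds that path. The following sketch is a script body that writes a small JSON summary there so that later tasks can bind to ${MY_STAGE.MY_TASK.output.response}. It is written in Python to match the other examples in this section and assumes that a Python interpreter is available on the remote host. The same idea applies to a shell or PowerShell script. The summary keys and values are hypothetical.

```python
import json
import os


def write_response(summary: dict) -> str:
    """Write summary as JSON to $SCRIPT_RESPONSE_FILE and return the path used."""
    # Assumption: the task sets SCRIPT_RESPONSE_FILE before the script runs.
    path = os.environ["SCRIPT_RESPONSE_FILE"]
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(summary, handle)
    return path


if __name__ == "__main__":
    # Hypothetical summary values; a real script would compute these.
    if "SCRIPT_RESPONSE_FILE" in os.environ:
        write_response({"version": "1.4.2", "healthy": True})
    else:
        print("SCRIPT_RESPONSE_FILE is not set; nothing written")
```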

How to use a variable binding between tasks

This example shows you how to use variable bindings in your pipeline tasks.

Table 10.

Sample syntax formats

| Example | Syntax |
|---|---|
| To use a task output value for pipeline notifications and pipeline output properties | ${<Stage Key>.<Task Key>.output.<Task output key>} |
| To refer to the previous task output value as an input for the current task | ${<Previous/Current Stage key>.<Previous task key not in current Task group>.output.<task output key>} |
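If you generate pipeline YAML from a script, a small helper can build these binding strings consistently. The following Python sketch is hypothetical and only formats text. It does not check that the stage, task, or output key exists, and it does not enforce the rule that the referenced task must not be in the current task group.

```python
def task_output_binding(stage_key: str, task_key: str, output_key: str) -> str:
    """Build ${<Stage Key>.<Task Key>.output.<Task output key>}."""
    # Stage and task keys are single names; the output key may carry a nested path.
    for name in (stage_key, task_key):
        if not name or "." in name:
            raise ValueError(f"invalid stage or task key: {name!r}")
    if not output_key:
        raise ValueError("output key must not be empty")
    return f"${{{stage_key}.{task_key}.output.{output_key}}}"


# Bind the REST responseCode of task1 in MY_STAGE, for example in a notification body.
print(task_output_binding("MY_STAGE", "task1", "responseCode"))
```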

To learn more

To learn more about binding variables in tasks, see: