As a DevOps administrator or developer, you can create custom scripts that extend the capability of Automation Pipelines.

With your script, you can integrate Automation Pipelines with your own Continuous Integration (CI) and Continuous Delivery (CD) tools and APIs that build, test, and deploy your applications. Custom scripts are especially useful if you do not expose your application APIs publicly.

Your custom script can do almost anything you need for your build, test, and deploy tools to integrate with Automation Pipelines. For example, your script can work with your pipeline workspace to support continuous integration tasks that build and test your application, and continuous delivery tasks that deploy your application. It can also send a message to Slack when a pipeline finishes.

The Automation Pipelines pipeline workspace supports Docker and Kubernetes for continuous integration tasks and custom tasks.

For more information about configuring the workspace, see Configuring the Pipeline Workspace.

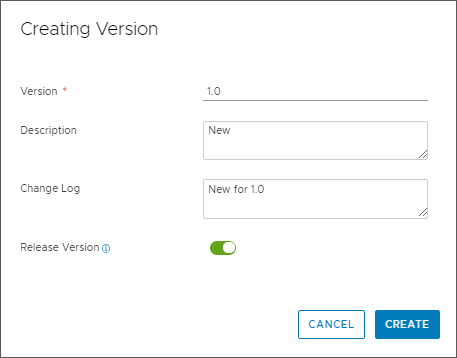

You write your custom script in one of the supported languages. In the script, you include your business logic, and define inputs and outputs. Output types can include number, string, text, and password. You can create multiple versions of a custom script with different business logic, input, and output.
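As a rough illustration of the input and output contract described above, the following Python sketch models a script as a function that takes named inputs and returns named outputs. The `run` and `check` functions, the `INPUT_TYPES` and `OUTPUT_TYPES` tables, and the property names are hypothetical and are not the Automation Pipelines API, which provides its own mechanism for reading inputs and setting outputs.

```python
# Hypothetical sketch: names and structure are illustrative, not the product API.

# Declared properties: name -> type. The type names come from the text above.
INPUT_TYPES = {"channel": "string", "message": "text", "retries": "number"}
OUTPUT_TYPES = {"summary": "string", "length": "number"}

# Python types accepted for each declared type.
_PYTHON_TYPES = {
    "number": (int, float),
    "string": (str,),
    "text": (str,),
    "password": (str,),
}


def check(values, declared):
    """Raise ValueError if values do not match the declared properties."""
    for name, type_name in declared.items():
        if name not in values:
            raise ValueError(f"missing property: {name}")
        value = values[name]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, _PYTHON_TYPES[type_name]):
            raise ValueError(f"{name} must be of type {type_name}")


def run(inputs):
    """Business logic: validate inputs, do the work, return validated outputs."""
    check(inputs, INPUT_TYPES)
    summary = f"{inputs['channel']}: {inputs['message']}"
    outputs = {"summary": summary, "length": len(inputs["message"])}
    check(outputs, OUTPUT_TYPES)
    return outputs


if __name__ == "__main__":
    print(run({"channel": "#builds", "message": "Build passed", "retries": 3}))
```

Keeping the declared properties separate from the business logic makes it easier to create a second version of the script that changes one without the other.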

The scripts that you create reside in your Automation Pipelines instance. You can import YAML code to create a custom integration or export your script as a YAML file to use in another Automation Pipelines instance.

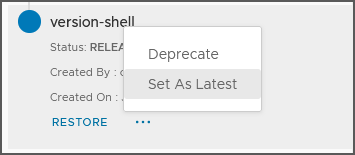

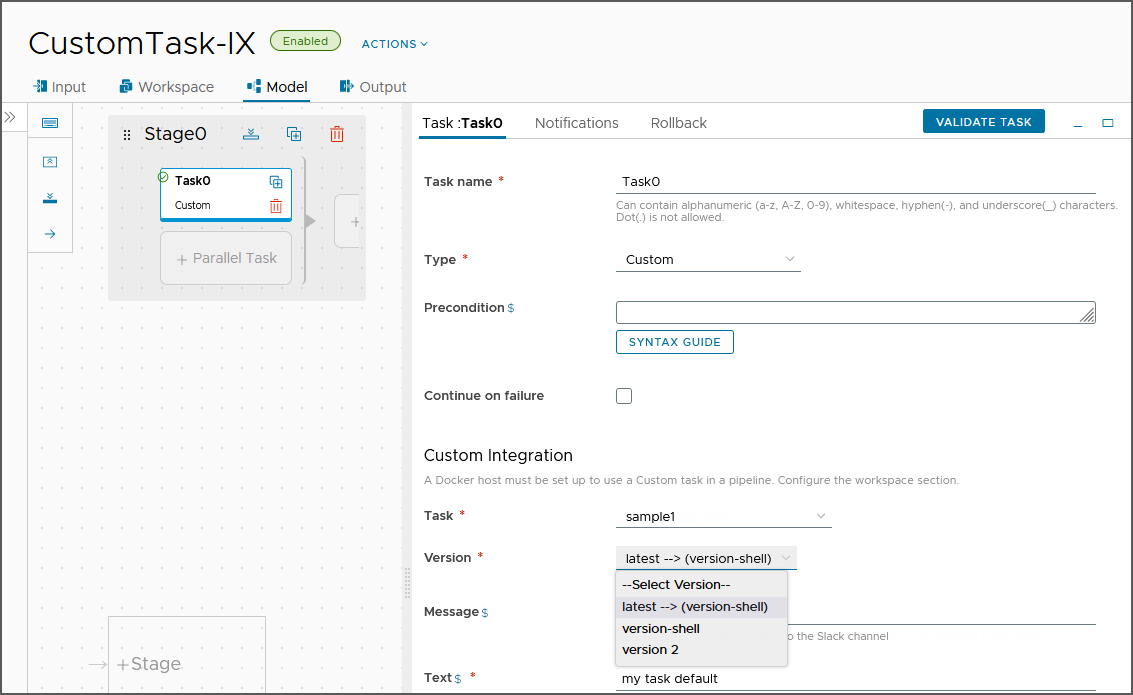

Your pipeline runs a released version of your script in a custom task. If you have multiple released versions, you can set one of them as latest so that it appears with `latest -->` when you select the custom task.

If you attempt to delete a custom integration that a pipeline uses, an error message indicates that you cannot delete it.

Deleting a custom integration removes all versions of your custom script. If you have an existing pipeline with a custom task that uses any version of the script, that pipeline will fail. To ensure that existing pipelines do not fail, you can deprecate and withdraw the version of your script that you no longer want used. If no pipeline is using that version, you can delete it.
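The deletion rules above can be sketched as a small model. This is only an illustration of the reasoning, not how Automation Pipelines stores or checks versions; the `ScriptVersion` class, its fields, and the pipeline names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ScriptVersion:
    """One version of a custom script (hypothetical model)."""
    label: str
    state: str = "released"  # "released", "deprecated", or "withdrawn"
    pipelines: set = field(default_factory=set)  # pipelines that use this version


def can_delete_version(version):
    """A version is safe to delete only when no pipeline uses it."""
    return not version.pipelines


def blocking_pipelines(versions):
    """Pipelines that would fail if the whole integration were deleted."""
    blocked = set()
    for version in versions:
        blocked |= version.pipelines
    return sorted(blocked)


if __name__ == "__main__":
    v1 = ScriptVersion("1.0", state="deprecated", pipelines={"nightly-build"})
    v2 = ScriptVersion("2.0", pipelines={"nightly-build", "release"})
    v3 = ScriptVersion("0.9", state="withdrawn")
    print(can_delete_version(v3))
    print(blocking_pipelines([v1, v2, v3]))
```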

| What you do... | More information about this action... |

|---|---|

| Add a custom task to your pipeline. |

The custom task:

|

| Select your script in the custom task. |

You declare the input and output properties in the script. |

| Save your pipeline, then enable and run it. |

When the pipeline runs, the custom task calls the version of the script specified and runs the business logic in it, which integrates your build, test, and deploy tool with Automation Pipelines. |

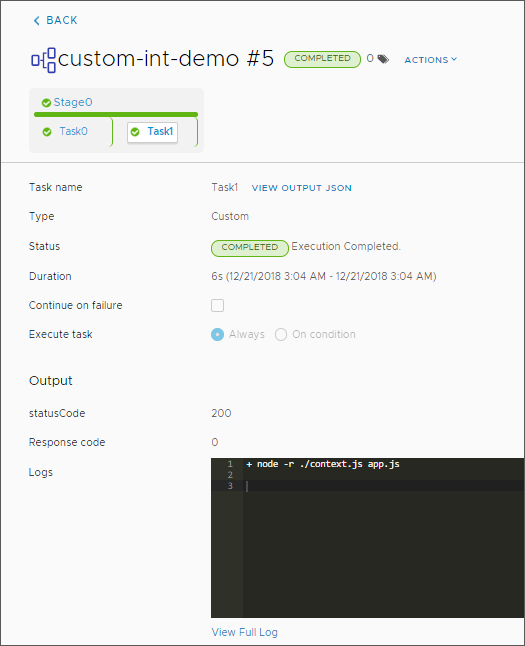

| After your pipeline runs, look at the executions. |

Verify that the pipeline delivered the results you expected. |

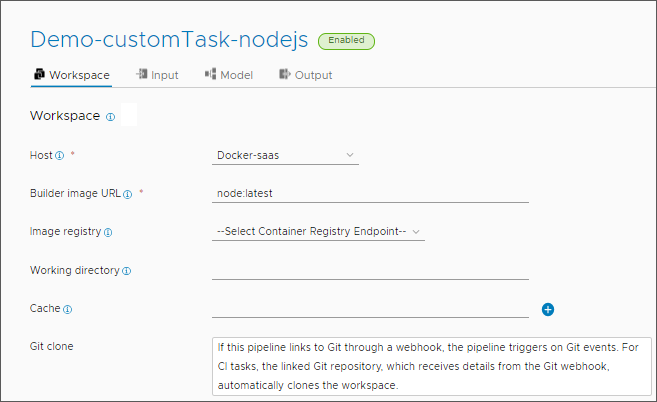

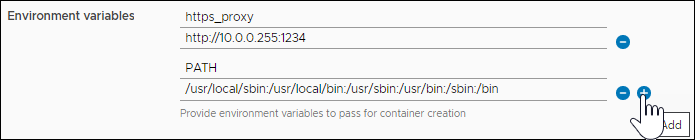

When you use a custom task that calls a Custom Integration version, you can include custom environment variables as name-value pairs on the pipeline Workspace tab. When the builder image creates the workspace container that runs the CI task and deploys your image, Automation Pipelines passes the environment variables to that container.

For example, when your Automation Pipelines instance requires a Web proxy, and you use a Docker host to create a container for a custom integration, Automation Pipelines runs the pipeline and passes the Web proxy setting variables to that container.

| Name | Value |
|---|---|
| HTTPS_PROXY | http://10.0.0.255:1234 |
| https_proxy | http://10.0.0.255:1234 |
| NO_PROXY | 10.0.0.32, *.dept.vsphere.local |
| no_proxy | 10.0.0.32, *.dept.vsphere.local |
| HTTP_PROXY | http://10.0.0.254:1234 |
| http_proxy | http://10.0.0.254:1234 |
| PATH | /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin |
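Inside the container, a script can read these variables like any other environment variable. The following is a minimal sketch, assuming the script is written in Python; the `proxy_settings` helper and the `PROXY_VARS` tuple are hypothetical names used for illustration.

```python
import os
import urllib.request

# Proxy-related variable names from the table above.
PROXY_VARS = (
    "HTTP_PROXY", "http_proxy",
    "HTTPS_PROXY", "https_proxy",
    "NO_PROXY", "no_proxy",
)


def proxy_settings(environ=None):
    """Return the proxy-related variables that are set in the environment."""
    environ = os.environ if environ is None else environ
    return {name: environ[name] for name in PROXY_VARS if name in environ}


if __name__ == "__main__":
    for name, value in proxy_settings().items():
        print(f"{name}={value}")
    # urllib also consults these variables; getproxies() returns the
    # scheme-to-proxy mapping that it detected.
    print(urllib.request.getproxies())
```

Printing the detected settings early in a script is a simple way to confirm that the name-value pairs reached the container.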

Name-value pairs appear in the user interface like this:

This example creates a custom integration that connects Automation Pipelines to your Slack instance, and posts a message to a Slack channel.

Prerequisites

- To write your custom script, verify that you have one of these languages available: Python 2, Python 3, Node.js, or a shell language (Bash, sh, or zsh).

- Generate a container image by using the installed Node.js or the Python runtime.

Procedure

Results

Congratulations! You created a custom integration script that connects Automation Pipelines to your Slack instance, and posts a message to a Slack channel.
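As a rough sketch of the business logic in such a script, the following Python code posts a message to a Slack channel. It assumes a Slack incoming webhook, which accepts a JSON body with a `text` field; the integration in this example may use a different Slack API. The `build_request` and `post_message` functions and the `SLACK_WEBHOOK_URL` variable are hypothetical names, and how the script receives its inputs from Automation Pipelines is not shown.

```python
import json
import os
import urllib.request


def build_request(webhook_url, message):
    """Build a POST request that carries the message as a JSON payload."""
    payload = json.dumps({"text": message}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_message(webhook_url, message, timeout=10):
    """Send the message and return the HTTP status code."""
    request = build_request(webhook_url, message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


if __name__ == "__main__":
    # SLACK_WEBHOOK_URL is an assumed variable name; nothing is sent if it is unset.
    url = os.environ.get("SLACK_WEBHOOK_URL")
    if url:
        print(post_message(url, "Pipeline finished"))
```

Because `urllib` honors the proxy environment variables, a script like this would pick up any Web proxy settings passed to the workspace container.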

What to do next

Continue to create custom integrations to support using custom tasks in your pipelines, so that you can extend the capability of Automation Pipelines in the automation of your software release lifecycle.