AKO is an operator that works as an ingress controller and performs specific functions in a Kubernetes/OpenShift environment with the Avi Load Balancer Controller. It remains in sync with the necessary Kubernetes/OpenShift objects and calls the Avi Load Balancer Controller APIs to configure the virtual services.

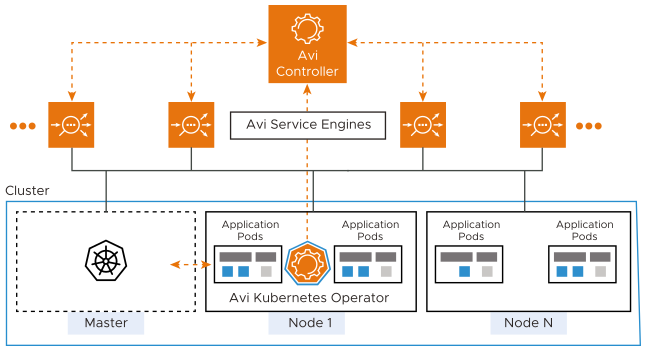

The AKO deployment consists of the following components:

The Avi Load Balancer Controller

The Service Engines (SE)

The Avi Kubernetes Operator (AKO)

An overview of the AKO deployment is as shown below:

Create a Cloud in Avi Load Balancer

The Avi Load Balancer infrastructure cloud is used to place the virtual services that are created for the Kubernetes/OpenShift application.

As a prerequisite to create the cloud, it is recommended to have IPAM and DNS profiles configured.

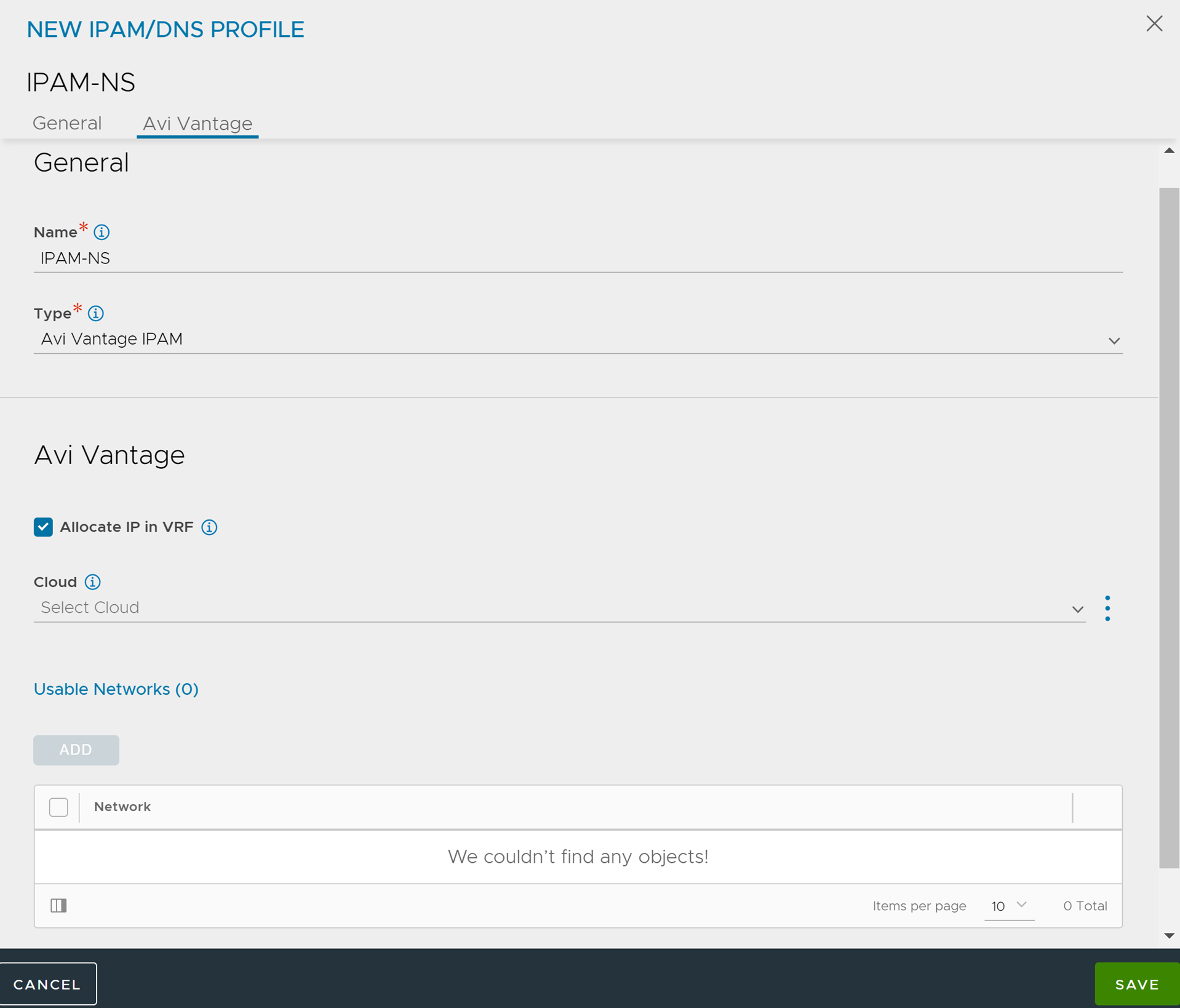

Configure IPAM and DNS Profile

Configure the IPAM profile and select the underlying network and the DNS profile which will be used for ingresses and external services.

To configure the IPAM Profile,

Navigate to .

Edit the IPAM profile as shown below:

Note: The usable network for the virtual services created by the AKO instance must be provided using the vipNetworkList|subnetIP|subnetPrefix fields during Helm installation. For more information on Helm installation, see Install Helm CLI.

Click Save.

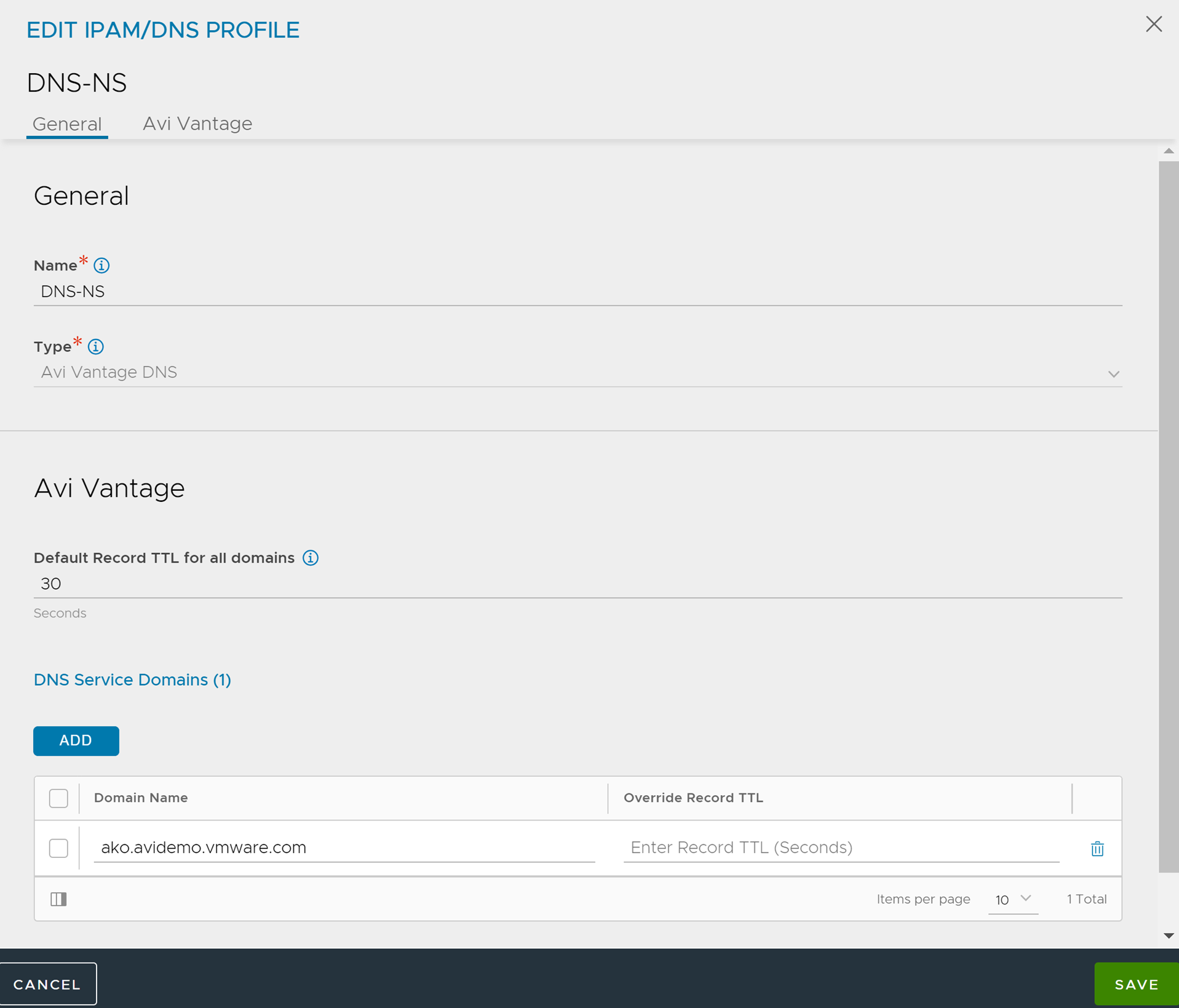

To configure the DNS Profile,

Navigate to .

Configure the DNS profile with the Domain Name.

Click Save.

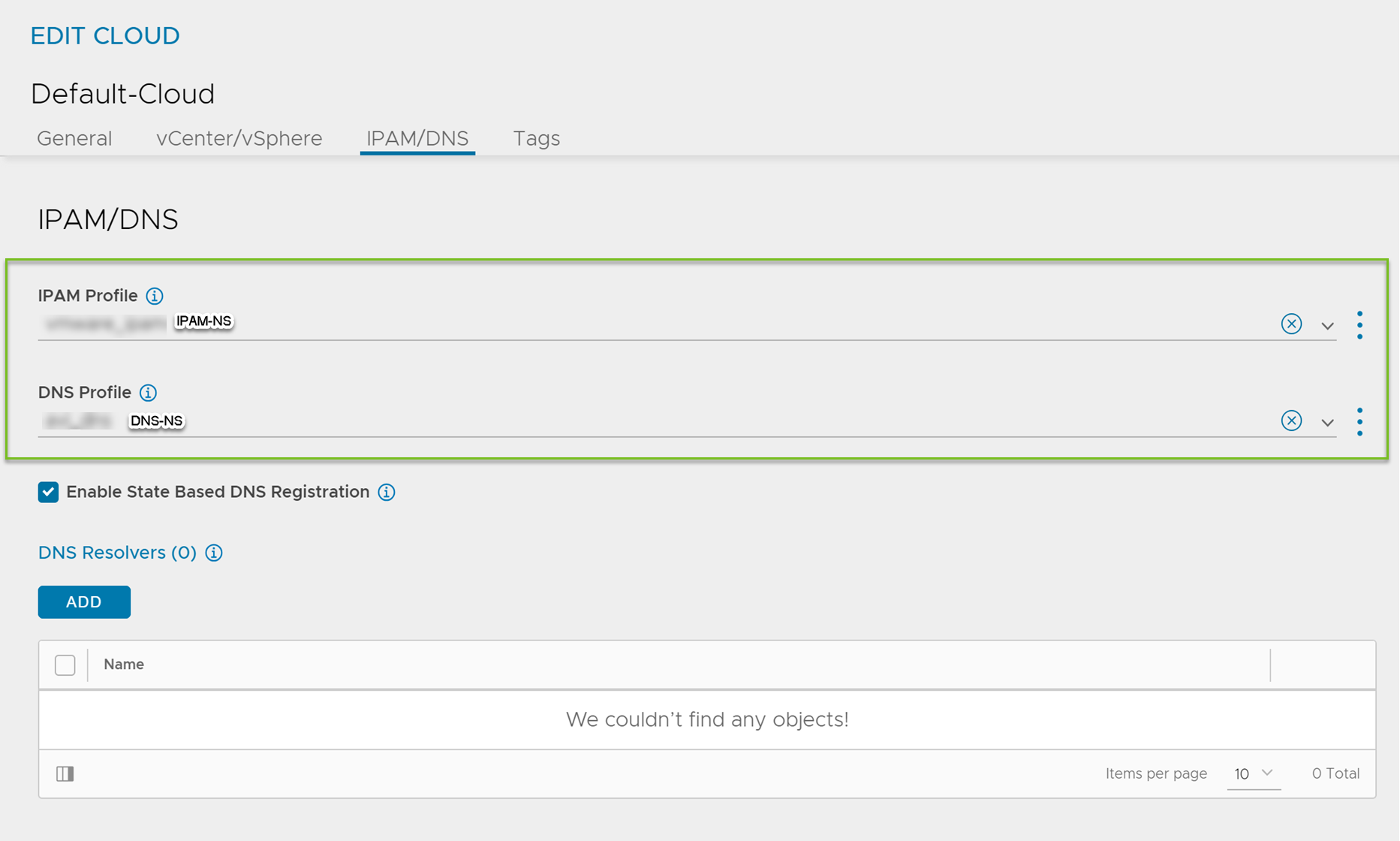

Configure the Cloud

Navigate to .

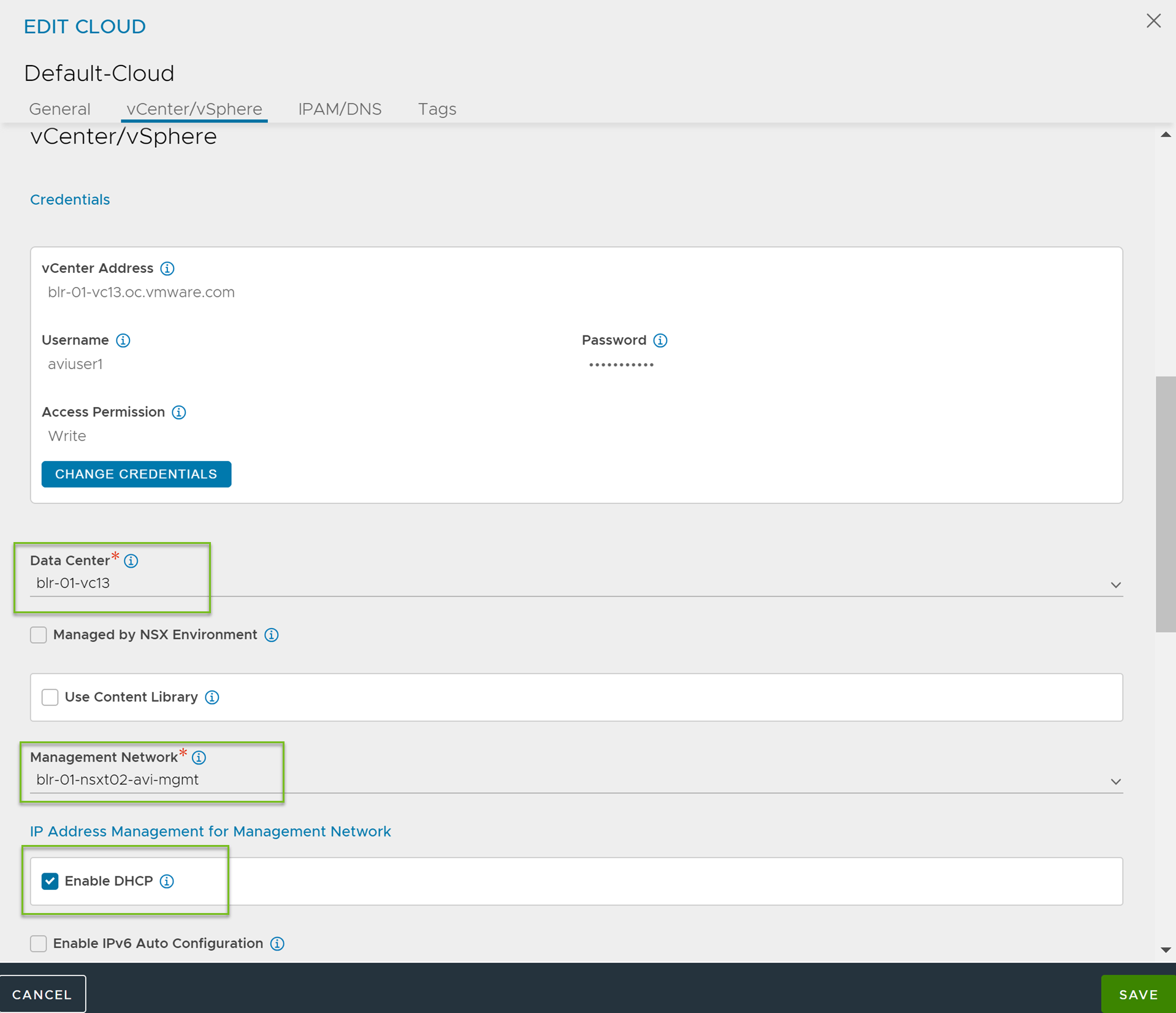

Select the vCenter cloud and click the edit icon.

Under the IPAM/DNS, select the IPAM and DNS profiles created for the north-south apps as shown below:

Under the vCenter/vSphere, select the Data Center and Management Network as shown below:

Under IP Address Management for Management Network, select Enable DHCP.

Click Save.

AKO in NSX-T deployments

Starting with AKO version 1.5.1, AKO supports the NSX-T write access cloud for both NCP and non-NCP CNIs. With the NCP CNI, the pods are assumed to be routable from the SE’s backend data network segments. For this reason, AKO disables the static route configuration when the CNI is specified as ncp in the values.yaml. However, if non-NCP CNIs are used, AKO assumes that static routes can be configured on the SEs to reach the pod networks. For this scenario to be valid, the SEs' backend data network must be configured on the same logical segment on which the Kubernetes/OpenShift cluster runs.

In addition to this, AKO supports both overlay and VLAN backed NSX-T cloud configurations. AKO automatically ascertains if a cloud is configured with overlay segments or is used with VLAN networks.

The VLAN backed NSX-T setup behaves the same as the vCenter write access cloud, and requires no additional input from you. However, overlay based NSX-T setups require configuring a logical segment as the backend data network and providing the corresponding T1 router information when AKO starts, through the Helm values parameter NetworkSettings.nsxtT1LR.
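As a rough illustration of the overlay case described above, the following sketch writes a small Helm values override file. Only the parameter names AKOSettings.cniPlugin and NetworkSettings.nsxtT1LR come from this document; the file name and the T1 router placeholder are hypothetical, and the ncp value is shown only as one possible CNI.

```shell
#!/usr/bin/env bash
# Sketch only: values override for an overlay-backed NSX-T cloud.
# The file name and the T1 router value are placeholders, not real settings.
set -euo pipefail

cat > ako-nsxt-overrides.yaml <<'EOF'
AKOSettings:
  cniPlugin: "ncp"   # example CNI; with ncp, AKO disables static route configuration
NetworkSettings:
  nsxtT1LR: "<t1-router-unique-id>"   # unique ID of the T1 router, not its display name
EOF

echo "Wrote ako-nsxt-overrides.yaml"
```

The resulting file could be passed to helm install with an additional -f flag after the main values.yaml, so that its keys override the chart defaults.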

Configure SE Groups and Node Network List

SE Groups

AKO supports SE groups. Using SE groups, all the clusters can share the same VRF. Each AKO instance is mapped to a unique serviceEngineGroupName, which is used to push the routes on the SE to reach the pods. Each cluster needs a dedicated SE group, which cannot be shared by any other cluster or workload.

Note: If the label is already configured, ensure the cluster name matches the value.

Pre-requisites

Ensure that the Avi Load Balancer Controller is of version 18.2.10 or later.

Create SE groups per AKO cluster (out-of-band)

Node Network List

In a vCenter cloud, nodeNetworkList is a list of PG networks that OpenShift/Kubernetes nodes are a part of. Each node has a CIDR range allocated by Kubernetes. For each node network, the list of all CIDRs has to be mentioned in the nodeNetworkList.

For example, consider Kubernetes nodes that are part of two PG networks, pg1-net and pg2-net. There are two nodes which belong to pg1-net with CIDRs 10.1.1.0/24 and 10.1.2.0/24. There are three nodes which belong to pg2-net with CIDRs 20.1.1.0/24, 20.1.2.0/24, and 20.1.3.0/24. Then nodeNetworkList contains:

pg1-net

10.1.1.0/24

10.1.2.0/24

pg2-net

20.1.1.0/24

20.1.2.0/24

20.1.3.0/24

Note: The nodeNetworkList is only used in the ClusterIP deployment of AKO and in vCenter cloud, and only when disableStaticRouteSync is set to False.

If two Kubernetes clusters have overlapping CIDRs, the SE needs to identify the right gateway for each of the overlapping CIDR groups. This is achieved by specifying the right placement network for the pools that helps the Service Engine place the pools appropriately.

Configure the fields serviceEngineGroupName and nodeNetworkList in the values.yaml file.
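The two fields can be sketched as a values override for the pg1-net/pg2-net example above. The parameter paths ControllerSettings.serviceEngineGroupName and NetworkSettings.nodeNetworkList are taken from the parameter table in this document; the SE group name, the file name, and the networkName/cidrs sub-keys are assumptions and should be checked against the values.yaml of your chart version.

```shell
#!/usr/bin/env bash
# Sketch only: SE group and node network list for the two-PG-network example.
# "ako-cluster1-seg" is a hypothetical SE group name; the networkName/cidrs
# layout is an assumption about the chart's schema.
set -euo pipefail

cat > ako-network-overrides.yaml <<'EOF'
ControllerSettings:
  serviceEngineGroupName: "ako-cluster1-seg"   # one dedicated SE group per cluster
NetworkSettings:
  nodeNetworkList:
    - networkName: "pg1-net"
      cidrs:
        - 10.1.1.0/24
        - 10.1.2.0/24
    - networkName: "pg2-net"
      cidrs:
        - 20.1.1.0/24
        - 20.1.2.0/24
        - 20.1.3.0/24
EOF

echo "Wrote ako-network-overrides.yaml"
```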

Install Helm CLI

Helm is an application manager for OpenShift/Kubernetes. Helm charts are helpful in configuring the application. For more information, see Helm Installation.

AKO can be installed with or without internet access on the cluster.

For information on installing with internet access, see Install AKO for Kubernetes.

For information on installing without internet access, see Installing AKO Offline Using Helm.

Install AKO for Kubernetes

Create the avi-system namespace:

kubectl create ns avi-system

Note: AKO can run in namespaces other than avi-system. The namespace in which AKO is deployed is governed by the --namespace flag value provided during Helm install. The setup steps are otherwise unchanged. avi-system is used throughout this documentation and must be replaced by the namespace provided during AKO installation.

Helm version 3.8 and above will be required to proceed with Helm installation.
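The version requirement above can be checked before continuing. The following is a minimal sketch, assuming GNU sort (for its -V version ordering) is available; the version_ge helper is a hypothetical name introduced here for illustration.

```shell
#!/usr/bin/env bash
# Sketch only: verify that the local Helm client is at least 3.8.
# Assumes GNU sort with -V support; version_ge is an illustrative helper.

# Returns 0 when version $1 is greater than or equal to version $2.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

if command -v helm >/dev/null 2>&1; then
  # helm reports a version such as "v3.12.1"; strip the leading "v"
  # and any "+build" suffix before comparing.
  helm_version="$(helm version --template '{{.Version}}')"
  helm_version="${helm_version#v}"
  helm_version="${helm_version%%+*}"
  if version_ge "$helm_version" "3.8.0"; then
    echo "Helm $helm_version meets the 3.8 minimum"
  else
    echo "Helm $helm_version is older than 3.8; upgrade before installing AKO" >&2
  fi
else
  echo "helm not found on PATH" >&2
fi
```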

Search the available charts for AKO:

helm show chart oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2
Pulled: projects.registry.vmware.com/ako/helm-charts/ako:1.12.2
Digest: sha256:xyxyxxyxyx
apiVersion: v2
appVersion: 1.12.2
description: A helm chart for Avi Kubernetes Operator
name: ako
type: application
version: 1.12.2

Use the values.yaml from this chart to edit values related to the Avi Load Balancer configuration. To get the values.yaml for a release, run the following command:

helm show values oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 > values.yaml

Edit the values.yaml file and update the details according to your environment.

Install AKO:

Multiple AKO instances can be installed in a cluster.

Note: Out of multiple AKO instances, only one AKO instance must be Primary.

Each AKO instance must be installed in a different namespace.

Primary AKO Installation:

helm install --generate-name oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 -f /path/to/values.yaml --set ControllerSettings.controllerHost=<controller IP or Hostname> --set avicredentials.username=<avi-ctrl-username> --set avicredentials.password=<avi-ctrl-password> --set AKOSettings.primaryInstance=true --namespace=avi-system

Secondary AKO Installation:

helm install --generate-name oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 -f /path/to/values.yaml --set ControllerSettings.controllerHost=<controller IP or Hostname> --set avicredentials.username=<avi-ctrl-username> --set avicredentials.password=<avi-ctrl-password> --set AKOSettings.primaryInstance=false --namespace=avi-system

Verify the installation:

helm list -n avi-system
NAME            NAMESPACE   REVISION  UPDATED     STATUS    CHART       APP VERSION
ako-1691752136  avi-system  1         2024-09-02  deployed  ako-1.12.2  1.12.2

Uninstall using Helm

To uninstall AKO using helm,

Run the following:

helm delete <ako-release-name> -n avi-system

Note: The ako-release-name is obtained by running helm list as shown in the previous step.

Run the following:

kubectl delete ns avi-system

AKO in OpenShift Cluster

AKO can be used in an OpenShift cluster to configure routes and services of type LoadBalancer.

Pre-requisites for Using AKO in OpenShift Cluster

Configure an Avi Load Balancer Controller with a vCenter cloud and select the IPAM and DNS profiles created for the north-south apps. For more information, see Configure the Cloud.

Ensure the OpenShift version is 4.4 or higher to perform a Helm-based AKO installation.

Note: For OpenShift 4.x releases prior to 4.4 that do not have Helm, either AKO needs to be installed manually or Helm 3 needs to be manually deployed in the OpenShift cluster.

Ingresses, if created in the OpenShift cluster, will not be handled by AKO.

Install AKO for OpenShift

Create the avi-system namespace.

oc new-project avi-system

Search for available charts.

helm show chart oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2
Pulled: projects.registry.vmware.com/ako/helm-charts/ako:1.12.2
Digest: sha256:xyxyxxyxyx
apiVersion: v2
appVersion: 1.12.2
description: A helm chart for Avi Kubernetes Operator
name: ako
type: application
version: 1.12.2

Get the values.yaml for the release using the following command, then edit the file and update the details according to your environment.

helm show values oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 > values.yaml

Install AKO.

helm install --generate-name oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 -f /path/to/values.yaml --set ControllerSettings.controllerHost=<controller IP or Hostname> --set avicredentials.username=<avi-ctrl-username> --set avicredentials.password=<avi-ctrl-password> --namespace=avi-system

Verify the installation.

helm list -n avi-system
NAME            NAMESPACE   REVISION  UPDATED     STATUS    CHART       APP VERSION
ako-1691752136  avi-system  1         2024-09-02  deployed  ako-1.12.2  1.12.2

Installing AKO Offline Using Helm

Pre-requisites for Installation

Ensure the following prerequisites are met:

The Docker image is downloaded from the My VMware portal.

Log in to the portal using your My VMware credentials.

A private container registry to upload the AKO Docker image

Helm version 3.0 or higher installed

Installing AKO

To install AKO offline using Helm,

Extract the .tar file to get the AKO installation directory with the Helm and Docker images.

tar -zxvf ako_cpr_sample.tar.gz
ako/
ako/install_docs.txt
ako/ako-1.12.2-docker.tar.gz
ako/ako-1.12.2-helm.tgz

Change the working directory to this path:

cd ako/

Load the Docker image on one of your machines.

sudo docker load < ako-1.12.2-docker.tar.gz

Push the docker image to your private registry. For more information, see Docker registry push.

Extract the AKO Helm package. This will create a sub-directory ako/ako which contains the Helm charts for AKO (ako/chart.yaml, crds, templates, values.yaml).

Update the helm values.yaml with the required AKO configuration (Controller IP/credentials, docker registry information, and so on).

Create the namespace avi-system on the OpenShift/Kubernetes cluster.

kubectl create namespace avi-system

Install AKO using the updated helm charts.

helm install ./ako --generate-name --namespace=avi-system
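The registry-related part of the offline steps above can be sketched as follows. The registry host, the file name, and the image placeholders are hypothetical; image.repository is the parameter named in the parameter table of this document, and whether your chart version also needs an explicit image tag is something to confirm in its values.yaml.

```shell
#!/usr/bin/env bash
# Sketch only: point the chart at a private registry copy of the AKO image.
# REGISTRY is a made-up example host and project path.
set -euo pipefail

REGISTRY="registry.example.com/avi"

# After "docker load", the image would typically be re-tagged and pushed.
# The loaded image name and tag are placeholders, so these stay commented out:
#   docker tag <loaded-ako-image>:<tag> "${REGISTRY}/ako:<tag>"
#   docker push "${REGISTRY}/ako:<tag>"

cat > ako-image-overrides.yaml <<EOF
image:
  repository: "${REGISTRY}/ako"
EOF

echo "Wrote ako-image-overrides.yaml"
# Possible use with the local chart:
#   helm install ./ako --generate-name --namespace=avi-system -f ako-image-overrides.yaml
```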

Upgrade AKO

AKO is stateless. It can be restarted or re-created without affecting the state of the existing objects in Avi Load Balancer, provided those objects do not require an update. AKO is upgraded using Helm.

During the upgrade process, a new docker image will be pulled from the configured repository and the AKO pod will be restarted.

On restarting, the AKO pod will re-evaluate the checksums of the existing Avi Load Balancer objects with the REST layer’s intended object checksums and do the necessary updates.

Notes for upgrading from versions lower than 1.5 to version 1.12.2. Refer to this section before upgrading from any version of AKO to AKO version 1.5 and higher:

Refactored VIP Network Inputs

AKO version 1.5.1 deprecates subnetIP and subnetPrefix in the values.yaml (used during Helm installation), and allows specifying the information through the cidr field within vipNetworkList.
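A minimal sketch of the refactored input, written as a values override file, is shown below. The cidr field and the deprecated subnetIP and subnetPrefix names come from this document; the networkName key, the network name, the addresses, and the file name are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch only: VIP network given through cidr inside vipNetworkList.
# The network name and addresses are example values.
set -euo pipefail

cat > ako-vip-overrides.yaml <<'EOF'
NetworkSettings:
  # Deprecated form, shown commented out for comparison only:
  #   subnetIP: "10.10.10.0"
  #   subnetPrefix: "24"
  vipNetworkList:
    - networkName: "vip-network-1"   # hypothetical network name
      cidr: "10.10.10.0/24"          # carries what subnetIP and subnetPrefix used to
EOF

echo "Wrote ako-vip-overrides.yaml"
```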

The existing AviInfraSetting CRDs need an explicit update after applying the updated CRD schema yaml. To update existing AviInfraSettings while performing a Helm upgrade,

Ensure that the AviInfraSetting CRDs have the required configuration. If not, save it in the YAML files.

Upgrade the CRDs to provide vipNetworks in the new format. For more information, see To upgrade AKO using the Helm repository.

Updating the CRD schema would remove the currently invalid spec.network configuration in existing AviInfraSettings. Update the AviInfraSetting to follow the new schema as shown above and apply the changed yaml.

Proceed with Step 2 of upgrading AKO.

To upgrade AKO using the Helm repository,

Helm does not upgrade the CRDs during a release upgrade. Before you upgrade a release, run the following command to upgrade the CRDs:

helm template oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 --include-crds --output-dir <output_dir>

This will save the helm files to an output directory which will contain the CRDs corresponding to the AKO version. Install CRDs using:

kubectl apply -f <output_dir>/ako/crds/

The release is listed as shown below:

helm list -n avi-system
NAME            NAMESPACE   REVISION  UPDATED                                  STATUS    CHART       APP VERSION
ako-1593523840  avi-system  1         2023-04-16 13:44:31.609195757 +0000 UTC  deployed  ako-1.10.3  1.10.3

Get the values.yaml for the latest AKO version:

helm show values oci://projects.registry.vmware.com/ako/helm-charts/ako --version 1.12.2 > values.yaml

Upgrade the helm chart:

helm upgrade ako-1593523840 oci://projects.registry.vmware.com/ako/helm-charts/ako -f /path/to/values.yaml --version 1.12.2 --set ControllerSettings.controllerHost=<IP or Hostname> --set avicredentials.password=<password> --set avicredentials.username=<username> --namespace=avi-system

Note: In a multiple AKO deployment scenario, all AKO instances must be on the same version.

Upgrading AKO Offline Using Helm

To upgrade AKO without using the online Helm repository,

Follow the steps 1 to 6 from the Installing AKO Offline Using Helm section.

Upgrade CRDs using the following command:

kubectl apply -f ./ako/crds/

Use the following command:

helm upgrade <release-name> ./ako -n avi-system

Delete AKO

Edit the configmap used for AKO and set the deleteConfig flag to true if you want to delete the AKO created objects. Else skip to step 2.

kubectl edit configmap avi-k8s-config -n avi-system

Delete AKO using the command shown below:

helm delete $(helm list -n avi-system -q) -n avi-system

Note: Do not delete the configmap avi-k8s-config manually, unless you are doing a complete Helm uninstall. If you delete it, the avi-k8s-config configmap has to be reconfigured and the AKO pod has to be restarted.

Parameters

The following table lists the configurable parameters of the AKO chart and their default values:

| Parameter | Description | Default Values |
|---|---|---|
| ControllerSettings.controllerVersion | Avi Load Balancer Controller version | Current Controller version |
| ControllerSettings.controllerHost | Used to specify the Avi Load Balancer Controller IP or Hostname | None |
| ControllerSettings.cloudName | Name of the cloud managed in Avi Load Balancer | Default-Cloud |
| ControllerSettings.tenantsPerCluster | Set to | False |
| ControllerSettings.tenantName | Name of the tenant where all the AKO objects will be created in Avi Load Balancer | Admin |
| ControllerSettings.vrfName | Name of the VRF under which all the AKO objects are created in Avi Load Balancer. Applicable only in the vCenter cloud. | Empty String |
| ControllerSettings.primaryInstance | Specify if the AKO instance is primary or not. | True |
| L7Settings.shardVSSize | Displays shard VS size enum values: LARGE, MEDIUM, SMALL | LARGE |
| AKOSettings.fullSyncFrequency | Displays the full sync frequency | 1800 |
| L7Settings.defaultIngController | AKO is the default ingress controller | True |
| ControllerSettings.serviceEngineGroupName | Displays the name of the Service Engine Group | Default-Group |
| NetworkSettings.nodeNetworkList | Displays the list of networks and corresponding CIDR mappings for the Kubernetes nodes | None |
| AKOSettings.clusterName | Unique identifier for the running AKO instance. AKO identifies objects it created on Avi Load Balancer Controller using this parameter | Required |
| NetworkSettings.subnetIP | Subnet IP of the data network | Deprecated |
| NetworkSettings.subnetPrefix | Subnet Prefix of the data network | Deprecated |
| NetworkSettings.vipNetworkList | Displays the list of Network Names and Subnet information for VIP network, multiple networks allowed only for AWS Cloud | Required |
| NetworkSettings.enableRHI | Publish route information to BGP peers | False |
| NetworkSettings.bgpPeerLabels | Select BGP peers using bgpPeerLabels, for selective virtual service VIP advertisement. | Empty List |
| NetworkSettings.nsxtT1LR | Unique ID (Note: not its display name) of the T1 Logical Router for Service Engine connectivity. This only applies to the NSX-T cloud. | Empty String |
| L4Settings.defaultDomain | Used to specify a default sub-domain for L4 LB services | First domainname found in cloud's DNS profile |
| L4Settings.autoFQDN | Specify the layer 4 FQDN format | default |
| L7Settings.noPGForSNI | Skip using Pool Groups for SNI children | False |
| L7Settings.l7ShardingScheme | Sharding scheme enum values: hostname, namespace | hostname |
| AKOSettings.cniPlugin | Configure the string if your CNI is calico or openshift or ovn-kubernetes. For Cilium CNI, set the string as cilium only when using Cluster Scope mode for IPAM and leave it empty if using Kubernetes Host Scope mode for IPAM. | calico\|canal\|flannel\|openshift\|antrea\|ncp\|ovn-kubernetes\|cilium |
| AKOSettings.enableEvents | enableEvents can be changed dynamically from the configmap | True |
| AKOSettings.logLevel | logLevel values: | INFO |
| AKOSettings.primaryInstance | Specify AKO instance is primary or not | True |
| AKOSettings.deleteConfig | Set to | False |
| AKOSettings.disableStaticRouteSync | Deactivate static route syncing if set to | False |
| AKOSettings.apiServerPort | Internal port for AKO's API server for the liveness probe of the AKO pod | 8080 |
| AKOSettings.blockedNamespaceList | List of Kubernetes/OpenShift namespaces blocked by AKO | Empty List |
| AKOSettings.istioEnabled | Set to | False |
| AKOSettings.ipFamily | Set to V6 if user wants to deploy AKO with V6 backend (vCenter cloud with calico CNI only) (tech preview) | V4 |
| AKOSettings.useDefaultSecretsOnly | Restricts the secret handling to default secrets present in the namespace where AKO is installed in OpenShift clusters if set to | False |
| AKOSettings.layer7Only | Operate AKO as a pure layer 7 ingress Controller | False |
| avicredentials.username | Enter the Avi Load Balancer Controller username | None |
| avicredentials.password | Enter the Avi Load Balancer Controller password | None |
| image.repository | Specify docker-registry that has the AKO image | avinetworks/ako |
| image.pullSecrets | Specify the pull secrets for the secure private container image registry that has the AKO image | Empty List |

The field vipNetworkList is mandatory. It is used by the IPAM provider module to allocate virtual service IPs.

Each AKO instance mapped to a given Avi Load Balancer cloud must have a unique clusterName parameter. This would maintain the uniqueness of object naming across Kubernetes clusters.

Starting with version 1.12.1, AKO supports reading Avi Load Balancer Controller credentials, including certificateAuthorityData, from an existing avi-secret in the namespace in which AKO is installed. If the username and either password or authtoken are not specified, avi-secret will not be created as part of Helm installation. AKO will assume that avi-secret already exists in the namespace in which the AKO Helm release is installed and will reference it.
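One way to prepare such a secret ahead of the Helm installation is sketched below. The secret name avi-secret and the key names username, password, and certificateAuthorityData come from the text above; the exact set of keys AKO expects, the file name, and the avi-system namespace are assumptions to verify for your release.

```shell
#!/usr/bin/env bash
# Sketch only: manifest for a pre-created avi-secret.
# Placeholder values must be replaced; the key layout is an assumption.
set -euo pipefail

cat > avi-secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: avi-secret
  namespace: avi-system
type: Opaque
stringData:
  username: "<avi-ctrl-username>"
  password: "<avi-ctrl-password>"
  # certificateAuthorityData: "<PEM-encoded CA certificate>"   # optional
EOF

echo "Wrote avi-secret.yaml"
# Apply it before installing the chart without credentials:
#   kubectl apply -f avi-secret.yaml
```

Using stringData lets the values be written as plain text; Kubernetes encodes them when the secret is created.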