It is recommended to deploy Avi Load Balancer Controller in a three-node cluster. Owing to the limited availability of resources, some businesses may not find this recommendation preferable. In such cases, Avi Load Balancer Controller can be deployed as a standalone node. In a standalone node deployment, the availability of Avi Load Balancer Controller is maintained by the underlying infrastructure rather than the clustering mechanism.

Deploying and hosting Avi Load Balancer Controller on VMware allows VMware’s native solutions to be used to maintain high availability of the Controller. This section describes how a single-node Avi Load Balancer Controller cluster is hosted within VMware and how native solutions can maintain and restore Controller availability, both proactively and reactively, in response to an infrastructure impact. It focuses on how to use the VMware environment in conjunction with a deployed Controller. Setting up the VMware environment is outside the scope of this section.

It is highly recommended to maintain up-to-date configuration archives. A single node cluster implies there is only one host maintaining the configuration. During disaster recovery, for example, after a storage failure, Avi Load Balancer Controller will have to be restored from the configuration archive.
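
As an illustration of keeping archives up to date, the following minimal sketch checks whether the newest file in a local archive directory is recent enough. The directory layout, the function names, and the idea that archives are exported to a local directory are assumptions made for this example; they are not part of Avi Load Balancer.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path
from typing import Optional


def newest_archive_age(archive_dir: Path,
                       now: Optional[datetime] = None) -> Optional[timedelta]:
    """Return the age of the most recently modified file in archive_dir.

    Returns None when the directory is missing or contains no files.
    """
    if not archive_dir.is_dir():
        return None
    now = now or datetime.now(timezone.utc)
    mtimes = [p.stat().st_mtime for p in archive_dir.iterdir() if p.is_file()]
    if not mtimes:
        return None
    newest = datetime.fromtimestamp(max(mtimes), tz=timezone.utc)
    return now - newest


def archive_is_fresh(archive_dir: Path, max_age: timedelta,
                     now: Optional[datetime] = None) -> bool:
    """True when at least one archive exists and the newest is within max_age."""
    age = newest_archive_age(archive_dir, now)
    return age is not None and age <= max_age
```

For example, `archive_is_fresh(Path("/backups/controller"), timedelta(hours=24))` (a hypothetical path) could be run from a scheduled job to raise an alert when no archive has been written in the last day.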

For more information on VMware HA, DRS, and vMotion for the Controller cluster and Service Engines, see the NSX Advanced Load Balancer High Availability in VMware vCenter Environment topic in the VMware Avi Load Balancer Installation Guide.

VMware Solutions

VMware provides native mechanisms for maintaining availability of critical applications and virtual machines. vMotion and VMware High Availability (VMware HA) were tested, and any impact to availability of a single node Avi Load Balancer Controller was measured and data integrity validated.

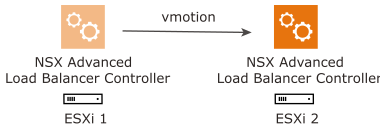

vMotion

vMotion allows live migration of a virtual machine from one host to another with no downtime. This is a proactive approach to maintaining the availability of a virtual machine’s services: virtual machines can be migrated prior to server maintenance or moved off a degraded or failing server.

For more information, see vMotion Overview and Best Practices for Configuring Resources for vMotion.

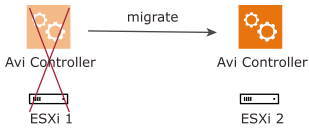

VMware High Availability (VMware HA)

VMware High Availability provides failure protection for applications and virtual machines. In case of server failure, VMware HA allows virtual machines to be dynamically migrated and started up on another server, restoring the application’s availability.

For more information, see About VMware HA and Best practices for Configuring Resources for VMware.

Validation Scenarios

The following scenarios were tested for validating, restoring, and maintaining the availability of a single node Avi Load Balancer Controller running on VMware:

- vMotion live migration

- Server failure

Setup Specifications

The following setup specifications were used when validating the scenarios:

- VMware vSphere 6.0 Update 3

- Avi Load Balancer 17.2.7

- NFS shared datastore

Use Cases

- vMotion Live Migration

During the vMotion live migration, Avi Load Balancer Controller was migrated from one server to another while remaining powered on.

- Expectation

Availability is maintained throughout, with instances of increased API latency.

- Result

During the process, Avi Load Balancer Controller remained available, with intermittent occurrences of increased API latency. After migration, all services were restored and the Controller returned to normal operation. There was no data path impact (load balanced applications) for the duration of the test scenario.

- Server Failure

The ESXi server hosting the Avi Load Balancer Controller was made to fail.

- Expectation

The Controller is unavailable for 3-5 minutes, with an additional 2-3 minutes before the Controller services are up and running. VMware High Availability restores the availability of the Avi Load Balancer Controller.

- Result

On ESXi host failure, it took 3-5 minutes for vCenter to detect the host failure, migrate the Avi Load Balancer Controller, and power it on. Within the next 2-3 minutes, Controller services were up and the APIs were available. There was no data path impact (load balanced applications) for the duration of the test scenario.
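
The two phases of the server failure result add up to the total time the control plane was unavailable. The short sketch below only performs that arithmetic on the figures reported above; the names are illustrative.

```python
from typing import Tuple

# Each phase is a (best case, worst case) duration in minutes.
Minutes = Tuple[int, int]

HOST_FAILOVER: Minutes = (3, 5)    # detect host failure, migrate, power on
SERVICE_STARTUP: Minutes = (2, 3)  # Controller services and APIs come up


def total_window(*phases: Minutes) -> Minutes:
    """Sum the best-case and worst-case durations of consecutive phases."""
    return (sum(p[0] for p in phases), sum(p[1] for p in phases))


print(total_window(HOST_FAILOVER, SERVICE_STARTUP))  # (5, 8)
```

In the tested setup, this corresponds to roughly 5 to 8 minutes without Controller API access, during which the data path was not affected.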

Avi Load Balancer Deployment Recommendations

There are some deployment best practices to consider when deploying a single Avi Load Balancer Controller. These recommendations are applicable when the Avi Load Balancer Controller and Service Engines are hosted on the same VMware cluster. Because the Service Engines and Avi Load Balancer Controller potentially share fate when hosted on the same cluster, both control plane availability (Avi Load Balancer Controller) and data plane availability (Service Engine) are taken into consideration. These recommendations may vary based on the configured SE group and the VMware integration.

For more information on the configured SE group, see the Elastic HA for NSX Advanced Load Balancer Service Engines topic in the VMware Avi Load Balancer Installation Guide or the Legacy HA for NSX Advanced Load Balancer Service Engines topic in the VMware Avi Load Balancer Configuration Guide.

For more information on VMware integration, see the Installing NSX Advanced Load Balancer in VMware vSphere Environments topic in the VMware Avi Load Balancer Installation Guide.

Elastic HA - Write Access

When deploying Service Engines in an Elastic HA mode, it is recommended to host Avi Load Balancer Controller on different servers than the Service Engines. If the Service Engine and Controller are hosted on the same server, in a failure scenario, the virtual services hosted on that Service Engine will be impacted until the controller services are completely restored.

vMotion and DRS are not recommended for Service Engines.

- Configurations in Avi Load Balancer

Modify the Service Engine group to specify the hosts or clusters on which the Service Engines can or cannot be created. By doing so, you can restrict the Service Engines to hosts that the Controller will not be hosted on. For more information, see the Performing an Include-Exclude Operation on a Cluster and Host topic in the VMware Avi Load Balancer Configuration Guide.

- Configurations in VMware

To keep the Avi Load Balancer Controller separate from the Service Engines, use VM Affinity rules within VMware. Configure the VM Affinity rules such that the Controller runs on servers different from those hosting the Service Engines.

For more information, see VMware VM Affinity and VMware VM Affinity Setup.

Elastic HA - No Access

When deploying Service Engines in an Elastic HA mode, it is recommended to host Avi Load Balancer Controller on different servers than the Service Engines. If the Service Engine and Avi Load Balancer Controller are hosted on the same server, then in a failure scenario, the virtual services hosted on that Service Engine will be impacted until the Controller services are completely restored.

vMotion and DRS are not recommended for Service Engines.

- Configurations in Avi Load Balancer

None required.

- Configurations in VMware

To keep the Avi Load Balancer Controller separate from the Service Engines, use VM Affinity rules within VMware. Configure the VM Affinity rules such that the Controller runs on servers different from those hosting the Service Engines. Use one rule to define the hosts that are eligible for the Controller and another to define the hosts that are eligible for the Service Engines. By doing so, you can specify different hosts for Avi Load Balancer Controller than those specified for the Service Engines. These rules should keep the Service Engines separated from the Avi Load Balancer Controller, thus reducing the risk to data plane traffic in the event of a host failure.

For more information, see VMware VM Affinity and VMware VM Affinity Setup.
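
The intent of the two rules can be checked with a small sketch like the one below. It assumes the host groups and the current VM-to-host placement have already been collected into plain Python structures; the host and VM names are hypothetical, and no vCenter API is called.

```python
from typing import Dict, List, Set


def shared_hosts(controller_hosts: Set[str], se_hosts: Set[str]) -> Set[str]:
    """Hosts that appear in both eligibility groups (ideally none)."""
    return controller_hosts & se_hosts


def colocated_service_engines(placement: Dict[str, str],
                              controller_vm: str,
                              se_vms: List[str]) -> List[str]:
    """Service Engine VMs currently running on the Controller's host."""
    controller_host = placement[controller_vm]
    return [vm for vm in se_vms if placement.get(vm) == controller_host]


# Hypothetical inventory used only for illustration.
controller_group = {"esx-01"}
se_group = {"esx-02", "esx-03"}
placement = {"avi-controller": "esx-01", "avi-se-1": "esx-02", "avi-se-2": "esx-03"}

print(shared_hosts(controller_group, se_group))  # set()
print(colocated_service_engines(placement, "avi-controller",
                                ["avi-se-1", "avi-se-2"]))  # []
```

An empty result from both checks indicates that a single host failure cannot take down the Controller and a Service Engine at the same time.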

Legacy HA - Write Access

When deploying Service Engines in the Legacy HA mode, it is recommended that Avi Load Balancer Controller is hosted on different servers than the Service Engines. If the primary Service Engine and Controller are hosted on the same server, in a failure scenario, the virtual services hosted on that Service Engine will be impacted until the controller services are completely restored.

Note: vMotion and DRS are not recommended for Service Engines.

- Configurations in Avi Load Balancer

Modify the Service Engine group to specify the hosts or clusters on which the Service Engines can or cannot be created. By doing so, you can restrict the Service Engines to hosts that Avi Load Balancer Controller will not be hosted on.

For more information, see the Performing an Include-Exclude Operation on a Cluster and Host topic in the VMware Avi Load Balancer Configuration Guide.

- Configurations in VMware

To keep the Avi Load Balancer Controller separate from the Service Engines, use VM Affinity rules within VMware. Configure the VM Affinity rules such that Avi Load Balancer Controller runs on servers different from those hosting the Service Engines.

For more information, see VMware VM Affinity and VMware VM Affinity Setup.

Legacy HA - No Access

When deploying Service Engines in the Legacy HA mode, it is recommended to host Avi Load Balancer Controller on different servers than the Service Engines. If the Service Engine and Avi Load Balancer Controller are hosted on the same server, in a failure scenario, the virtual services hosted on that Service Engine will be impacted until the Avi Load Balancer Controller services are completely restored.

vMotion and DRS are not recommended for Service Engines.

If the server resources are not sufficient to keep the Avi Load Balancer Controller separate from all the Service Engines, it is recommended to not select Distribute Load within the Legacy HA configuration. This will result in only a single Service Engine being primary for all virtual services.

- Configurations in Avi Load Balancer

If there are not enough compute resources to host the Controller separately from all Service Engines, do not configure Distribute Load within the Legacy HA setup.

For more information, see the Legacy HA for NSX Advanced Load Balancer Service Engines topic in the VMware Avi Load Balancer Configuration Guide.

- Configurations in VMware

To host the Avi Load Balancer Controller separately, use VM Affinity rules within VMware. Use one rule to define the hosts that are eligible for the Controller and another to define the hosts that are eligible for the Service Engines. By doing so, you specify different hosts for the Controller than those specified for the Service Engines. These rules should keep the Service Engines separated from the Controller, thus reducing the risk to data plane traffic in the event of a host failure.

If there are not enough server resources to host Avi Load Balancer Controller separately from all the Service Engines, set up the Affinity rules to keep the Controller separate from the primary Service Engine. This prevents impact to data plane traffic if the server hosting Avi Load Balancer Controller fails.

For more information, see VMware VM Affinity and VMware VM Affinity Setup.
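
The fallback described above (separate the Controller from all Service Engines when possible, otherwise only from the primary Service Engine) can be sketched as a simple selection function. This is an illustrative planning aid under the assumption that host and Service Engine placement are known in advance; all names are hypothetical.

```python
from typing import Dict, List


def eligible_controller_hosts(hosts: List[str],
                              se_placement: Dict[str, str],
                              primary_se: str) -> List[str]:
    """Pick hosts for the Controller's affinity rule.

    Prefer hosts that run no Service Engine at all. If none exist,
    fall back to any host that does not run the primary Service Engine.
    """
    se_hosts = set(se_placement.values())
    free = [h for h in hosts if h not in se_hosts]
    if free:
        return free
    primary_host = se_placement[primary_se]
    return [h for h in hosts if h != primary_host]


# Enough hosts: the Controller gets a host with no Service Engines.
print(eligible_controller_hosts(
    ["esx-01", "esx-02", "esx-03"],
    {"se-primary": "esx-01", "se-secondary": "esx-02"},
    "se-primary"))  # ['esx-03']

# Two hosts only: the Controller shares a host with the secondary.
print(eligible_controller_hosts(
    ["esx-01", "esx-02"],
    {"se-primary": "esx-01", "se-secondary": "esx-02"},
    "se-primary"))  # ['esx-02']
```

The second case relies on Distribute Load not being selected, so that a single Service Engine is primary for all virtual services.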

In conclusion, VMware’s native solutions can be relied upon to provide high availability for Avi Load Balancer Controller when a business decides that a single-node Controller suits it best.