Avi Load Balancer publishes minimum and recommended resource requirements for Service Engines (SEs). This section provides details on sizing. Consult your Avi Load Balancer sales engineer for recommendations tailored to your exact requirements.

The SEs can be configured with one vCPU core and 2 GB RAM, up to 64 vCPU cores and 256 GB RAM.

It is recommended that a Service Engine have at least 4 GB of memory when GeoDB is in use.

In write access mode, you can configure SE resources for newly created SEs within the SE Group properties.

In read access or no orchestrator mode, SE resources are allocated manually to the SE virtual machine when it is deployed.

Avi Load Balancer SE performance is determined by several factors, including hardware, SE scaling, and the ciphers and certificates used. Performance can be broken down into the following primary benchmark metrics:

Connections, Requests, and SSL Transactions per second (CPS/RPS/TPS) - Primarily gated by the available CPU.

Bulk throughput - Dependent on CPU, packets per second (PPS), and environment-specific limitations.

Concurrent connections - Dependent on SE memory.

This section illustrates the expected real-world performance and discusses how the SE uses compute and memory internally.

CPU

Avi Load Balancer supports x86 based processors, including those from AMD and Intel. Leveraging AMD’s and Intel’s processors with AES-NI and similar enhancements steadily enhances the performance of the Avi Load Balancer with each successive generation of the processor.

CPU is a primary factor in SSL handshakes (TPS), throughput, compression, and WAF inspection.

Performance increases linearly with CPU as long as neither the CPU usage limit nor an environment limit has been reached. CPU is the primary constraint for both transactions per second and bulk throughput.

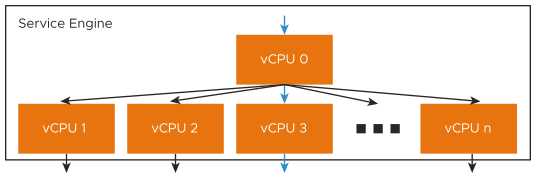

Increasing the CPU cores allocated to a Service Engine provides near-linear increases in SSL performance, because CPU is the primary constraint for both transactions per second and bulk throughput. A single SE can scale up to 36 vCPU cores. Within an SE, one or more CPU cores are given a dispatcher role. A dispatcher core interfaces with the NICs and distributes network flows across the other cores in the system, effectively load balancing the traffic among them. Each core is responsible for terminating TCP and SSL and for any other processing determined by the virtual service configuration. vCPU 0, shown in the diagram, acts as the dispatcher and can also handle some percentage of SSL traffic if it has available capacity. By load balancing internally across CPU cores, Avi Load Balancer can scale linearly as CPU capacity increases.
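The idea of a dispatcher spreading flows across cores can be illustrated with a generic hash-based sketch. This is not Avi Load Balancer's actual dispatcher algorithm, which this section does not describe; the function name `pick_core` and the choice of a CRC32 hash over the 5-tuple are assumptions made purely for illustration.

```python
import zlib


def pick_core(src_ip: str, src_port: int, dst_ip: str, dst_port: int,
              protocol: str, num_cores: int) -> int:
    """Map a flow's 5-tuple to a core index (hypothetical helper).

    Hashing the 5-tuple keeps every packet of a given flow on the same
    core while spreading different flows across all cores.
    """
    if num_cores < 1:
        raise ValueError("num_cores must be at least 1")
    key = f"{src_ip}:{src_port}-{dst_ip}:{dst_port}-{protocol}".encode()
    # CRC32 is an illustrative choice; any stable hash would work here.
    return zlib.crc32(key) % num_cores
```

Calling `pick_core` twice with the same 5-tuple always returns the same core index, which is the property that lets a single core own the TCP and SSL state for a connection.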

If the SE VM’s virtualized CPU does not get a sufficient share of the physical CPU on the host, or is interrupted, the SE can crash with a kernel panic. This happens only in virtualized environments and was observed internally on 2-core Service Engines. To mitigate this behavior, enable CPU reservation in the SE group properties. The configuration is as follows:

    [admin:Avi-Controller]: > show serviceenginegroup Default-Group | grep cpu_reserve
    | cpu_reserve | True |
    [admin:Avi-Controller]: >

Memory

Memory allocated to the SE primarily impacts concurrent connections and HTTP caching. Doubling the memory doubles the ability of the SE to perform these tasks. The default is 2 GB of memory, reserved within the hypervisor for VMware clouds. See SE Memory Consumption for a detailed description of expected concurrent connections. Generally, SSL connections consume about twice as much memory as HTTP layer 7 connections and four times as much memory as layer 4 connections with TCP proxy.
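The ratios above can be turned into a rough relative comparison. The following is a minimal sketch, not an Avi Load Balancer tool: the function name, the normalized cost units, and the 2 GB baseline are assumptions for illustration, and it takes the linear memory scaling stated above at face value.

```python
# Relative per-connection memory cost, normalized so that a layer 4
# TCP-proxy connection costs 1 unit. The ratios come from the text:
# SSL is about 2x HTTP layer 7 and about 4x layer 4 with TCP proxy.
RELATIVE_COST = {"l4_tcp_proxy": 1.0, "http_l7": 2.0, "ssl": 4.0}


def relative_capacity(connection_type: str, memory_gb: float,
                      baseline_gb: float = 2.0) -> float:
    """Concurrent-connection capacity relative to L4 TCP proxy on a baseline SE.

    Assumes capacity scales linearly with memory (hypothetical model).
    """
    if memory_gb <= 0 or baseline_gb <= 0:
        raise ValueError("memory sizes must be positive")
    return (memory_gb / baseline_gb) / RELATIVE_COST[connection_type]
```

Under these assumptions, `relative_capacity("ssl", 4.0)` returns 0.5: a 4 GB SE terminating SSL holds about half as many connections as a 2 GB SE doing layer 4 TCP proxy.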

Starting with version 30.1.1, Avi Load Balancer requires a minimum of 2 GB of memory for Service Engines. Service Engines running an earlier version with less than 2 GB of memory must be resized to at least 2 GB before the upgrade.

NIC

NIC throughput on an SE can be a gating factor for bulk throughput and sometimes for SSL TPS. The throughput of an SE is highly dependent on the platform. For maximum performance on a single SE, it is recommended to use bare metal or Linux cloud deployments, using an Intel 10 Gb/s or greater NIC capable of supporting DPDK.

Disk

The SEs can store logs locally before they are sent to the Controllers for indexing. Increasing the disk size increases log retention on the SE. SSDs are preferred over hard drives, as they can write the log data faster.

The recommended minimum size for storage is ((2 * RAM) + 5 GB) or 15 GB, whichever is greater. 15 GB is the default for SEs deployed in VMware clouds.
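The storage rule above can be expressed as a one-line calculation. This is a minimal sketch; the function name `recommended_disk_gb` is an assumption for illustration and is not part of any Avi Load Balancer API.

```python
def recommended_disk_gb(ram_gb: float) -> float:
    """Return the recommended minimum SE disk size in GB.

    Implements the rule from the text: the greater of
    ((2 * RAM) + 5 GB) and 15 GB.
    """
    if ram_gb <= 0:
        raise ValueError("ram_gb must be positive")
    return max(2 * ram_gb + 5, 15)
```

For example, an SE with 2 GB of RAM gets the 15 GB floor (2 * 2 + 5 = 9, which is below 15), while an SE with 8 GB of RAM needs 21 GB.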

Disk Capacity for Logs

Avi Load Balancer computes the disk capacity it can use for logs based on the following parameters:

Total disk capacity of the SE.

Number of SE CPU cores.

The main memory (RAM) of the SE.

Maximum storage on the disk not allocated for logs on the SE (configurable through SE runtime properties).

Minimum storage allocated for logs irrespective of SE size.

You can calculate the capacity reserved for debug logs and client logs as follows:

Debug Logs capacity = (SE Total Disk * Maximum Storage not allocated for logs on SE)/ 100

Client Logs capacity = SE Total Disk - Debug Logs capacity

These values are then adjusted based on other parameters, such as the configured minimum storage allocated for logs and the RAM of the SE.
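The two formulas above can be sketched as follows. This covers only the base calculation: the further adjustments for minimum log storage and RAM are not specified here and are not modeled. The function and parameter names are assumptions for illustration, and the 20 percent value in the usage note is a hypothetical input, not a documented default.

```python
def log_capacities_gb(total_disk_gb: float, max_non_log_pct: float) -> tuple[float, float]:
    """Return (debug_logs_gb, client_logs_gb) before any adjustments.

    max_non_log_pct is the "maximum storage not allocated for logs"
    setting, expressed as a percentage of the total disk.
    """
    if total_disk_gb <= 0:
        raise ValueError("total_disk_gb must be positive")
    if not 0 <= max_non_log_pct <= 100:
        raise ValueError("max_non_log_pct must be between 0 and 100")
    debug_logs_gb = total_disk_gb * max_non_log_pct / 100
    client_logs_gb = total_disk_gb - debug_logs_gb
    return debug_logs_gb, client_logs_gb
```

With a 15 GB disk and a hypothetical setting of 20 percent, `log_capacities_gb(15, 20)` returns `(3.0, 12.0)`.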

PPS

Packets per second (PPS) is generally limited by the hypervisor. Limits differ for each hypervisor and version. On bare metal (no hypervisor), PPS limits depend on the type of NIC used and how Receive Side Scaling (RSS) is leveraged.

RPS (HTTP Requests Per Second)

RPS depends on the CPU or on the PPS limits. It reflects both the performance of the CPU and the maximum PPS that the SE can push.

SSL Transactions Per Second

In addition to the hardware factors outlined above, TPS is dependent on the negotiated settings of the SSL session between the client and Avi Load Balancer. The following are the points to consider for sizing based on SSL TPS:

Avi Load Balancer supports RSA and Elliptic Curve (EC) certificates. The type of certificate used, along with the cipher selected during negotiation, determines the CPU cost of establishing the session.

RSA 2k keys are computationally more expensive than EC keys. Avi Load Balancer recommends using EC with perfect forward secrecy (PFS), which provides the best performance and the best possible security.

RSA certificates can still be used as a backup for clients that do not support current industry standards. As Avi Load Balancer supports both an EC certificate and an RSA certificate on the same virtual service, you can gradually migrate to using EC certificates with minimal user experience impact. For more information, see EC versus RSA Certificate Priority.

Default SSL profiles of Avi Load Balancer prioritize EC over RSA and PFS over non-PFS.

For detailed information on the performance of RSA and EC certificates on different sizes of Service Engine, see the Avi Load Balancer Performance Datasheet topic in the VMware Avi Load Balancer Installation Guide.

EC using perfect forward secrecy (ECDHE) is about 15% more expensive than EC without PFS (ECDH).

SSL session reuse gives better SSL performance for real-world workloads.

To achieve greater TPS numbers, use faster processors, bare metal servers instead of virtual machines, more CPU cores, and scale across multiple Service Engines. Real-world performance will likely be higher than benchmark figures due to SSL session reuse.
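The relationship between cores, PFS cost, and TPS described in the points above can be approximated with a simple model. This is a rough sketch under stated assumptions: handshakes are CPU-bound, TPS scales linearly with cores, and a 15% higher per-handshake cost translates into TPS divided by 1.15. The function name and the per-core baseline are hypothetical; real figures should come from the performance datasheet.

```python
PFS_COST_FACTOR = 1.15  # ECDHE is about 15% more expensive than ECDH (from the text)


def estimate_tps(ecdh_tps_per_core: float, cores: int, use_pfs: bool = True) -> float:
    """Estimate EC handshake TPS for an SE (illustrative model only).

    ecdh_tps_per_core is a hypothetical measured baseline for EC
    without PFS on a single core.
    """
    if ecdh_tps_per_core <= 0 or cores < 1:
        raise ValueError("baseline must be positive and cores at least 1")
    tps = ecdh_tps_per_core * cores  # assumes linear scaling with cores
    return tps / PFS_COST_FACTOR if use_pfs else tps
```

The model ignores SSL session reuse, so it is a lower bound on what a real workload with reuse would see.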

Bulk Throughput

The maximum throughput for a virtual service depends on the CPU and the NIC or hypervisor.

Using multiple NICs for client and server traffic can reduce the possibility of congestion or NIC saturation. The maximum packets per second for virtualized environments vary dramatically and will be the same limit regardless of the traffic being SSL or unencrypted HTTP.

For throughput numbers, see the Performance Datasheet section in the Preparing for Installation topic in the VMware Avi Load Balancer Installation Guide. SSL throughput numbers are generated with the standard ciphers mentioned in the datasheet. Using more esoteric or expensive ciphers can reduce throughput. Conversely, less secure ciphers, such as RC4-MD5, provide better performance but are not recommended by security experts.

Generally, when SSL re-encryption is required, the TPS impact on the CPU of the SE is negligible, because the SE acts as the SSL client toward the back-end server, and most of the CPU cost of establishing a new SSL session falls on the server side of the handshake rather than the client side. For bulk throughput, however, the CPU impact on the SE doubles.

Concurrent Connections (also known as Open Connections)

While planning SE sizing, the impact of concurrent connections must not be overlooked. Published concurrent connection benchmark numbers are generally for layer 4 in pass-through or non-proxy mode.

In other words, they are many orders of magnitude greater than what can be achieved in the real world. As a preliminary but conservative estimate, allow 40 KB of memory per SSL-terminated connection. HTTP header buffering, caching, compression, and other features all play a role in the final number. For more information, including methods for optimizing for greater concurrency, see SE Memory Consumption.
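The 40 KB estimate above can be used for a first-pass concurrency calculation. This is a minimal sketch: the function name is hypothetical, 1 KB is taken as 1024 bytes, and `connection_memory_gb` is assumed to be the portion of SE memory actually available for connections, which is smaller than the total SE memory.

```python
def max_ssl_connections(connection_memory_gb: float,
                        per_connection_kb: float = 40.0) -> int:
    """Estimate concurrent SSL-terminated connections (illustrative only).

    Divides the memory available for connections by the conservative
    per-connection estimate from the text (40 KB by default).
    """
    if connection_memory_gb <= 0 or per_connection_kb <= 0:
        raise ValueError("inputs must be positive")
    total_bytes = connection_memory_gb * 1024 ** 3
    per_connection_bytes = per_connection_kb * 1024
    return int(total_bytes // per_connection_bytes)
```

For example, `max_ssl_connections(1)` returns 26214, so each gigabyte of connection memory supports roughly 26,000 SSL-terminated connections under this estimate.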

Scale-out capabilities across Service Engines

Avi Load Balancer can also scale traffic across multiple SEs. Scale-out allows linear scaling of workload. Scale-out is primarily useful when CPU, memory, or PPS becomes the limiting factor.

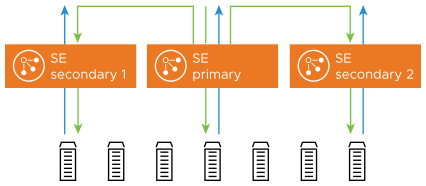

The native auto-scale feature of Avi Load Balancer (L2 scale-out) allows a virtual service to be scaled out across up to four SEs. Using ECMP scale-out (L3 scale-out), a virtual service can be scaled out to multiple SEs with linear scaling for workloads.

The following diagram shows L2 scale-out.

For more information on the scale-out feature, see Autoscale Service Engines.
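The scale-out limits described above can be applied in a quick capacity check. This is a minimal sketch assuming perfectly linear scaling across SEs; the function names and the load and capacity units are hypothetical, and per-SE capacity should be taken from the performance datasheet for the chosen SE size.

```python
import math

L2_MAX_SES = 4  # native (L2) scale-out limit stated in the text


def ses_needed(required_load: float, per_se_capacity: float) -> int:
    """Number of SEs needed for a load, assuming linear scale-out."""
    if required_load <= 0 or per_se_capacity <= 0:
        raise ValueError("inputs must be positive")
    return math.ceil(required_load / per_se_capacity)


def fits_l2_scaleout(required_load: float, per_se_capacity: float) -> bool:
    """True if native L2 scale-out is enough; otherwise ECMP (L3) is needed."""
    return ses_needed(required_load, per_se_capacity) <= L2_MAX_SES
```

For a virtual service that needs 10,000 units of load on SEs that each handle 3,000, `ses_needed` returns 4, which fits within L2 scale-out. At 13,000 units it returns 5, which would call for ECMP scale-out or larger SEs.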

Service Engine Performance Datasheet

For more information on the above limitations and on sizing the Service Engine based on your application's behavior and load requirements, see Performance Datasheet.

The following are the points to be considered while sizing for different environments:

vCenter and NSX-T cloud

CPU reservation (configurable through se-group properties) is recommended.

Configure RSS for VMware based on the load requirement.

Bare Metal and CSP (Linux Server Cloud) Deployment

A single SE can scale up to 36 cores in a Linux server cloud (LSC) deployment on bare metal and CSP.

PPS limits on different clouds depend on the hypervisor or NIC used and on how dispatcher cores and RSS are configured. For more information on recommended configuration and feature support, see Configuring TSO, GRO, and RSS.

SR-IOV and PCIe passthrough are used in some environments to bypass the PPS limitations and provide line-rate speeds. For more information on support for SR-IOV, see the System Requirements topic in the VMware Avi Load Balancer Installation Guide.

Sizing for public clouds must be decided based on the cloud limits and SE performance on different clouds for different virtual machine sizes.