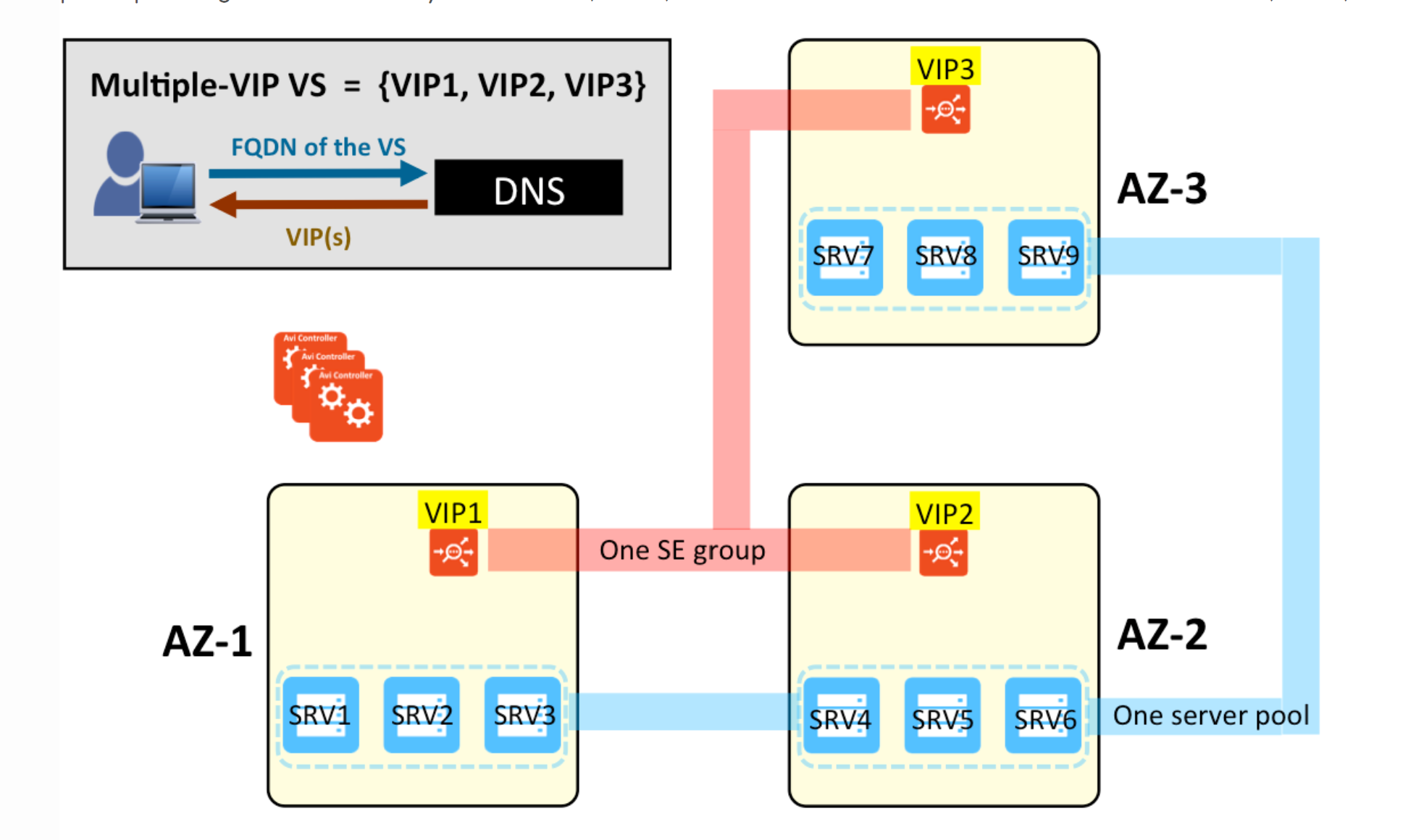

The Multi-AZ feature load-balances the back-end servers of an application (virtual service) that are spread across multiple AZs in AWS, with a VIP in each AZ. Without this feature, the user has to configure a separate virtual service in each AZ. Avi Load Balancer centrally configures the Multi-AZ VS and combines the analytics and logs from the multiple VIPs of the same VS into a single consolidated view of the application.

In the example diagram, the application's virtual service uses a single pool of nine servers (SRV1 to SRV9) spanning three availability zones (AZ-1, AZ-2, AZ-3). The servers are accessed through three VIPs (VIP1, VIP2, VIP3), one per AZ.

In a Multi-AZ deployment, Active/Active HA is not required for the VIP within each AZ. The deployment itself provides HA by placing SE instances in multiple AZs. In addition, Avi Load Balancer supports non-disruptive upgrades: the SE in each AZ is upgraded one at a time, so the VS stays up throughout the upgrade. Hence, the recommended HA mode is N+M with a buffer of 0.
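The sketch below is a simplified model, not Avi Load Balancer's actual upgrade logic, of why a buffer of 0 is acceptable. It assumes one SE per AZ serving that AZ's VIP and applies the rule described under Operational Status (the VS is up while any VIP is up). The names `Vip`, `vs_is_up`, and `rolling_upgrade` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Vip:
    name: str
    az: str
    up: bool = True  # True while the SE serving this VIP is in service


def vs_is_up(vips: List[Vip]) -> bool:
    # The VS is up as long as at least one of its VIPs is up.
    return any(v.up for v in vips)


def rolling_upgrade(vips: List[Vip]) -> List[bool]:
    """Upgrade the SE behind each VIP one AZ at a time and record
    whether the VS is still up while that SE is offline."""
    states = []
    for vip in vips:
        vip.up = False                 # SE in this AZ is being upgraded
        states.append(vs_is_up(vips))
        vip.up = True                  # upgrade finished, SE back in service
    return states


vips = [Vip("VIP1", "AZ-1"), Vip("VIP2", "AZ-2"), Vip("VIP3", "AZ-3")]
print(rolling_upgrade(vips))  # [True, True, True]
```

With only one VIP, the same loop returns `[False]`: the VS would go down while its only SE is upgraded, which is the outage the multi-AZ layout avoids.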

For more information on the control plane and the data plane, see HA for Avi Controllers and Creating Virtual Service.

DNS

For the Multi-AZ feature, the virtual service must be configured with an FQDN. Avi Load Balancer integrates with DNS as well as with Route 53 in AWS, and one of the two is a prerequisite for the Multi-AZ feature. In either case, all the VIPs of the VS are automatically populated in DNS. Configure DNS with a consistent hashing algorithm to minimize cross-AZ traffic, because AWS charges extra for inter-AZ traffic.
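As an illustration of consistent hashing applied to DNS answers, the following minimal sketch builds a generic hash ring over the VIPs. It assumes, for illustration only, that the hash key is the client IP address; it is not the algorithm Avi Load Balancer or Route 53 implements, and `HashRing` and `lookup` are hypothetical names. It shows the two properties that matter here: a given client keeps resolving to the same VIP, and when a VIP is withdrawn only the clients mapped to that VIP are moved.

```python
import bisect
import hashlib
from typing import List


def _hash(key: str) -> int:
    # Stable 64-bit hash; Python's built-in hash() is salted per process.
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, vips: List[str], replicas: int = 100):
        # Place several virtual points per VIP on the ring to even out the load.
        self._ring = sorted(
            (_hash(f"{vip}#{i}"), vip) for vip in vips for i in range(replicas)
        )
        self._keys = [h for h, _ in self._ring]

    def lookup(self, client_ip: str) -> str:
        # Walk clockwise to the first ring point at or after the client's
        # hash, wrapping around to the start of the ring if necessary.
        idx = bisect.bisect(self._keys, _hash(client_ip)) % len(self._keys)
        return self._ring[idx][1]


full = HashRing(["VIP1", "VIP2", "VIP3"])
reduced = HashRing(["VIP1", "VIP2"])  # VIP3 withdrawn from DNS
print(full.lookup("10.0.0.7"), reduced.lookup("10.0.0.7"))
```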

Server Selection

All of Avi Load Balancer's load-balancing algorithms are available for server selection. In the current release, however, traffic arriving on a VIP is not automatically localized to the pool servers in the same AZ.
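To put a number on the lack of AZ locality, the following sketch applies round robin (used here only as a representative algorithm) across the whole pool from the example diagram. The placement of three servers per AZ (SRV1 to SRV3 in AZ-1, and so on) is an assumption, and `POOL` and `cross_az_fraction` are hypothetical names. Under these assumptions, two thirds of the requests received on any VIP are forwarded to a server in another AZ.

```python
from fractions import Fraction
from itertools import cycle, islice

# Hypothetical layout: SRV1-SRV3 in AZ-1, SRV4-SRV6 in AZ-2, SRV7-SRV9 in AZ-3.
POOL = {f"SRV{i}": f"AZ-{(i - 1) // 3 + 1}" for i in range(1, 10)}


def cross_az_fraction(vip_az: str, pool: dict, requests: int) -> Fraction:
    """Round-robin over the whole pool (no AZ locality) and return the
    fraction of requests received on vip_az that go to another AZ."""
    picks = islice(cycle(sorted(pool)), requests)
    remote = sum(1 for srv in picks if pool[srv] != vip_az)
    return Fraction(remote, requests)


for az in ("AZ-1", "AZ-2", "AZ-3"):
    print(az, cross_az_fraction(az, POOL, 900))  # 2/3 for each AZ
```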

Operational Status

Each VIP generates an event when it goes UP or DOWN, and this event can be used to track the health of that VIP. Starting with release 17.1.2, when a VIP goes down, Avi Load Balancer automatically withdraws that VIP from DNS (whether DNS or Route 53). When the VIP comes back up, DNS is updated automatically as well.

The VS oper_status is derived from the oper_status of its VIPs:

| VS oper_status | Condition (VIP oper_status) |
|---|---|
| down | if no VIP is UP |
| up | if any of the VIPs are UP |
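The rules in the table, together with the DNS withdrawal behavior available from 17.1.2, can be sketched as two small functions. This is an illustrative model only: `Status`, `vs_oper_status`, and `dns_records` are hypothetical names, and the VIP addresses are made up.

```python
from enum import Enum
from typing import Dict, List


class Status(Enum):
    UP = "up"
    DOWN = "down"


def vs_oper_status(vip_status: Dict[str, Status]) -> Status:
    # The VS is UP if any VIP is UP, and DOWN if no VIP is UP.
    if any(s is Status.UP for s in vip_status.values()):
        return Status.UP
    return Status.DOWN


def dns_records(vip_status: Dict[str, Status], vip_addrs: Dict[str, str]) -> List[str]:
    # A DOWN VIP is withdrawn from DNS, so only UP VIPs are published.
    return sorted(vip_addrs[v] for v, s in vip_status.items() if s is Status.UP)


addrs = {"VIP1": "10.0.1.10", "VIP2": "10.0.2.10", "VIP3": "10.0.3.10"}
status = {"VIP1": Status.UP, "VIP2": Status.DOWN, "VIP3": Status.UP}
print(vs_oper_status(status), dns_records(status, addrs))
```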

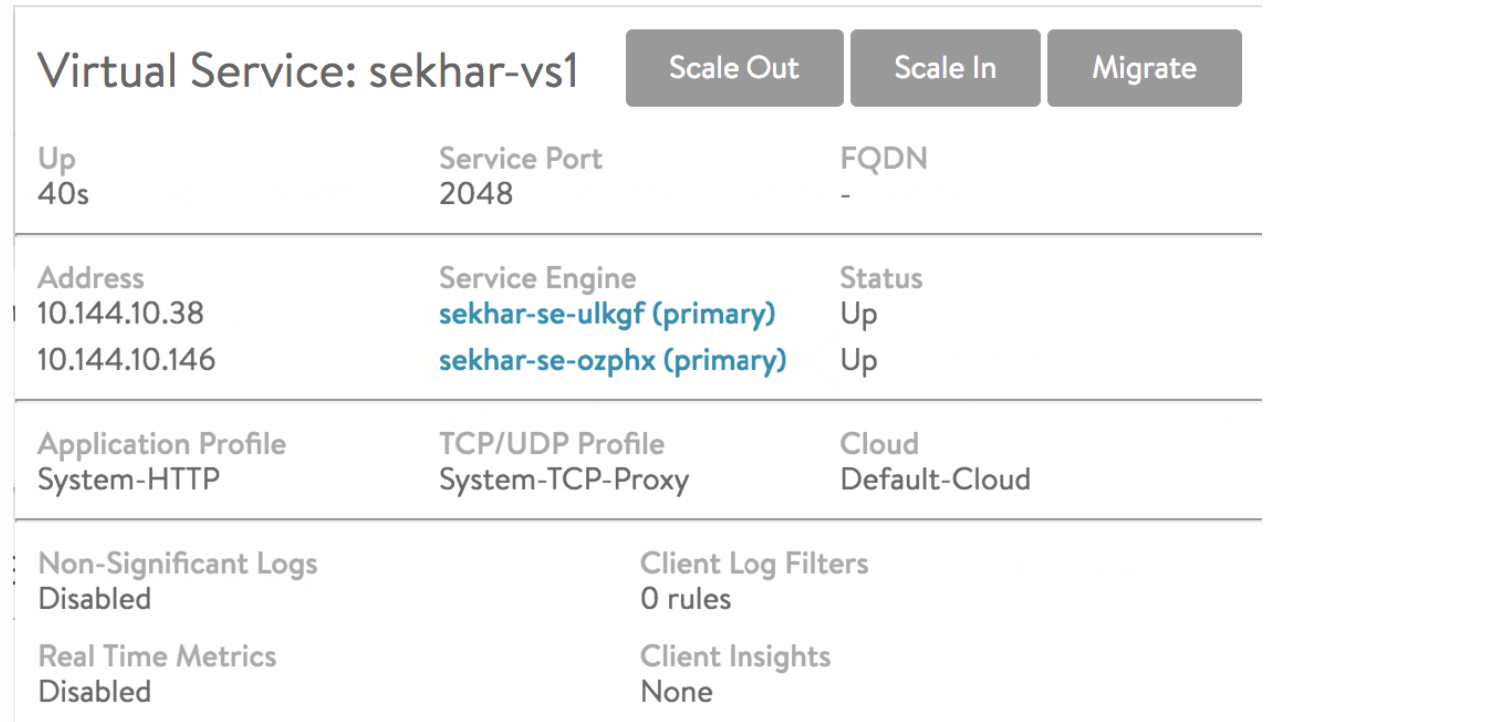

The virtual service list displays the IP addresses of the member VIPs.

Virtual service health on a per-VIP basis can be viewed in the virtual service’s submenu, as shown below:

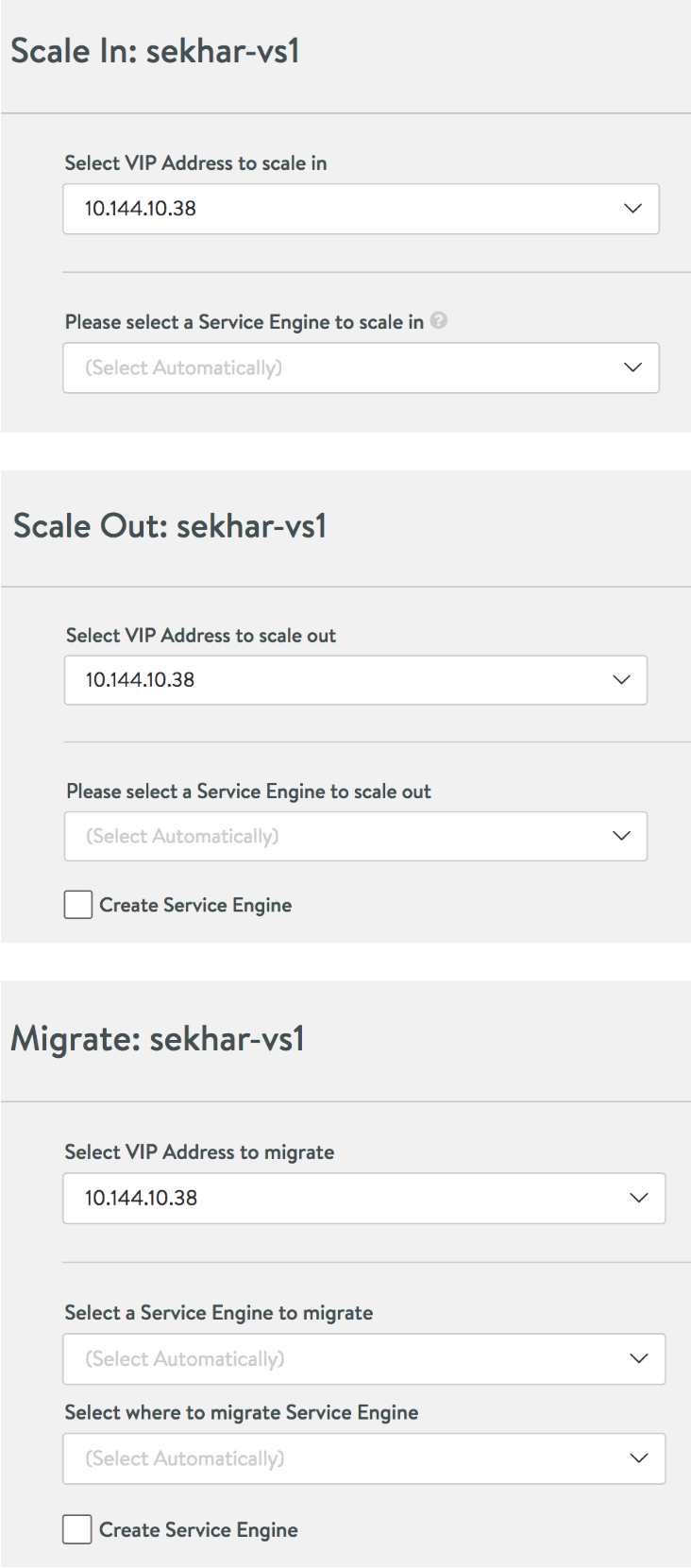

Clicking Scale Out, Scale In, or Migrate in this view opens a window with a drop-down menu for selecting the VIP to be scaled or migrated.

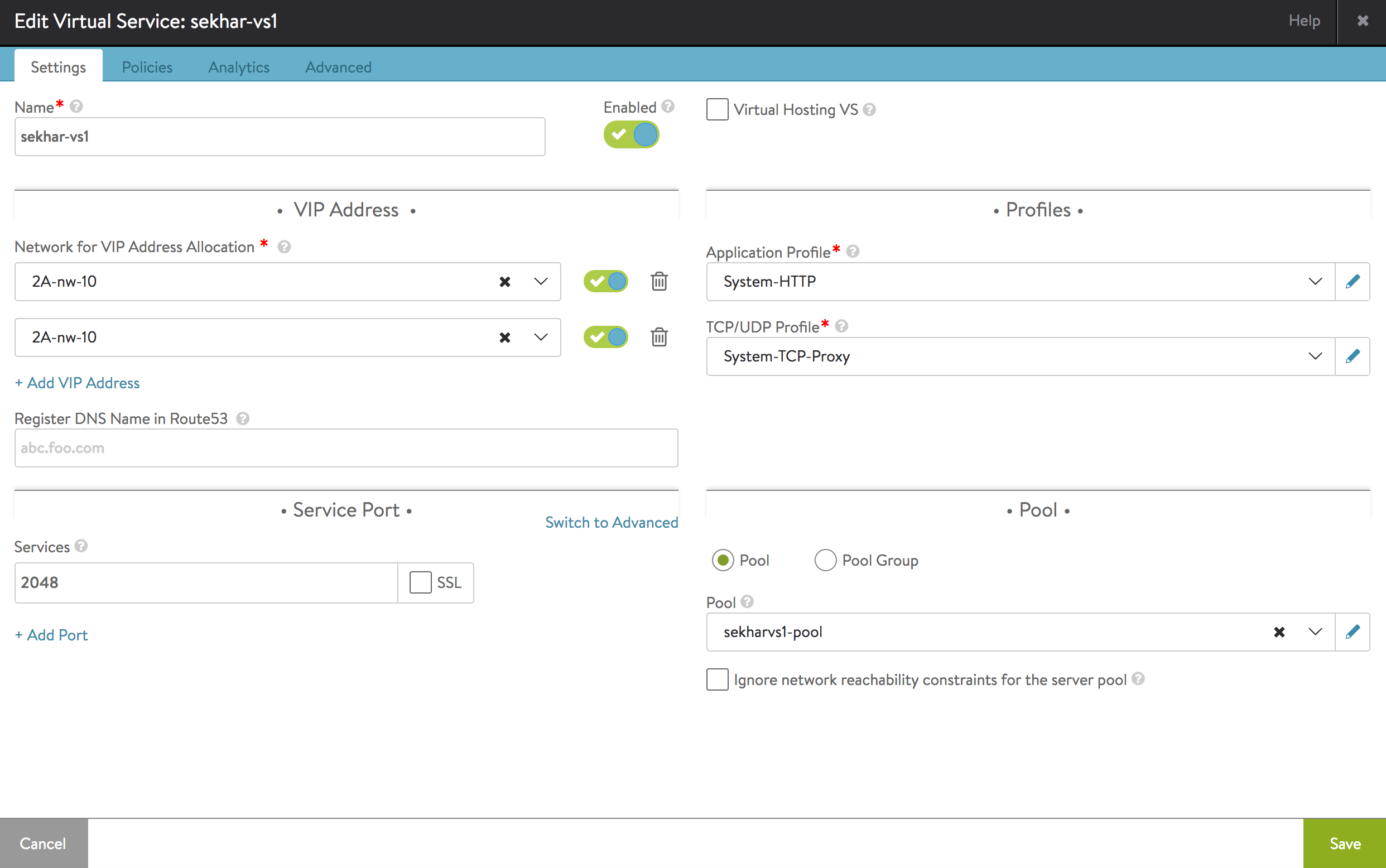

Creating a Multi-VIP VS in the UI

To use the Multi-AZ feature, specify more than one VIP in the VIP Address section of the Settings tab of the VS editor. In the example, two VIPs are configured by specifying the two networks from which their addresses are auto-allocated. In addition, the FQDN of the VS must be registered with Route 53.