The pyVmomi VMware automation library is available on the Avi Load Balancer Controller for use by ControlScripts.

One common use of such automation is for back-end, pool-server autoscaling. The steps outlined in this topic enable automatic scale in and scale out of pool servers, based on an autoscaling policy. For more details on pyVmomi, see Python SDK for the VMware vSphere Management API.

Configuring Pool-Server Autoscaling in VMware Clouds

To configure autoscaling for a server pool, complete the following steps. All steps must be carried out in the same tenant as the pool.

Create ControlScripts

Define autoscaling alerts

Attach the autoscaling alerts to an autoscaling policy for the server pool

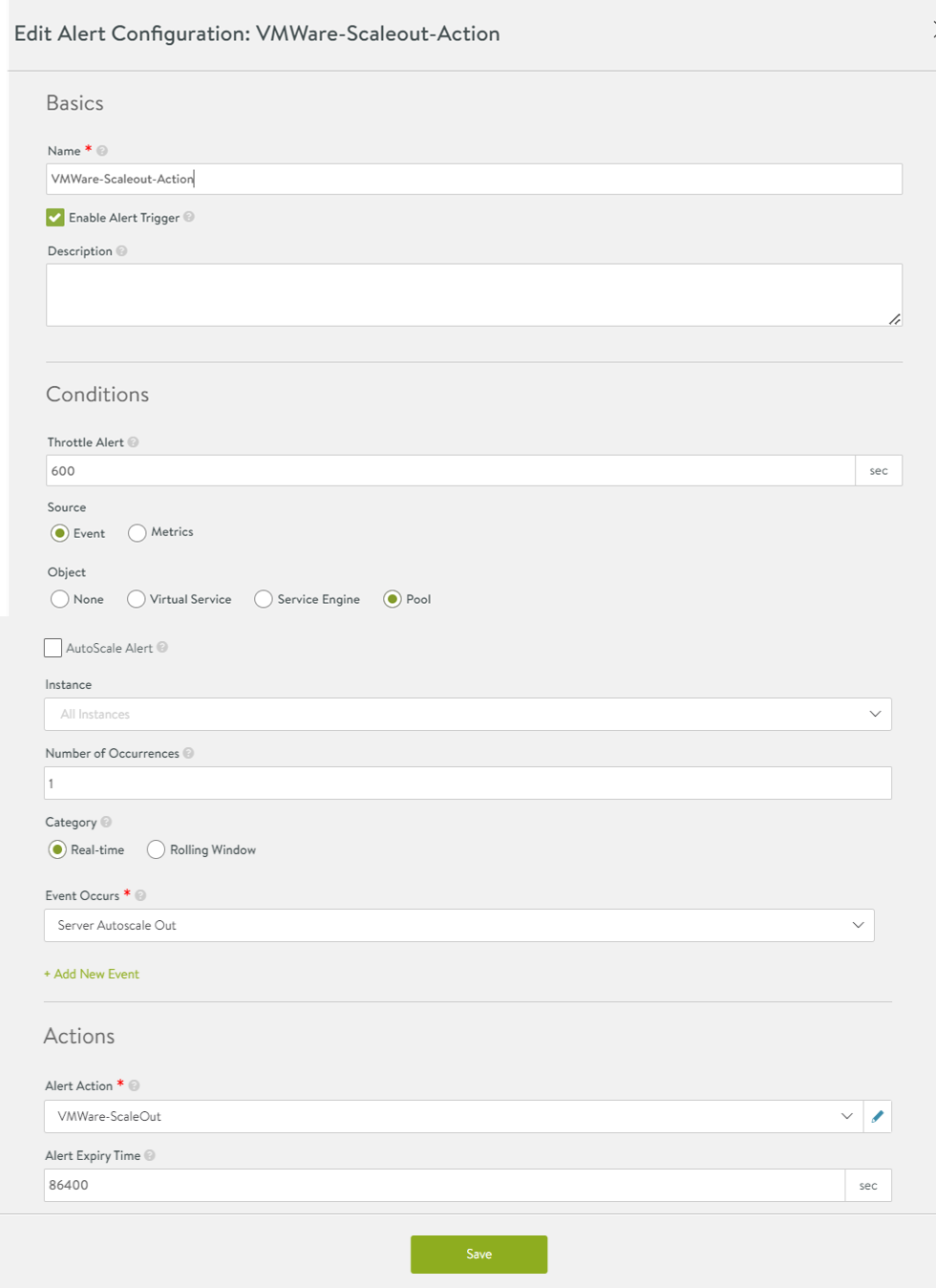

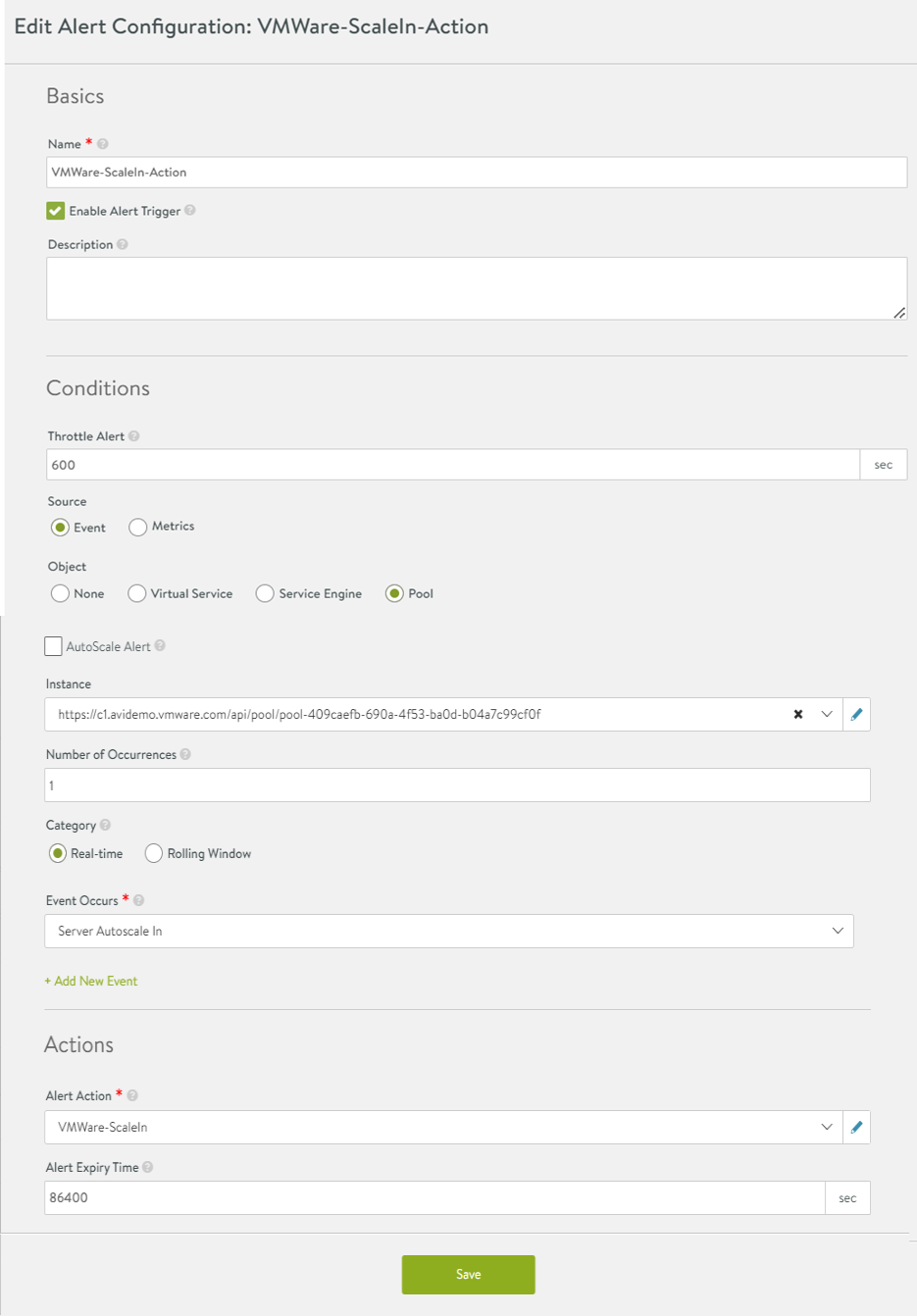

Create scale-out and scale-in alerts triggered on the Server Autoscale Out and Server Autoscale In events to run the relevant ControlScripts.

Create Python utility scripts (required only for Avi Load Balancer versions 21.1.4, 21.1.5, 22.1.1, and 22.1.2)

Step 1: Create ControlScripts

Name: Scaleout-Action

#!/usr/bin/python
import sys
from avi.vmware_ops.vmware_scale import scale_out

vmware_settings = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'cluster_name': 'Cluster1',
    'template_name': 'web-app-template',
    'template_folder_name': 'Datacenter1/WebApp/Template',
    'customization_spec_name': 'web-app-centos-dhcp',
    'vm_folder_name': 'Datacenter1/WebApp/Servers',
    'resource_pool_name': None,
    'port_group': 'WebApp'}

scale_out(vmware_settings, *sys.argv)

The vmware_settings parameters are as follows:

vcenter: IP address or hostname of vCenter.

user: Username for vCenter access.

password: Password for vCenter access.

cluster_name: vCenter cluster name.

template_folder_name: Folder containing template VM, for instance, Datacenter1/Folder1/Subfolder1 or None to search all (it is preferred to specify a folder if there are a large number of VMs).

template_name: Name of template VM.

vm_folder_name: Folder name to place new VM (or None for same as template).

customization_spec_name: Name of a customization spec to use. DHCP must be used to allocate IP addresses to the newly created VMs.

resource_pool_name: Name of VMware Resource Pool or None for no specific resource pool (VM will be assigned to the default “hidden” resource pool).

port_group: Name of port group containing pool member IP (useful if the VM has multiple vNICs) or None to use the IP from the first vNIC.
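As a quick sanity check before saving the ControlScript, the settings dictionary can be validated. The following is a minimal sketch, not part of the Avi SDK: `check_settings`, `REQUIRED_KEYS`, and `OPTIONAL_KEYS` are hypothetical names, and the split between required and optional keys is inferred from the parameter descriptions above (the parameters that accept None are treated as optional).

```python
# Hypothetical helper, not part of the Avi SDK: checks a vmware_settings
# dictionary before it is passed to scale_out().

# Settings assumed to need a non-empty value for scale out.
REQUIRED_KEYS = ('vcenter', 'user', 'password', 'cluster_name',
                 'template_name', 'customization_spec_name')
# Settings that may be None, per the parameter descriptions.
OPTIONAL_KEYS = ('template_folder_name', 'vm_folder_name',
                 'resource_pool_name', 'port_group')


def check_settings(settings):
    """Return a list of problems found; an empty list means no problems."""
    problems = []
    for key in REQUIRED_KEYS:
        if not settings.get(key):
            problems.append('missing required setting: %s' % key)
    known = set(REQUIRED_KEYS) | set(OPTIONAL_KEYS)
    for key in sorted(set(settings) - known):
        problems.append('unknown setting (possible typo): %s' % key)
    return problems


example_settings = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'cluster_name': 'Cluster1',
    'template_name': 'web-app-template',
    'customization_spec_name': 'web-app-centos-dhcp',
    'resource_pool_name': None,
    'port_group': 'WebApp'}

print(check_settings(example_settings))
```

A misspelled key such as 'template_nam' would be reported twice: once as an unknown setting and once because 'template_name' is then missing.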

Name: Scalein-Action

#!/usr/bin/python
import sys
from avi.vmware_ops.vmware_scale import scale_in

vmware_settings = {
    'vcenter': '10.10.10.10',
    'user': 'root',
    'password': 'vmware',
    'vm_folder_name': 'Datacenter1/WebApp/Servers'}

scale_in(vmware_settings, *sys.argv)
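Both ControlScripts forward `sys.argv` to the scaling function. In the vmware_scale.py utility script listed later in this topic, the second argument is parsed as JSON alert details. The sketch below mirrors that parsing on a sample payload. The function name `servers_to_scale` and the sample values are hypothetical; the field names follow the utility script, and a real alert is likely to carry additional fields.

```python
import json


def servers_to_scale(alert_json, scale_type):
    """Return (pool_name, server_count) from an alert JSON string.

    scale_type is 'scaleout' or 'scalein', matching the field names
    read by the vmware_scale.py utility script.
    """
    alert_info = json.loads(alert_json)
    count = 0
    for event in alert_info.get('events', []):
        details = event.get('event_details') or {}
        info = details.get('server_auto%s_info' % scale_type)
        if info:
            count = info.get('num_%s_servers' % scale_type, 0)
    return alert_info.get('obj_name'), count


# Made-up payload for illustration only.
sample_alert = json.dumps({
    'obj_name': 'web-app-pool',
    'events': [
        {'event_details': {
            'server_autoscaleout_info': {'num_scaleout_servers': 2}}},
    ],
})

print(servers_to_scale(sample_alert, 'scaleout'))  # ('web-app-pool', 2)
```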

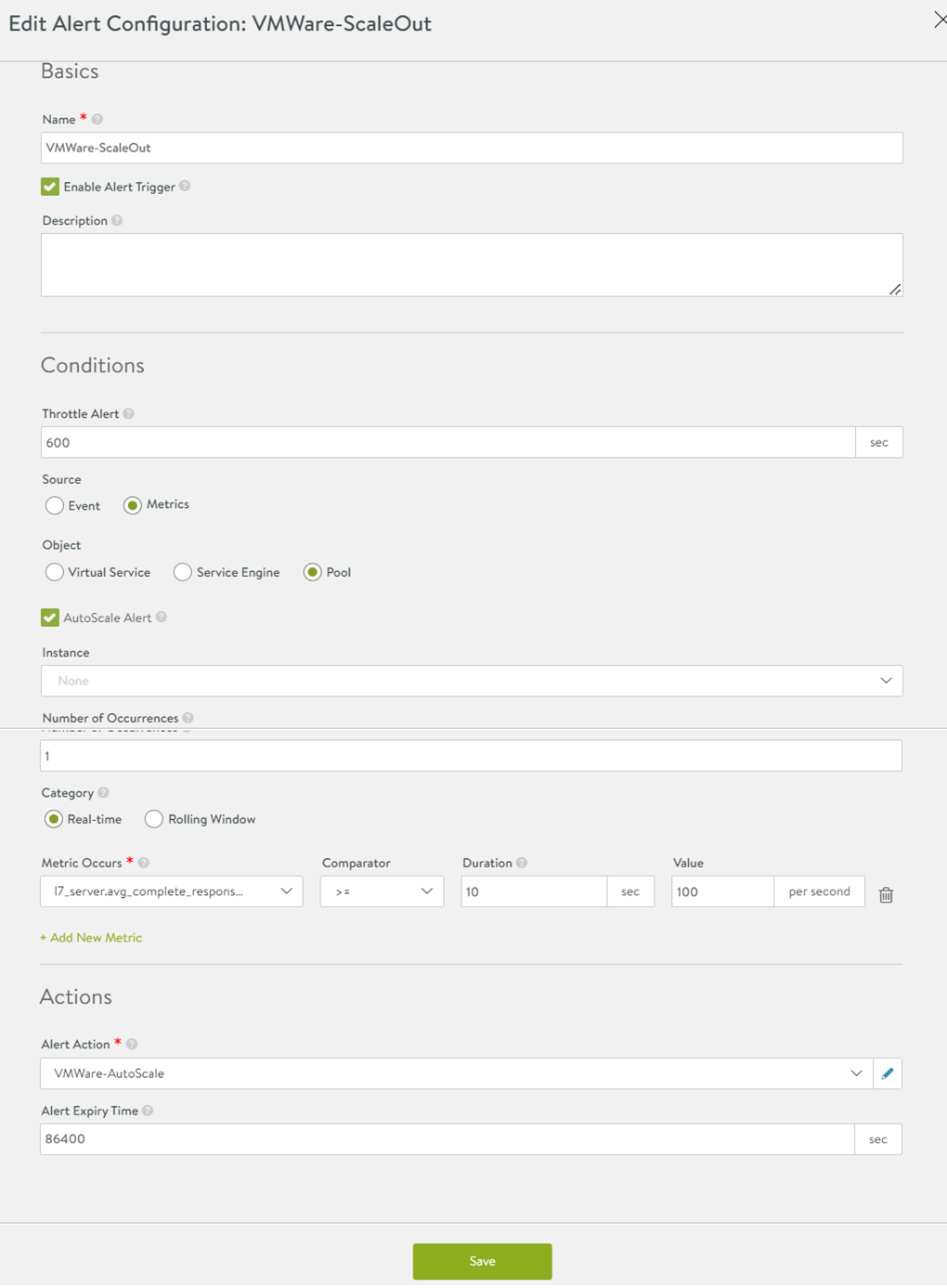

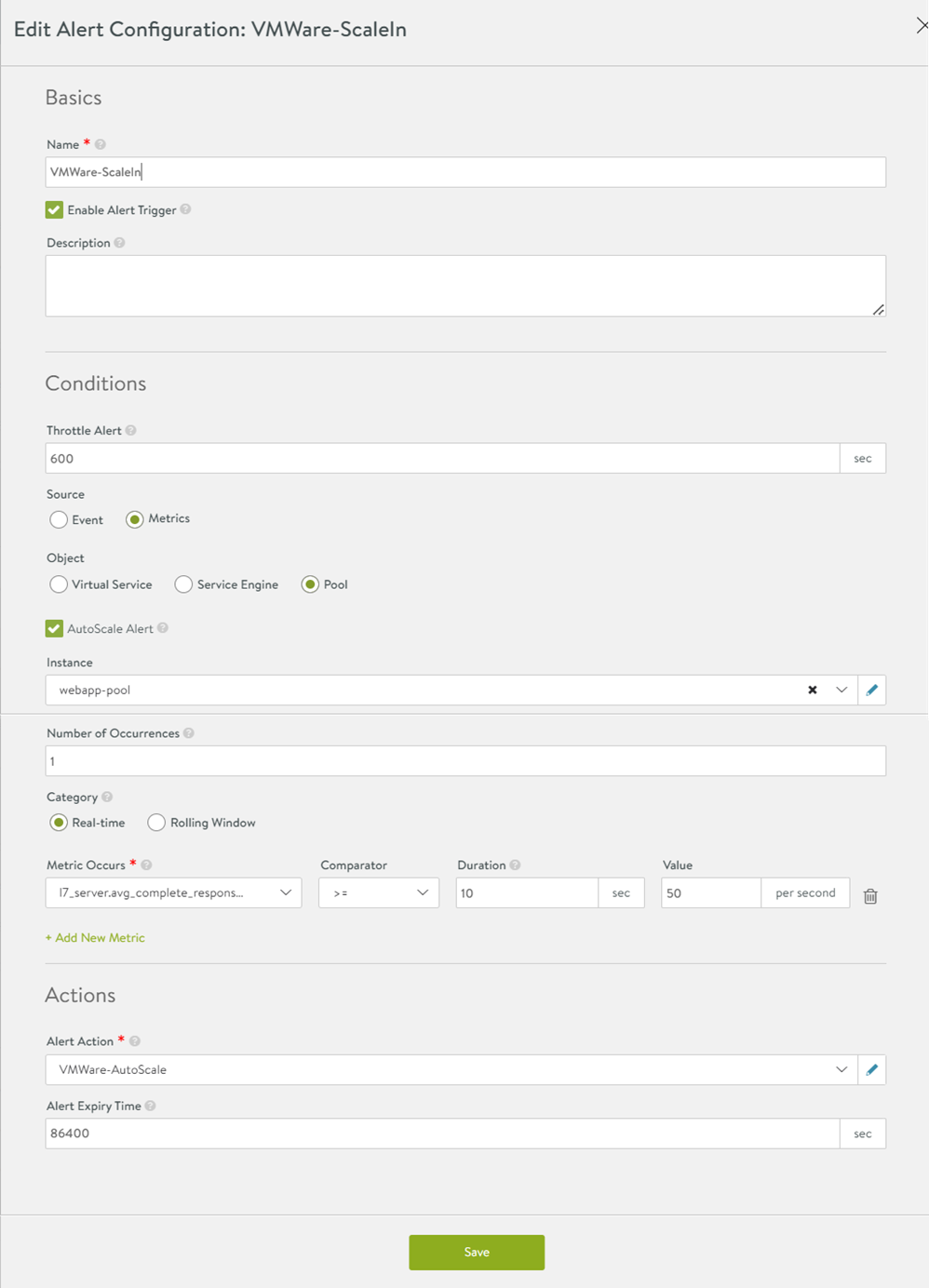

Step 2: Define Autoscaling Alerts

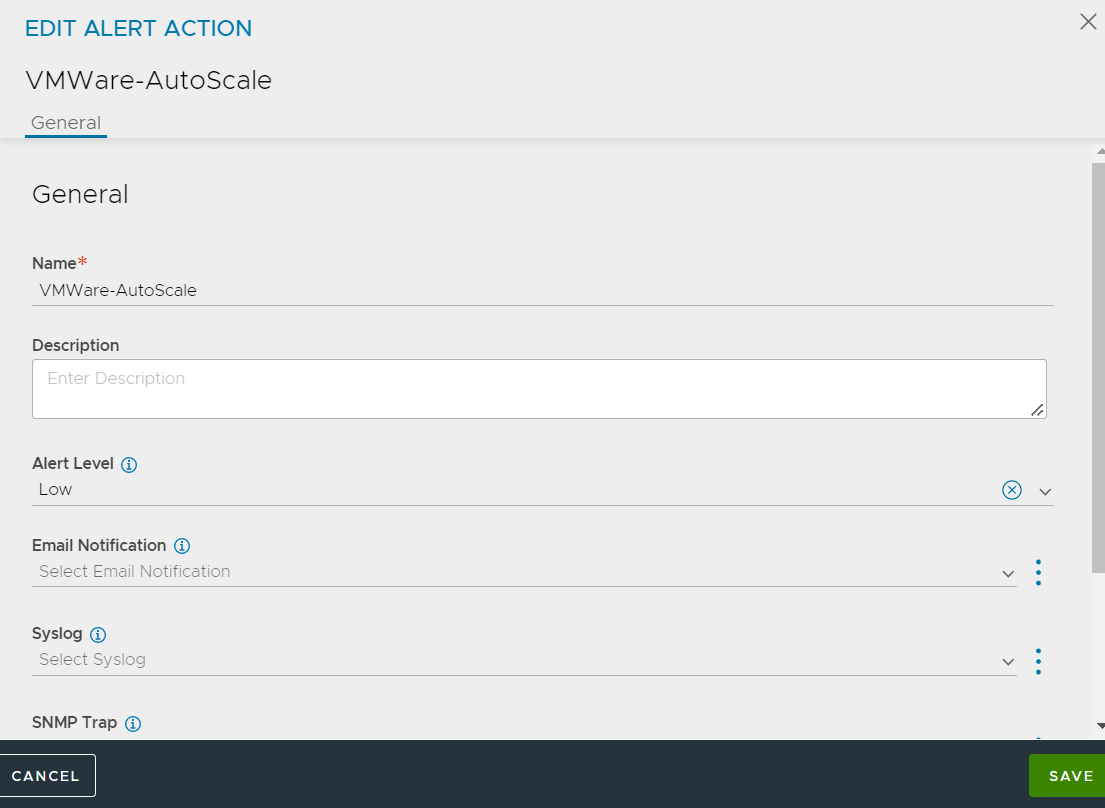

These alerts define the metric trigger thresholds for a server autoscaling decision to be made. They do not run the scale-in or scale-out ControlScripts directly. Instead, they are used in an autoscaling policy, along with the minimum and maximum number of servers and the number of servers to increment or decrement, to generate a Server Autoscale Out or Server Autoscale In event. For information on Autoscale events, see Step 3.

The action on these alerts must not run the above ControlScripts, but could be set to generate informational e-mails, syslogs, traps, and so on. For instance,

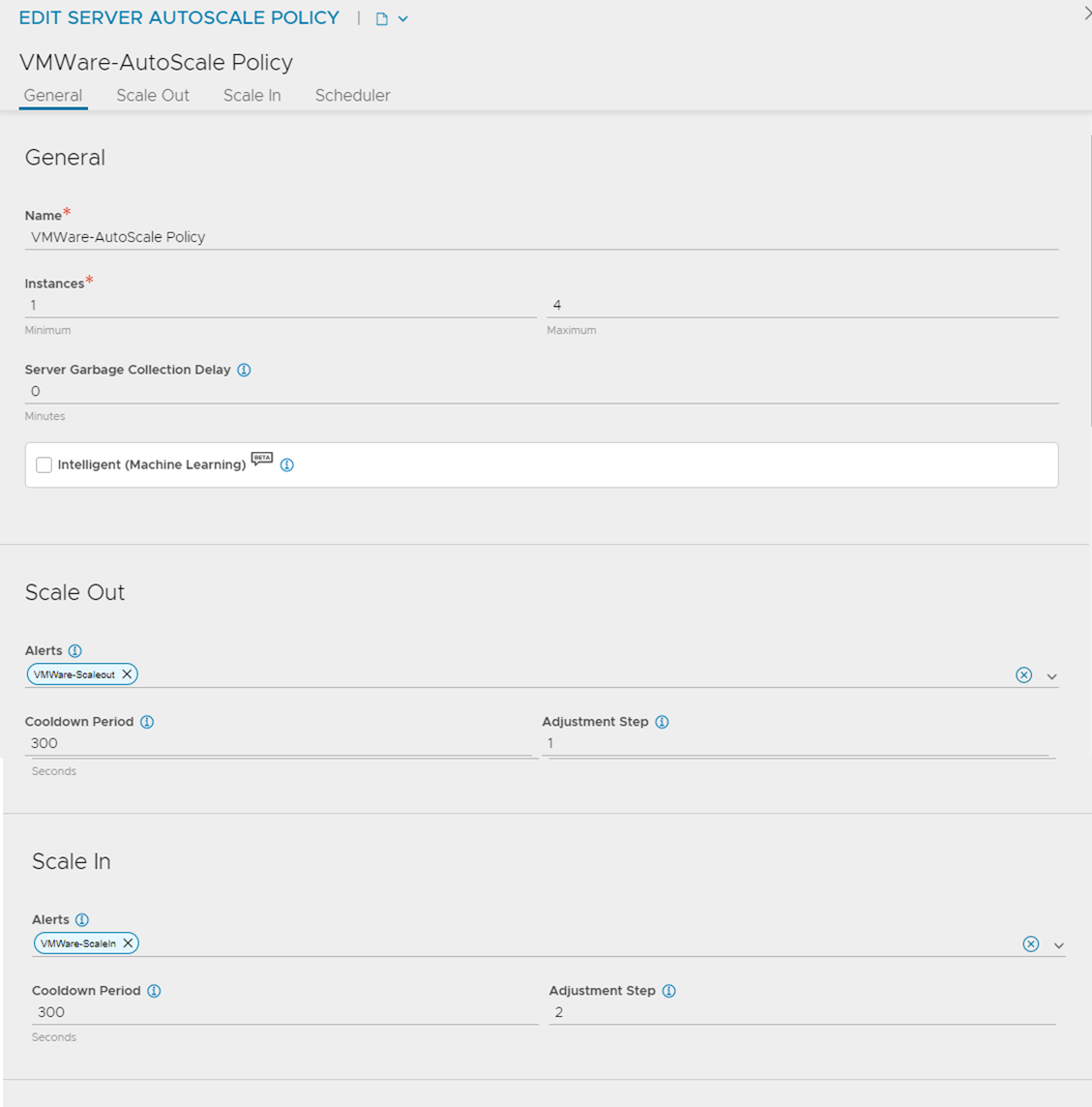
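To make the role of the policy parameters concrete, the following sketch shows one plausible way a pool size could be bounded by minimum and maximum values when stepping up or down. This is illustrative arithmetic only, under the assumption that the result is simply clamped to the configured range; it is not the Controller's actual decision logic, and `next_pool_size` and its parameter names are hypothetical.

```python
def next_pool_size(current, step, min_size, max_size):
    """Apply a scaling step and clamp the result to [min_size, max_size].

    Use a positive step for scale out and a negative step for scale in.
    """
    return max(min_size, min(max_size, current + step))


# Scale out by 2 from 4 servers, capped at a maximum of 5.
print(next_pool_size(4, 2, 2, 5))   # 5
# Scale in by 2 from 3 servers, floored at a minimum of 2.
print(next_pool_size(3, -2, 2, 5))  # 2
```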

Step 3: Attach Autoscaling Alerts to an Autoscaling Policy for the Server Pool

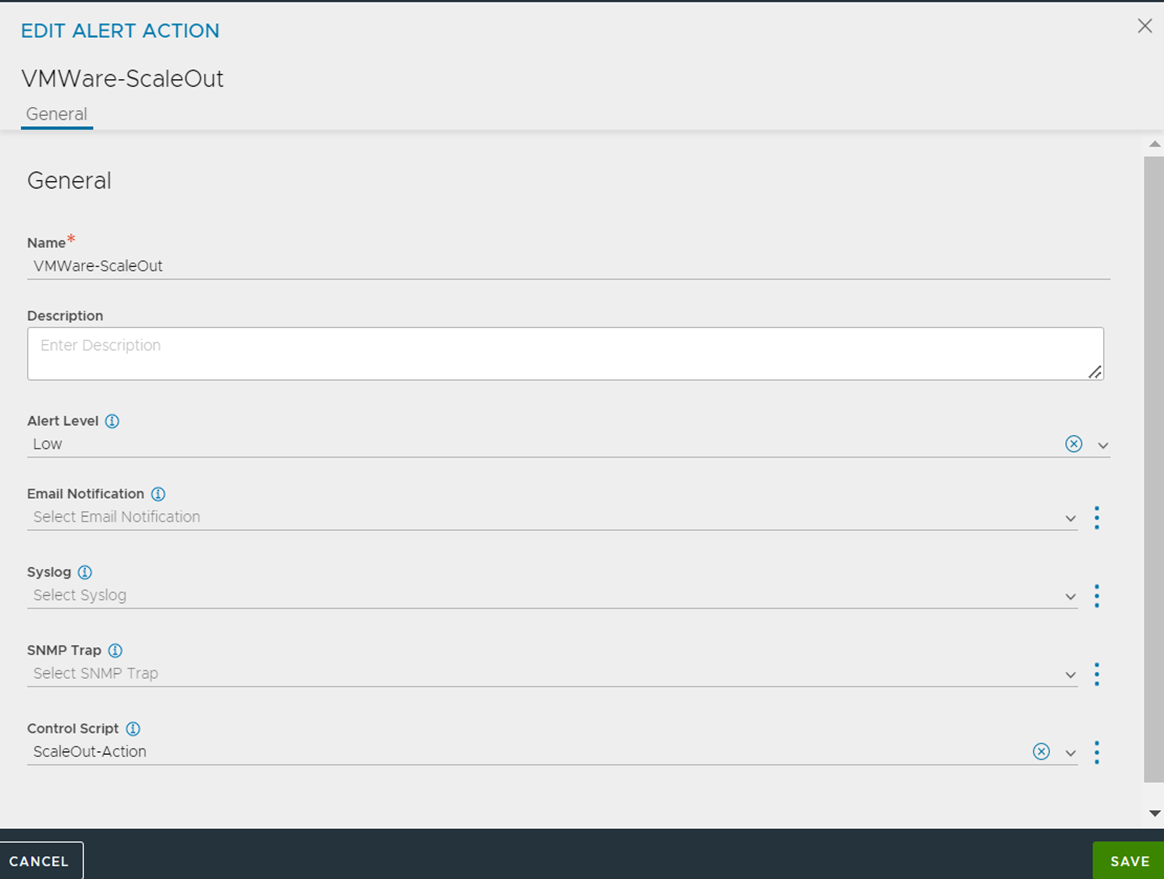

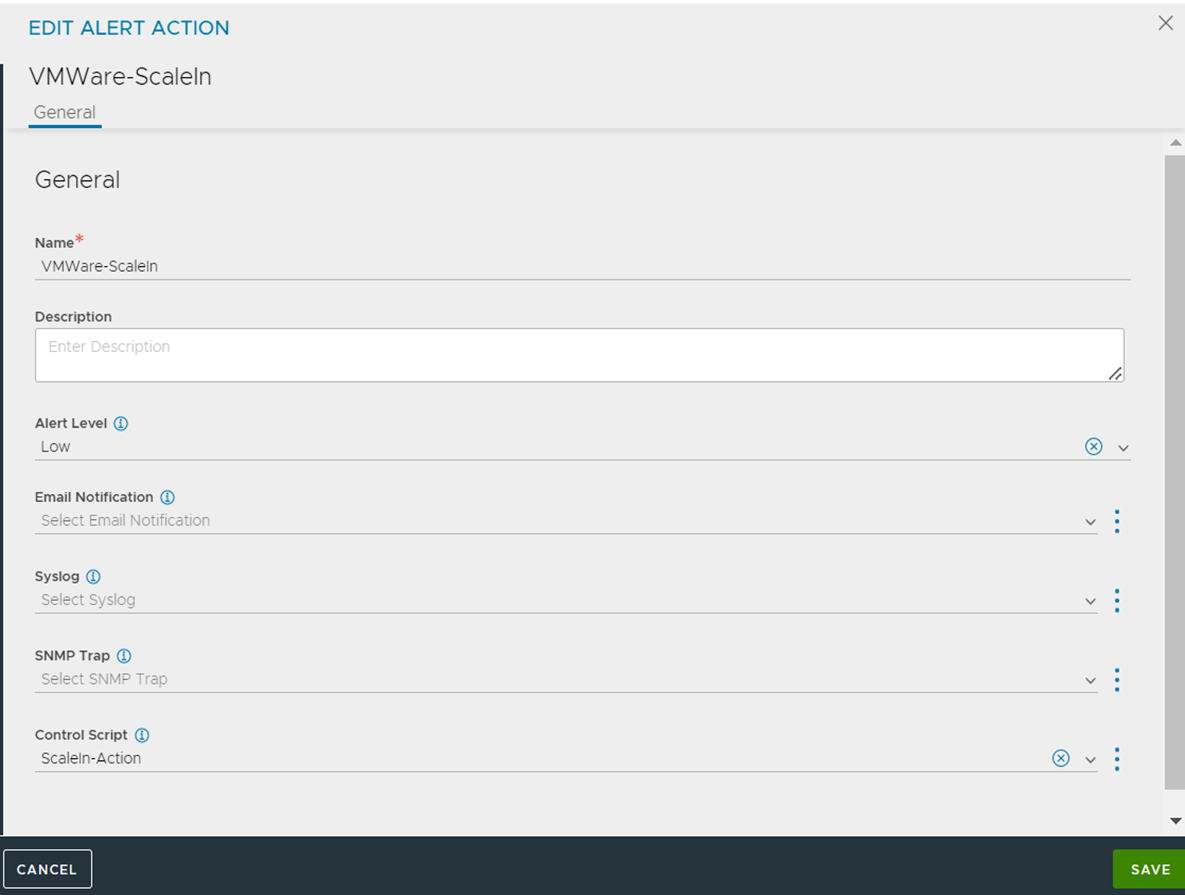

Step 4: Create Scale-out and Scale-in Alerts Triggered on Events to Run ControlScripts

Python Utility Scripts

The steps below are necessary only in Avi Load Balancer versions 21.1.4, 21.1.5, 22.1.1, and 22.1.2. In later versions, these scripts are available by default on the Controller and no additional user action is required.

- Step 1: SSH to the Controller bash shell using the “admin” account.

- Step 2: Enter the following commands to become root and switch to the ControlScript shared script location:

admin@controller:~$ sudo -s
[sudo] password for admin: <enter password>
root@rc-controller-1a:/home/admin# cd /opt/avi/python/lib/avi/scripts/csshared

- Step 3: Create a directory named ‘autoscaling’ as follows:

root@rc-controller-1a:/opt/avi/python/lib/avi/scripts/csshared# mkdir autoscaling

- Step 4: Change to this directory and create __init__.py as follows:

root@rc-controller-1a:/opt/avi/python/lib/avi/scripts/csshared# cd autoscaling
root@rc-controller-1a:/opt/avi/python/lib/avi/scripts/csshared/autoscaling# touch __init__.py

- Step 5: Create a file vmutils.py as follows:

root@rc-controller-1a:/opt/avi/python/lib/avi/scripts/csshared/autoscaling# vi vmutils.py

Press ‘A’ to enter insert mode, paste the below script, then press ESC and type ‘:wq!’ to save and exit vi.

############################################################################
# ========================================================================
# Copyright 2022 VMware, Inc. All rights reserved. VMware Confidential
# ========================================================================
###

from pyVmomi import vim  #pylint: disable=no-name-in-module
from pyVim.connect import SmartConnectNoSSL, Disconnect
import time


def _get_obj(content, vimtype, name, folder=None):
    """
    Get the vsphere object associated with a given text name
    """
    obj = None
    container = content.viewManager.CreateContainerView(
        folder or content.rootFolder, vimtype, True)
    for c in container.view:
        if c.name == name:
            obj = c
            break
    return obj


def _get_child_folder(parent_folder, folder_name):
    obj = None
    for folder in parent_folder.childEntity:
        if folder.name == folder_name:
            if isinstance(folder, vim.Datacenter):
                obj = folder.vmFolder
            elif isinstance(folder, vim.Folder):
                obj = folder
            else:
                obj = None
            break
    return obj


def get_folder(si, name):
    subfolders = name.split('/')
    parent_folder = si.RetrieveContent().rootFolder
    for subfolder in subfolders:
        parent_folder = _get_child_folder(parent_folder, subfolder)
        if not parent_folder:
            break
    return parent_folder


def get_vm_by_name(si, name, folder=None):
    """
    Find a virtual machine by its name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.VirtualMachine], name, folder)


def get_resource_pool(si, name, folder=None):
    """
    Find a resource pool by its name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.ResourcePool], name, folder)


def get_cluster(si, name, folder=None):
    """
    Find a cluster by it's name and return it
    """
    return _get_obj(si.RetrieveContent(), [vim.ComputeResource], name, folder)


def wait_for_task(task, timeout=300):
    """
    Wait for a task to complete
    """
    timeout_time = time.time() + timeout
    timedout = True
    while time.time() < timeout_time:
        if task.info.state == 'success':
            return (True, task.info.result)
        if task.info.state == 'error':
            return (False, None)
        time.sleep(1)
    return (None, None)


def wait_for_vm_status(vm, condition, timeout=300):
    timeout_time = time.time() + timeout
    timedout = True
    while timedout and time.time() < timeout_time:
        if (condition(vm)):
            timedout = False
        else:
            time.sleep(3)
    return not timedout


def net_info_available(vm):
    return (vm.runtime.powerState == vim.VirtualMachinePowerState.poweredOn and
            vm.guest.toolsStatus == vim.vm.GuestInfo.ToolsStatus.toolsOk and
            vm.guest.net)


class vcenter_session:
    def __enter__(self):
        return self.session

    def __init__(self, host, user, pwd):
        session = SmartConnectNoSSL(host=host, user=user, pwd=pwd)
        self.session = session

    def __exit__(self, type, value, traceback):
        if self.session:
            Disconnect(self.session)

- Step 6: Create a file vmware_scale.py as follows:

root@rc-controller-1a:/opt/avi/python/lib/avi/scripts/csshared/autoscaling# vi vmware_scale.py

Press ‘A’ to enter insert mode, paste the below script then press ESC and type ‘:wq!’ to save and exit vi.

############################################################################
# ========================================================================
# Copyright 2022 VMware, Inc. All rights reserved. VMware Confidential
# ========================================================================
###

from pyVmomi import vim
from avi.scripts.csshared.autoscaling import vmutils
import uuid
from avi.sdk.avi_api import ApiSession
import json
import os


def scaleout_params(scaleout_type, alert_info, api=None, tenant='admin'):
    pool_name = alert_info.get('obj_name')
    pool_obj = api.get_object_by_name('pool', pool_name, tenant=tenant)
    print('pool obj ', pool_obj)
    pool_uuid = pool_obj['uuid']
    num_autoscale = 0
    for events in alert_info.get('events', []):
        event_details = events.get('event_details')
        if not event_details:
            continue
        autoscale_str = 'server_auto%s_info' % scaleout_type
        autoscale_info = event_details.get(autoscale_str)
        if not autoscale_info:
            continue
        num_autoscale_field = 'num_%s_servers' % scaleout_type
        num_autoscale = autoscale_info.get(num_autoscale_field)
    return pool_name, pool_uuid, pool_obj, num_autoscale


def getAviApiSession(tenant='admin', api_version=None):
    """
    Create local session to avi controller
    """
    token = os.environ.get('API_TOKEN')
    user = os.environ.get('USER')
    tenant = os.environ.get('TENANT')
    api = ApiSession.get_session(
        os.environ.get('DOCKER_GATEWAY') or 'localhost',
        user, token=token, tenant=tenant, api_version=api_version)
    return api, tenant


def do_scale_in(vmware_settings, instance_names):
    """
    Perform scale in of pool.
    vmware_settings:
        vcenter: IP address or hostname of vCenter
        user: Username for vCenter access
        password: Password for vCenter access
        vm_folder_name: Folder containing VMs (or None for same as
            template)
    instance_names: Names of VMs to destroy
    """
    with vmutils.vcenter_session(host=vmware_settings['vcenter'],
                                 user=vmware_settings['user'],
                                 pwd=vmware_settings['password']) as session:
        vm_folder_name = vmware_settings.get('vm_folder_name', None)
        if vm_folder_name:
            vm_folder = vmutils.get_folder(session, vm_folder_name)
        else:
            vm_folder = None
        for instance_name in instance_names:
            vm = vmutils.get_vm_by_name(session, instance_name, vm_folder)
            if vm:
                print('Powering off VM %s...' % instance_name)
                power_off_task = vm.PowerOffVM_Task()
                (power_off_task_status,
                 power_off_task_result) = vmutils.wait_for_task(
                    power_off_task)
                if power_off_task_status:
                    print('Deleting VM %s...' % instance_name)
                    destroy_task = vm.Destroy_Task()
                    (destroy_task_status,
                     destroy_task_result) = vmutils.wait_for_task(
                        destroy_task)
                    if destroy_task_status:
                        print('VM %s deleted!' % instance_name)
                    else:
                        print('VM %s deletion failed!' % instance_name)
                else:
                    print('Unable to power off VM %s!' % instance_name)
            else:
                print('Unable to find VM %s!' % instance_name)


def do_scale_out(vmware_settings, pool_name, num_scaleout):
    """
    Perform scale out of pool.
    vmware_settings:
        vcenter: IP address or hostname of vCenter
        user: Username for vCenter access
        password: Password for vCenter access
        cluster_name: vCenter cluster name
        template_folder_name: Folder containing template VM, e.g.
            'Datacenter1/Folder1/Subfolder1' or None to search all
        template_name: Name of template VM
        vm_folder_name: Folder to place new VM (or None for same as
            template)
        customization_spec_name: Name of a customization spec to use
        resource_pool_name: Name of VMWare Resource Pool or None for default
        port_group: Name of port group containing pool member IP
    pool_name: Name of the pool
    num_scaleout: Number of new instances
    """
    new_instances = []
    with vmutils.vcenter_session(host=vmware_settings['vcenter'],
                                 user=vmware_settings['user'],
                                 pwd=vmware_settings['password']) as session:
        template_folder_name = vmware_settings.get('template_folder_name',
                                                   None)
        template_name = vmware_settings['template_name']
        if template_folder_name:
            template_folder = vmutils.get_folder(session,
                                                 template_folder_name)
            template_vm = vmutils.get_vm_by_name(
                session, template_name, template_folder)
        else:
            template_vm = vmutils.get_vm_by_name(
                session, template_name)
        vm_folder_name = vmware_settings.get('vm_folder_name', None)
        if vm_folder_name:
            vm_folder = vmutils.get_folder(session, vm_folder_name)
        else:
            vm_folder = template_vm.parent
        csm = session.RetrieveContent().customizationSpecManager
        customization_spec = csm.GetCustomizationSpec(
            name=vmware_settings['customization_spec_name'])
        cluster = vmutils.get_cluster(session,
                                      vmware_settings['cluster_name'])
        resource_pool_name = vmware_settings.get('resource_pool_name', None)
        if resource_pool_name:
            resource_pool = vmutils.get_resource_pool(session,
                                                      resource_pool_name)
        else:
            resource_pool = cluster.resourcePool
        relocate_spec = vim.vm.RelocateSpec(pool=resource_pool)
        clone_spec = vim.vm.CloneSpec(powerOn=True, template=False,
                                      location=relocate_spec,
                                      customization=customization_spec.spec)
        port_group = vmware_settings.get('port_group', None)
        clone_tasks = []
        for instance in range(num_scaleout):
            new_vm_name = '%s-%s' % (pool_name, str(uuid.uuid4()))
            print('Initiating clone of %s to %s' % (template_name,
                                                    new_vm_name))
            clone_task = template_vm.Clone(name=new_vm_name,
                                           folder=vm_folder,
                                           spec=clone_spec)
            print('Task %s created.' % clone_task.info.key)
            clone_tasks.append(clone_task)
        for clone_task in clone_tasks:
            print('Waiting for %s...' % clone_task.info.key)
            clone_task_status, clone_vm = vmutils.wait_for_task(clone_task,
                                                                timeout=600)
            ip_address = None
            if clone_vm:
                print('Waiting for VM %s to be ready...' % clone_vm.name)
                if vmutils.wait_for_vm_status(
                        clone_vm, condition=vmutils.net_info_available,
                        timeout=600):
                    print('Getting IP address from VM %s' % clone_vm.name)
                    for nic in clone_vm.guest.net:
                        if port_group is None or nic.network == port_group:
                            for ip in nic.ipAddress:
                                if '.' in ip:
                                    ip_address = ip
                                    break
                        if ip_address:
                            break
                else:
                    print('Timed out waiting for VM %s!' % clone_vm.name)
                if not ip_address:
                    print('Could not get IP for VM %s!' % clone_vm.name)
                    power_off_task = clone_vm.PowerOffVM_Task()
                    (power_off_task_status,
                     power_off_task_result) = vmutils.wait_for_task(
                        power_off_task)
                    if power_off_task_status:
                        destroy_task = clone_vm.Destroy_Task()
                else:
                    print('New VM %s with IP %s' % (clone_vm.name,
                                                    ip_address))
                    new_instances.append((clone_vm.name, ip_address))
            elif clone_task_status is None:
                print('Clone task %s timed out!' % clone_task.info.key)
    return new_instances


def scale_out(vmware_settings, *args):
    alert_info = json.loads(args[1])
    api, tenant = getAviApiSession()
    (pool_name, pool_uuid,
     pool_obj, num_scaleout) = scaleout_params('scaleout', alert_info,
                                               api=api, tenant=tenant)
    print('Scaling out pool %s by %d...' % (pool_name, num_scaleout))
    new_instances = do_scale_out(vmware_settings, pool_name, num_scaleout)
    # Get pool object again in case it has been modified
    pool_obj = api.get('pool/%s' % pool_uuid, tenant=tenant).json()
    new_servers = pool_obj.get('servers', [])
    for new_instance in new_instances:
        new_server = {
            'ip': {'addr': new_instance[1], 'type': 'V4'},
            'hostname': new_instance[0]}
        new_servers.append(new_server)
    pool_obj['servers'] = new_servers
    print('Updating pool with %s' % new_servers)
    resp = api.put('pool/%s' % pool_uuid, data=json.dumps(pool_obj))
    print('API status: %d' % resp.status_code)


def scale_in(vmware_settings, *args):
    alert_info = json.loads(args[1])
    api, tenant = getAviApiSession()
    (pool_name, pool_uuid,
     pool_obj, num_scaleout) = scaleout_params('scalein', alert_info,
                                               api=api, tenant=tenant)
    remove_instances = [instance['hostname'] for instance in
                        pool_obj['servers'][-num_scaleout:]]
    pool_obj['servers'] = pool_obj['servers'][:-num_scaleout]
    print('Scaling in pool %s by %d...' % (pool_name, num_scaleout))
    resp = api.put('pool/%s' % pool_uuid, data=json.dumps(pool_obj))
    print('API status: %d' % resp.status_code)
    do_scale_in(vmware_settings, remove_instances)
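The scale_in function in the script above removes servers from the end of the pool's server list using Python slices. One property of slices is worth keeping in mind when adapting the script: a count of 0 makes `servers[-0:]` select every server, not none. The sketch below illustrates the same selection with an explicit guard; `split_for_scale_in` is a hypothetical helper and is not part of the script above.

```python
def split_for_scale_in(servers, count):
    """Return (kept, removed), taking `count` servers from the end of the list.

    A count of zero or less removes nothing. Without this guard,
    servers[-0:] would select the entire list.
    """
    if count <= 0:
        return list(servers), []
    return servers[:-count], servers[-count:]


pool_servers = ['web-1', 'web-2', 'web-3']

print(split_for_scale_in(pool_servers, 1))  # (['web-1', 'web-2'], ['web-3'])
print(split_for_scale_in(pool_servers, 0))  # (['web-1', 'web-2', 'web-3'], [])
print(pool_servers[-0:])                    # ['web-1', 'web-2', 'web-3']
```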

The above steps must be performed separately on each of the three Controllers in a cluster.