This section explains the network topologies supported by Avi Load Balancer in GCP.

The following are the types of networks used:

- Frontend Data Network: This network connects the VIP to the SEs. All VIP traffic reaches the SEs through this network.

- Backend Data Network: This network connects the SEs to the application servers. All traffic between the SEs and the application servers flows through this network.

- Management Network: This network connects the SEs with the Controller for all management operations.

You can share VPC networks across projects in GCP. For more details on shared VPC, see the Shared VPC guide.

GCP does not allow a single virtual machine to have two NICs in the same VPC, even if the VPC has multiple subnets (see Creating instances with multiple network interfaces). Therefore, the data and management NICs must be in separate VPCs.

The GCP load balancer forwards traffic only to the first interface of the virtual machine.
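The two GCP constraints above (one NIC per VPC on a virtual machine, and load-balanced traffic reaching only the first interface) can be checked before any SE is deployed. The following Python sketch is a hypothetical pre-flight check, not part of Avi Load Balancer or any GCP SDK; the `Nic` type and its role labels are assumptions for illustration, and a VPC is identified by its project ID plus network name.

```python
from typing import List, NamedTuple


class Nic(NamedTuple):
    """One SE network interface, listed in the order it is attached to the VM (hypothetical type)."""
    role: str         # assumed labels: "data", "frontend", "backend", or "management"
    project_id: str   # project that owns the VPC network
    vpc_network: str  # VPC network name


def check_nic_layout(nics: List[Nic]) -> List[str]:
    """Return problems with a proposed SE NIC layout; an empty list means none were found."""
    problems = []
    owner = {}  # (project_id, vpc_network) -> role of the first NIC attached to that VPC
    for nic in nics:
        vpc = (nic.project_id, nic.vpc_network)
        if vpc in owner:
            # GCP rejects two NICs of one VM in the same VPC, even on different subnets.
            problems.append(f"{nic.role} NIC reuses the VPC of the {owner[vpc]} NIC: {vpc}")
        else:
            owner[vpc] = nic.role
    # The GCP load balancer forwards only to the first interface, so it must carry data traffic.
    if nics and nics[0].role == "management":
        problems.append("first NIC is the management NIC; load-balanced traffic would miss the data path")
    return problems


if __name__ == "__main__":
    layout = [
        Nic("frontend", "network-project", "frontend-vpc"),
        Nic("management", "network-project", "management-vpc"),
        Nic("backend", "network-project", "frontend-vpc"),  # same VPC as the first NIC
    ]
    for problem in check_nic_layout(layout):
        print(problem)
```

The same network name in two different projects counts as two distinct VPCs here, which is why the check keys on the pair rather than on the name alone.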

Network Configuration Modes

The following are the types of network configuration modes:

- Inband Management

- One-Arm with Dedicated Management

- Two-Arm Mode with Dedicated Management

Inband Management

The following are the features of inband management:

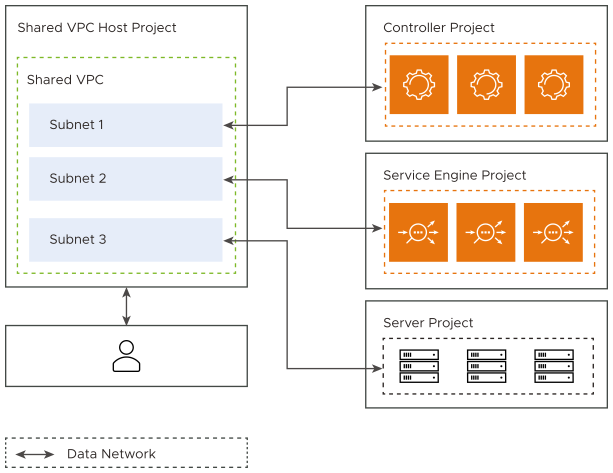

The SEs are connected to only one VPC subnet.

There is no network isolation between data (front-end and back-end) and management traffic, as both go through the same VPC subnet.

The SE subnet VPC can be a shared VPC, which provides the flexibility of having Controllers, SEs, and servers in different projects without the need for VPC peering.

Inband management requires at least one vCPU for each SE virtual machine.

The following is the diagrammatic representation of the inband management:

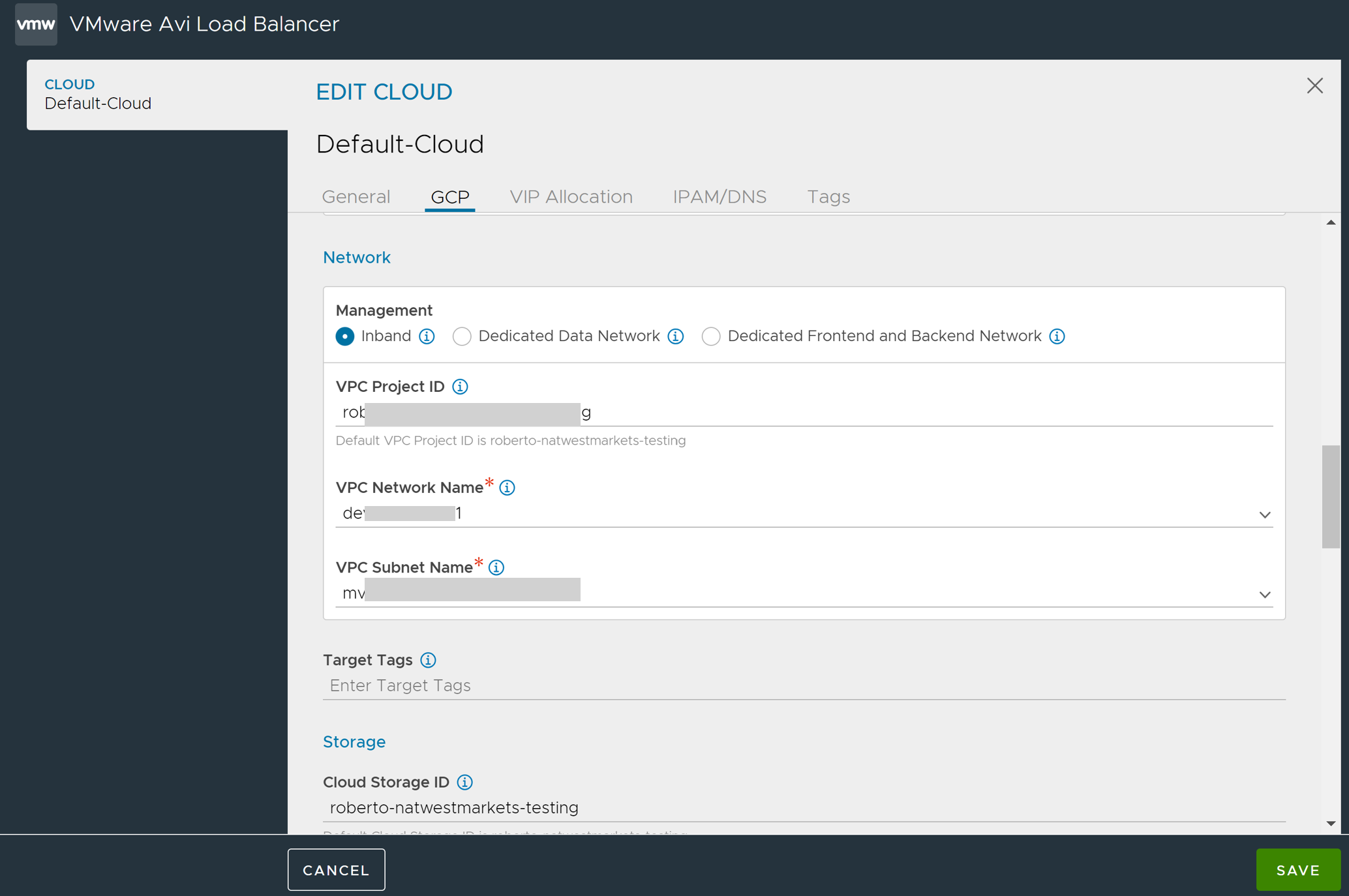

Configuring GCP Cloud with Inband Management using the UI

Navigate to .

Either edit an existing cloud or click CREATE > Google Cloud Platform to create a new cloud.

Configure the required fields under the General tab.

Under the Network tab, select Inband as the network configuration mode.

The Project ID of the Service Engine is selected by default as the VPC Project ID.

Select the Service Engine Network Name as the VPC Network Name.

Select the Service Engine subnet name as the VPC Subnet Name.

Click Save.

Configuring GCP Cloud with Inband Management using the CLI

Set the config field of network_config (under gcp_configuration) to inband_management. The following fields need to be set in the inband object:

- vpc_project_id: The VPC project ID of the network that needs to be attached to the SE virtual machine. By default, the SE project ID is used.
- vpc_network_name: The VPC name of the network that needs to be attached to the SE virtual machine.
- vpc_subnet_name: The VPC subnet name from which the SE interface IP is allocated.
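To make the vpc_project_id default concrete, the following Python sketch builds a dictionary that mirrors the nesting of the inband CLI object (network_config > inband). The function name and the dictionary shape are assumptions for illustration; this is not the documented Avi REST API schema.

```python
from typing import Optional


def inband_network_config(
    se_project_id: str,
    vpc_network_name: str,
    vpc_subnet_name: str,
    vpc_project_id: Optional[str] = None,
) -> dict:
    """Build a dict shaped like the CLI's network_config object in inband mode.

    Hypothetical helper: the nesting mirrors the CLI prompts, not a REST payload.
    """
    return {
        "config": "INBAND_MANAGEMENT",
        "inband": {
            # When no VPC project is given, fall back to the SE project ID (the documented default).
            "vpc_project_id": vpc_project_id or se_project_id,
            "vpc_network_name": vpc_network_name,
            "vpc_subnet_name": vpc_subnet_name,
        },
    }


# Values taken from the CLI example in this section.
print(inband_network_config("service-engine-project", "network-shared", "subnet-1", "network-project"))
```

Omitting the last argument makes the SE attach to a VPC in service-engine-project, which is the behavior the field description states.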

You can set the cloud credentials while configuring the cloud through the CLI, using the following command:

```
[admin:controller]: cloud:gcp_configuration> cloud_credentials_ref cross-project-creds
```

Example

```
[admin:controller]: > configure cloud gcp-cloud-inband
[admin:controller]: cloud> vtype cloud_gcp
[admin:controller]: cloud> gcp_configuration
[admin:controller]: cloud:gcp_configuration> se_project_id service-engine-project
[admin:controller]: cloud:gcp_configuration> region_name us-central1
[admin:controller]: cloud:gcp_configuration> zones us-central1-a
[admin:controller]: cloud:gcp_configuration> zones us-central1-b
[admin:controller]: cloud:gcp_configuration> network_config config inband_management
[admin:controller]: cloud:gcp_configuration:network_config> inband
[admin:controller]: cloud:gcp_configuration:network_config:inband> vpc_network_name network-shared
[admin:controller]: cloud:gcp_configuration:network_config:inband> vpc_project_id network-project
[admin:controller]: cloud:gcp_configuration:network_config:inband> vpc_subnet_name subnet-1
[admin:controller]: cloud:gcp_configuration:network_config:inband> save
[admin:controller]: cloud:gcp_configuration:network_config> save
[admin:controller]: cloud:gcp_configuration> save
[admin:controller]: cloud> save
+--------------------------------+--------------------------------------------+
| Field                          | Value                                      |
+--------------------------------+--------------------------------------------+
| uuid                           | cloud-ce377a7b-965f-4714-be1a-7b6c0e64c22f |
| name                           | gcp-cloud-inband                           |
| vtype                          | CLOUD_GCP                                  |
| apic_mode                      | False                                      |
| gcp_configuration              |                                            |
|   region_name                  | us-central1                                |
|   zones[1]                     | us-central1-a                              |
|   zones[2]                     | us-central1-b                              |
|   se_project_id                | service-engine-project                     |
|   network_config               |                                            |
|     config                     | INBAND_MANAGEMENT                          |
|     inband                     |                                            |
|       vpc_subnet_name          | subnet-1                                   |
|       vpc_project_id           | network-project                            |
|       vpc_network_name         | network-shared                             |
|   vip_allocation_strategy      |                                            |
|     mode                       | ROUTES                                     |
| dhcp_enabled                   | True                                       |
| mtu                            | 1500 bytes                                 |
| prefer_static_routes           | False                                      |
| enable_vip_static_routes       | False                                      |
| license_type                   | LIC_CORES                                  |
| state_based_dns_registration   | True                                       |
| ip6_autocfg_enabled            | False                                      |
| dns_resolution_on_se           | False                                      |
| enable_vip_on_all_interfaces   | False                                      |
| tenant_ref                     | admin                                      |
| license_tier                   | ENTERPRISE_18                              |
| autoscale_polling_interval     | 60 seconds                                 |
+--------------------------------+--------------------------------------------+
[admin:10-138-10-31]: >
```

One-Arm with Dedicated Management

The following are the features of one-arm with dedicated management:

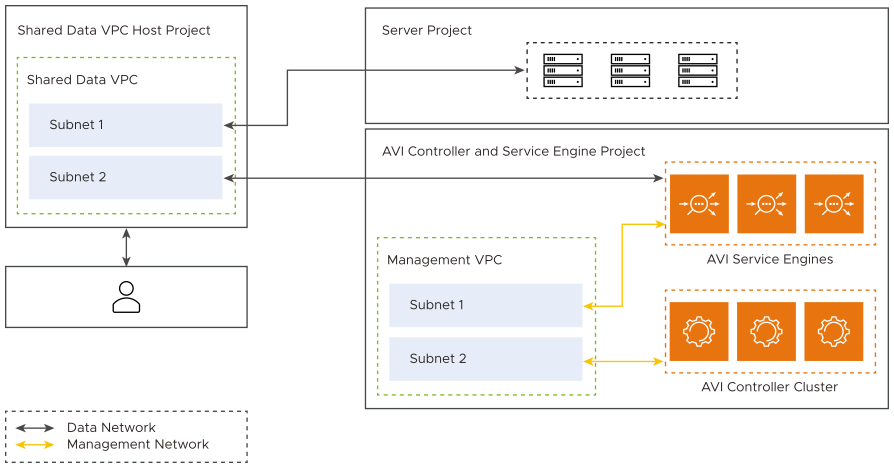

The SEs are connected to two VPC subnets: one for management traffic and one for data traffic.

Although data traffic is isolated from management traffic, front end data and back end data go through the same network.

The first interface of the SE is connected to the data network.

Shared VPC is supported on multiple NICs, so both the data NIC and the management NIC can be in a shared VPC.

If the SEs and the Controller are in different projects, the management VPC needs to be peered with the other project VPC.

Requires at least one vCPU for each SE virtual machine.

In earlier Avi Load Balancer versions, shared VPC was supported only on the first NIC, so only the data NIC could be in a shared VPC.

The following is the diagrammatic representation of one-arm with dedicated management:

Configuring GCP Cloud through CLI

Set the config field of network_config (under gcp_configuration) to one_arm_mode. The following fields need to be set in the one_arm object:

- data_vpc_project_id: The VPC project ID of the data network that needs to be attached to the SE virtual machine. By default, the SE project ID is used.
- data_vpc_network_name: The VPC network name of the data network that needs to be attached to the SE virtual machine.
- data_vpc_subnet_name: The VPC subnet name from which the SE data interface IP is allocated.
- management_vpc_network_name: The VPC network name of the management network that needs to be attached to the SE virtual machine.
- management_vpc_subnet_name: The VPC subnet name from which the SE management interface IP is allocated.
- management_vpc_project_id: The project ID of the SE management network. By default, the SE project ID is used.
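The following Python sketch assembles the one_arm fields listed above, applies the SE project ID default to both project fields, and rejects a layout that would put the data and management NICs in the same VPC (the GCP restriction described at the start of this section). The helper and its dictionary shape are hypothetical; they mirror the CLI object nesting, not a documented API.

```python
from typing import Optional


def one_arm_network_config(
    se_project_id: str,
    data_vpc_network_name: str,
    data_vpc_subnet_name: str,
    management_vpc_network_name: str,
    management_vpc_subnet_name: str,
    data_vpc_project_id: Optional[str] = None,
    management_vpc_project_id: Optional[str] = None,
) -> dict:
    """Build a dict shaped like the CLI's network_config object in one-arm mode (hypothetical helper)."""
    # Both project IDs default to the SE project ID.
    data_project = data_vpc_project_id or se_project_id
    mgmt_project = management_vpc_project_id or se_project_id
    # A VPC is identified by (project, network); GCP forbids two NICs of one VM in the same VPC.
    if (data_project, data_vpc_network_name) == (mgmt_project, management_vpc_network_name):
        raise ValueError("data and management NICs must be in different VPCs")
    return {
        "config": "ONE_ARM_MODE",
        "one_arm": {
            "data_vpc_project_id": data_project,
            "data_vpc_network_name": data_vpc_network_name,
            "data_vpc_subnet_name": data_vpc_subnet_name,
            "management_vpc_project_id": mgmt_project,
            "management_vpc_network_name": management_vpc_network_name,
            "management_vpc_subnet_name": management_vpc_subnet_name,
        },
    }


print(one_arm_network_config(
    "service-engine-project", "data-network", "data-subnet",
    "management-network", "management-subnet",
    data_vpc_project_id="data-network-project-id",
))
```

In this call, management_vpc_project_id is left out, so it resolves to service-engine-project.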

Example

```
[admin:controller]: > configure cloud gcp-cloud-onearm
[admin:controller]: cloud> vtype cloud_gcp
[admin:controller]: cloud> gcp_configuration
[admin:controller]: cloud:gcp_configuration> se_project_id service-engine-project
[admin:controller]: cloud:gcp_configuration> region_name us-central1
[admin:controller]: cloud:gcp_configuration> zones us-central1-a
[admin:controller]: cloud:gcp_configuration> zones us-central1-b
[admin:controller]: cloud:gcp_configuration> network_config config one_arm_mode
[admin:controller]: cloud:gcp_configuration:network_config> one_arm
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> data_vpc_project_id data-network-project-id
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> data_vpc_network_name data-network
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> data_vpc_subnet_name data-subnet
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> management_vpc_network_name management-network
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> management_vpc_subnet_name management-subnet
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> management_vpc_project_id management-vpc-project-id
[admin:controller]: cloud:gcp_configuration:network_config:one_arm> save
[admin:controller]: cloud:gcp_configuration:network_config> save
[admin:controller]: cloud:gcp_configuration> save
[admin:controller]: cloud> save
+------------------------------------+--------------------------------------------+
| Field                              | Value                                      |
+------------------------------------+--------------------------------------------+
| uuid                               | cloud-d9e4f50b-eab8-40a1-be1f-b6a90e597ac8 |
| name                               | gcp-cloud-onearm                           |
| vtype                              | CLOUD_GCP                                  |
| apic_mode                          | False                                      |
| gcp_configuration                  |                                            |
|   region_name                      | us-central1                                |
|   zones[1]                         | us-central1-a                              |
|   zones[2]                         | us-central1-b                              |
|   se_project_id                    | service-engine-project                     |
|   network_config                   |                                            |
|     config                         | ONE_ARM_MODE                               |
|     one_arm                        |                                            |
|       data_vpc_subnet_name         | data-subnet                                |
|       data_vpc_project_id          | data-network-project-id                    |
|       management_vpc_subnet_name   | management-subnet                          |
|       data_vpc_network_name        | data-network                               |
|       management_vpc_network_name  | management-network                         |
|       management_vpc_project_id    | management-vpc-project-id                  |
|   vip_allocation_strategy          |                                            |
|     mode                           | ROUTES                                     |
| dhcp_enabled                       | True                                       |
| mtu                                | 1500 bytes                                 |
| prefer_static_routes               | False                                      |
| enable_vip_static_routes           | False                                      |
| license_type                       | LIC_CORES                                  |
| state_based_dns_registration       | True                                       |
| ip6_autocfg_enabled                | False                                      |
| dns_resolution_on_se               | False                                      |
| enable_vip_on_all_interfaces       | False                                      |
| tenant_ref                         | admin                                      |
| license_tier                       | ENTERPRISE_18                              |
| autoscale_polling_interval         | 60 seconds                                 |
+------------------------------------+--------------------------------------------+
[admin:admin:controller]: >
```

Two-Arm Mode with Dedicated Management

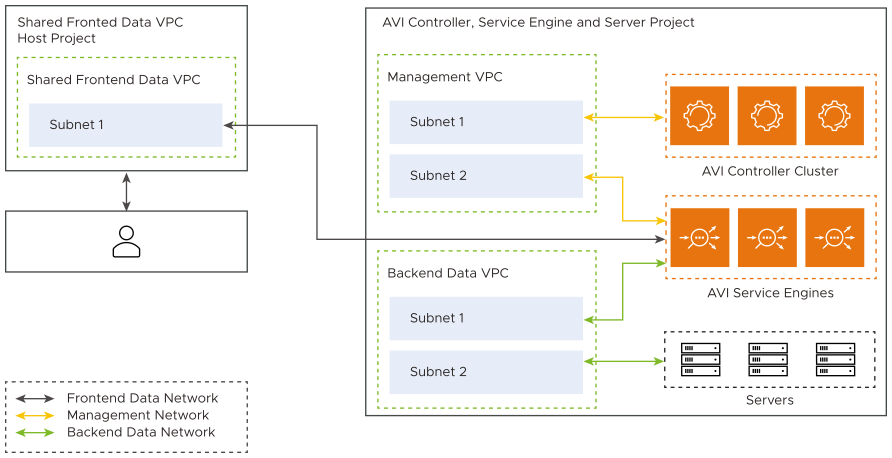

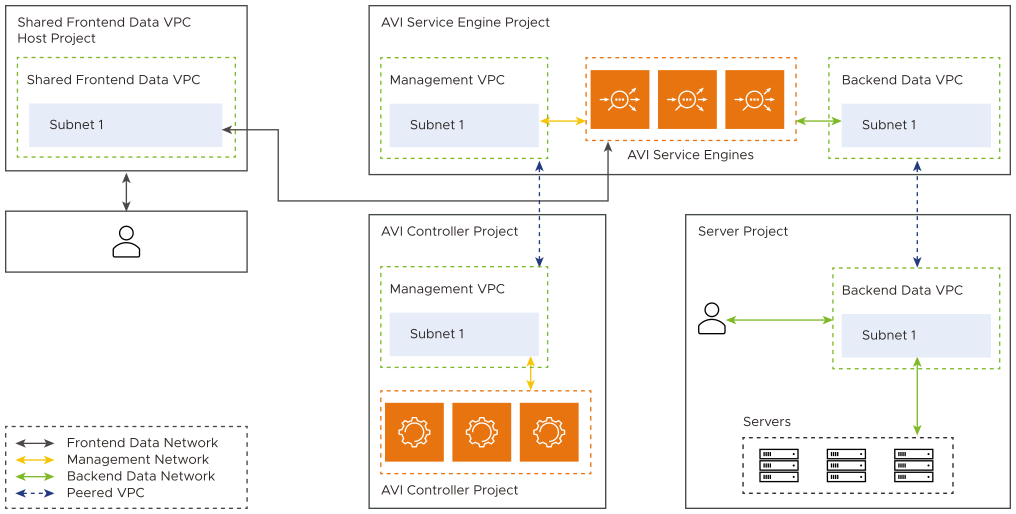

The following are the features of the two-arm mode with dedicated management:

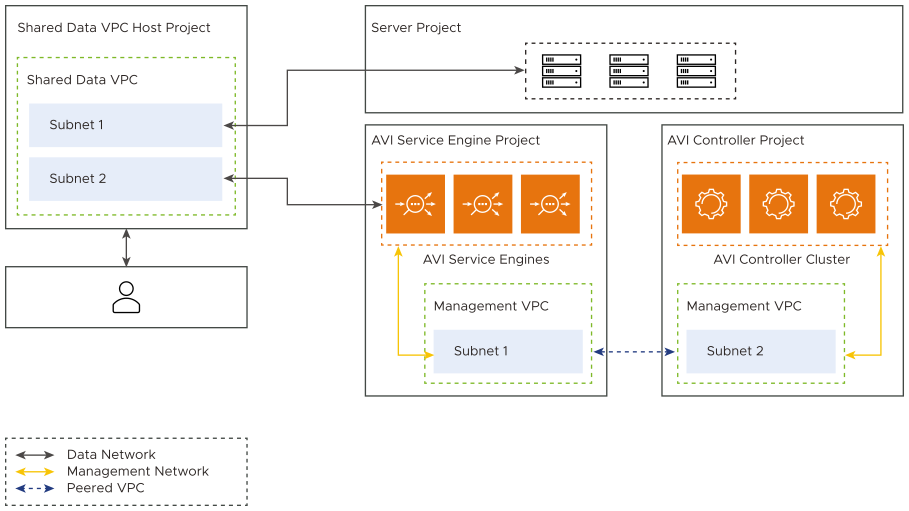

The SEs are connected to three VPC subnets, one each for front end data traffic, management traffic, and back end data traffic.

Provides isolation between management, front end data, and back end data networks.

The first interface of the SE is connected to the front end data network.

Shared VPC is supported on multiple NICs. Therefore, all three NICs can be from shared VPCs.

In earlier versions of the Avi Load Balancer, shared VPC was supported only on the first NIC, so only the front end data NIC could be in a shared VPC.

If the SEs and the Controller are in different projects, the management VPC needs to be peered with the other project VPC.

The third interface of the SE is connected to the back end data network.

Requires at least four vCPUs for the SE virtual machine. You can set an instance flavor with four or more vCPUs in the ServiceEngineGroup properties.
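The vCPU minimums stated for the three modes (one for inband and one-arm, four for two-arm) can be checked against a chosen instance flavor. The following Python sketch is a heuristic, not an Avi or GCP API: it assumes predefined GCP machine type names of the form `<series>-standard|highmem|highcpu-<vCPUs>` (for example, n2-standard-4) and returns None for names it cannot parse, such as shared-core or custom types.

```python
import re
from typing import Optional

# Minimum vCPUs per SE virtual machine for each network configuration mode, as listed in this section.
MIN_VCPUS = {
    "INBAND_MANAGEMENT": 1,
    "ONE_ARM_MODE": 1,
    "TWO_ARM_MODE": 4,
}

# Heuristic pattern: predefined machine types such as n2-standard-4 end in their vCPU count.
_PREDEFINED = re.compile(r"^[a-z0-9]+-(standard|highmem|highcpu)-(\d+)$")


def vcpus_from_machine_type(machine_type: str) -> Optional[int]:
    """Guess the vCPU count from a predefined machine type name; None if the name does not match."""
    match = _PREDEFINED.match(machine_type)
    return int(match.group(2)) if match else None


def flavor_fits_mode(mode: str, machine_type: str) -> Optional[bool]:
    """True or False if the flavor meets the mode's vCPU minimum; None if the count is unknown."""
    vcpus = vcpus_from_machine_type(machine_type)
    if vcpus is None:
        return None  # look up the vCPU count in the GCP machine type documentation instead
    return vcpus >= MIN_VCPUS[mode]


print(flavor_fits_mode("TWO_ARM_MODE", "n2-standard-4"))
```

Returning None rather than guessing keeps the check conservative for machine type families whose names do not encode the vCPU count.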

The following is the diagrammatic representation of two-arm mode with dedicated management:

Configuring GCP Cloud through CLI

Set the config field of network_config (under gcp_configuration) to two_arm_mode. The following fields need to be set in the two_arm object:

- frontend_data_vpc_project_id: The VPC project ID of the frontend data network that needs to be attached to the SE virtual machine. By default, the SE project ID is used.
- frontend_data_vpc_network_name: The VPC network name of the frontend data network that needs to be attached to the SE virtual machine.
- frontend_data_vpc_subnet_name: The VPC subnet name from which the SE frontend data interface IP is allocated.
- management_vpc_network_name: The VPC network name of the management network that needs to be attached to the SE virtual machine.
- management_vpc_subnet_name: The VPC subnet name from which the SE management interface IP is allocated.
- backend_data_vpc_network_name: The VPC network name of the backend data network that needs to be attached to the SE virtual machine.
- backend_data_vpc_subnet_name: The VPC subnet name from which the SE backend data interface IP is allocated.
- management_vpc_project_id: The project ID of the SE management network. By default, the SE project ID is used.
- backend_data_vpc_project_id: The project ID of the SE backend data network. By default, the SE project ID is used.
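The following Python sketch orders the three two-arm NICs as described in the feature list for this mode (front end data first, back end data third, which leaves management second), applies the SE project ID default to any project that is not given, and rejects layouts that reuse a VPC. The helper and its role keys ("frontend_data", "management", "backend_data") are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def two_arm_nic_order(
    se_project_id: str,
    networks: Dict[str, str],
    project_ids: Optional[Dict[str, str]] = None,
) -> List[Tuple[str, str, str]]:
    """Return (role, project_id, vpc_network_name) tuples in SE interface order.

    networks maps each role to its VPC network name; a role missing from
    project_ids defaults to the SE project ID. Hypothetical helper.
    """
    project_ids = project_ids or {}
    # Interface order: front end data first, management second, back end data third.
    order = ["frontend_data", "management", "backend_data"]
    nics = [(role, project_ids.get(role, se_project_id), networks[role]) for role in order]
    # GCP does not allow two NICs of one VM in the same VPC, identified by (project, network).
    if len({(project, network) for _, project, network in nics}) != len(nics):
        raise ValueError("each of the three NICs must be in a different VPC")
    return nics


for nic in two_arm_nic_order(
    "service-engine-project",
    {
        "frontend_data": "frontend-data-network-name",
        "management": "management-network-name",
        "backend_data": "backend-data-network-name",
    },
    {"frontend_data": "frontend-data-network-project"},
):
    print(nic)
```

Keeping the front end data NIC first matters because, as noted earlier, the GCP load balancer forwards traffic only to the first interface.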

Example

```
[admin:controller]: > configure cloud gcp-cloud-twoarm
[admin:controller]: cloud> vtype cloud_gcp
[admin:controller]: cloud> gcp_configuration
[admin:controller]: cloud:gcp_configuration> se_project_id service-engine-project
[admin:controller]: cloud:gcp_configuration> region_name us-central1
[admin:controller]: cloud:gcp_configuration> zones us-central1-a
[admin:controller]: cloud:gcp_configuration> zones us-central1-b
[admin:controller]: cloud:gcp_configuration> network_config config two_arm_mode
[admin:controller]: cloud:gcp_configuration:network_config> two_arm
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_project_id frontend-data-network-project
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_network_name frontend-data-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_subnet_name frontend-data-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> management_vpc_network_name management-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> management_vpc_subnet_name management-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> management_vpc_project_id management-vpc-project-id
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> backend_data_vpc_project_id backend-data-vpc-project-id
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> backend_data_vpc_network_name backend-data-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> backend_data_vpc_subnet_name backend-data-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> save
[admin:controller]: cloud:gcp_configuration:network_config> save
[admin:controller]: cloud:gcp_configuration> save
[admin:controller]: cloud> save
+--------------------------------------+--------------------------------------------+
| Field                                | Value                                      |
+--------------------------------------+--------------------------------------------+
| uuid                                 | cloud-500d70f5-0457-467c-b796-7652c701e2c7 |
| name                                 | gcp-cloud-twoarm                           |
| vtype                                | CLOUD_GCP                                  |
| apic_mode                            | False                                      |
| gcp_configuration                    |                                            |
|   region_name                        | us-central1                                |
|   zones[1]                           | us-central1-a                              |
|   zones[2]                           | us-central1-b                              |
|   se_project_id                      | service-engine-project                     |
|   network_config                     |                                            |
|     config                           | TWO_ARM_MODE                               |
|     two_arm                          |                                            |
|       frontend_data_vpc_subnet_name  | frontend-data-subnet-name                  |
|       frontend_data_vpc_project_id   | frontend-data-network-project              |
|       management_vpc_subnet_name     | management-subnet-name                     |
|       backend_data_vpc_subnet_name   | backend-data-subnet-name                   |
|       frontend_data_vpc_network_name | frontend-data-network-name                 |
|       management_vpc_network_name    | management-network-name                    |
|       management_vpc_project_id      | management-vpc-project-id                  |
|       backend_data_vpc_project_id    | backend-data-vpc-project-id                |
|       backend_data_vpc_network_name  | backend-data-network-name                  |
|   vip_allocation_strategy            |                                            |
|     mode                             | ROUTES                                     |
| dhcp_enabled                         | True                                       |
| mtu                                  | 1500 bytes                                 |
| prefer_static_routes                 | False                                      |
| enable_vip_static_routes             | False                                      |
| license_type                         | LIC_CORES                                  |
| state_based_dns_registration         | True                                       |
| ip6_autocfg_enabled                  | False                                      |
| dns_resolution_on_se                 | False                                      |
| enable_vip_on_all_interfaces         | False                                      |
| tenant_ref                           | admin                                      |
| license_tier                         | ENTERPRISE_18                              |
| autoscale_polling_interval           | 60 seconds                                 |
+--------------------------------------+--------------------------------------------+
[admin:controller]: >
```

Configuring ServiceEngineGroup via CLI

You can set the GCP n2-standard-4 machine type in the Service Engine group as follows:

```
[admin:controller]: > configure serviceenginegroup Default-Group
[admin:controller]: serviceenginegroup> instance_flavor n2-standard-4
[admin:controller]: serviceenginegroup> save
```

Configuring Static Routes for Backend Servers Reachability

Static routes are required if the backend servers are in subnets other than the SE backend data subnet configured in the cloud.

The static routes must be configured with:

- The destination range set to the backend server subnet prefix.

- The next hop set to the gateway of the GCP backend data subnet connected to the SE.

In the following configuration, 10.152.134.192/26 is the prefix of the server subnet and 10.152.134.1 is the gateway of the subnet attached to the SE in the backend data VPC.
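The rule above (a route is needed only when the servers are outside the SE backend data subnet, with the next hop set to that subnet's gateway) can be sketched with Python's standard `ipaddress` module. The helper name is hypothetical. The SE backend data subnet 10.152.134.0/26 is an assumption, because the section gives only its gateway. The gateway calculation relies on GCP reserving the second address of a subnet's primary range as the default gateway.

```python
import ipaddress
from typing import Dict, Optional


def static_route_for(server_subnet: str, se_backend_subnet: str) -> Optional[Dict[str, str]]:
    """Return the prefix and next_hop needed to reach server_subnet, or None if no route is needed."""
    servers = ipaddress.ip_network(server_subnet)
    se_net = ipaddress.ip_network(se_backend_subnet)
    if servers.subnet_of(se_net):
        return None  # the servers sit on the SE backend data subnet itself
    # GCP uses the second address of the subnet's primary range as the default gateway.
    gateway = se_net.network_address + 1
    return {"prefix": str(servers), "next_hop": str(gateway)}


# 10.152.134.0/26 is an assumed SE backend data subnet for illustration.
print(static_route_for("10.152.134.192/26", "10.152.134.0/26"))
# {'prefix': '10.152.134.192/26', 'next_hop': '10.152.134.1'}
```

The returned prefix and next_hop correspond to the values entered under static_routes in the example below.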

Example

```
[admin:controller]: > configure vrfcontext global
Multiple objects found for this query.
[0]: vrfcontext-0390ab9e-510c-49ab-8906-7f6eb72ef7f9#global in tenant admin, Cloud Default-Cloud
[1]: vrfcontext-ef6ae4f4-42c2-4225-9194-cec7ae294979#global in tenant admin, Cloud gcp-cloud-twoarm
Select one: 1
[admin:controller]: vrfcontext> static_routes
New object being created
[admin:controller]: vrfcontext:static_routes> prefix 10.152.134.192/26
[admin:controller]: vrfcontext:static_routes> next_hop 10.152.134.1
[admin:controller]: vrfcontext:static_routes> route_id 1
[admin:controller]: vrfcontext:static_routes> save
[admin:controller]: vrfcontext> save
+------------------+-------------------------------------------------+
| Field            | Value                                           |
+------------------+-------------------------------------------------+
| uuid             | vrfcontext-ef6ae4f4-42c2-4225-9194-cec7ae294979 |
| name             | global                                          |
| static_routes[1] |                                                 |
|   prefix         | 10.152.134.192/26                               |
|   next_hop       | 10.152.134.1                                    |
|   route_id       | 1                                               |
| system_default   | True                                            |
| tenant_ref       | admin                                           |
| cloud_ref        | gcp-cloud-twoarm                                |
+------------------+-------------------------------------------------+
[admin:admin:controller]: >
```

VIP on all Interfaces

You can configure VIPs in a GCP cloud to be placed on all the SE data interfaces. With this feature, a VIP is reachable from both the frontend and backend data VPCs.

This feature can be used only with the following Avi Load Balancer cloud configuration:

- vip_allocation_strategy is set to routes. The GCP routes for the VIP are created in both the frontend data and backend data VPCs. See Configuring the IPAM for GCP for the different VIP allocation strategies in GCP.
- network_config mode is two_arm.

You can enable this feature by setting the enable_vip_on_all_interfaces field in the Avi Load Balancer cloud configuration. The VIPs are then placed on all data interfaces of all the SEs in the Service Engine Groups.

You cannot change this field if virtual services and SEs already exist in the Avi Load Balancer cloud.

GCP IPAM is not supported with this feature.
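The preconditions above can be collected into a single pre-check. The following Python sketch is hypothetical: its inputs mirror values shown in the CLI output (the vip_allocation_strategy mode and the network_config config). It treats existing virtual services or existing SEs as blocking, which is a conservative reading of the restriction above.

```python
from typing import List


def vip_on_all_interfaces_blockers(
    vip_allocation_mode: str,
    network_config: str,
    virtual_service_count: int,
    service_engine_count: int,
) -> List[str]:
    """List the reasons enable_vip_on_all_interfaces cannot be turned on; empty means allowed."""
    blockers = []
    if vip_allocation_mode != "ROUTES":
        blockers.append("vip_allocation_strategy mode must be ROUTES")
    if network_config != "TWO_ARM_MODE":
        blockers.append("network_config must be TWO_ARM_MODE")
    # Conservative reading: either existing virtual services or existing SEs block the change.
    if virtual_service_count or service_engine_count:
        blockers.append("field cannot be changed while virtual services or SEs exist in the cloud")
    return blockers


print(vip_on_all_interfaces_blockers("ROUTES", "TWO_ARM_MODE", 0, 0))  # []
```

Returning every blocker, rather than stopping at the first, lets an operator fix all of them in one pass.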

Configuring through CLI

You can enable VIP on all interfaces through the CLI using the following commands:

```
[admin:admin:controller]: > configure cloud gcp-cloud-twoarm-all-interfaces
[admin:controller]: cloud> vtype cloud_gcp
[admin:controller]: cloud> gcp_configuration
[admin:controller]: cloud:gcp_configuration> se_project_id service-engine-project
[admin:controller]: cloud:gcp_configuration> region_name us-central1
[admin:controller]: cloud:gcp_configuration> zones us-central1-a
[admin:controller]: cloud:gcp_configuration> zones us-central1-b
[admin:controller]: cloud:gcp_configuration> network_config config two_arm_mode
[admin:controller]: cloud:gcp_configuration:network_config> two_arm
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_project_id frontend-data-network-project
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_network_name frontend-data-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> frontend_data_vpc_subnet_name frontend-data-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> management_vpc_network_name management-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> management_vpc_subnet_name management-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> backend_data_vpc_network_name backend-data-network-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> backend_data_vpc_subnet_name backend-data-subnet-name
[admin:controller]: cloud:gcp_configuration:network_config:two_arm> save
[admin:controller]: cloud:gcp_configuration:network_config> save
[admin:controller]: cloud:gcp_configuration> save
[admin:controller]: cloud> enable_vip_on_all_interfaces
[admin:controller]: cloud> save
+--------------------------------------+--------------------------------------------+
| Field                                | Value                                      |
+--------------------------------------+--------------------------------------------+
| uuid                                 | cloud-cd70e433-a85f-49db-bfbe-b9db6a938ba6 |
| name                                 | gcp-cloud-twoarm-all-interfaces            |
| vtype                                | CLOUD_GCP                                  |
| apic_mode                            | False                                      |
| gcp_configuration                    |                                            |
|   region_name                        | us-central1                                |
|   zones[1]                           | us-central1-a                              |
|   zones[2]                           | us-central1-b                              |
|   se_project_id                      | service-engine-project                     |
|   network_config                     |                                            |
|     config                           | TWO_ARM_MODE                               |
|     two_arm                          |                                            |
|       frontend_data_vpc_subnet_name  | frontend-data-subnet-name                  |
|       frontend_data_vpc_project_id   | frontend-data-network-project              |
|       management_vpc_subnet_name     | management-subnet-name                     |
|       backend_data_vpc_subnet_name   | backend-data-subnet-name                   |
|       frontend_data_vpc_network_name | frontend-data-network-name                 |
|       management_vpc_network_name    | management-network-name                    |
|       backend_data_vpc_network_name  | backend-data-network-name                  |
|   vip_allocation_strategy            |                                            |
|     mode                             | ROUTES                                     |
| dhcp_enabled                         | True                                       |
| mtu                                  | 1500 bytes                                 |
| prefer_static_routes                 | False                                      |
| enable_vip_static_routes             | False                                      |
| license_type                         | LIC_CORES                                  |
| state_based_dns_registration         | True                                       |
| ip6_autocfg_enabled                  | False                                      |
| dns_resolution_on_se                 | False                                      |
| enable_vip_on_all_interfaces         | True                                       |
| tenant_ref                           | admin                                      |
| license_tier                         | ENTERPRISE_18                              |
| autoscale_polling_interval           | 60 seconds                                 |
+--------------------------------------+--------------------------------------------+
[admin:controller]: >
```

The GCP service account needs a role with the following permissions in all the VPC projects:

network_project_role.yaml

For more details, see Roles and Permissions (GCP Full Access).

VIP Route Priority

VIP routes in GCP are created with a default priority of 2000, and you can configure a different route priority. You can modify the VIP route priority only when there are no virtual services in the cloud, or when all the virtual services are disabled. The route priority can be set only if you choose Routes under VIPAllocationMode. All newly created routes use the new value of route_priority.
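The rules for changing route_priority can be summarized in a short Python sketch. The `CloudState` dataclass and both functions are hypothetical illustrations, not Avi objects. The 2000 default comes from this section. The 0 to 65535 bound is GCP's documented range for route priorities, where a lower value means higher priority.

```python
from dataclasses import dataclass, field
from typing import List

DEFAULT_ROUTE_PRIORITY = 2000  # priority of VIP routes when none is configured


@dataclass
class CloudState:
    """Hypothetical snapshot of the settings that gate route_priority changes."""
    vip_allocation_mode: str                              # for example, "ROUTES"
    vs_enabled: List[bool] = field(default_factory=list)  # one flag per virtual service in the cloud
    route_priority: int = DEFAULT_ROUTE_PRIORITY


def route_priority_blockers(state: CloudState, new_priority: int) -> List[str]:
    """Return why route_priority cannot be changed to new_priority; empty means allowed."""
    blockers = []
    if state.vip_allocation_mode != "ROUTES":
        blockers.append("route priority applies only when VIPs are allocated with Routes")
    if any(state.vs_enabled):
        blockers.append("delete or disable every virtual service in the cloud first")
    if not 0 <= new_priority <= 65535:
        blockers.append("GCP route priorities range from 0 to 65535")
    return blockers


def set_route_priority(state: CloudState, new_priority: int) -> bool:
    """Store the new priority if allowed; only routes created afterwards use it."""
    if route_priority_blockers(state, new_priority):
        return False
    state.route_priority = new_priority
    return True


cloud = CloudState("ROUTES", vs_enabled=[False, False])
print(set_route_priority(cloud, 1000), cloud.route_priority)  # True 1000
```

Existing routes are not rewritten in this model; the stored value only affects routes created after the change, matching the behavior described above.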