A common requirement when testing a new load balancer is to validate that it correctly balances the load. The round-robin load-balancing algorithm is commonly used as a simple test case.

The round-robin load-balancing algorithm, which is the oldest and most trusted load balancing method, can exhibit unexpected, non-uniform distribution of traffic. In many instances, this non-uniform distribution of traffic is due to interoperation with other features.

From an external view, such as through tcpdump, round robin might appear to be operating incorrectly because connections are distributed in a non-uniform fashion. Although it is reasonable to expect round robin to interoperate with other features without issues, several of them change how connections are distributed. The following are possible causes of non-uniform load distribution.

Potential Causes of Non-uniform Round-robin Load Balancing

- Persistence

Persistence is the opposite of load balancing. Once a client has connected, persistence keeps it on the same server for a period of time. Verify that no persistence is configured within the pool.

- Passive Health Monitor

This monitor listens to client-to-server interaction and dynamically adjusts the ratio of traffic a server receives, depending on the responses it sends. A server might pass the active (static) health monitor and still send out a few 503 (Service Unavailable) responses, indicating that it is busy. In that case, the passive monitor throttles back the volume of traffic sent to that server, thereby disrupting the expected round-robin behavior.

- Server Ratio

Under the Servers tab of the pool, ensure that all servers have the same ratio.

- Connection Multiplex

By default, connection multiplex is enabled within the HTTP application profile. It attempts to accelerate traffic by keeping idle server connections open for reuse, rather than terminating a connection and immediately opening a new one for the next client. Due to this reuse, you will not see a clear one-to-one correlation between client connections and server connections.

- Scaled Across SEs

The Avi Load Balancer SEs are based on a distributed-system model. This impacts round robin at various levels. At the first level, virtual services can be scaled out across multiple SEs for increased capacity or better high availability. Each SE individually performs round robin for the connections managed by it, so the combined distribution across SEs does not follow a single round-robin sequence.

- Scaled Across CPU Cores

Internally within an SE, a CPU core is designated as a dispatcher, which picks up packets from the NIC and distributes them (based on packet streams/connections) to the CPU cores (including the one it is residing on). It takes into account how busy each core is to ensure that the least-loaded CPU core answers the connection.

On a busy system, this means that the CPU core with the dispatcher (generally vCPU0) will tend to handle less traffic than the other cores. Each CPU or vCPU within the SE independently makes load-balancing decisions, so both vCPU0 and vCPU1 perform their own round robin. The first client connection goes to vCPU0, which sends the connection to server 1. The second connection goes to vCPU1, which can also send the connection to server 1, because vCPU1 tracks its own position in the server list independently of vCPU0.
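The effect of independent round-robin decisions can be illustrated with a small simulation. This is only a sketch under stated assumptions: the server names are hypothetical, and the dispatcher is simplified to alternate strictly between cores, whereas the real dispatcher also considers how busy each core is. The same reasoning applies to a virtual service scaled across multiple SEs.

```python
from itertools import cycle

# Hypothetical pool members, for illustration only.
SERVERS = ["server1", "server2", "server3"]


def simulate(num_cores, num_connections):
    """Return (core, server) pairs for a series of new connections.

    Each core owns an independent round-robin iterator over the pool.
    Assumption: the dispatcher simply alternates between cores; this is
    a simplification, not the actual dispatch logic.
    """
    per_core = [cycle(SERVERS) for _ in range(num_cores)]
    picks = []
    for conn in range(num_connections):
        core = conn % num_cores
        picks.append((core, next(per_core[core])))
    return picks


picks = simulate(num_cores=2, num_connections=6)

# Order of servers as seen from outside the SE (for example, in tcpdump).
sequence = [server for _, server in picks]
print(sequence)

# Order of servers as seen by a single core.
core0 = [server for core, server in picks if core == 0]
print(core0)
```

Viewed externally, consecutive connections land on the same server, yet the connections handled by any single core follow strict round-robin order. This is why the validation steps filter logs down to one SE and one vCPU.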

Validation

To validate that the load balancing behavior is correct, modify the configuration based on the recommendations above, if applicable, and then view the logs for the virtual service. Ensure that both significant and non-significant logs are displayed. If non-significant logs are not captured, they must be enabled on the Analytics page of the virtual service configuration.

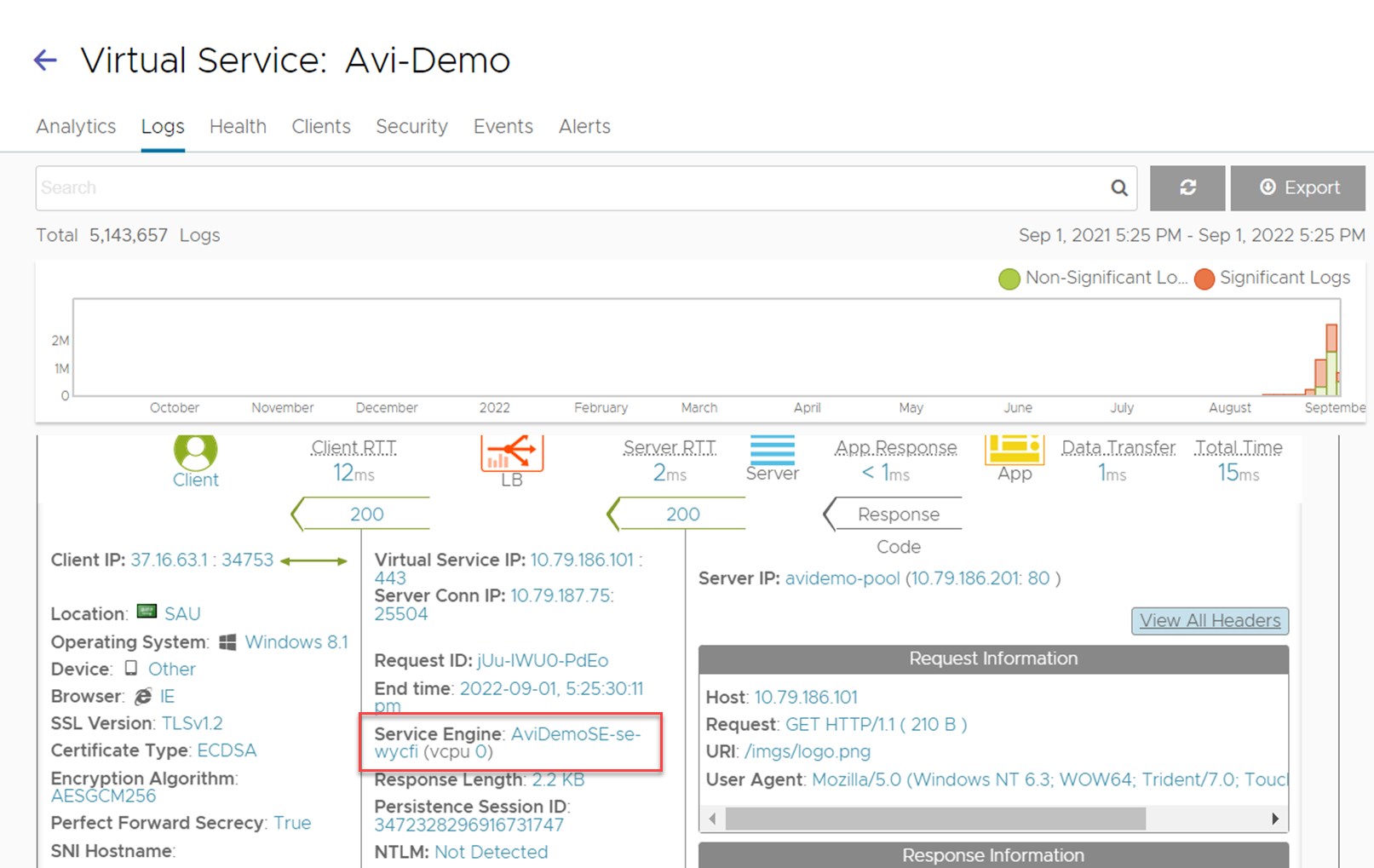

Open a log to see the expanded view. The Service Engine field shows the vCPU used by the connection. If the virtual service is scaled across multiple SEs, click the SE IP address to add it to the log filter. Then click the vCPU number to filter the logs for connections that were handled by that CPU.

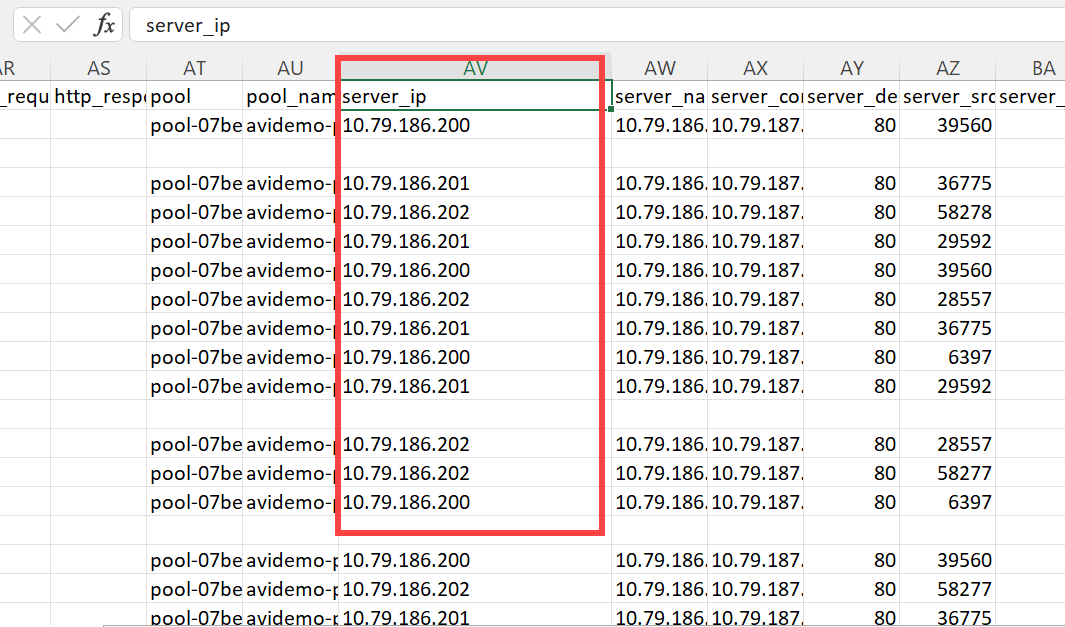

Download the resulting logs by clicking the Export button at the top right corner of the Logs page. In the resulting CSV file, the Server_IP column shows the order of requests or connections sent to servers from the filtered SE CPU. In the example above, column AU shows that round-robin server selection is occurring correctly.
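For large exports, the Server_IP column can be checked with a short script instead of by eye. The following is a minimal sketch, not an official tool: it assumes the export is a CSV with a header row containing a `Server_IP` column, as described above. The sample rows, the `Client_IP` column, and the IP addresses are hypothetical placeholders.

```python
import csv
import io


def server_sequence(csv_file, column="Server_IP"):
    """Return the server column values in file order, skipping blank cells."""
    return [row[column] for row in csv.DictReader(csv_file) if row.get(column)]


def is_round_robin(sequence):
    """Return True if the sequence repeats one fixed ordering of its servers."""
    period = len(set(sequence))
    if period == 0:
        return False
    first_cycle = sequence[:period]
    # The first cycle must visit every distinct server exactly once.
    if len(set(first_cycle)) != period:
        return False
    # Every later entry must match the same position in the first cycle.
    return all(s == first_cycle[i % period] for i, s in enumerate(sequence))


# Hypothetical sample standing in for an export filtered to one SE and vCPU.
sample = io.StringIO(
    "Client_IP,Server_IP\n"
    "198.51.100.7,192.0.2.11\n"
    "198.51.100.8,192.0.2.12\n"
    "198.51.100.9,192.0.2.13\n"
    "198.51.100.7,192.0.2.11\n"
    "198.51.100.8,192.0.2.12\n"
)

result = is_round_robin(server_sequence(sample))
print(result)
```

To check a real export, pass a file opened with `open(path, newline="")` to `server_sequence` in place of the sample. The check is only meaningful on logs filtered to a single SE and vCPU, and only when every server stays up for the whole capture.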