Load balancing for App Volumes Manager can be achieved by configuring an L7 virtual service with an HTTPS application profile.

App Volumes servers do not support connections for the same client originating from different source IP addresses. When the virtual service is deployed as an Active/Active scale out, multiple connections from the same client can be processed by different Service Engines. Because each Service Engine uses a distinct SNAT IP, the servers may see multiple connections for the same client with different source IPs, resulting in authentication failures. To address this issue, use one of the following options:

Option 1: Use an Active/Standby Service Engine group when load balancing App Volumes

Option 2: If native scale out is being used, configure the flow distribution algorithm through the CLI so that it is based only on the client IP address rather than on the client IP and port:

configure virtualservice <appvol-vs-name>

flow_dist consistent_hash_source_ip_address

save

Option 2 is only applicable in the case of native scale out. Where ECMP scale out is used (for example, with BGP), the distribution of flows across Service Engines depends on the ECMP hash algorithm used by the upstream router. If that hash is based on the full 5-tuple (source and destination IP address, source and destination port, and protocol), the same issue is encountered.
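The difference between the two flow distribution keys can be illustrated with a minimal Python sketch. This is not the Avi implementation: the `pick_se` helper, the plain modulo hash, the Service Engine names, and the client address are all assumptions made for illustration. It only shows why hashing on the client IP alone keeps every connection from one client on the same Service Engine, while including the port can spread them.

```python
import hashlib


def pick_se(key: str, service_engines: list[str]) -> str:
    """Map a flow key to a Service Engine with a plain modulo hash (illustrative only)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(service_engines)
    return service_engines[index]


def se_by_source_ip(client_ip: str, client_port: int, service_engines: list[str]) -> str:
    # Key on the client IP only; the source port is deliberately ignored.
    return pick_se(client_ip, service_engines)


def se_by_source_ip_and_port(client_ip: str, client_port: int, service_engines: list[str]) -> str:
    # Key on client IP and port; each new connection may hash differently.
    return pick_se(f"{client_ip}:{client_port}", service_engines)


# Hypothetical Service Engines and a single client opening ten connections.
ses = ["se-1", "se-2", "se-3"]
flows = [("10.0.0.25", port) for port in range(50000, 50010)]

ip_only = {se_by_source_ip(ip, port, ses) for ip, port in flows}
ip_and_port = {se_by_source_ip_and_port(ip, port, ses) for ip, port in flows}

print("SEs used when hashing on IP only:", sorted(ip_only))
print("SEs used when hashing on IP and port:", sorted(ip_and_port))
```

With the IP-only key, the set of Service Engines for a given client always has exactly one member, so the App Volumes servers see a single SNAT IP for that client.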

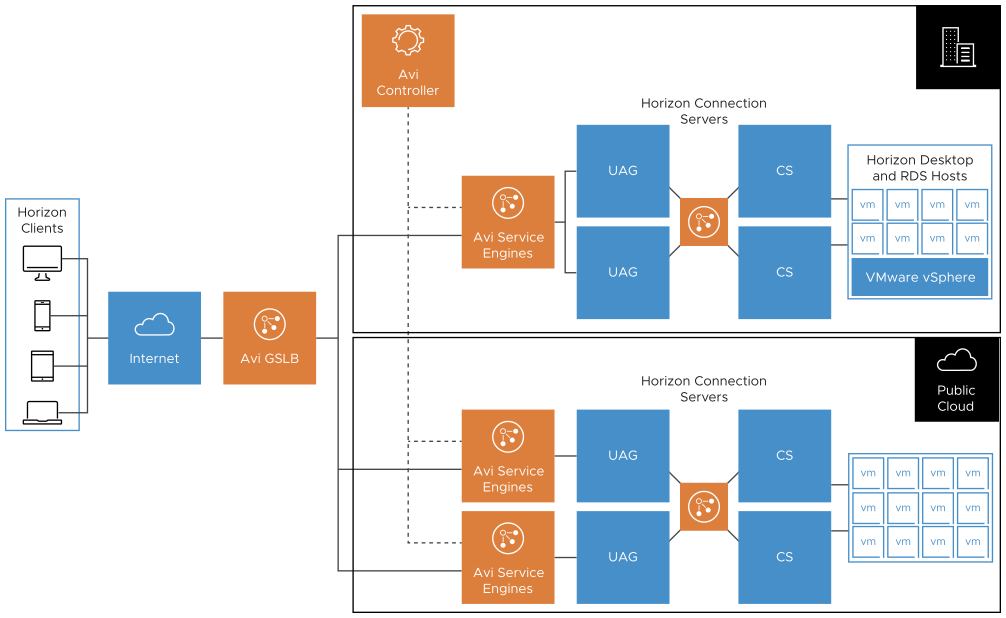

Multi-site with GSLB

Avi Load Balancer can be configured with GSLB using any load balancing algorithm (geo, source IP based, and so on) to direct the traffic to the required site. This avoids east-west traffic even within the same geo. Site 1 can be on any ecosystem, and it can differ from the site 2 ecosystem. For example, site 1 could be on-premises and site 2 could be on VMC.

High Availability

It is recommended to deploy the Avi Load Balancer Service Engines in elastic Active/Active high availability mode. In this mode, the virtual service is placed on both Service Engines, so the failure of one Service Engine momentarily affects only the user traffic flowing through that SE. For more information, see the High Availability and Redundancy topic in the VMware Avi Load Balancer Configuration Guide.

For best performance, it is recommended to use separate Avi Load Balancer SEs for Horizon. Do not place any other virtual service on the same SEs.

Sizing Considerations

Avi Load Balancer Controller sizing

It is recommended to configure a cluster of three Controllers for production deployments. The Avi Load Balancer Controller requires minimum specifications of CPU, RAM, and storage. For more information on sizing guidelines, see the NSX Advanced Load Balancer Controller Sizing topic in the VMware Avi Load Balancer Installation Guide.

Avi Load Balancer SE sizing

For Horizon deployments, the SE sizing depends on the number of users and the throughput per user. SE sizing also depends on the applications accessed over the VDI, because higher bandwidth applications such as video and 3D modeling require higher throughput. The highest contributing factor to throughput is the secondary protocols (Blast/PCoIP). The number of SEs depends on the deployment model chosen to load balance UAGs, as discussed below:

Active/Active high availability configuration requires a minimum of two Service Engines. Each site must be sized for its own SEs, with an additional SE for the GSLB requirement between sites.

Sizing Examples for Single VIP with Two Virtual Services and Single L4 Virtual Service

Example 1

| Number of Users | Approximate Throughput Per User | Total Throughput = Number of Users X Throughput Per User | Active/Active HA |
|---|---|---|---|
| 250 | 600 Kbps | 150 Mbps | 1 Core SE X 2 |

Example 2

| Number of Users | Approximate Throughput Per User | Total Throughput = Number of Users X Throughput Per User | Active/Active HA |
|---|---|---|---|
| 1500 | 2 Mbps | 3 Gbps | 2 Core SE X 2 |

Example 3

1000 users with high workloads (3D modeling, high-definition video, 3D graphics) in a single site.

| Number of Users | Approximate Throughput Per User | Total Throughput = Number of Users X Throughput Per User | Active/Active HA |
|---|---|---|---|
| 1000 | 20 Mbps | 20 Gbps | 4 Core SE X 4 |

For video or jitter-sensitive applications, a dedicated dispatcher is recommended for best performance.

For high bandwidth applications like video, L3 scale out is recommended for best performance.

Example 4

| Number of Users | Approximate Throughput Per User | Total Throughput = Number of Users X Throughput Per User | Active/Active HA |
|---|---|---|---|
| 5000 | 2 Mbps | 10 Gbps | 3 Core SE X 2 |

A dedicated dispatcher is recommended to achieve higher packets per second (PPS) and better performance.

Example 5

10000 users with small workloads (email, MS Office applications, multiple monitors) in a single site.

| Number of Users | Approximate Throughput Per User | Total Throughput = Number of Users X Throughput Per User | Active/Active HA |
|---|---|---|---|
| 10000 | 600 Kbps | 6 Gbps | 2 Core SE X 2 |
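The total throughput column in the examples above follows directly from the formula in the table header. The following Python sketch reproduces that arithmetic for the five examples. It assumes decimal units (1 Mbps = 1000 Kbps, 1 Gbps = 1000 Mbps), which matches the figures in the tables; the function names are illustrative.

```python
def total_throughput_kbps(users: int, per_user_kbps: int) -> int:
    """Total Throughput = Number of Users X Throughput Per User, in Kbps."""
    return users * per_user_kbps


def format_rate(kbps: int) -> str:
    """Render a Kbps value in the largest convenient decimal unit."""
    if kbps >= 1_000_000:
        return f"{kbps / 1_000_000:g} Gbps"
    if kbps >= 1_000:
        return f"{kbps / 1_000:g} Mbps"
    return f"{kbps} Kbps"


# (number of users, throughput per user in Kbps) taken from Examples 1 to 5.
examples = {
    "Example 1": (250, 600),
    "Example 2": (1500, 2_000),
    "Example 3": (1000, 20_000),
    "Example 4": (5000, 2_000),
    "Example 5": (10000, 600),
}

for name, (users, per_user_kbps) in examples.items():
    total = total_throughput_kbps(users, per_user_kbps)
    print(f"{name}: {format_rate(total)}")
```

The sketch computes only the throughput. The SE core counts in the last column come from the sizing guidance and are not derived by this calculation.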

The following table summarizes the sizing reference for a single site:

| Number of Users | Small (Maximum of 600 Kbps Per User) | Medium (Maximum of 2 Mbps Per User) | Large (Maximum of 20 Mbps Per User) |
|---|---|---|---|
| 100 | 1 Core X 2 SEs | 1 Core X 2 SEs | 1 Core X 2 SEs |
| 700 | 1 Core X 2 SEs | 1 Core X 2 SEs | 4 Core X 3 SEs |
| 1000 | 1 Core X 2 SEs | 1 Core X 2 SEs | 4 Core X 4 SEs (L3 scale out is recommended) |
| 5000 | 2 Core X 2 SEs | 4 Core X 2 SEs | 4 Core X 16 SEs (L3 scale out is required) |
| 10000 | 2 Core X 2 SEs | 4 Core X 4 SEs (L3 scale out is recommended) | 4 Core X 32 SEs (L3 scale out is required) |
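For planning scripts, the single-site summary can be encoded as a lookup table. The sketch below is one possible encoding, not an official sizing tool. The rule of rounding a user count up to the next listed row is an assumption made here to stay on the conservative side; the source only gives values for the listed user counts. The names `SIZING` and `lookup_sizing` are hypothetical.

```python
# (cores per SE, number of SEs) for each listed user count and workload profile.
SIZING = {
    100: {"small": (1, 2), "medium": (1, 2), "large": (1, 2)},
    700: {"small": (1, 2), "medium": (1, 2), "large": (4, 3)},
    1000: {"small": (1, 2), "medium": (1, 2), "large": (4, 4)},
    5000: {"small": (2, 2), "medium": (4, 2), "large": (4, 16)},
    10000: {"small": (2, 2), "medium": (4, 4), "large": (4, 32)},
}


def lookup_sizing(users: int, profile: str) -> tuple[int, int]:
    """Return (cores per SE, number of SEs) for a single site.

    Assumption: a user count between two rows is rounded up to the next
    listed row. Counts above the largest row are rejected, not extrapolated.
    """
    if users < 1:
        raise ValueError("users must be a positive integer")
    if profile not in ("small", "medium", "large"):
        raise ValueError(f"unknown profile: {profile!r}")
    for row_users in sorted(SIZING):
        if users <= row_users:
            return SIZING[row_users][profile]
    raise ValueError("user count exceeds the largest row in the summary table")


cores, se_count = lookup_sizing(3000, "medium")
print(f"3000 medium users: {cores} Core X {se_count} SEs")
```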

Sizing Examples for n+1 VIP

| Number of Users | Number of SEs |
|---|---|
| Up to 1000 users | 1 core * 2 SEs |
| 1000 - 5000 users | 2 core * 2 SEs |
| 5000 - 10000 users | 3 core * 2 SEs |

Sizing Examples for Connection Server Load Balancing

| Number of Users | Number of SEs |
|---|---|
| Up to 1000 users | 1 core * 2 SEs |
| 1000 - 5000 users | 2 core * 2 SEs |
| 5000 - 10000 users | 3 core * 2 SEs |
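The n+1 VIP and Connection Server tables list the same three tiers, so a single range lookup covers both. The sketch below uses Python's `bisect` module for that lookup. The tables do not say which tier a boundary value such as 1000 or 5000 belongs to; this sketch assumes the upper bound of each range is inclusive. The names used are illustrative.

```python
from bisect import bisect_left

# Upper bound of each tier (assumed inclusive) and the matching cores per SE.
TIER_UPPER_BOUNDS = [1000, 5000, 10000]
TIER_CORES_PER_SE = [1, 2, 3]
SES_PER_TIER = 2  # every tier in both tables uses two SEs


def tier_sizing(users: int) -> tuple[int, int]:
    """Return (cores per SE, number of SEs) for the given user count."""
    if users < 1 or users > TIER_UPPER_BOUNDS[-1]:
        raise ValueError("user count is outside the ranges covered by the table")
    # bisect_left returns the first tier whose upper bound is >= users.
    index = bisect_left(TIER_UPPER_BOUNDS, users)
    return TIER_CORES_PER_SE[index], SES_PER_TIER


for users in (800, 1000, 1001, 7500):
    cores, se_count = tier_sizing(users)
    print(f"{users} users: {cores} core * {se_count} SEs")
```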

Sizing with GSLB

GSLB requires one SE per site. For Horizon, this can be a 1 core SE. For example, consider 250 users with small workloads (email, MS Office applications, multiple monitors) in site 1 and 250 users with high workloads (3D modeling, high-definition video, 3D graphics) in site 2.

| Site | Number of Users | Approximate Throughput per User | Total Throughput = Number of Users X Throughput per User (Maximum of 20 Mbps Per User) | Number of SEs Active/Active HA | GSLB |
|---|---|---|---|---|---|
| Site 1 | 250 | 600 Kbps | 150 Mbps | 1 core SE X 2 | 1 core SE |
| Site 2 | 250 | 600 Kbps | 150 Mbps | 1 core SE X 2 | 1 core SE |
| Total Cores = 6 | | | | 4 | 2 |
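The core totals in the last row can be reproduced with a short calculation: load balancing cores are the cores per SE multiplied by the SE count at each site, and GSLB adds one SE per site. The sketch below uses the values from the table; the `SiteSizing` class and its field names are illustrative and not part of any Avi API.

```python
from dataclasses import dataclass


@dataclass
class SiteSizing:
    name: str
    cores_per_se: int   # cores of each load balancing SE
    se_count: int       # SEs in the Active/Active HA pair
    gslb_cores: int     # cores of the site's GSLB SE

    @property
    def load_balancing_cores(self) -> int:
        return self.cores_per_se * self.se_count


# Values taken from the two-site example table.
sites = [
    SiteSizing("Site 1", cores_per_se=1, se_count=2, gslb_cores=1),
    SiteSizing("Site 2", cores_per_se=1, se_count=2, gslb_cores=1),
]

lb_cores = sum(site.load_balancing_cores for site in sites)
gslb_cores = sum(site.gslb_cores for site in sites)
total_cores = lb_cores + gslb_cores

print(f"Load balancing cores: {lb_cores}")
print(f"GSLB cores: {gslb_cores}")
print(f"Total cores: {total_cores}")
```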