Use this section as a reference to aid you while using VMware Cloud Director Container Service Extension as a service provider administrator.

You can view the errors in the Kubernetes Container Clusters UI in the Cluster Information page, in the Events tab.

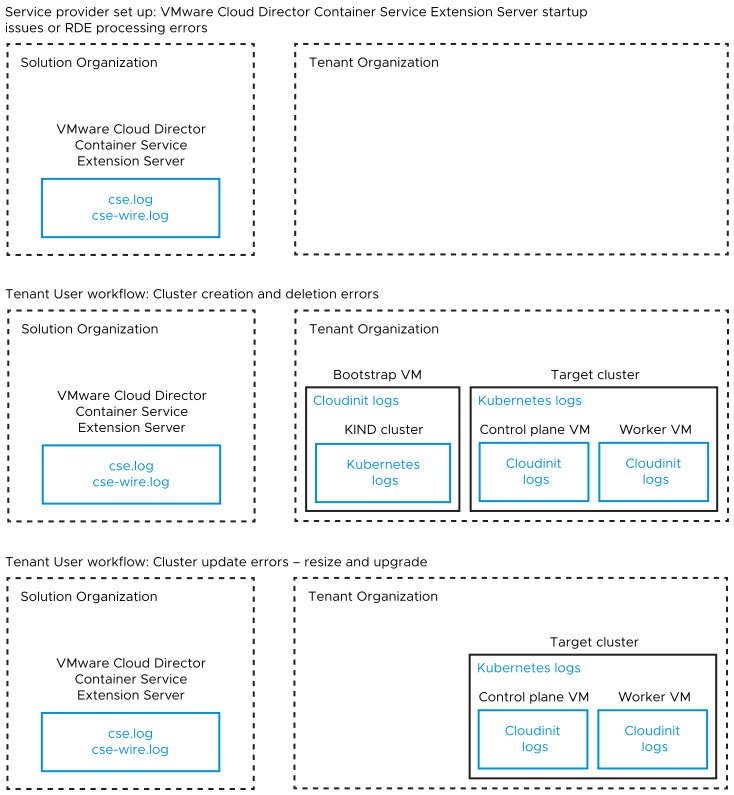

The VMware Cloud Director Container Service Extension 4.x stack involves more than one component, and these components run in different virtual machines. To troubleshoot an error, it is necessary to collect and analyze logs from several sources. The following diagram details the various sources of logs for Tanzu Kubernetes Grid cluster lifecycle management workflows.

- In the above diagram, Kubernetes logs can include CAPI, Kubernetes Cluster API Provider for VMware Cloud Director, Kubernetes Cloud Provider for VMware Cloud Director, Kubernetes Container Storage Interface driver for VMware Cloud Director, RDE Projector, and other pod logs.

- In the above diagram, cloud-init logs can include cloud-final.out, cloud-final.err, and cloud-****.

Troubleshooting through the Kubernetes Container Clusters UI

You can view the errors in the Kubernetes Container Clusters UI in the Cluster Information page, in the Events tab.

Log Analysis from VMware Cloud Director Container Service Extension Server

Log into the VMware Cloud Director Container Service Extension server VM, and collect and analyze the following logs:

- ~/cse.log
- ~/cse-wire.log, if it exists
- ~/cse.sh.log
- ~/cse-init-per-instance.log
- ~/config.toml

Note: It is necessary to remove the API token before you upload the logs.
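As a minimal sketch of the redaction step, the following shell function writes a copy of a TOML file with the value of one named key blanked out. The function name `redact_toml_key` and the key name `api_token` in the usage comment are placeholders, not confirmed VMware Cloud Director Container Service Extension names; check your own config.toml for the key that actually holds the token.

```shell
#!/bin/sh
# Hypothetical helper: copy a TOML file, replacing one key's value with a marker.
# Usage: redact_toml_key <key> <input file> <output file>
redact_toml_key() {
    key=$1
    in=$2
    out=$3
    # Match lines of the form `key = value` (leading whitespace allowed) and
    # replace everything after the equals sign. All other lines pass through.
    sed -E "s/^([[:space:]]*${key}[[:space:]]*=[[:space:]]*).*/\1\"REDACTED\"/" "$in" > "$out"
}

# Example (key name is an assumption):
#   redact_toml_key api_token "$HOME/config.toml" ./bundle/config.toml
```

Writing to a separate output file leaves the server's working configuration untouched, so only the copy placed in the bundle is altered.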

Log Analysis from Bootstrap VM

Log into the bootstrap VM for <cluster name>, and collect and analyze the following logs. If the VM does not exist, skip this step.

- /var/log/cloud-init.out
- /var/log/cloud-init.err
- /var/log/cloud-config.out
- /var/log/cloud-config.err
- /var/log/cloud-final.out
- /var/log/cloud-final.err
- /var/log/script_err.log

- Use the following scripts to collect and analyze the Kubernetes logs from the KIND cluster running on the bootstrap VM. For more information, see https://github.com/vmware/cloud-provider-for-cloud-director/tree/main/scripts.

  - Use kind get kubeconfig to retrieve the kubeconfig.
  - Run the script:

    >chmod u+x generate-k8s-log-bundle.sh
    >./generate-k8s-log-bundle.sh <kubeconfig of the KIND cluster>
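The cloud-init files listed in this section can be gathered in one pass. The sketch below copies whichever of the given files exist into a directory and skips the rest, since not every file is present on every VM. The function name `collect_logs` and the destination directory are illustrative choices, not part of any VMware tooling.

```shell
#!/bin/sh
# Hypothetical helper: copy each existing file into a destination directory,
# reporting (but not failing on) files that are missing.
# Usage: collect_logs <dest dir> <file>...
collect_logs() {
    dest=$1
    shift
    mkdir -p "$dest" || return 1
    for f in "$@"; do
        if [ -f "$f" ]; then
            cp "$f" "$dest/"
        else
            echo "skipped (not found): $f" >&2
        fi
    done
}

# Example:
#   collect_logs ./bootstrap-logs /var/log/cloud-init.out /var/log/cloud-init.err \
#       /var/log/cloud-final.out /var/log/cloud-final.err /var/log/script_err.log
#   tar czf bootstrap-logs.tar.gz bootstrap-logs
```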

Log Analysis from the Target Cluster

Download the kubeconfig of the target cluster from the Kubernetes Container Clusters UI, and run the following script with the kubeconfig set to the target cluster.

- Use the following script to collect and analyze the Kubernetes logs from the Target Cluster running on the Control Plane and Worker Node VMs. For more information see https://github.com/vmware/cloud-provider-for-cloud-director/tree/main/scripts.

  - Download the kubeconfig of the target cluster from the Kubernetes Container Clusters UI.
  - Run the script:

    >chmod u+x generate-k8s-log-bundle.sh
    >./generate-k8s-log-bundle.sh <kubeconfig of the target cluster>

Log Analysis from an Unhealthy Control Plane or Worker Node of the Target Cluster

Log into the problematic VM associated with the Kubernetes node, and collect and analyze the following events:

- /var/log/capvcd/customization/error.log
- /var/log/capvcd/customization/status.log
- /var/log/cloud-init-output.log
- /root/kubeadm.err
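For a first pass over these files, a case-insensitive search for error and failure lines can show where to start reading. The sketch below is one way to do that. The function name `scan_logs` and the search pattern are assumptions chosen for illustration, and an empty result does not prove that the node is healthy.

```shell
#!/bin/sh
# Hypothetical helper: print "file:line:text" for lines that mention errors or failures.
# Usage: scan_logs <file>...
scan_logs() {
    for f in "$@"; do
        # Skip files that are absent or unreadable on this node.
        [ -r "$f" ] || continue
        # -H prints the file name, -n the line number, -i ignores case.
        grep -H -n -i -E 'error|fail' "$f"
    done
    return 0
}

# Example:
#   scan_logs /var/log/capvcd/customization/error.log \
#       /var/log/cloud-init-output.log /root/kubeadm.err
```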

Analyze the associated Server Configuration and Cluster Info Entities

- VCDKEConfig RDE instance: Configuration details for the VMware Cloud Director Container Service Extension server.

  - Get the result of https://{{vcd}}/cloudapi/1.0.0/entities/types/vmware/VCDKEConfig/1.1.0.
  - Remove the GitHub personal token before you upload or share this entity.

- capvcdCluster RDE instance associated with the cluster. This represents the current status of the cluster.

  - Retrieve the RDE ID from the Cluster Information page in the Kubernetes Container Clusters UI.
  - Get the result of https://{{vcd}}/cloudapi/1.0.0/entities/{{cluster-id}}
  - If the RDE version is lower than 1.2, remove the API token and the kubeconfig before you upload or share the entity.

  Note: For RDEs of version >= 1.2, the API token and kubeconfig details are already hidden and encrypted. No action is necessary.
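A sketch of retrieving the two entities with curl follows. It assumes that a bearer token is already available in `VCD_TOKEN`, that the host name is in `VCD_HOST`, and that your VMware Cloud Director instance accepts API version 37.0. All three, along with the function names `rde_url` and `fetch_rde`, are assumptions to adjust for your environment.

```shell
#!/bin/sh
# Build the entity URL for a host and an entity path or RDE ID.
# The URL shape is taken from the steps in this section.
rde_url() {
    printf 'https://%s/cloudapi/1.0.0/entities/%s\n' "$1" "$2"
}

# Fetch one entity as JSON. VCD_TOKEN and the API version are assumptions.
# Usage: fetch_rde <host> <entity path or RDE ID>
fetch_rde() {
    curl -sS \
        -H "Accept: application/json;version=37.0" \
        -H "Authorization: Bearer ${VCD_TOKEN}" \
        "$(rde_url "$1" "$2")"
}

# Example:
#   fetch_rde "$VCD_HOST" "types/vmware/VCDKEConfig/1.1.0" > vcdkeconfig.json
#   fetch_rde "$VCD_HOST" "$CLUSTER_ID" > cluster-rde.json
```

The saved files are unredacted copies of the entities, so the token and kubeconfig removal described in this section still applies before you upload or share them.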