Design of the physical data center network includes defining the network topology for connecting physical switches and ESXi hosts, determining switch port settings for VLANs and link aggregation, and designing routing.

A software-defined network (SDN) both integrates with and uses components of the physical data center. SDN integrates with your physical network to support east-west transit in the data center and north-south transit to and from the SDDC networks.

Several typical data center network deployment topologies exist:

- Core-Aggregation-Access
- Leaf-Spine
- Hardware SDN

Leaf-Spine is the default data center network deployment topology used for VMware Cloud Foundation.

VLANs and Subnets for VMware Cloud Foundation

Configure your VLANs and subnets according to the guidelines and requirements for VMware Cloud Foundation.

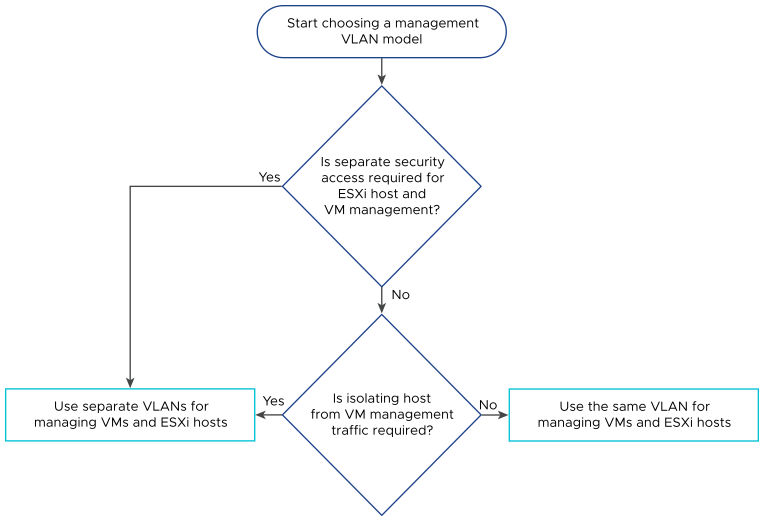

When designing the VLAN and subnet configuration for your VMware Cloud Foundation deployment, consider the following guidelines:

| All Deployment Topologies | Multiple Availability Zones | NSX Federation Between Multiple VMware Cloud Foundation Instances | Multi-Rack Compute VI Workload Domain Cluster |
|---|---|---|---|
| | | | Use the RFC 1918 IPv4 address space for these subnets and allocate one octet by rack and another by network function. |
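
The octet-per-rack and octet-per-function guideline can be illustrated with a short Python sketch. The rack numbers, function codes, the `10.<rack>.<function>.0/24` layout, and the `plan_subnets` helper are all assumptions chosen for illustration; they are not values prescribed by VMware Cloud Foundation.

```python
import ipaddress

# Hypothetical inputs: rack numbers and per-function octets are illustrative only.
RACKS = [1, 2]
FUNCTIONS = {"host-management": 10, "vmotion": 20, "vsan": 30, "host-overlay": 40}


def plan_subnets(base_octet=10, racks=RACKS, functions=FUNCTIONS):
    """Return {(rack, function): IPv4Network} laid out as <base>.<rack>.<function>.0/24."""
    plan = {}
    for rack in racks:
        for name, code in functions.items():
            net = ipaddress.ip_network(f"{base_octet}.{rack}.{code}.0/24")
            # Guard against a base octet that falls outside RFC 1918 space.
            if not net.is_private:
                raise ValueError(f"{net} is not in private address space")
            plan[(rack, name)] = net
    return plan


plan = plan_subnets()
for (rack, name), net in sorted(plan.items()):
    print(f"rack {rack}  {name:16} {net}")
```

With this layout, the second octet identifies the rack and the third identifies the network function, so a subnet can be recognized from its address alone.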

The VLANs and subnets that you deploy for VMware Cloud Foundation must conform to the following requirements, depending on the VMware Cloud Foundation topology:

| Function | VMware Cloud Foundation Instances with a Single Availability Zone | VMware Cloud Foundation Instances with Multiple Availability Zones |
|---|---|---|
| VM management | | |
| Host management - first availability zone | | |
| vSphere vMotion - first availability zone | | |
| vSAN - first availability zone | | |
| Host overlay - first availability zone | | |
| NFS | | |
| Uplink01 | | |
| Uplink02 | | |
| Edge overlay | | |
| Host management - second availability zone | | |
| vSphere vMotion - second availability zone | | |
| vSAN - second availability zone | | |
| Host overlay - second availability zone | | |
| Edge RTEP | | |
| Management and Witness - witness appliance at a third location | | |

| Function | VMware Cloud Foundation Instances with Multi-Rack Compute VI Workload Domain Cluster | VMware Cloud Foundation Instances with Multi-Rack NSX Edge Availability |
|---|---|---|
| VM management | | |
| Host management | | |
| vSphere vMotion | | |
| vSAN | | |
| Host overlay | | |
| NFS | | |
| Uplink01 | | |
| Uplink02 | | |
| Edge overlay | | |

Leaf-Spine Physical Network Design Requirements and Recommendations for VMware Cloud Foundation

Leaf-Spine is the default data center network deployment topology used for VMware Cloud Foundation. Consider network bandwidth, trunk port configuration, jumbo frames and routing configuration for NSX in a deployment with a single or multiple VMware Cloud Foundation instances.

Leaf-Spine Physical Network Logical Design

Leaf-Spine Physical Network Design Requirements and Recommendations

The requirements and recommendations for the leaf-spine network configuration determine the physical layout and use of VLANs. They also include requirements and recommendations on jumbo frames, and on network-related requirements such as DNS, NTP and routing.

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-NET-REQD-CFG-001 | Do not use EtherChannel (LAG, LACP, or vPC) configuration for ESXi host uplinks. | | None. |
| VCF-NET-REQD-CFG-002 | Use VLANs to separate physical network functions. | | Requires uniform configuration and presentation on all the trunks that are made available to the ESXi hosts. |
| VCF-NET-REQD-CFG-003 | Configure the VLANs as members of an 802.1Q trunk. | All VLANs become available on the same physical network adapters on the ESXi hosts. | Optionally, the management VLAN can act as the native VLAN. |
| VCF-NET-REQD-CFG-004 | Set the MTU size to at least 1,700 bytes (recommended 9,000 bytes for jumbo frames) on the physical switch ports, vSphere Distributed Switches, vSphere Distributed Switch port groups, and N-VDS switches that support the following traffic types: | | When adjusting the MTU packet size, you must also configure the entire network path (VMkernel network adapters, virtual switches, physical switches, and routers) to support the same MTU packet size. In an environment with multiple availability zones, the MTU must be configured on the entire network path between the zones. |
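
The end-to-end MTU implication of VCF-NET-REQD-CFG-004 can be expressed as a simple consistency check. The following Python sketch assumes you have already collected the configured MTU of each component along a path; the component names and the `check_path_mtu` helper are hypothetical and do not correspond to any VMware API.

```python
REQUIRED_MIN_MTU = 1700   # minimum stated in VCF-NET-REQD-CFG-004
RECOMMENDED_MTU = 9000    # jumbo frame value recommended in the same requirement


def check_path_mtu(path):
    """Validate a path given as a list of (component_name, mtu) tuples.

    Returns a list of human-readable problems; an empty list means the
    path meets the minimum and uses one MTU value end to end.
    """
    problems = []
    for name, mtu in path:
        if mtu < REQUIRED_MIN_MTU:
            problems.append(f"{name}: MTU {mtu} is below {REQUIRED_MIN_MTU}")
    distinct = sorted({mtu for _, mtu in path})
    if len(distinct) > 1:
        problems.append(f"inconsistent MTU values along the path: {distinct}")
    return problems


# Hypothetical path: one switch port was left at the 1,500-byte default.
example_path = [
    ("vmk-vsan", RECOMMENDED_MTU),
    ("vds-portgroup", RECOMMENDED_MTU),
    ("tor-switch-port", 1500),
]
for problem in check_path_mtu(example_path):
    print(problem)
```

A single component left at a smaller MTU is reported twice here: once for falling below the minimum and once for breaking consistency along the path.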

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-NET-L3MR-REQD-CFG-001 | For a multi-rack compute VI workload domain cluster, provide separate VLANs per rack for each network function. | A Layer 3 leaf-spine fabric has a Layer 3 boundary at the leaf switches in each rack creating a Layer 3 boundary between racks. | Requires additional VLANs for each rack. |
| VCF-NET-L3MR-REQD-CFG-002 | For a multi-rack compute VI workload domain cluster, the subnets for each network per rack must be routable and reachable to the leaf switches in the other racks. | Ensures the traffic for each network can flow between racks. | Requires additional physical network configuration to make networks routable between racks. |
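
For per-rack subnets to be routable between racks, they cannot overlap. A minimal Python sketch of that sanity check follows; the `find_overlaps` helper and the sample addresses are assumptions for illustration, and the check covers only address overlap, not actual reachability in the fabric.

```python
import ipaddress
from itertools import combinations


def find_overlaps(subnets):
    """Return pairs of (rack, function) keys whose subnets overlap.

    subnets maps (rack, function) to a CIDR string.
    """
    nets = {key: ipaddress.ip_network(cidr) for key, cidr in subnets.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


# Hypothetical plan in which the rack 2 vMotion subnet was carved out of
# the rack 2 vSAN range by mistake.
sample = {
    (1, "vsan"): "10.1.30.0/24",
    (2, "vsan"): "10.2.30.0/24",
    (2, "vmotion"): "10.2.30.128/25",
}
for a, b in find_overlaps(sample):
    print(f"overlap: rack {a[0]} {a[1]} and rack {b[0]} {b[1]}")
```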

| Requirement ID | Design Requirement | Justification | Implication |
|---|---|---|---|
| VCF-NET-REQD-CFG-005 | Set the MTU size to at least 1,500 bytes (1,700 bytes preferred; 9,000 bytes recommended for jumbo frames) on the components of the physical network between the VMware Cloud Foundation instances for the following traffic types. | | When adjusting the MTU packet size, you must also configure the entire network path, that is, virtual interfaces, virtual switches, physical switches, and routers to support the same MTU packet size. |
| VCF-NET-REQD-CFG-006 | Ensure that the latency between VMware Cloud Foundation instances that are connected in an NSX Federation is less than 500 ms. | A latency lower than 500 ms is required for NSX Federation. | None. |
| VCF-NET-REQD-CFG-007 | Provide a routed connection between the NSX Manager clusters in VMware Cloud Foundation instances that are connected in an NSX Federation. | Configuring NSX Federation requires connectivity between the NSX Global Manager instances, NSX Local Manager instances, and NSX Edge clusters. | You must assign unique routable IP addresses for each fault domain. |

Recommendation ID |

Design Recommendation |

Justification |

Implication |

|---|---|---|---|

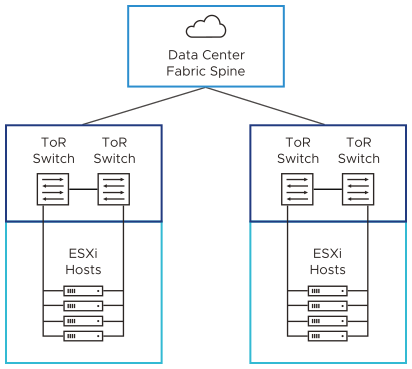

VCF-NET-RCMD-CFG-001 |

Use two ToR switches for each rack. |

Supports the use of two 10-GbE (25-GbE or greater recommended) links to each server, provides redundancy and reduces the overall design complexity. |

Requires two ToR switches per rack which might increase costs. |

VCF-NET-RCMD-CFG-002 |

Implement the following physical network architecture:

|

|

|

VCF-NET-RCMD-CFG-003 |

Use a physical network that is configured for BGP routing adjacency. |

|

Requires BGP configuration in the physical network. |

VCF-NET-RCMD-CFG-004 |

Assign persistent IP configurations for NSX tunnel endpoints (TEPs) that use static IP pools instead of dynamic IP pool addressing. |

|

Adding more hosts to the cluster may require the static IP pools to be increased.. |

VCF-NET-RCMD-CFG-005 |

Configure the trunk ports connected to ESXi NICs as trunk PortFast. |

Reduces the time to transition ports over to the forwarding state. |

Although this design does not use the STP, switches usually have STP configured by default. |

VCF-NET-RCMD-CFG-006 |

Configure VRRP, HSRP, or another Layer 3 gateway availability method for these networks.

|

Ensures that the VLANs that are stretched between availability zones are connected to a highly- available gateway. Otherwise, a failure in the Layer 3 gateway will cause disruption in the traffic in the SDN setup. |

Requires configuration of a high availability technology for the Layer 3 gateways in the data center. |

VCF-NET-RCMD-CFG-007 |

Use separate VLANs for the network functions for each cluster. |

Reduces the size of the Layer 2 broadcast domain to a single vSphere cluster. |

Increases the overall number of VLANs that are required for a VMware Cloud Foundation instance. |
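
The implication of VCF-NET-RCMD-CFG-004, that static TEP pools can run out as hosts are added, can be checked with simple arithmetic. The Python sketch below is illustrative only: the pool range, the helper names, and especially the default of two TEPs per host are assumptions, so adjust them to match your own host configuration.

```python
import ipaddress


def pool_size(start, end):
    """Number of addresses in an inclusive static IP pool range."""
    return int(ipaddress.ip_address(end)) - int(ipaddress.ip_address(start)) + 1


def pool_shortfall(start, end, hosts, teps_per_host=2):
    """Return how many more addresses the pool needs (0 if it is large enough).

    teps_per_host=2 is an assumed value, not a figure from this design.
    """
    needed = hosts * teps_per_host
    return max(0, needed - pool_size(start, end))


# Hypothetical pool of 20 addresses.
start, end = "172.16.14.10", "172.16.14.29"
print(pool_shortfall(start, end, hosts=8))   # 16 needed, pool holds 20
print(pool_shortfall(start, end, hosts=12))  # 24 needed, pool holds 20
```

Running a check like this before expanding a cluster shows whether the static pool must be extended first.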

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-NET-DES-RCMD-CFG-001 | Implement the following physical network architecture: | Supports the requirements for high bandwidth and packets per second for large-scale deployments. | Requires 100-GbE network switches. |

| Recommendation ID | Design Recommendation | Justification | Implication |
|---|---|---|---|
| VCF-NET-RCMD-CFG-008 | Provide BGP routing between all VMware Cloud Foundation instances that are connected in an NSX Federation setup. | BGP is the supported routing protocol for NSX Federation. | None. |
| VCF-NET-RCMD-CFG-009 | Ensure that the latency between VMware Cloud Foundation instances that are connected in an NSX Federation is less than 150 ms for workload mobility. | A latency lower than 150 ms is required for the following features: | None. |
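
The two latency thresholds in this design (less than 500 ms for NSX Federation, less than 150 ms for workload mobility) can be combined into one classification. The Python sketch below assumes you supply a measured round-trip latency in milliseconds; the `classify_latency` function and its return labels are hypothetical names used only for illustration.

```python
FEDERATION_MAX_MS = 500  # VCF-NET-REQD-CFG-006: must be less than 500 ms
MOBILITY_MAX_MS = 150    # VCF-NET-RCMD-CFG-009: less than 150 ms for workload mobility


def classify_latency(rtt_ms):
    """Classify a measured inter-instance latency against both thresholds."""
    if rtt_ms >= FEDERATION_MAX_MS:
        return "unsupported"
    if rtt_ms >= MOBILITY_MAX_MS:
        return "federation-only"
    return "federation-and-mobility"


# Hypothetical measurements between instance pairs.
for rtt in (40, 220, 650):
    print(f"{rtt} ms -> {classify_latency(rtt)}")
```

Both limits are strict, so a measurement of exactly 150 ms or 500 ms falls into the more restrictive category.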