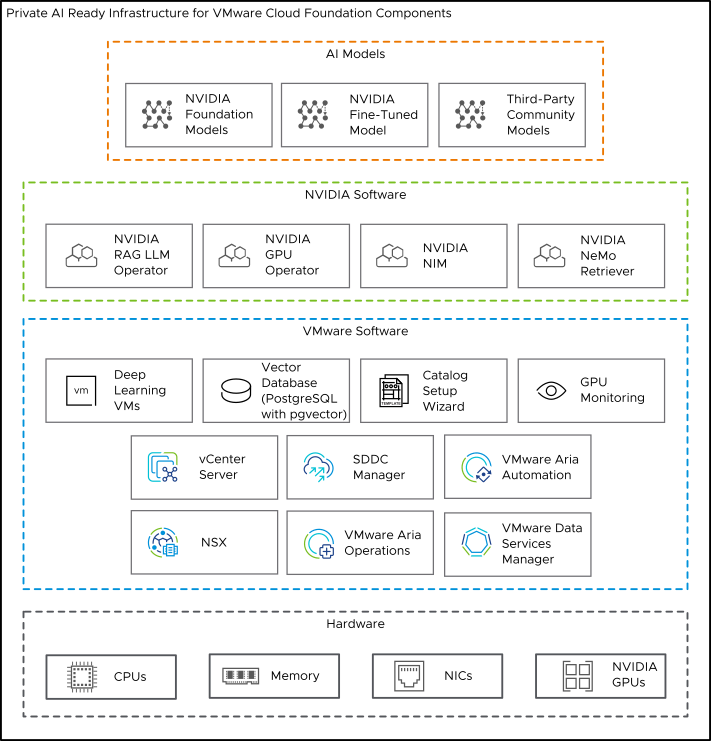

VMware Private AI Foundation with NVIDIA is an add-on solution for VMware Cloud Foundation. It consists of multiple components, delivered by VMware and NVIDIA, that you can use to deploy and manage your AI workloads.

Built on VMware Cloud Foundation, VMware Private AI Foundation with NVIDIA is an integrated solution designed to accelerate enterprise adoption of generative AI (GenAI). It supports open large language models (LLMs), AI frameworks, and software that developers use to build, customize, and deploy generative AI models with billions of parameters.

VMware Private AI Foundation with NVIDIA supports two use cases:

- Development use case

  Data scientists dealing with complex machine learning tasks and GPUs face significant challenges in managing software versions and dependencies. VMware Private AI Foundation with NVIDIA includes custom Deep Learning VM images that are pre-configured with popular frameworks and optimized for deep learning workloads. These deep learning VMs align with the underlying infrastructure to facilitate inferencing and simplify AI developer workflows.

  Cloud administrators and DevOps engineers can provision AI workloads, including Retrieval-Augmented Generation (RAG), as deep learning virtual machines. Data scientists can then use these virtual machines for AI development.

- Production use case

  Cloud administrators can provide DevOps engineers with a VMware Private AI Foundation with NVIDIA environment for provisioning production-ready AI workloads on Tanzu Kubernetes Grid (TKG) clusters on vSphere with Tanzu.

VMware Cloud Foundation automation enables the initialization and deployment of GPU-enabled VMs for specific use cases, including options for NGC packages and TKG clusters. Cloud administrators can use the Private AI Automation Services Wizard in VMware Aria Automation to set up self-service catalog items for various GenAI applications.

Components Added by VMware Private AI Foundation with NVIDIA

VMware Private AI Foundation with NVIDIA adds the following components to the private AI infrastructure built on vSphere and vSphere with Tanzu, which is discussed in Detailed Design of Private AI Ready Infrastructure for VMware Cloud Foundation.

- Deep Learning VM images.

- Self-service catalog items in Service Broker in VMware Aria Automation for provisioning deep learning VMs and AI-accelerated Tanzu Kubernetes Grid clusters.

- GPU-related metrics in VMware Aria Operations for monitoring GPU use on ESXi hosts with NVIDIA GPUs and across vSphere clusters with such hosts.

- Vector databases, managed by VMware Data Services Manager, for use in Retrieval-Augmented Generation (RAG) workloads.
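
To illustrate the role a vector database plays in a RAG workload, the following is a minimal sketch of the retrieval step: documents are stored as embedding vectors, and the ones closest to the query vector are returned as context for the LLM. The document IDs, the three-dimensional vectors, and the `top_k` helper are hypothetical and stand in for a real embedding model and a real database query. They do not reflect how VMware Data Services Manager or any specific vector database is implemented.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, store, k=2):
    """Return the k document IDs whose embeddings are most similar to the query."""
    ranked = sorted(
        store,
        key=lambda doc_id: cosine_similarity(query_vec, store[doc_id]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical embeddings; real ones come from an embedding model
# and typically have hundreds or thousands of dimensions.
store = {
    "gpu-sizing-guide": [0.9, 0.1, 0.0],
    "holiday-policy": [0.0, 0.2, 0.9],
    "vgpu-profiles": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]

# IDs of the documents that would be passed to the LLM as context.
context_ids = top_k(query, store, k=2)
print(context_ids)
```

A production vector database performs the same ranking with approximate nearest-neighbor indexes so that it scales beyond a handful of documents.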

Deep Learning VM Images

The deep learning VM images included in VMware Private AI Foundation with NVIDIA come preconfigured with leading machine learning libraries, DL workloads, and tools. They are specifically optimized and tested by NVIDIA and VMware to leverage GPU acceleration as part of a VMware Cloud Foundation environment.

Deep learning VM images are delivered as vSphere VM templates, hosted and published by VMware in a content library. You can use these images to deploy a deep learning VM by using the vSphere Client or VMware Aria Automation.

The content library with deep learning VM images for VMware Private AI Foundation with NVIDIA is available at https://packages.vmware.com/dl-vm/lib.json. In a connected environment, you create a subscribed content library that points to this URL. In a disconnected environment, you create a local content library and upload images that you download from the central content library.

There are several ways to deploy a deep learning VM:

- Directly in vSphere from the content library.

- As a virtual machine in the VM Service of the Supervisor by using the kubectl command line tool.

- By using the Service Broker catalog in VMware Aria Automation.
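
For the kubectl option, a deep learning VM is described as a `VirtualMachine` resource of the VM Service. The following sketch generates such a manifest as JSON. It assumes the `vmoperator.vmware.com/v1alpha1` API version, so check which version your Supervisor serves. The VM name, namespace, image name, VM class, and storage class are hypothetical placeholders. A real deep learning VM usually also needs its OVF properties passed through VM metadata, which this sketch omits.

```python
import json


def deep_learning_vm_manifest(name, namespace, image_name, vm_class, storage_class):
    """Build a minimal VM Service VirtualMachine manifest as a dict."""
    return {
        # Assumption: v1alpha1 of the VM Operator API; newer Supervisors
        # may serve later versions with a different spec layout.
        "apiVersion": "vmoperator.vmware.com/v1alpha1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "imageName": image_name,         # image synced from the content library
            "className": vm_class,           # VM class that carries the GPU device
            "storageClass": storage_class,   # storage policy assigned to the namespace
            "powerState": "poweredOn",
        },
    }


# All values below are hypothetical placeholders.
manifest = deep_learning_vm_manifest(
    name="dl-vm-example",
    namespace="ai-dev",
    image_name="example-deep-learning-image",
    vm_class="example-gpu-class",
    storage_class="example-storage-policy",
)
print(json.dumps(manifest, indent=2))
```

Because kubectl accepts JSON as well as YAML, the printed output can be saved to a file and applied with `kubectl apply -f` after you replace the placeholders with values from your environment.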

You can deploy a deep learning VM with one of the following DL workloads from NVIDIA.

| Software Bundle | Description |
|---|---|
| PyTorch | The PyTorch NGC Container is optimized for GPU acceleration, and contains a validated set of libraries that enable and optimize GPU performance. This container also contains software for accelerating ETL (DALI, RAPIDS), training (cuDNN, NCCL), and inference (TensorRT) workloads. |
| TensorFlow | The TensorFlow NGC Container is optimized for GPU acceleration, and contains a validated set of libraries that enable and optimize GPU performance. This container might also contain modifications to the TensorFlow source code in order to maximize performance and compatibility. The container also contains software for accelerating ETL (DALI, RAPIDS), training (cuDNN, NCCL), and inference (TensorRT) workloads. |
| CUDA Samples | This is a collection of containers to run CUDA workloads on the GPUs. The collection includes containerized CUDA samples, for example, vectorAdd (to demonstrate vector addition), nbody (a gravitational n-body simulation), and others. You can use these containers to validate the software configuration of GPUs in the system or simply to run some example workloads. |
| DCGM Exporter | NVIDIA Data Center GPU Manager (DCGM) is a suite of tools for managing and monitoring NVIDIA datacenter GPUs in cluster environments. Monitoring stacks usually consist of a collector, a time-series database to store metrics, and a visualization layer. DCGM-Exporter is a Prometheus exporter that monitors GPU health and collects GPU metrics. |
| Triton Inference Server | Triton Inference Server provides a cloud and edge inferencing solution optimized for both CPUs and GPUs. Triton supports HTTP/REST and gRPC protocols that allow remote clients to request inferencing for any model being managed by the server. For edge deployments, Triton is available as a shared library with a C API that allows the full functionality of Triton to be included directly in an application. |
| Generative AI Workflow - RAG | This reference solution demonstrates how to find business value in generative AI by augmenting an existing foundational LLM to fit your business use case. This is done using RAG, which retrieves facts from an enterprise knowledge base containing a company’s business data. Pay special attention to the ways in which you can augment an LLM with your domain-specific business data to create AI applications that are agile and responsive to new developments. |
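
As an illustration of the DCGM Exporter workload, the following sketch parses metrics in the Prometheus text exposition format and extracts per-GPU utilization. The sample text is made up for this example, including the UUID values. `DCGM_FI_DEV_GPU_UTIL` and `DCGM_FI_DEV_GPU_TEMP` are DCGM field names that the exporter commonly publishes, but the exact metrics and labels depend on how the exporter is configured.

```python
import re

# Hypothetical scrape output; a real exporter returns many more series.
SAMPLE = """
# HELP DCGM_FI_DEV_GPU_UTIL GPU utilization (in %).
# TYPE DCGM_FI_DEV_GPU_UTIL gauge
DCGM_FI_DEV_GPU_UTIL{gpu="0",UUID="GPU-aaaa"} 87
DCGM_FI_DEV_GPU_UTIL{gpu="1",UUID="GPU-bbbb"} 12
DCGM_FI_DEV_GPU_TEMP{gpu="0",UUID="GPU-aaaa"} 64
"""

LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def parse_metrics(text):
    """Parse exposition-format lines into (name, labels, value) tuples."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        series, value = line.rsplit(" ", 1)
        name, _, label_part = series.partition("{")
        labels = dict(LABEL_RE.findall(label_part))
        samples.append((name, labels, float(value)))
    return samples


def gpu_utilization(samples):
    """Map GPU index to utilization percentage."""
    return {
        labels["gpu"]: value
        for name, labels, value in samples
        if name == "DCGM_FI_DEV_GPU_UTIL"
    }


utilization = gpu_utilization(parse_metrics(SAMPLE))
print(utilization)
```

In practice, Prometheus scrapes and stores these metrics itself. A parser like this is useful mainly for a quick check that the exporter in a deep learning VM reports the GPUs you expect.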

For information on deep learning VM images in VMware Private AI Foundation with NVIDIA, see About Deep Learning VM Images in VMware Private AI Foundation with NVIDIA and Deep Learning Workloads in VMware Private AI Foundation with NVIDIA in the VMware Private AI Foundation with NVIDIA Guide.
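
As an illustration of the HTTP/REST protocol that Triton Inference Server supports, the following sketch builds an inference request in the shape defined by the KServe v2 inference protocol, which Triton implements. The server address, model name, input tensor name, shape, and data are hypothetical and must match a model that your server actually serves. The request is only constructed here, not sent.

```python
import json
import urllib.request


def build_infer_request(base_url, model_name, input_name, shape, datatype, data):
    """Build a POST request for the v2 inference endpoint of a model."""
    body = {
        "inputs": [
            {
                "name": input_name,
                "shape": shape,
                "datatype": datatype,
                "data": data,
            }
        ]
    }
    return urllib.request.Request(
        url=f"{base_url}/v2/models/{model_name}/infer",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical endpoint and model; replace with values from your deployment.
request = build_infer_request(
    base_url="http://triton.example.internal:8000",
    model_name="my_model",
    input_name="INPUT0",
    shape=[1, 4],
    datatype="FP32",
    data=[0.1, 0.2, 0.3, 0.4],
)
print(request.full_url)
```

To send the request, pass it to `urllib.request.urlopen` from a machine that can reach the server. The response is a JSON document with an `outputs` list in the same tensor layout.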