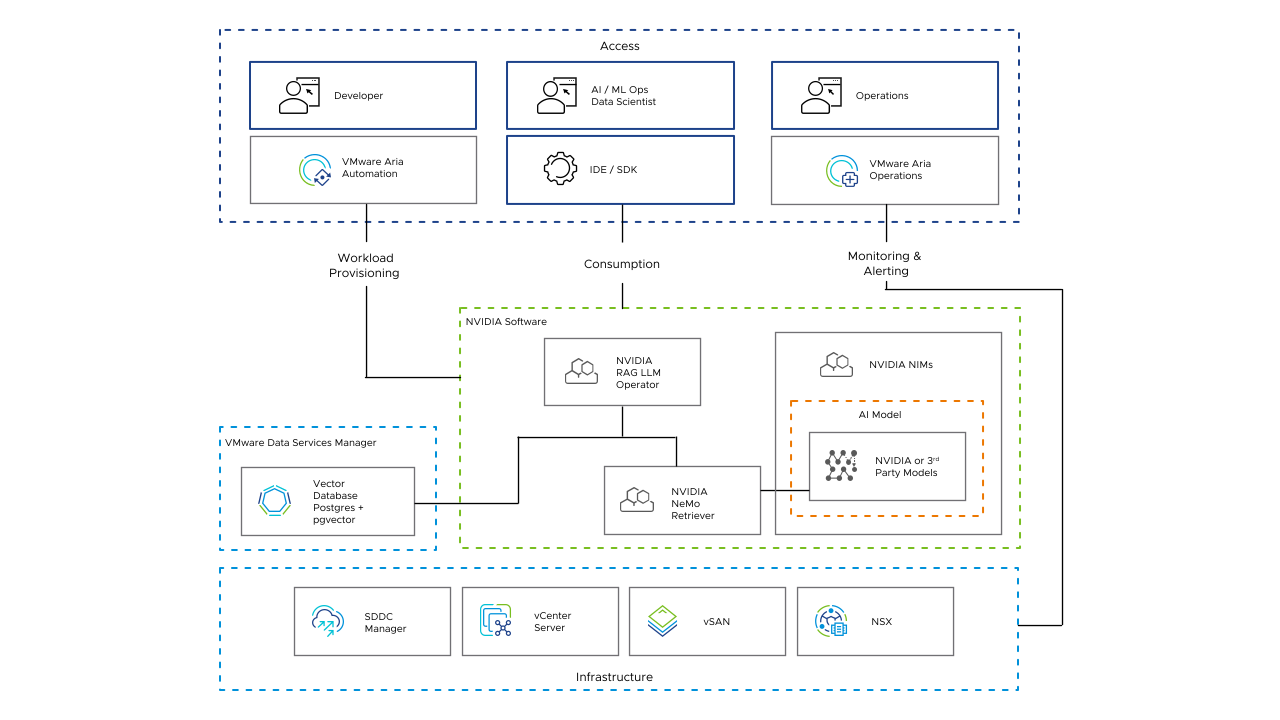

The logical design consists of multiple elements that you use to deploy and manage the infrastructure for running AI and GPU-enabled high-performance workloads.

AI infrastructure consists of the hardware and software elements essential for fine-tuning models, serving them for inference, and running other AI workloads. This includes specialized processors such as GPUs (hardware) and optimization and deployment tools (software), all managed by VMware Cloud Foundation. The software components within VMware Cloud Foundation provide the compute, network, and storage functionality for hosting virtual machines and container-based workloads by using vSphere with Tanzu, NSX, and vSAN.

You activate and configure vSphere with Tanzu on the shared edge and workload vSphere cluster in the VI workload domain. NSX Edge nodes provide load balancing, north-south connectivity, and all required networking for the Kubernetes services. The ESXi hosts in the shared edge and workload vSphere cluster are prepared as NSX transport nodes to provide distributed routing and firewall services to your customer workloads. The hosts also provide GPU resources to those workloads by using technologies such as NVIDIA vGPU or Multi-Instance GPU (MIG).
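As a rough illustration of how a container workload might consume these GPU resources, the following Python sketch builds a Kubernetes Pod manifest that requests one GPU. It assumes that the NVIDIA device plugin (for example, installed through the NVIDIA GPU Operator) is running in the cluster and advertises the extended resource `nvidia.com/gpu`. The helper `gpu_pod_manifest`, the pod name, and the container image are hypothetical placeholders and are not part of this design.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpu_count: int = 1,
                     resource_name: str = "nvidia.com/gpu") -> dict:
    """Build a Pod manifest that requests GPUs as an extended resource.

    Assumption: resource_name is the name advertised by the NVIDIA device
    plugin. MIG slices may be exposed under a different resource name,
    depending on how the plugin is configured.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": name,
                "image": image,
                # Extended resources such as GPUs are requested under limits.
                "resources": {"limits": {resource_name: gpu_count}},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, used only for illustration.
    manifest = gpu_pod_manifest("gpu-smoke-test",
                                "registry.example.com/cuda-sample:latest")
    print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the printed manifest could be piped to `kubectl apply -f -` against a cluster where the GPU resource is available.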

The Private AI ready infrastructure environment consists of the following elements.

- ESXi Host Vendor Components

  Vendor-provided ESXi components, such as VIB files, enable the GPUs and provide communication channels for metrics and detailed information about the vendor's devices.

- Supervisor

  The Supervisor is a unique kind of Kubernetes cluster that uses ESXi hosts as worker nodes through an additional process, called Spherelet, that is created on each host.

- Harbor Supervisor Service

  Harbor is deployed as a service in a Supervisor.

- VM Service

  The VM Service in vSphere with Tanzu enables DevOps engineers to deploy and run VMs, in addition to containers, in a shared Kubernetes environment. Both containers and VMs share the same vSphere namespace resources and can be managed from a single vSphere with Tanzu interface.

- Tanzu Kubernetes Grid Service

  The Tanzu Kubernetes Grid Service deploys Tanzu Kubernetes Grid clusters as Photon OS appliances on top of the Supervisor.

- Tanzu Kubernetes Grid Cluster

  An upstream-compliant Kubernetes cluster for running container workloads.

- Tanzu vSphere Pods

  The Container Runtime for ESXi (CRX) uses the same hardware virtualization techniques as VMs and has a VM boundary around it. A direct boot technique allows the Linux guest of CRX to initiate the main init process without passing through kernel initialization. As a result, vSphere Pods boot nearly as fast as containers while running as standalone VMs on top of the Supervisor.

- Kubernetes Operators

  Kubernetes Operators on the Tanzu Kubernetes Grid clusters automate the installation of drivers for GPUs and high-speed interconnect devices.

- VMware Data Services Manager

  VMware Data Services Manager offers database management in vSphere. It supports on-demand provisioning and automated management of PostgreSQL and MySQL databases in a vSphere environment.
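To make the layering of these elements easier to follow, the relationships described in the list can be sketched as a small data model. This is an illustrative Python sketch only: the `Element` class, the `depends_on` field, and the `hosting_chain` helper are hypothetical names, and the dependency of each element is inferred from the descriptions above rather than taken from any product API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Element:
    name: str
    # Element that deploys or hosts this one; None for a root element.
    depends_on: Optional[str]


ELEMENTS = [
    Element("ESXi host", None),
    Element("ESXi Host Vendor Components", "ESXi host"),
    Element("Supervisor", "ESXi host"),
    Element("Harbor Supervisor Service", "Supervisor"),
    Element("VM Service", "Supervisor"),
    Element("Tanzu Kubernetes Grid Service", "Supervisor"),
    Element("Tanzu vSphere Pods", "Supervisor"),
    Element("Tanzu Kubernetes Grid Cluster", "Tanzu Kubernetes Grid Service"),
    Element("Kubernetes Operators", "Tanzu Kubernetes Grid Cluster"),
    # The list does not state a hosting layer for this element.
    Element("VMware Data Services Manager", None),
]


def hosting_chain(name: str, elements: List[Element] = ELEMENTS) -> List[str]:
    """Return the element followed by everything it depends on, up to a root."""
    by_name = {e.name: e for e in elements}
    chain: List[str] = []
    current: Optional[str] = name
    while current is not None:
        if current in chain:
            raise ValueError(f"dependency cycle at {current!r}")
        chain.append(current)
        current = by_name[current].depends_on
    return chain


if __name__ == "__main__":
    print(" -> ".join(hosting_chain("Kubernetes Operators")))
```

Walking the chain for Kubernetes Operators, for example, passes through the Tanzu Kubernetes Grid cluster, the Tanzu Kubernetes Grid Service, and the Supervisor before reaching the ESXi host, which mirrors the order in which the layers must be in place.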

Private AI ready infrastructure, based on VMware Cloud Foundation, serves as the foundational building block for running joint private AI solutions with partners such as NVIDIA.