If you use Kubernetes as your orchestration framework, you can install and deploy the DSM Consumption Operator to enable native, self-service consumption of VMware Data Services Manager from your Kubernetes environment.

Before you begin, familiarize yourself with the following concepts and terminology related to the DSM Consumption Operator.

- Consumption Operator - The operator used to manage resources against the DSM Gateway.

- Cloud Administrator - An administrator of a Kubernetes cluster that consumes DSM in a self-service manner from within Kubernetes clusters. The Cloud Administrator is responsible for installing and setting up the Consumption Operator. The Cloud Administrator account maps to a DSM user account within DSM. For information about the DSM user, see About Roles and Responsibilities and Configuring VMware Data Services Manager in the vSphere Client.

- Cloud User - A developer who uses a Kubernetes cluster to consume database clusters from DSM in a self-service manner.

- DSM Gateway - The gateway implementation based on Kubernetes. It is used within DSM to provide a Kubernetes API for infrastructure policies, Postgres clusters, and MySQL clusters.

- Consumption Cluster - The Kubernetes cluster where the consumption operator and custom resources are deployed to use the DSM API for self-service.

- Infrastructure Policy - Allows vSphere administrators to define and set limits to specific compute, storage, and network resources that DSM database workloads can use.

Supported DSM Version

The following table maps the Consumption Operator version with the DSM version.

| Consumption Operator | DSM Version |
|---|---|
| 1.0.0 | 2.0.x |

Workflow

Follow these steps to install and configure the DSM Consumption Operator:

Step 1: Satisfy the Requirements.

Step 2: Install the DSM Consumption Operator. This task is performed by the Cloud Administrator.

Step 3: Configure a User Namespace. This task is performed by the Cloud Administrator.

Step 4: Create a Database Cluster. This task is performed by Cloud Users.

Step 5: Connect to the Database Cluster.

Step 1: Requirements

Before you begin installing and deploying the DSM Consumption Operator, ensure that the following requirements are met:

- Helm v3.8+

- You have a DSM provider deployed and configured in your organization. For DSM installation, follow the steps in Installing and Configuring VMware Data Services Manager.

- You have a running Kubernetes cluster.
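You can check the Helm requirement in a script before you start. The following is a minimal sketch that assumes a version string in the `vMAJOR.MINOR.PATCH` form printed by `helm version --template '{{.Version}}'`. `helm_version_ok` is a hypothetical helper and is not part of Helm or DSM.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if a Helm version string such as "v3.14.2"
# satisfies the "Helm v3.8+" requirement, and fails otherwise.
helm_version_ok() {
  local ver major rest minor
  ver=${1#v}          # drop the leading "v" that Helm prints
  major=${ver%%.*}    # text before the first dot, e.g. "3"
  rest=${ver#*.}      # text after the first dot, e.g. "14.2"
  minor=${rest%%.*}   # text before the next dot, e.g. "14"
  # Accept any major version above 3, or 3.x with x >= 8.
  [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 8 ]; }
}

# Example (requires Helm on PATH):
#   helm_version_ok "$(helm version --template '{{.Version}}')" || echo "Helm v3.8+ is required" >&2
```

The check compares numbers, not strings, so v3.14 correctly counts as newer than v3.8.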

Step 2: Install the DSM Consumption Operator

This task is performed by the Cloud Administrator.

Prerequisites

As a Cloud Administrator, obtain the following details from the DSM Administrator:

- DSM username and password for the Cloud Administrator.

- DSM provider URL.

- TLS certificate for secure communication between the Consumption Operator and the DSM provider. Save this certificate in a file named 'root_ca' in the 'consumption/' directory. These names are only examples. You can use other names, but make sure that you use them consistently in the helm and kubectl commands during the installation. For more details, see Getting Provider VM Certificate.

- List of infrastructure policies that are supported by the DSM provider and are allowed to be used in the given consumption cluster.

- List of backup locations that are supported by the DSM provider and are allowed to be used in the given consumption cluster.

Getting Provider VM Certificate

If you are a DSM Administrator, you need to share the provider VM CA certificate with the Cloud Administrator. Use one of the following methods to get this certificate.

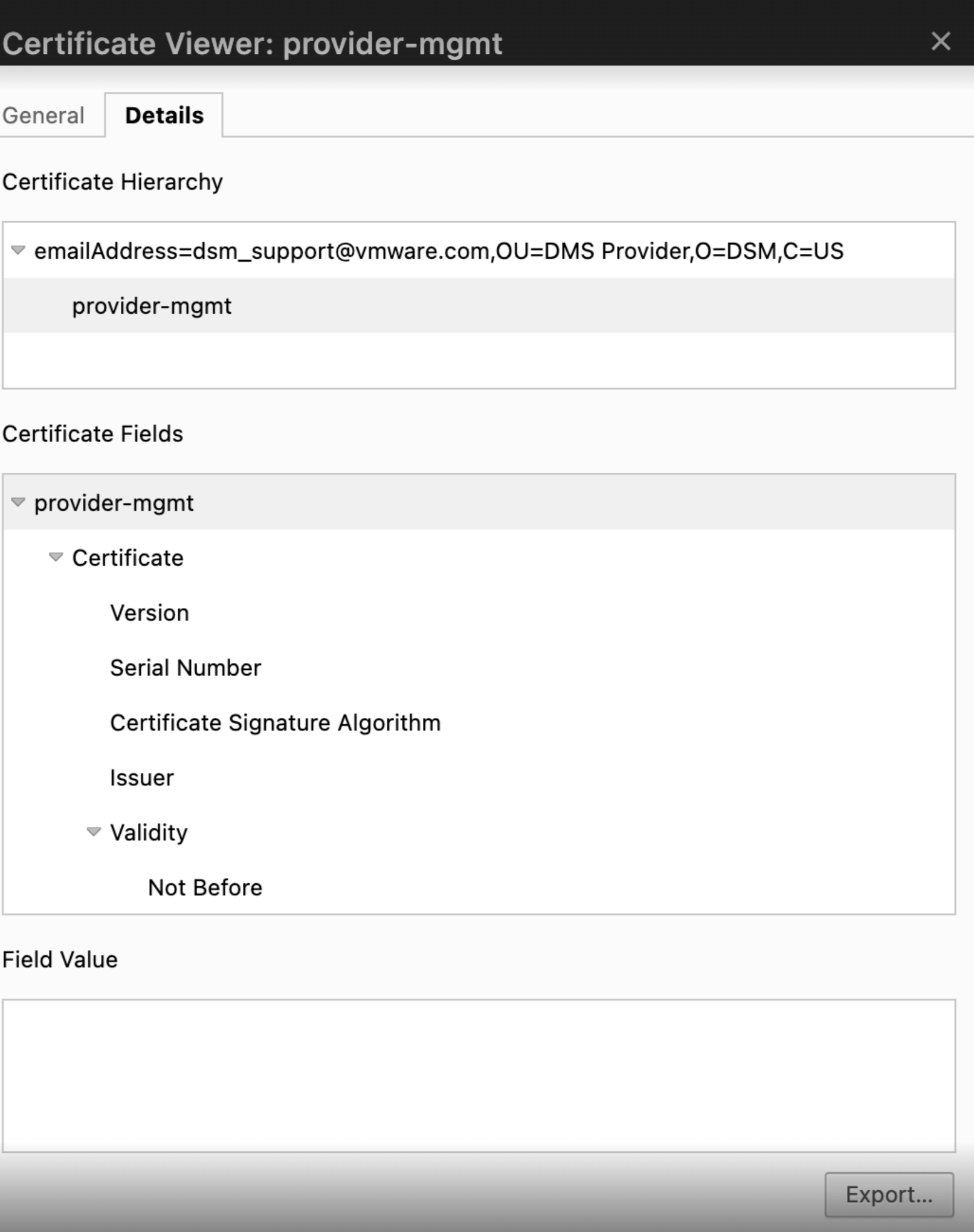

From Chrome Browser:

Open the DSM UI portal.

Click the icon to the left of the URL in the address bar.

In the dropdown list, click the Connection tab > Certificate to open the Certificate Viewer window.

Click the Details tab, click Export, and save the certificate with the .pem extension locally.

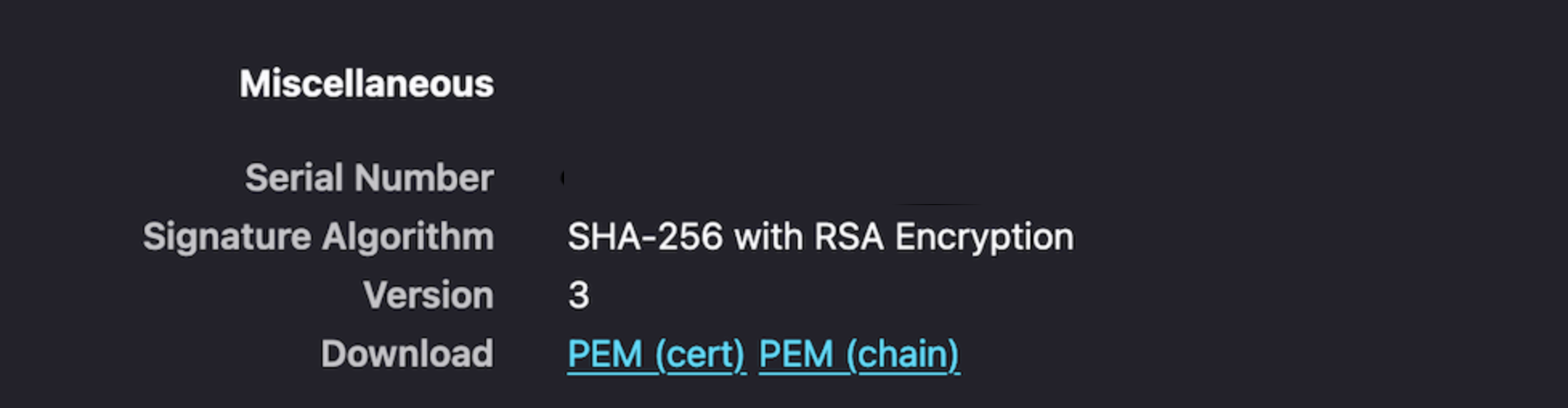

From Firefox Browser:

Open the DSM UI portal.

Click the lock icon to the left of the URL in the address bar.

Click Connection Secure > More Information.

On the Security window, click View Certificate.

In the Miscellaneous section, click PEM (cert) to download the CA certificate for the provider VM.
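The authentication secret created during installation stores this file as-is. Before you hand the file to the Cloud Administrator, it can help to confirm that the export really is a PEM certificate. The following minimal sketch assumes that the file is saved as consumption/root_ca. `is_pem_cert` is a hypothetical helper that only looks for the PEM markers and does not validate the certificate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given file exists and contains
# PEM certificate markers. It does not validate the certificate itself.
is_pem_cert() {
  [ -f "$1" ] || return 1
  grep -q -e '-----BEGIN CERTIFICATE-----' "$1" &&
    grep -q -e '-----END CERTIFICATE-----' "$1"
}

# Example:
#   is_pem_cert consumption/root_ca || echo "consumption/root_ca is not a PEM certificate" >&2
```

If OpenSSL is installed, `openssl x509 -in consumption/root_ca -noout -subject -enddate` also shows the subject and expiry date of the certificate.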

Procedure

Follow this procedure to install the DSM Consumption Operator.

1. Pull the Helm chart from the registry and unpack it in a directory.

   ```shell
   helm pull oci://projects.packages.broadcom.com/dsm-consumption-operator/dsm-consumption-operator \
     --version 1.0.0 -d consumption/ --untar
   ```

   This command pulls the DSM Consumption Operator Helm chart and unpacks it in the `consumption/` directory.

2. Create a `values_override.yaml` file and update the appropriate DSM resources, including infrastructure policies and backup locations.

   In the `consumption/` directory, copy `values.yaml` from the unpacked chart to a new `values_override.yaml` file. Make sure that `image.name` in `values_override.yaml` points to the Broadcom registry, as shown in the following example:

   ```yaml
   imagePullSecret: registry-creds
   replicas: 1
   image:
     name: projects.packages.broadcom.com/dsm-consumption-operator/consumption-operator
     tag: 1.0.0
   dsm:
     authSecretName: dsm-auth-creds
     # allowedInfrastructurePolicies is a mandatory field that needs to be filled with allowed infrastructure policies for the given consumption cluster
     allowedInfrastructurePolicies:
       - infra-policy-01
     # allowedBackupLocations is a mandatory field that holds a list of backup locations that can be used by database clusters created in this consumption cluster
     allowedBackupLocations:
       - default-backup-storage
     # consumptionClusterName is an optional name that you can provide to identify the Kubernetes cluster where the operator is deployed
     consumptionClusterName: "vcd-org-k8s-cluster"
   # The psp field lets you deploy the operator on a Kubernetes cluster with pod security policies enabled.
   # Set psp.required to true and provide the ClusterRole corresponding to the restricted policy.
   psp:
     required: false
     role: ""
   ```

   Note: If you are using TKG, set `psp.required` to `true` and set `psp.role` to the PSP role, for example `psp:vmware-system-restricted`. For more information on TKG and PodSecurityPolicies, see Using Pod Security Policies with Tanzu Kubernetes Clusters.

3. Create an operator namespace.

   ```shell
   kubectl create namespace dsm-consumption-operator-system
   ```

4. Create a Docker registry secret named `registry-creds`. This secret is needed to pull the image from the registry that hosts the Consumption Operator image. The secret name must match the value of the `imagePullSecret` field in the `values_override.yaml` file that you created earlier.

   If you use the default registry (Broadcom Artifactory) directly, no authentication is needed. Create the registry secret with the following command. The username and password values are ignored:

   ```shell
   kubectl -n dsm-consumption-operator-system create secret docker-registry registry-creds \
     --docker-server=https://projects.packages.broadcom.com \
     --docker-username=ignore \
     --docker-password=ignore
   ```

   If you use your own internal registry that hosts the Consumption Operator image, provide the credentials for that registry instead:

   ```shell
   kubectl -n dsm-consumption-operator-system create secret docker-registry registry-creds \
     --docker-server=<DOCKER_REGISTRY> \
     --docker-username=<REGISTRY_USERNAME> \
     --docker-password=<REGISTRY_PASSWORD>
   ```

5. Create an authentication secret that includes all the information needed to connect to the DSM provider.

   ```shell
   kubectl -n dsm-consumption-operator-system create secret generic dsm-auth-creds \
     --from-file=root_ca=consumption/root_ca \
     --from-literal=dsm_user=<CLOUD_ADMIN_USERNAME> \
     --from-literal=dsm_password=<CLOUD_ADMIN_PASSWORD> \
     --from-literal=dsm_endpoint=<DSM_PROVIDER_ENDPOINT>
   ```

   Get these values from the DSM administrator who manages the DSM provider instance.

   Note: Make sure that this secret name matches the value of the `dsm.authSecretName` field in the `values_override.yaml` file.

6. Install the operator. Make sure that the operator Helm chart is already pulled and that the `values_override.yaml` file is in the `consumption/` directory.

   ```shell
   helm install dsm-consumption-operator consumption/dsm-consumption-operator \
     -f consumption/values_override.yaml \
     --namespace dsm-consumption-operator-system
   ```

7. As a Cloud Administrator, check that the operator pod is up and running in the operator namespace:

   ```shell
   kubectl get pods -n dsm-consumption-operator-system
   ```

   ```
   NAME                                                           READY   STATUS    RESTARTS   AGE
   dsm-consumption-operator-controller-manager-7c69b5cbdc-s5jfl   1/1     Running   0          3h41m
   ```
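If several consumption clusters need slightly different values files, you can render the override from a script instead of editing it by hand. The following is a minimal sketch that assumes the key layout of the example `values_override.yaml` in this procedure, with the allowed lists and the cluster name nested under `dsm`. `render_values_override` is a hypothetical helper, and it takes each list as comma-separated names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a values_override.yaml for the operator chart.
#   $1  comma-separated allowed infrastructure policies (mandatory)
#   $2  comma-separated allowed backup locations (mandatory)
#   $3  optional consumption cluster name
# Kubernetes object names contain no spaces, so it is safe here to split the
# lists on whitespace after converting commas to spaces.
render_values_override() {
  local name
  cat <<'EOF'
imagePullSecret: registry-creds
replicas: 1
image:
  name: projects.packages.broadcom.com/dsm-consumption-operator/consumption-operator
  tag: 1.0.0
dsm:
  authSecretName: dsm-auth-creds
  allowedInfrastructurePolicies:
EOF
  for name in $(printf '%s' "$1" | tr ',' ' '); do
    printf '    - %s\n' "$name"
  done
  printf '  allowedBackupLocations:\n'
  for name in $(printf '%s' "$2" | tr ',' ' '); do
    printf '    - %s\n' "$name"
  done
  if [ -n "${3:-}" ]; then
    printf '  consumptionClusterName: "%s"\n' "$3"
  fi
  printf 'psp:\n  required: false\n  role: ""\n'
}

# Example:
#   render_values_override infra-policy-01 default-backup-storage vcd-org-k8s-cluster \
#     > consumption/values_override.yaml
```

Review the rendered file before you run `helm install`. In particular, check the `psp` settings if you are on TKG.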

Step 3: Configure a User Namespace

This task is performed by the Cloud Administrator.

Once the consumption operator is deployed successfully, the Cloud Administrator can set up Kubernetes namespaces for the Cloud Users so that they can start deploying databases on DSM.

The Cloud Administrator can also enforce namespace-specific rules that allow or disallow the use of InfrastructurePolicies and BackupLocations in different namespaces.

To enforce these rules, the Consumption Operator introduces two custom resources: InfrastructurePolicyBinding and BackupLocationBinding. They are simple objects with no spec field. The Cloud Administrator only needs to make sure that the name of each binding object matches the InfrastructurePolicy or BackupLocation that the namespace is allowed to use.

Create a file named dev-team-ns.yaml. Use the following as an example:

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: dev-team
---
apiVersion: infrastructure.dataservices.vmware.com/v1alpha1
kind: InfrastructurePolicyBinding
metadata:
  name: infra-policy-01
  namespace: dev-team
---
apiVersion: databases.dataservices.vmware.com/v1alpha1
kind: BackupLocationBinding
metadata:
  name: default-backup-storage
  namespace: dev-team
```

Then run the following command:

```shell
kubectl apply -f dev-team-ns.yaml
```

This command creates a namespace called dev-team, along with an InfrastructurePolicyBinding and a BackupLocationBinding in that namespace.

To check the status of these bindings, run kubectl get on those resources and check the status column.

As a Cloud Administrator, you can configure multiple namespaces with different infrastructure policies and backup locations in the same consumption cluster.
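When several namespaces need the same setup, you can generate the manifest in dev-team-ns.yaml for each namespace. The following is a minimal sketch. `render_namespace_bindings` is a hypothetical helper. The kinds and API groups are copied from the dev-team example, and the names in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prints a Namespace plus one binding per allowed
# infrastructure policy and backup location, in the same shape as
# dev-team-ns.yaml.
#   $1  namespace name
#   $2  comma-separated infrastructure policy names
#   $3  comma-separated backup location names
# Kubernetes object names contain no spaces, so it is safe here to split the
# lists on whitespace after converting commas to spaces.
render_namespace_bindings() {
  local ns="$1" policies="$2" locations="$3" name
  printf '%s\n' '---' 'apiVersion: v1' 'kind: Namespace' 'metadata:' "  name: $ns"
  for name in $(printf '%s' "$policies" | tr ',' ' '); do
    printf '%s\n' '---' \
      'apiVersion: infrastructure.dataservices.vmware.com/v1alpha1' \
      'kind: InfrastructurePolicyBinding' \
      'metadata:' "  name: $name" "  namespace: $ns"
  done
  for name in $(printf '%s' "$locations" | tr ',' ' '); do
    printf '%s\n' '---' \
      'apiVersion: databases.dataservices.vmware.com/v1alpha1' \
      'kind: BackupLocationBinding' \
      'metadata:' "  name: $name" "  namespace: $ns"
  done
}

# Example (placeholder names; requires kubectl):
#   render_namespace_bindings qa-team infra-policy-01 default-backup-storage | kubectl apply -f -
```

Piping the output to `kubectl apply -f -` applies all objects in one step. The names you bind should normally also appear in the operator's allowed lists in `values_override.yaml`.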

You can also set up multiple consumption clusters to connect to a single DSM provider.

You can use the same cluster name in different namespaces or in different Kubernetes clusters. However, in DSM 2.0, the same namespace cannot contain a PostgresCluster and a MySQLCluster with the same name.

Step 4: Create a Database Cluster

This task is performed by Cloud Users.

1. View the available infrastructure policies by running the following command:

   ```shell
   kubectl get infrastructurepolicybinding -n dev-team
   ```

   You can also check the status field of each InfrastructurePolicyBinding to find the values of vmClass, storagePolicy, and so on.

2. View the available backup locations by running the following command:

   ```shell
   kubectl get backuplocationbinding -n dev-team
   ```

3. Create a Postgres or MySQL database cluster.

   For Postgres, save the following content in a file called `pg-dev-cluster.yaml` and run `kubectl apply -f pg-dev-cluster.yaml`.

   ```yaml
   apiVersion: databases.dataservices.vmware.com/v1alpha1
   kind: PostgresCluster
   metadata:
     name: pg-dev-cluster
     namespace: dev-team
   spec:
     replicas: 1
     version: "14"
     vmClass:
       name: medium
     storageSpace: 20Gi
     infrastructurePolicy:
       name: infra-policy-01
     storagePolicyName: dsm-test-1
     backupConfig:
       backupRetentionDays: 91
       schedules:
         - name: full-weekly
           type: full
           schedule: "0 0 * * 0"
         - name: incremental-daily
           type: incremental
           schedule: "0 0 * * *"
     backupLocation:
       name: default-backup-storage
   ```

   For MySQL, save the following content in a file called `mysql-dev-cluster.yaml` and run `kubectl apply -f mysql-dev-cluster.yaml`.

   ```yaml
   apiVersion: databases.dataservices.vmware.com/v1alpha1
   kind: MySQLCluster
   metadata:
     name: mysql-dev-cluster
     namespace: dev-team
   spec:
     members: 1
     version: "8.0.32"
     vmClass:
       name: medium
     storageSpace: 25Gi
     backupConfig:
       backupRetentionDays: 91
       schedules:
         - name: full-30mins
           type: full
           schedule: "*/30 * * * *"
     infrastructurePolicy:
       name: infra-policy-01
     storagePolicyName: dsm-test-1
     backupLocation:
       name: default-backup-storage
   ```

   For more information on the PostgresCluster and MySQLCluster API fields, see VMware Data Services Manager API Reference.
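Before you apply a cluster manifest, you may want to catch a reference to an infrastructure policy or backup location that is not bound to the namespace. The following is a minimal pre-flight sketch that assumes you collect the bound names with `kubectl get ... -o jsonpath='{.items[*].metadata.name}'`. `require_bound` is a hypothetical helper. It only compares names and does not query DSM.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if $2 appears as a whole name in the
# space-separated list $3; otherwise prints an error and fails.
#   $1  label for the message, e.g. InfrastructurePolicy
#   $2  name referenced by the cluster manifest
#   $3  space-separated names bound to the namespace
require_bound() {
  case " $3 " in
    *" $2 "*) return 0 ;;
  esac
  echo "error: $1 '$2' is not bound in this namespace (bound: ${3:-none})" >&2
  return 1
}

# Example pre-flight check before creating pg-dev-cluster (requires kubectl):
#   policies=$(kubectl get infrastructurepolicybinding -n dev-team -o jsonpath='{.items[*].metadata.name}')
#   locations=$(kubectl get backuplocationbinding -n dev-team -o jsonpath='{.items[*].metadata.name}')
#   require_bound InfrastructurePolicy infra-policy-01 "$policies" &&
#     require_bound BackupLocation default-backup-storage "$locations" &&
#     kubectl apply -f pg-dev-cluster.yaml
```

Because the list is padded with spaces before matching, a partial name such as `infra-policy` does not match `infra-policy-01`.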

Step 5: Connect to the Database Cluster

Once the cluster is in the ready state, use its status field to get the connection details. The password is stored in a secret in the same namespace, with the name referenced in status.connection.passwordRef.name:

```yaml
status:
  connection:
    dbname: pg-dev-cluster
    host: <host-IP>
    passwordRef:
      name: pg-dev-cluster
    port: 5432
    username: pgadmin
```

To get the password, run:

```shell
kubectl get secrets/pg-dev-cluster -n dev-team --template='{{.data.password}}' | base64 --decode
```

Test the connection to your newly created database cluster by using psql. Enter the password when prompted.

```shell
psql -h <host-IP> -p 5432 -U pgadmin -d pg-dev-cluster
```

```
Password for user pgadmin:
SSL connection (protocol: TLSv1.3, cipher: TLS_AES_256_GCM_SHA384, bits: 256, compression: off)
Type "help" for help.

pg-dev-cluster=#
```
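In scripts, you can also pass the connection details from status.connection to psql as a single connection URI. The following is a minimal sketch. It assumes that the host is a hostname or IPv4 address, that no value needs percent-encoding, and that kubectl can address the resource as `postgrescluster`. `pg_uri` is a hypothetical helper. The `postgresql://` URI form and the `PGPASSWORD` variable are standard libpq features.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds a libpq connection URI from the fields shown
# in status.connection. Assumes no argument needs percent-encoding and that
# host is not an IPv6 literal.
#   $1 username  $2 host  $3 port  $4 dbname
pg_uri() {
  printf 'postgresql://%s@%s:%s/%s\n' "$1" "$2" "$3" "$4"
}

# Example (requires kubectl and psql):
#   host=$(kubectl get postgrescluster pg-dev-cluster -n dev-team -o jsonpath='{.status.connection.host}')
#   PGPASSWORD=$(kubectl get secret pg-dev-cluster -n dev-team -o jsonpath='{.data.password}' | base64 --decode) \
#     psql "$(pg_uri pgadmin "$host" 5432 pg-dev-cluster)"
```

Setting `PGPASSWORD` only for the psql invocation keeps the password out of the interactive prompt. It does not keep the password out of the process environment, so prefer the prompt on shared hosts.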