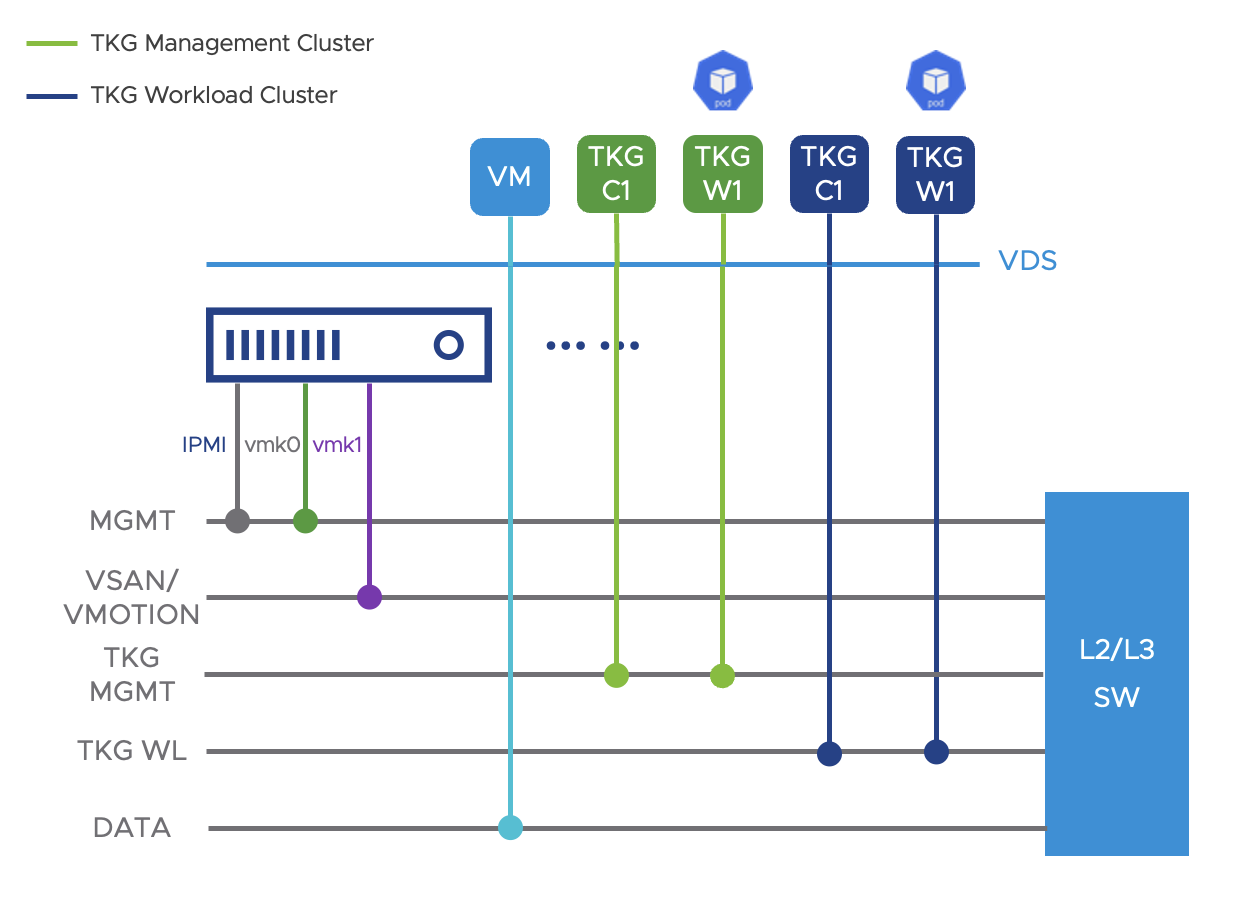

For consistency in managing VLANs, NSX-T-based networking or vSphere Distributed Switch (VDS) is recommended in the data center for Enterprise Edge deployments. At least five VLANs are recommended, as discussed in this section, and separate port groups should be allocated to the TKG management and workload clusters.

VLAN requirements and L2 Design

In the data center, NSX-T-based networking or vSphere Distributed Switch (VDS) is recommended to unify VLAN management in the vSphere cluster and keep configuration consistent across the cluster. A minimum of five VLANs is required, as shown in the diagram. Add the VMkernel adapters for management and vSAN to the matching port groups to enable vCenter connectivity, vSAN/vMotion, and other services. Allocate separate port groups to the TKG management and TKG workload clusters; more than one VLAN may be needed for TKG workload clusters if there will be a large number of Kubernetes clusters. The port group ‘DATA’ can be used to run virtual machines and other infrastructure, and to carry inbound application traffic to the pods running in TKG workload clusters. Proxies and external load balancers, such as NSX Advanced Load Balancer Service Engines, should be connected to the ‘DATA’ VLAN to forward and monitor user traffic destined for the TKG clusters.

| VLAN | Purpose | DHCP Required | Comments |
|---|---|---|---|
| MGMT | Management VLAN for vmk0 and IPMI interfaces, and Infrastructure VMs | No | |
| VSAN/VMOTION | Network to connect VMkernel interface for vSAN and vMotion | No | Recommend MTU of 9000 to support jumbo frames |
| TKG MGMT | Network for TKG Management Clusters | Yes | Deploy both DC management cluster and Edge management cluster in this network. Reserve a fixed range of 10 IP addresses for TKG management cluster master nodes |
| TKG WKL | Network for TKG Workload master node and worker nodes | Yes | Reserve a fixed range of 30 IP addresses for VIP’s used for TKG workload cluster control plane |
| DATA | Network for VM’s and inbound application traffic | No | Inbound client traffic to applications should traverse this VLAN if external load balancers outside of TKG clusters are used |

For the ‘TKG MGMT’ and ‘TKG WKL’ networks planned for the TKG management and workload clusters, DHCP is required to assign IP addresses to the master nodes and worker nodes. In each of those VLANs, reserve a fixed IP range outside of the DHCP scope for the virtual IP addresses (VIPs) that the control plane nodes use for HA. Whether NSX ALB or Kube-vip is used as an external load balancer, a VIP must be assigned to the control plane of each TKG management or workload cluster, even if only one master node is deployed. The VIP is a static IP address chosen by the administrator, and it must belong to the subnet of that VLAN. It is therefore recommended to plan and reserve an IP range specifically for future VIP usage.
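The VIP reservation rule can be checked before any cluster is deployed. The following is a minimal sketch using only the Python standard library; the subnet, DHCP scope, and VIP range in the example are hypothetical values chosen for illustration, and the function names are not part of any VMware tooling.

```python
import ipaddress


def check_vip_range(subnet, dhcp_start, dhcp_end, vip_start, vip_end):
    """Return a list of problems with a planned VIP range (empty list means OK)."""
    net = ipaddress.ip_network(subnet)
    dhcp_lo = ipaddress.ip_address(dhcp_start)
    dhcp_hi = ipaddress.ip_address(dhcp_end)
    vip_lo = ipaddress.ip_address(vip_start)
    vip_hi = ipaddress.ip_address(vip_end)

    problems = []
    if vip_lo > vip_hi:
        problems.append("VIP range start is after its end")
    # Both ends of the VIP range must be usable host addresses in the VLAN subnet.
    for addr in (vip_lo, vip_hi):
        if addr not in net or addr in (net.network_address, net.broadcast_address):
            problems.append(f"{addr} is not a usable address in {net}")
    # Two inclusive ranges overlap when each one starts before the other ends.
    if vip_lo <= dhcp_hi and dhcp_lo <= vip_hi:
        problems.append("VIP range overlaps the DHCP scope")
    return problems


def vip_count(vip_start, vip_end):
    """Number of addresses in an inclusive range."""
    return int(ipaddress.ip_address(vip_end)) - int(ipaddress.ip_address(vip_start)) + 1


# Hypothetical plan for the 'TKG WKL' VLAN: 30 VIPs kept below the DHCP scope.
print(check_vip_range("10.10.3.0/24", "10.10.3.50", "10.10.3.250",
                      "10.10.3.10", "10.10.3.39"))
print(vip_count("10.10.3.10", "10.10.3.39"))
```

An empty list from `check_vip_range` means the planned range sits inside the subnet and clear of the DHCP scope. It does not confirm that the DHCP server is actually configured with that scope.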

For data center designs that leverage NSX ALB, follow this product documentation for networking and other recommendations.

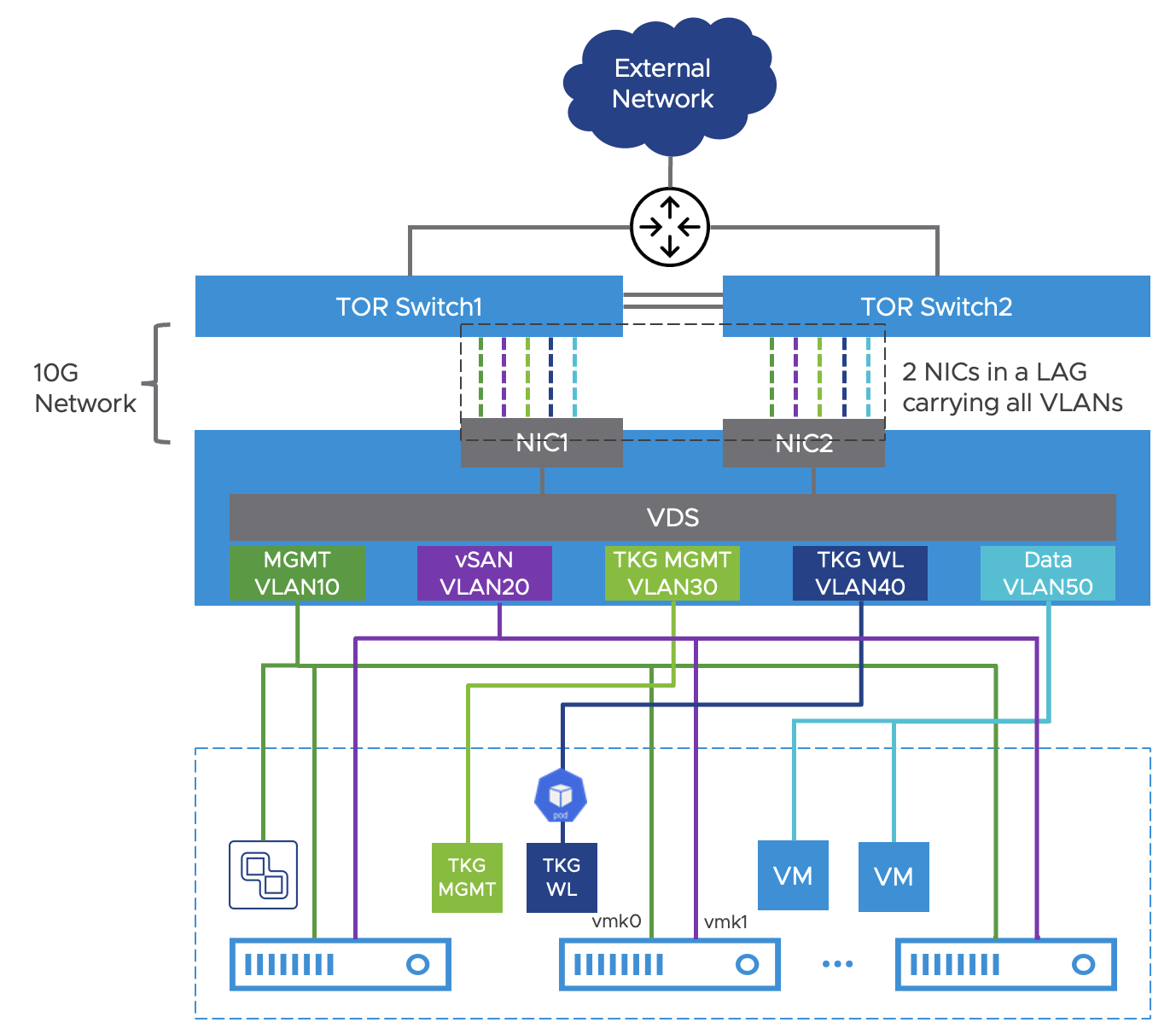

Infrastructure and Networking

The data center vSphere cluster should have a minimum of 4 hosts with vSAN enabled for shared storage. Highly available data center switches will provide networking services and routing for the VMs and workloads deployed in the cluster. These modern Top of Rack (TOR) switches are expected to support features such as multi-chassis link aggregation (MLAG) for LAN redundancy and bandwidth optimization and allow host uplinks to be connected in an active/active manner when LACP is configured. Each vSphere host should have at least 2 NICs for upstream connectivity to the TOR switches.
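As a rough pre-deployment check, the host count and uplink minimums stated above can be validated against an inventory list. This sketch assumes the inventory has already been exported into plain Python objects; the `Host` class and the host names are hypothetical and do not correspond to any vSphere API.

```python
from dataclasses import dataclass

MIN_HOSTS = 4        # from the text: minimum of 4 hosts in the cluster
MIN_UPLINK_NICS = 2  # from the text: at least 2 NICs per host toward the TOR switches


@dataclass
class Host:
    name: str
    uplink_nics: int


def cluster_problems(hosts):
    """Return human-readable problems; an empty list means the minimums are met."""
    problems = []
    if len(hosts) < MIN_HOSTS:
        problems.append(f"cluster has {len(hosts)} hosts, need at least {MIN_HOSTS}")
    for host in hosts:
        if host.uplink_nics < MIN_UPLINK_NICS:
            problems.append(
                f"{host.name}: {host.uplink_nics} uplink NIC(s), "
                f"need at least {MIN_UPLINK_NICS}"
            )
    return problems


# Hypothetical inventory: one host short, and one host with a single uplink.
inventory = [Host("esx-01", 2), Host("esx-02", 2), Host("esx-03", 1)]
for problem in cluster_problems(inventory):
    print(problem)
```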

The data center cluster should use vSphere Distributed Switch (VDS) for networking, with the five VLANs and the uplink created as port groups in the VDS. On each host, configure two or more physical uplinks in a link aggregation group (LAG) after adding the LAG in the LACP settings of the VDS. The data center switches, which generally support MLAG or similar technologies, must be configured with matching EtherChannel and layer 2 settings on their ports. This lets each host aggregate the bandwidth of its uplinks and lets each port group use NIC teaming and failover for load balancing and redundancy. You can find more information about link aggregation in ESXi from this KB. In most network designs, the VDS uplink should be configured as a trunk port that allows all the local port groups.

The reference network design shown above has the following requirements:

- Use a minimum of two NICs per host to connect to the top-of-rack (TOR) switches.
- If the data center switches support MLAG, VSS, or virtual port channel (vPC), configure LACP for the uplinks in VDS and enable NIC Teaming and Failover for port groups (VLANs).
- The upstream data center infrastructure should provide networking services (DHCP, DNS, and NTP) to the virtual machines and workloads in the cluster and serve as the default gateway for the configured VLAN networks.
- Except for the vSAN network subnet, the other four VLANs must have reachability to edge networks. Upstream routing devices should advertise the network prefixes so that they are routable from edge sites.
- Internet access is required for the pods in the TKG management and workload clusters. A web proxy is recommended for outbound traffic.
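The reachability requirement can be expressed as a simple coverage check: every VLAN subnet except vSAN should fall inside at least one prefix that the upstream routers advertise toward the edge sites. The sketch below assumes IPv4 subnets and uses hypothetical addressing; in practice the advertised prefixes would come from the routing tables of the upstream devices.

```python
import ipaddress


def unadvertised_vlans(vlan_subnets, advertised_prefixes, exempt=("VSAN/VMOTION",)):
    """Return the names of VLANs whose subnet is not covered by any advertised prefix."""
    advertised = [ipaddress.ip_network(p) for p in advertised_prefixes]
    missing = []
    for name, subnet in vlan_subnets.items():
        if name in exempt:
            continue  # the vSAN network does not need to be reachable from the edge
        net = ipaddress.ip_network(subnet)
        # A subnet counts as advertised if it equals, or sits inside, an advertised prefix.
        if not any(net.subnet_of(prefix) for prefix in advertised):
            missing.append(name)
    return missing


# Hypothetical addressing for the five VLANs in the table.
vlans = {
    "MGMT": "10.10.0.0/24",
    "VSAN/VMOTION": "10.10.1.0/24",
    "TKG MGMT": "10.10.2.0/24",
    "TKG WKL": "10.10.3.0/24",
    "DATA": "10.10.4.0/24",
}
# Hypothetical prefixes advertised toward the edge; DATA has been forgotten.
print(unadvertised_vlans(vlans, ["10.10.0.0/24", "10.10.2.0/23"]))
```

Treating an aggregate such as a /23 as covering its component /24 subnets is a design choice in this sketch. A stricter check could require exact-match prefixes.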

Data Center Network Routing

The data center requires network connectivity to the edge sites without network address translation (NAT) between the sites. This is necessary for the TKG management clusters to provision the workload clusters and associated components at the edge sites. For most enterprises, this is provided by private MPLS networks or by VPNs built over public ISP circuits. The Enterprise Edge does not provide a native network routing solution and relies on the existing routing infrastructure in the data center for remote network connectivity.
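Routing between sites without NAT only works if the data center and edge sites use non-overlapping address space. The following sketch flags overlapping prefixes across sites; the site names and prefixes are hypothetical, and the check is an illustration of the planning step rather than part of the Enterprise Edge product.

```python
import ipaddress
from itertools import combinations


def overlapping_sites(site_prefixes):
    """Return (site_a, prefix_a, site_b, prefix_b) for prefixes that overlap across sites."""
    nets = [
        (site, ipaddress.ip_network(prefix))
        for site, prefixes in site_prefixes.items()
        for prefix in prefixes
    ]
    conflicts = []
    for (site_a, net_a), (site_b, net_b) in combinations(nets, 2):
        # Overlap within one site is a local matter; only cross-site overlap breaks
        # NAT-free routing between the data center and the edges.
        if site_a != site_b and net_a.overlaps(net_b):
            conflicts.append((site_a, str(net_a), site_b, str(net_b)))
    return conflicts


# Hypothetical address plan: edge-2 reuses space that belongs to the data center.
plan = {
    "dc": ["10.10.0.0/16"],
    "edge-1": ["10.20.1.0/24"],
    "edge-2": ["10.10.5.0/24"],
}
print(overlapping_sites(plan))
```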

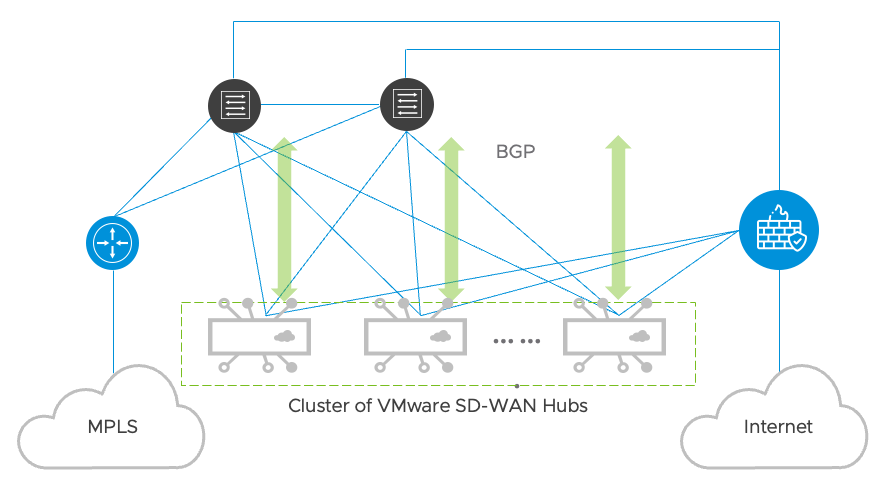

VMware SD-WAN can be integrated into the data center to provide connectivity between the edge and the data center. Leveraging existing WAN connections such as MPLS and Internet, VMware SD-WAN creates an overlay network that connects the edge sites regardless of the underlying transport. It requires VMware SD-WAN Hubs to be deployed in the data center as virtual appliances to terminate the overlay tunnels established from the edge SD-WAN sites. An edge site with only an Internet circuit builds SD-WAN tunnels over that Internet connection, while an edge site with both MPLS and Internet circuits connects to the SD-WAN overlay by building tunnels over both simultaneously.

Complementing an Enterprise Edge deployment with VMware SD-WAN provides the following edge connectivity benefits:

- Enhanced connectivity from remote edge sites with poor WAN connections, through link remediation techniques.
- Link aggregation and application-layer QoS for control plane traffic from the edges to the data center.
- A backup path for edge workload clusters to SaaS and the Internet. This can be achieved by failing over traffic to a redundant link at the edge or by backhauling the traffic through the data center via SD-WAN.

It is recommended to deploy the SD-WAN Hubs in the data center as a hub cluster in an off-path design. An off-path design places the Hubs outside the existing SDDC north-south routing path and requires them to run BGP, a dynamic routing protocol, with the layer 3 switches on the LAN side. The Hubs also have their WAN interfaces connected to public Internet circuits through the firewall and to private WAN connections in order to establish the SD-WAN overlays. The SD-WAN Hubs dynamically advertise the edge networks to the data center switches and use routing preferences to keep traffic flows symmetric. This design minimizes the impact on the existing SDDC network architecture and enables the administrator to selectively onboard edge networks through SD-WAN. The Reference Design section provides more details on SD-WAN implementation in the edge sites.

A reference design for the SD-WAN Hub deployment is shown in the following figure. The Hubs are deployed as virtual machines in the data center cluster but are logically connected to the MPLS network and to the Internet connection behind a firewall. They establish overlay tunnels across both MPLS and the Internet to build an overlay network that connects the remote edge networks. Each Hub is configured to peer with the data center switches using BGP on its LAN interfaces. Through the BGP peering, the Hubs learn the data center routes and dynamically advertise the data center prefixes to the edge SD-WAN sites. Conversely, they learn the edge networks automatically and advertise those edge prefixes to the local data center routers and switches. In this way, SD-WAN makes the deployment of edge networking simple, dynamic, and scalable.
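The two-way route exchange described above can be pictured with a small model. This is a conceptual sketch only: it does not implement BGP or any VMware SD-WAN API, and the function name, site names, and prefixes are hypothetical. The optional `onboarded_sites` argument illustrates the idea of selectively onboarding edge networks.

```python
def hub_advertisements(dc_routes, edge_routes, onboarded_sites=None):
    """Model what a hub re-advertises in each direction.

    dc_routes: prefixes learned from the data center switches over BGP.
    edge_routes: mapping of edge site name -> prefixes learned over the overlay.
    onboarded_sites: optional set of edge sites to include; None means all sites.
    """
    sites = edge_routes if onboarded_sites is None else {
        site: prefixes
        for site, prefixes in edge_routes.items()
        if site in onboarded_sites
    }
    return {
        # Data center prefixes are advertised out to the edge SD-WAN sites.
        "to_edges": sorted(set(dc_routes)),
        # Edge prefixes are advertised to the data center routers and switches.
        "to_data_center": sorted({p for prefixes in sites.values() for p in prefixes}),
    }


# Hypothetical routes: two data center VLANs and two edge sites.
dc = ["10.10.0.0/24", "10.10.2.0/24"]
edges = {"edge-1": ["10.20.1.0/24"], "edge-2": ["10.20.2.0/24"]}
print(hub_advertisements(dc, edges))
print(hub_advertisements(dc, edges, onboarded_sites={"edge-1"}))
```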