The NVIDIA vGPU software includes two components: the NVIDIA Virtual GPU Manager on the host and a separate NVIDIA vGPU driver in the guest OS. This section walks through installing the vGPU software on the vSphere hosts and enabling vGPU for virtual machines.

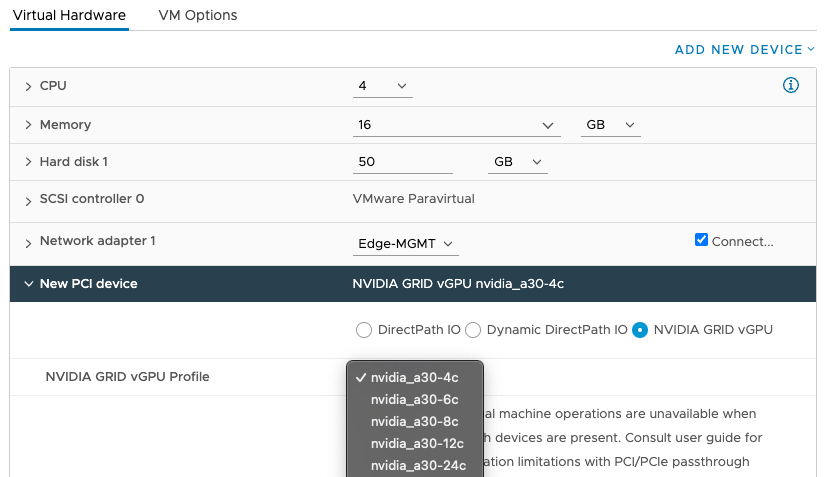

In Enterprise Edge deployments running on vSphere, NVIDIA vGPU lets multiple virtual machines use and share a single GPU device. Because Enterprise Edge use cases mainly involve computer vision, machine learning, and AI workloads, we recommend the NVIDIA Virtual Compute Server (vCS) license for the vGPU software.

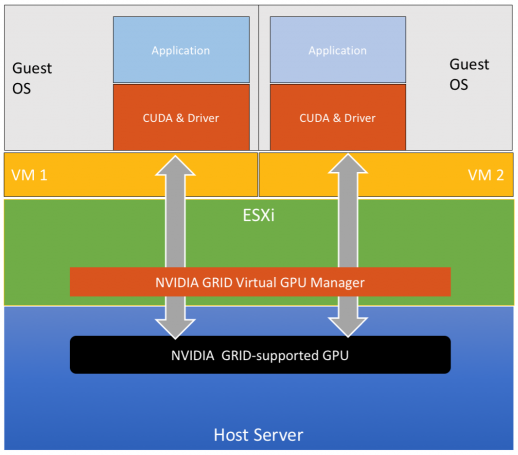

The NVIDIA vGPU software consists of two separate parts: the NVIDIA Virtual GPU Manager, which is loaded on the vSphere host as a VMware Installation Bundle (VIB), and the vGPU driver, which is installed in the guest operating system of each virtual machine that uses the GPU. Deploying GPUs in vSphere with vGPU lets administrators either dedicate all of a GPU's resources to one virtual machine or share the GPU among multiple virtual machines. The architecture figure shows how ESXi and the virtual machines work together with the vGPU software to deliver GPU sharing.
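Once both parts are in place, one way to confirm that the guest half is working is to query the guest driver with `nvidia-smi` from inside the VM. The sketch below assumes a Linux guest with the vGPU driver installed and `nvidia-smi` on the `PATH`; `--query-gpu` and `--format=csv,noheader` are standard `nvidia-smi` options, while the helper names `parse_gpu_query` and `query_guest_gpus` are hypothetical.

```python
import csv
import io
import shutil
import subprocess


def parse_gpu_query(output: str) -> list[dict[str, str]]:
    """Parse `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader`."""
    rows = []
    for record in csv.reader(io.StringIO(output)):
        if not record:
            continue  # skip blank lines
        name, driver = (field.strip() for field in record)
        rows.append({"name": name, "driver_version": driver})
    return rows


def query_guest_gpus() -> list[dict[str, str]]:
    """Run nvidia-smi in the guest; an empty list means no GPU is visible."""
    if shutil.which("nvidia-smi") is None:
        return []  # guest driver not installed, or nvidia-smi not on PATH
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_query(result.stdout)


if __name__ == "__main__":
    gpus = query_guest_gpus()
    if gpus:
        for gpu in gpus:
            print(f"{gpu['name']} (driver {gpu['driver_version']})")
    else:
        print("No NVIDIA GPU visible: check the host VIB, the VM's vGPU profile, "
              "and the guest driver.")
```

An empty result points back to one of the two layers: either the host-side Virtual GPU Manager and vGPU profile, or the guest driver.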

The instructions for setting up NVIDIA vGPU on vSphere are detailed in this blog post. They can be summarized as follows:

Prerequisites

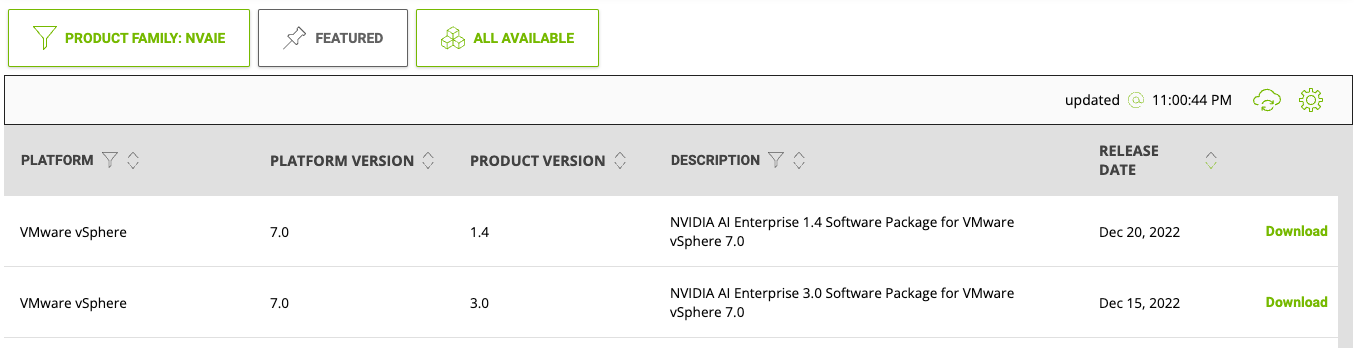

Access to the NVIDIA licensing portal and NVIDIA AI Enterprise software

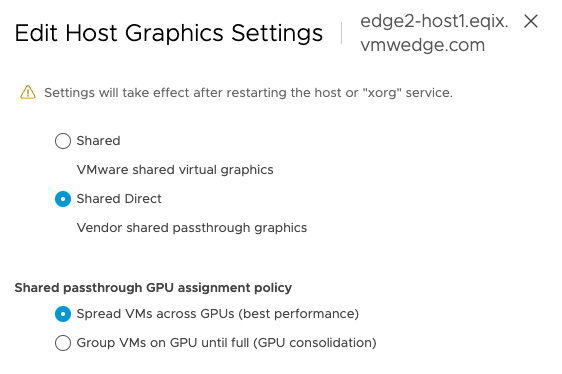

vCenter access with read and write privileges for Host Graphics Settings

SSH access to the vSphere hosts in the cluster
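With SSH access in place, the host-side part of the setup comes down to a handful of ESXi shell commands. The sketch below only prints them as a dry-run plan, under stated assumptions: `VIB_PATH` is a hypothetical placeholder for the downloaded NVIDIA Virtual GPU Manager VIB, and the graphics-type command is the `esxcli` equivalent of choosing "Shared Direct" in the vCenter Host Graphics Settings. If the download is an offline bundle (`.zip`) rather than a `.vib`, `esxcli software vib install -d` takes the place of `-v`.

```python
"""Dry-run plan of the host-side vGPU setup, run over SSH on each ESXi host.

Assumption: VIB_PATH is a hypothetical placeholder for the NVIDIA Virtual GPU
Manager VIB copied to a datastore on the host.
"""

import shlex

# Hypothetical path; replace with the actual location of the downloaded VIB.
VIB_PATH = "/vmfs/volumes/datastore1/NVIDIA-vGPU-Manager.vib"


def host_setup_commands(vib_path: str) -> list[str]:
    """ESXi shell commands for installing the vGPU Manager, in order."""
    return [
        # Migrate or power off running VMs first, then enter maintenance mode.
        "esxcli system maintenanceMode set --enable true",
        # Install the NVIDIA Virtual GPU Manager VIB (absolute path required).
        f"esxcli software vib install -v {shlex.quote(vib_path)}",
        # Default graphics type "Shared Direct" (Host Graphics in vCenter).
        "esxcli graphics host set --default-type SharedPassthru",
        # Reboot so the NVIDIA host driver is loaded.
        "reboot",
    ]


def post_reboot_commands() -> list[str]:
    """Checks to run once the host is back up, then leave maintenance mode."""
    return [
        "esxcli software vib list | grep -i nvidia",  # VIB is installed
        "nvidia-smi",  # host driver sees the physical GPU(s)
        "esxcli system maintenanceMode set --enable false",
    ]


if __name__ == "__main__":
    print("# Before reboot")
    for cmd in host_setup_commands(VIB_PATH):
        print(cmd)
    print("# After reboot")
    for cmd in post_reboot_commands():
        print(cmd)
```

Printing rather than executing keeps the plan reviewable; each line can be pasted into the SSH session for one host at a time so the rest of the cluster keeps running.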

Procedure

Results

Applications running in multiple virtual machines can now share a GPU. The vGPU technology offers a choice between dedicating a full GPU device to one virtual machine and sharing a GPU device among multiple virtual machines. This makes it a useful option when applications do not need the full power of a GPU or when only a limited number of GPU devices is available.
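The trade-off between dedicating and sharing a GPU is mostly a question of frame buffer arithmetic. The sketch below estimates how many VMs one physical GPU can serve, assuming time-sliced, compute (C-series) vGPU profiles whose names end in `-<GB>c` (for example `grid_t4-4c`) and assuming every vGPU on a GPU uses the same profile. The naming pattern and the helper names are assumptions for illustration; MIG-backed profiles follow different placement rules and are not modeled here.

```python
import re

# Assumption: time-sliced C-series profile names end in "-<frame buffer GB>c",
# e.g. "grid_t4-4c". Check the NVIDIA vGPU documentation for your GPU model.
PROFILE_PATTERN = re.compile(r"-(\d+)c$")


def profile_framebuffer_gb(profile: str) -> int:
    """Extract the per-VM frame buffer (GB) from a vGPU profile name."""
    match = PROFILE_PATTERN.search(profile.lower())
    if match is None:
        raise ValueError(f"unrecognized vGPU profile name: {profile!r}")
    return int(match.group(1))


def max_vms_per_gpu(gpu_framebuffer_gb: int, profile: str) -> int:
    """VMs one physical GPU can serve when all its vGPUs share one profile."""
    per_vm = profile_framebuffer_gb(profile)
    if per_vm > gpu_framebuffer_gb:
        raise ValueError("profile is larger than the GPU's frame buffer")
    return gpu_framebuffer_gb // per_vm


if __name__ == "__main__":
    # A 16 GB GPU: one full-size profile dedicates it, smaller ones share it.
    for name in ("grid_t4-16c", "grid_t4-8c", "grid_t4-4c"):
        print(name, max_vms_per_gpu(16, name))
```

For a 16 GB GPU, a 16 GB profile dedicates the whole device to one VM, while a 4 GB profile lets up to four VMs share it, which is where vGPU helps when GPU devices are scarce.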

At the time of writing, Tanzu Kubernetes Grid (TKG) versions up to 1.6 do not support deploying GPU-enabled workload clusters with vGPU; only PCI passthrough is supported for TKG workloads. The ECS Enterprise Edge aims to support vGPU-enabled clusters using the NVIDIA GPU Operator in the future.

What to do next

Restart the `nvidia-gridd` service in the virtual machine after installing the vGPU driver.
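On a Linux guest, `nvidia-gridd` runs as a systemd service, so the restart is a `systemctl` call. The sketch below assumes a systemd-based Linux VM and root privileges when it actually executes; the function names are hypothetical, and by default it only prints the commands.

```python
import subprocess

SERVICE = "nvidia-gridd"  # NVIDIA vGPU licensing daemon in the guest


def gridd_commands() -> list[list[str]]:
    """systemctl commands that restart the daemon and then show its state."""
    return [
        ["systemctl", "restart", SERVICE],
        ["systemctl", "--no-pager", "status", SERVICE],
    ]


def restart_gridd(dry_run: bool = True) -> list[int]:
    """Print (dry run) or execute the commands; returns exit codes if executed."""
    codes = []
    for cmd in gridd_commands():
        if dry_run:
            print(" ".join(cmd))
            continue
        codes.append(subprocess.run(cmd, check=False).returncode)
    return codes


if __name__ == "__main__":
    # Pass dry_run=False (and run as root, e.g. via sudo) to actually restart.
    restart_gridd(dry_run=True)
```

After the restart, the license state of the vGPU should be visible in the output of `nvidia-smi -q` inside the guest.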