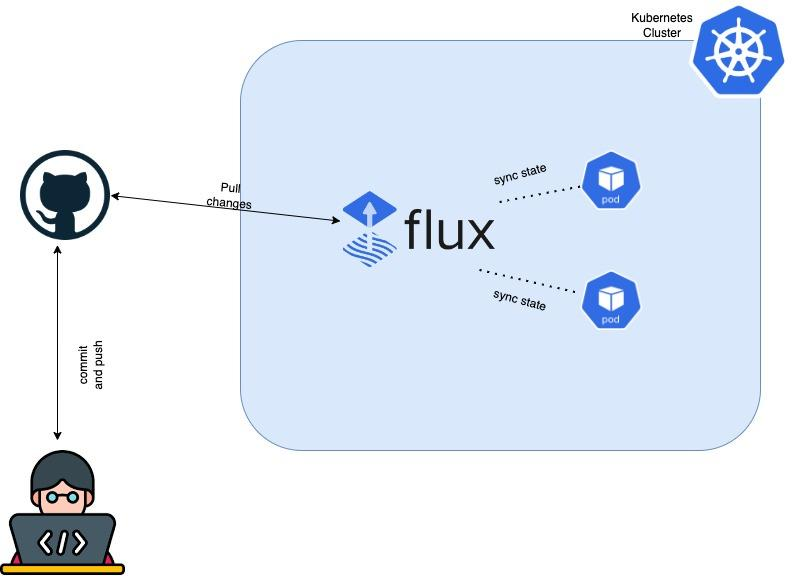

Edge Compute Stack uses Flux CD to implement parts of its GitOps procedures. Flux CD is installed automatically on the Edge host and configured ready for use. GitOps is a framework for managing infrastructure and deployments with tools and processes that have proven successful in application development, such as version-controlled repositories and CI/CD. Flux CD focuses specifically on continuous delivery (CD): it automates the deployment of applications and infrastructure changes based on changes to a Git repository.

Because GitOps relies on Git both as its version-control mechanism and as the trigger for the CI/CD pipeline, the repository structure becomes very important. In the context of Flux CD and GitOps, the Git repository structure matters for several reasons:

Version Control: Git provides version control for infrastructure configurations and deployment manifests. With a structured repository, you can track changes over time, roll back to previous versions if needed, and collaborate effectively.

Organization: A well-organized repository structure enhances clarity and maintainability. It allows you to separate different components such as application code, configuration files, deployment manifests, and scripts into distinct directories or branches.

Granular Access Control: Git hosting platforms support access control at various levels (repository, branch, and on some platforms directory). By structuring your repository appropriately, you can enforce access controls based on roles and responsibilities, ensuring that only authorized users can make changes to specific parts of the infrastructure.

Automated Workflows: Flux CD relies on Git repository changes to trigger automated workflows for deployment and configuration updates. A structured repository makes it easier to define rules and automation scripts that respond to specific changes in the repository, leading to efficient and reliable deployment processes.

Environment Management: With a structured repository, you can manage multiple environments (e.g., development, staging, production) more effectively. Each environment can have its own branch or directory within the repository, allowing for controlled promotion of changes across environments.

Auditing and Compliance: A well-structured Git repository facilitates auditing and compliance requirements. You can track who made changes, when they were made, and why, providing transparency and accountability in the deployment and configuration management process.

In this part of the document we walk through some best practices and simple examples of how to structure your Git repository. These are only examples; further examples and alternative approaches can be found in the Flux documentation: https://fluxcd.io/flux/guides/repository-structure/

Repository Structure

Using Flux CD to manage the deployment of infrastructure and applications across multiple edge locations requires some planning. You do not want to replicate the same configuration for every edge site. Instead, create a base configuration and rely on Flux's Kustomize-based patching to modify any site- or edge-specific configuration where required.

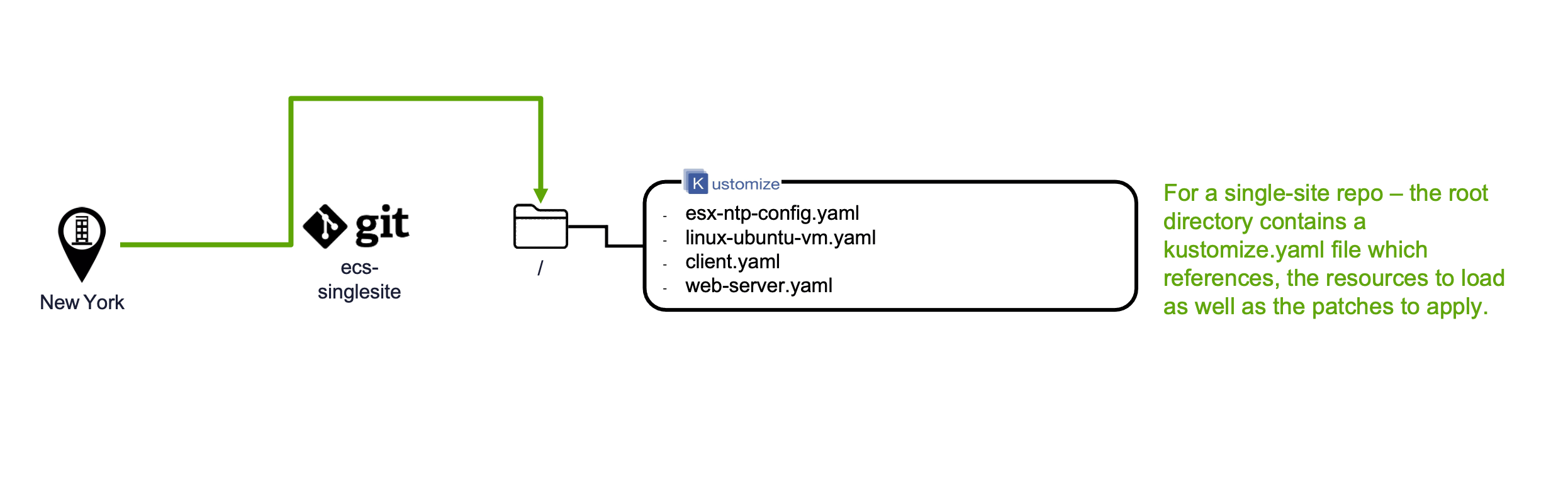

Single-Site Example

To show how this works, we start with a simple example: a single repository and a single site.

The single-site repository example is here: https://github.com/saqebakhter/ecs-singlesite-example. There are no folders within the repository. All the YAML files are in the root folder, along with the kustomization.yaml file which indicates which YAML files to load, as well as what patches to apply.

```yaml
---
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - linux-ubuntu-vm.yaml
  - web-server.yaml
  - esx-ntp-config.yaml
patches:
  - patch: |
      - op: replace
        path: /spec/className
        value: best-effort-xsmall
    target:
      kind: VirtualMachine
      name: linux-vm
```

The kustomization.yaml above loads the three YAML files listed and patches the VirtualMachine named linux-vm so that /spec/className is set to best-effort-xsmall. This is useful, for example, when deployment sizes differ with the size of the server (e.g., large vs. xsmall). A single repository allows quick deployment when all sites have exactly the same requirements. The disadvantages are significant, however: fleet management at scale becomes a problem, because any site that differs needs its own repository, and sharing manifests across those repositories becomes a challenge. The next section shows how a multi-site repository structure solves this challenge.

Multi-Site Example

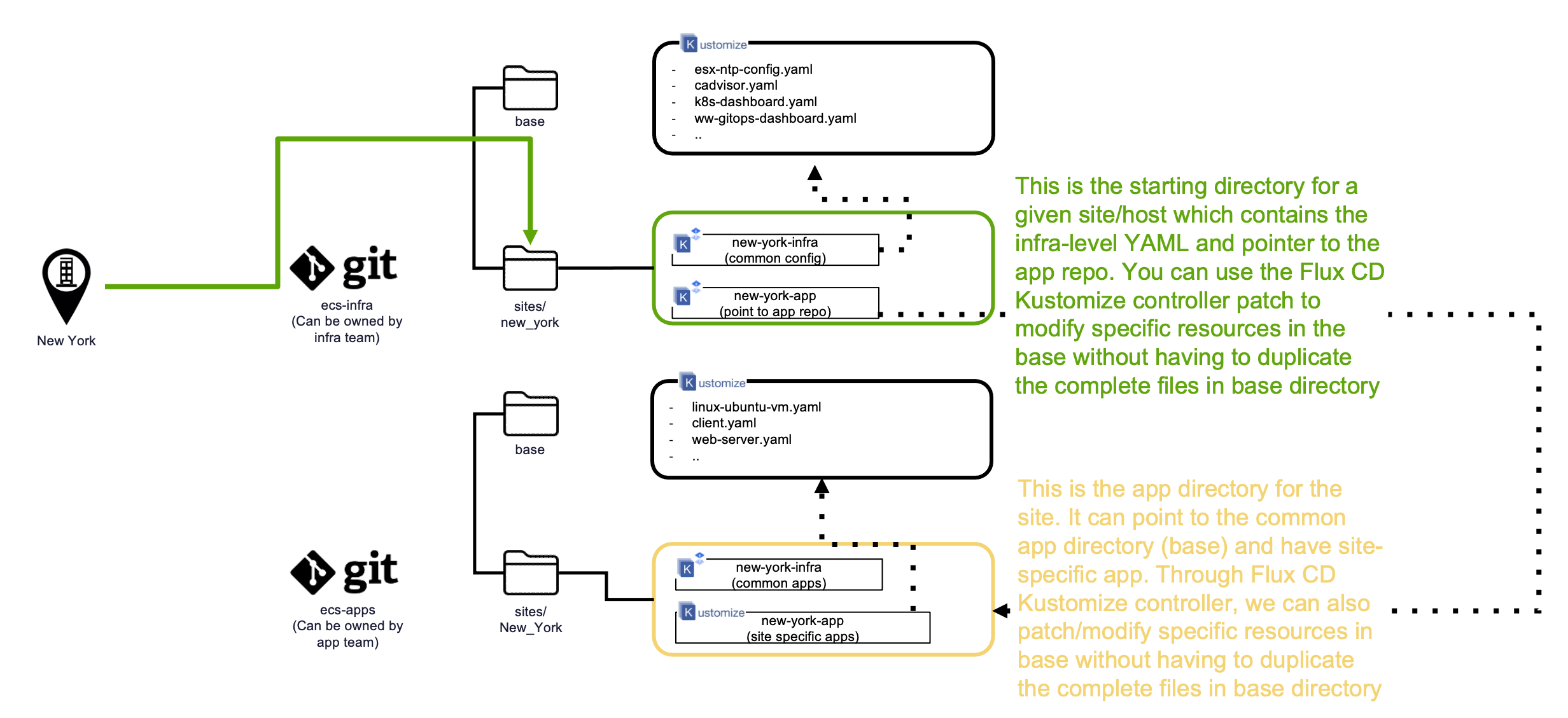

We have created an example of how to structure the Git repositories. It is organized to allow configuration to be shared across sites. For example, if you have stores in New York, London, and Tokyo that all run the same applications, it makes the most sense to manage each YAML manifest once rather than once per store. The repository structure in the example below also allows site-specific YAML manifests to be applied. Two repositories are used: one for infrastructure components (think logging, ESXi settings, etc.) and another for business applications.

The infrastructure repository example is here: https://github.com/saqebakhter/ecs-infra-example. Within the repository there is a folder called base and a folder called sites, which contains a subfolder for each site: New York, London, and Tokyo.

The application repository example is here: https://github.com/saqebakhter/ecs-apps-example. It follows a similar pattern of having a base folder and a sites folder.

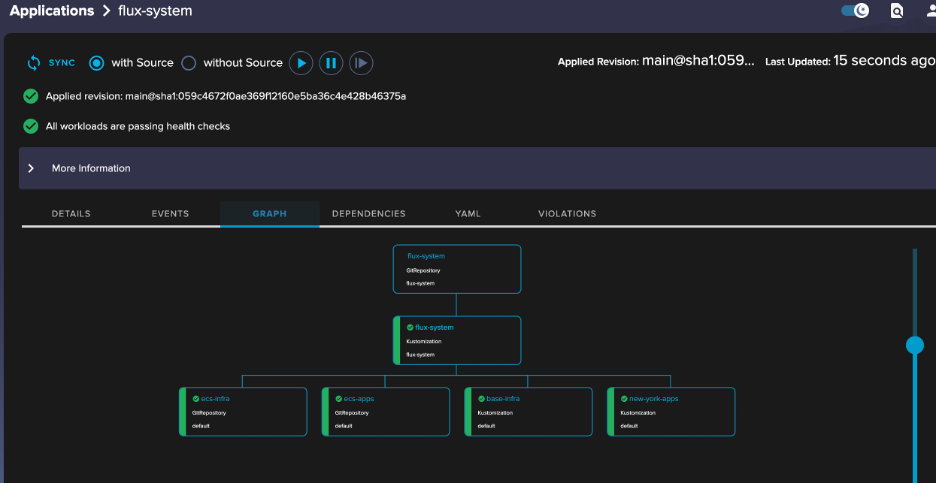

In essence, the repository structure looks like this: Flux CD is initialized with the ecs-infra/sites/<site_name> folder, which then references the other Git repositories and folders as needed. The following describes how a particular edge site or host can be structured.

A given site (New York) or host bootstraps to a particular Git repository, using a directory within it as the starting point. The points below explain why the repositories are structured this way:

We propose two Git repositories: one managed by the infrastructure team (ecs-infra) and another managed by the application team (ecs-apps).

The cluster bootstraps with the infrastructure Git repository (ecs-infra) and a path that points to the directory where the artifacts for the site or host (sites/new_york) are stored.

Within git://ecs-infra/sites/new_york there are several artifacts: one points to the common infrastructure artifacts (git://ecs-infra/base), and another points to the site-specific application directory (git://ecs-apps/sites/new_york). This provides a clear demarcation of Git repository ownership between the infrastructure/platform team and the application team.

Flux CD uses the Kustomize Controller, which allows a site-specific directory such as git://ecs-infra/sites/new_york to point to the artifacts in the base directory that are common to all sites. In addition, Kustomize patches can modify specific sections or values of the artifacts in the base directory. The benefit is that the full artifact files do not need to be repeated for every site, which makes the approach much more scalable when the common sections need to change.

Application manifests are kept in a separate Git repository, on the assumption that the application team will want to own it. Within git://ecs-infra/sites/new_york we create another resource that points to the site-specific directory where all the application artifacts are stored (git://ecs-apps/sites/new_york). The artifacts in the site-specific application directory can follow the same structure as the site-specific infrastructure directory, e.g. they can point to the base directory where all the common application artifacts are stored.
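As a minimal sketch of that pattern, a site folder in ecs-apps could contain a kustomization.yaml like the one below. It is hypothetical rather than a copy of the file in the example repository, and it assumes that ecs-apps/base has its own kustomization.yaml listing the shared manifests (Kustomize requires a kustomization file in any directory referenced from resources). The only site-specific entry is goldpinger-helm.yaml, the New York-only Goldpinger application.

```yaml
# Hypothetical sketch: ecs-apps/sites/new_york/kustomization.yaml
# Assumes ../../base contains its own kustomization.yaml.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base             # applications shared by every site
  - goldpinger-helm.yaml   # New York-only application (Goldpinger)
```

Adding a new site then means adding one folder with a file like this, rather than copying the shared manifests.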

The base folder contains the YAML manifests that are applied to every site. It is referenced from the <site_name> folder, as can be seen in the YAML manifest git://ecs-infra/sites/new_york/new_york.yaml:

```yaml
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: ecs-infra
  namespace: default
spec:
  interval: 1m
  url: https://github.com/saqebakhter/ecs-infra-example.git
  ref:
    branch: main
```

The above manifest adds a new Git repository to Flux as a source. This repository is ecs-infra, where the infrastructure-related manifests live.

```yaml
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: ecs-apps
  namespace: default
spec:
  interval: 1m
  url: https://github.com/saqebakhter/ecs-apps-example.git
  ref:
    branch: main
```

The above manifest adds a second Git repository to Flux: ecs-apps, where the business-application manifests live.

```yaml
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: base-infra
  namespace: default
spec:
  interval: 10m
  sourceRef:
    kind: GitRepository
    name: ecs-infra
  path: ./base
  prune: true
  timeout: 1m
```

The above manifest creates a Kustomization resource that uses the ecs-infra GitRepository and loads the YAML manifests from the /base folder of that repository.

```yaml
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: new-york-apps
  namespace: default
spec:
  interval: 10m
  sourceRef:
    kind: GitRepository
    name: ecs-apps
  path: ./sites/new_york
  prune: true
  timeout: 1m
```

The above manifest creates a Kustomization resource that uses the ecs-apps GitRepository and loads the YAML manifests from the git://ecs-apps/sites/new_york folder. The kustomization.yaml file in that folder also references manifests in the git://ecs-apps/base/ folder. Refer to the diagram of the multi-site repository structure above.
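To change a base setting for one site only, that site's file can add spec.patches to its base-infra Kustomization, while every other site keeps the unmodified base. The sketch below assumes a hypothetical London site file (ecs-infra/sites/london/london.yaml) that wants the Weave GitOps dashboard, the ww-gitops HelmRelease in ecs-infra/base, on NodePort 30092 instead of 30091; the file name and port are illustrative. Because the patch only lists spec.values.service.nodePort, the other chart values should be left as defined in base.

```yaml
# Sketch: hypothetical ecs-infra/sites/london/london.yaml
# Same base-infra Kustomization as New York, plus a London-only patch.
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: base-infra
  namespace: default
spec:
  interval: 10m
  sourceRef:
    kind: GitRepository
    name: ecs-infra
  path: ./base
  prune: true
  timeout: 1m
  patches:
    - patch: |-
        apiVersion: helm.toolkit.fluxcd.io/v2beta1
        kind: HelmRelease
        metadata:
          name: ww-gitops
        spec:
          values:
            service:
              nodePort: 30092   # hypothetical London-specific port
      target:
        kind: HelmRelease
        name: ww-gitops
```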

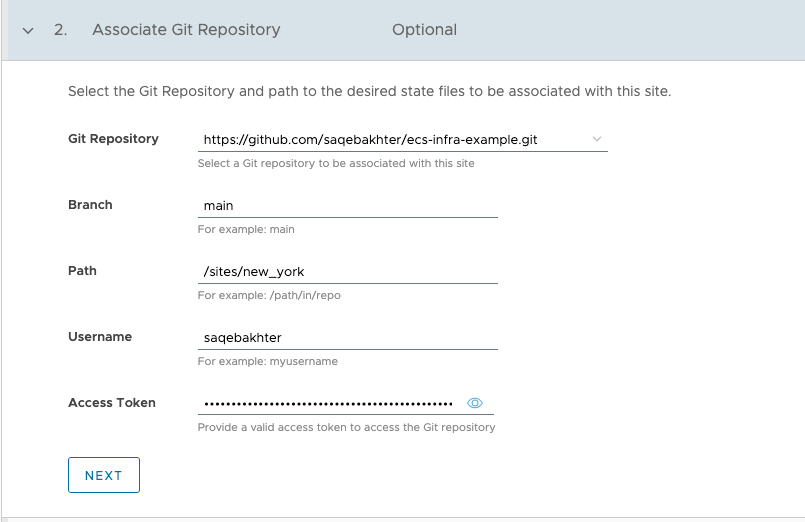

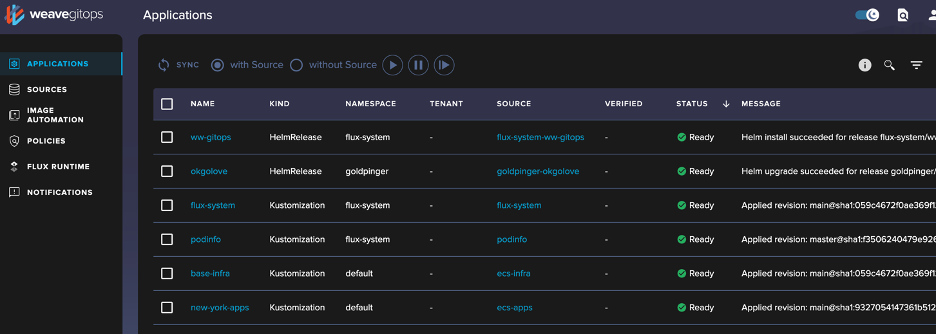

Using and Understanding the Example(s)

To use the examples, reference them directly from an Edge Compute Stack host, or fork them into your own repository. As mentioned in the previous section, the entry point for the examples is ecs-infra-example/sites/new_york/; that is the Git repository path to provide in the Edge Compute Stack service. It would look like this within the Edge Compute Stack service:

This triggers Flux CD to load the manifest file from the ecs-infra/sites/new_york/ folder, which in turn causes all the other manifests it references to be loaded as well. As of this writing, the following applications and configuration settings are deployed:

ecs-infra:

- vROps cAdvisor
- ESXi NTP configuration
- K8s Dashboard
- WeaveWorks Dashboard

ecs-apps:

- Simple container example (client)
- Linux Ubuntu VM (shows how to deploy a VM)
- PodInfo, deployed using Kustomization patches
- Nginx web server
- PodInfo, deployed using Helm values

WeaveWorks

Let’s take a deeper look at a few of the examples to understand exactly how they are deployed; some of them also give useful insight into the Flux environment. We start with WeaveWorks, defined in ecs-infra/base/ww-gitops-dashboard.yaml. The Weave GitOps dashboard is deployed by first adding its Helm repository as a source in Flux and then instructing Flux to install the weave-gitops chart from that Helm repository.

This block of YAML adds the Helm repository:

```yaml
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  annotations:
    metadata.weave.works/description: This is the source location for the Weave GitOps
      Dashboard's helm chart.
  labels:
    app.kubernetes.io/component: ui
    app.kubernetes.io/created-by: weave-gitops-cli
    app.kubernetes.io/name: weave-gitops-dashboard
    app.kubernetes.io/part-of: weave-gitops
  name: ww-gitops
  namespace: flux-system
spec:
  interval: 1h0m0s
  type: oci
  url: oci://ghcr.io/weaveworks/charts
```

This block of YAML installs the Helm chart:

```yaml
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  annotations:
    metadata.weave.works/description: This is the Weave GitOps Dashboard. It provides
      a simple way to get insights into your GitOps workloads.
  name: ww-gitops
  namespace: flux-system
spec:
  chart:
    spec:
      chart: weave-gitops
      sourceRef:
        kind: HelmRepository
        name: ww-gitops
  interval: 1h0m0s
  values:
    service:
      type: NodePort
      nodePort: 30091
    adminUser:
      create: true
      passwordHash: $2a$10$KC8Cv5ReNOmYQmkqKHsAfef09EF66QxR9Mzk281A/mRDAIlFDRcl2
      username: admin
```

Note that with Flux you can define the Helm values up front: instead of supplying Helm values files, you can specify the values directly in the HelmRelease manifest. Above, the service type is set to NodePort on port 30091, and an admin user is created.
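If you would rather keep values in a file-like object, the HelmRelease API can also read them from a ConfigMap or Secret through spec.valuesFrom. A minimal sketch, assuming a hypothetical ConfigMap named ww-gitops-values in the same namespace as the HelmRelease; values set inline under spec.values are merged on top of those loaded through valuesFrom.

```yaml
# Sketch: Helm values supplied from a ConfigMap instead of inline.
# The ConfigMap name "ww-gitops-values" is hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: ww-gitops-values
  namespace: flux-system        # must match the HelmRelease namespace
data:
  values.yaml: |
    service:
      type: NodePort
      nodePort: 30091
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: ww-gitops
  namespace: flux-system
spec:
  interval: 1h0m0s
  chart:
    spec:
      chart: weave-gitops
      sourceRef:
        kind: HelmRepository
        name: ww-gitops
  valuesFrom:
    - kind: ConfigMap
      name: ww-gitops-values
      valuesKey: values.yaml    # the default key, shown explicitly
```

The adminUser settings from the original manifest could stay inline under spec.values, or move into the same ConfigMap.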

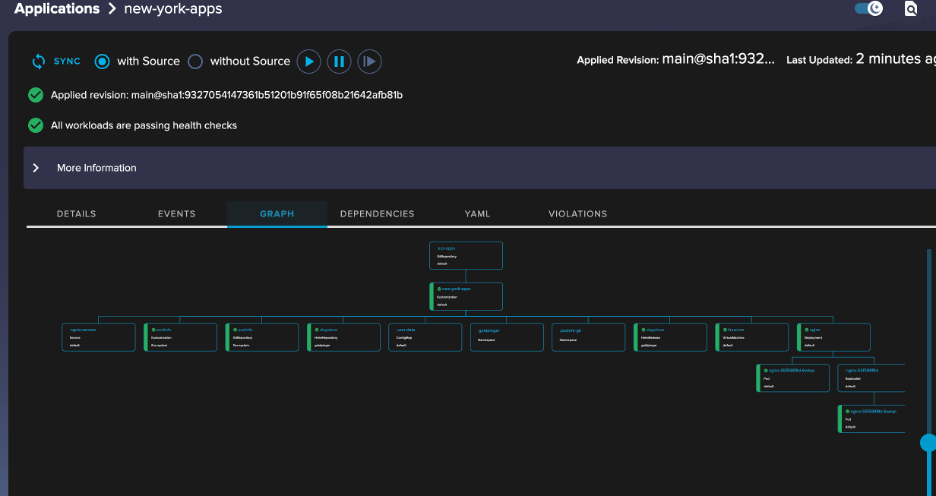

Browsing to that NodePort on the worker node opens the dashboard, where the Flux deployment can be analyzed in detail.

As you drill into the visualization tree, you can see how the different components are interrelated, along with the status of each deployment. The dashboard is a good way to test and validate that your repository structure is working as expected.

PodInfo

The next example is PodInfo, which is deployed as part of the base applications from the ecs-apps repository (git://ecs-apps/base/). For PodInfo, the upstream Git repository is added as a source, along with Kustomization patches that change the service type to NodePort.

Besides deploying Kubernetes manifests from Git repositories, a Flux Kustomization can patch those resources for environment-specific needs. The patches are applied to the rendered manifests before they reach the cluster, so configuration can be adjusted precisely without editing the upstream files.

The PodInfo Kustomization example is split into two files: ecs-apps/base/podinfo-source.yaml and ecs-apps/base/podinfo-kustomization.yaml. The source file defines the Git repository to sync the manifests from, in this case the PodInfo repository:

```yaml
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: GitRepository
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 30s
  ref:
    branch: master
  url: https://github.com/stefanprodan/podinfo
```

The second file defines the path within that repository that Flux builds with Kustomize (./kustomize), along with the patches to apply to the resulting YAML:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: podinfo-git
---
apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 5m0s
  path: ./kustomize
  prune: true
  sourceRef:
    kind: GitRepository
    name: podinfo
  targetNamespace: podinfo-git
  patches:
    - patch: |-
        apiVersion: autoscaling/v2beta2
        kind: HorizontalPodAutoscaler
        metadata:
          name: podinfo
        spec:
          minReplicas: 2
      target:
        name: podinfo
        kind: HorizontalPodAutoscaler
    - patch: |-
        apiVersion: v1
        kind: Service
        metadata:
          name: podinfo
        spec:
          type: NodePort
          ports:
            - name: http
              port: 9898
              protocol: TCP
              targetPort: http
              nodePort: 30098
            - name: grpc
              port: 9999
              protocol: TCP
              targetPort: grpc
              nodePort: 30099
      target:
        name: podinfo
        kind: Service
```

In this case the patches change minReplicas on the HorizontalPodAutoscaler and the service type on the Service from the default values set in the upstream YAML. The upstream kustomization file (https://github.com/stefanprodan/podinfo/blob/master/kustomize/kustomization.yaml) loads three other YAML files: hpa.yaml, deployment.yaml, and service.yaml. Those three manifests are applied to the cluster together with the patches from the Flux Kustomization. The result is a PodInfo deployment in the podinfo-git namespace, with its HTTP port exposed on NodePort 30098 (and gRPC on NodePort 30099).
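The same minReplicas change can also be written as a JSON6902 patch, the style used in the single-site example. The fragment below is a sketch of an equivalent first entry for the podinfo Kustomization's spec.patches list; it assumes the upstream hpa.yaml already defines spec.minReplicas, since a replace operation fails when the target path does not exist.

```yaml
# Sketch: JSON6902 alternative to the strategic-merge HPA patch.
# Fragment of the podinfo Kustomization's spec.patches list.
patches:
  - patch: |
      - op: replace
        path: /spec/minReplicas
        value: 2
    target:
      kind: HorizontalPodAutoscaler
      name: podinfo
```

Strategic-merge patches read like the resource they modify, which suits multi-field changes such as the Service ports; JSON6902 patches address a single field by path, which suits one-value changes.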

Goldpinger

Another example application is Goldpinger, which is part of the New York-specific applications (git://ecs-apps/sites/new_york). Goldpinger is deployed from a Helm chart, with the service type configured as NodePort.

The Helm Controller in Flux CD complements Kustomization by managing Helm chart releases declaratively. It handles automated updates, rollbacks, and custom configurations of Helm charts via Kubernetes manifests, streamlining the deployment process.

Together, the Kustomize and Helm controllers in the Flux CD toolkit support a GitOps methodology in which cluster state is version-controlled, consistent, and applied automatically from Git. This makes complex systems more reliable and easier to maintain.

The example is a single file, git://ecs-apps/sites/new_york/goldpinger-helm.yaml, containing several YAML manifests: the first part creates a namespace and defines the Helm repository as a source for Flux, and the second part deploys the chart itself.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: goldpinger
---
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: okgolove
  namespace: goldpinger
spec:
  interval: 1m0s
  url: https://okgolove.github.io/helm-charts/
```

The YAML above creates a new namespace called goldpinger and defines the Helm repository. The YAML below deploys the Helm chart and sets the service type to NodePort. More detailed documentation of the Flux Helm Controller can be found here: https://fluxcd.io/flux/use-cases/helm/

```yaml
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: okgolove
  namespace: goldpinger
spec:
  interval: 10m
  timeout: 5m
  chart:
    spec:
      chart: goldpinger
      sourceRef:
        kind: HelmRepository
        name: okgolove
      interval: 5m
  releaseName: goldpinger
  install:
    remediation:
      retries: 3
  upgrade:
    remediation:
      retries: 3
  values:
    namespace: goldpinger
    service:
      type: NodePort
```
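Because the HelmRelease above sets no chart version, Flux uses the latest chart version available in the Helm repository and upgrades the release when a newer one is published. To bound those automated updates, chart.spec.version accepts a SemVer range. The fragment below is a sketch; the range "5.x" is purely illustrative and not taken from the example repository, so check the chart's published versions before choosing one.

```yaml
# Sketch: pin the Goldpinger chart to a SemVer range
# (fragment of the HelmRelease spec). "5.x" is illustrative only.
spec:
  chart:
    spec:
      chart: goldpinger
      version: "5.x"        # hypothetical range; upgrades stay within it
      sourceRef:
        kind: HelmRepository
        name: okgolove
      interval: 5m
```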

Once you've set up a repository structure, it becomes a framework that allows you to expand by adding additional site folders. These folders can then reference base folders where common components and applications are stored. This setup is particularly useful because it enables you to share resources across all sites, ensuring consistency and efficiency in development.

Moreover, this structure also provides flexibility in terms of configurations. You can tailor configurations to specific sites, allowing for site-specific settings and adjustments. This level of customization ensures that each site can have its unique configuration while still benefiting from shared components and applications.

Additionally, you can opt for a different organizational approach by focusing on staging versus production environments rather than individual sites. This shift in perspective can streamline development and deployment processes, making it easier to manage different versions of your applications and configurations.
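As a sketch of that alternative, a cluster could be bootstrapped against an environment folder instead of a site folder. The folder names ./environments/staging and ./environments/production are hypothetical and do not exist in the example repositories; the rest mirrors the new-york-apps Kustomization from the multi-site example.

```yaml
# Sketch: environment-oriented entry point (folder names are hypothetical).
# A production cluster would use the same manifest with
# path: ./environments/production
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: staging-apps
  namespace: default
spec:
  interval: 10m
  sourceRef:
    kind: GitRepository
    name: ecs-apps
  path: ./environments/staging
  prune: true
  timeout: 1m
```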

The integration of Helm and Kustomization controllers in Flux further enhances this flexibility. These controllers allow you to make granular adjustments to your deployments. For example, you can easily modify parameters such as the number of replicas or the type of service based on the specific requirements of each environment, whether it's staging or production. This level of control ensures that your applications are configured optimally for each environment, contributing to a smoother development and deployment workflow.
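For example, a production overlay could raise the replica count and stop exposing a NodePort. The sketch below assumes a hypothetical HelmRelease named podinfo-helm for the podinfo chart in ecs-apps/base (the actual name in the example repository may differ) and a base folder with its own kustomization.yaml; replicaCount and service.type are values exposed by the podinfo Helm chart.

```yaml
# Hypothetical sketch: ecs-apps/environments/production/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../base
patches:
  - patch: |-
      apiVersion: helm.toolkit.fluxcd.io/v2beta1
      kind: HelmRelease
      metadata:
        name: podinfo-helm
      spec:
        values:
          replicaCount: 3       # more replicas in production
          service:
            type: ClusterIP     # no NodePort exposure in production
    target:
      kind: HelmRelease
      name: podinfo-helm
```

A staging overlay would reference the same base and either omit the patch or set smaller values, so both environments share one set of manifests.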