Working with vPAC services requires multiple networks to monitor the incoming traffic. Real-time workloads, such as vPR, should be connected directly to dedicated host ports, while non-real-time applications, such as vAC, can benefit from the security and flexibility of a software-defined network.

VMware has extensive capabilities for network security with its VeloCloud SD-WAN products, such as VeloCloud Orchestrator and VeloCloud Edge. Zero-trust models can be constructed using network segmentation, built-in firewalls can be deployed and configured with great flexibility, and intrusion detection and prevention can detect, deny, and quarantine bad actors. Advanced threat monitoring, anti-virus, and vulnerability detection are also available at all levels of the compute stack.

Refer to Offensive Security for in-depth descriptions of the capabilities.

For vPAC Ready Infrastructure, the topology of the software-defined landscape must be carefully defined for power utilities. Currently, achieving the highest possible performance, particularly for protection applications, requires direct VM access to the industry-protocol data streams.

There are several routes by which traffic can be directed into virtualized infrastructure. Two of these methods can deliver packets directly from server Network Interface Cards (NICs) to applications:

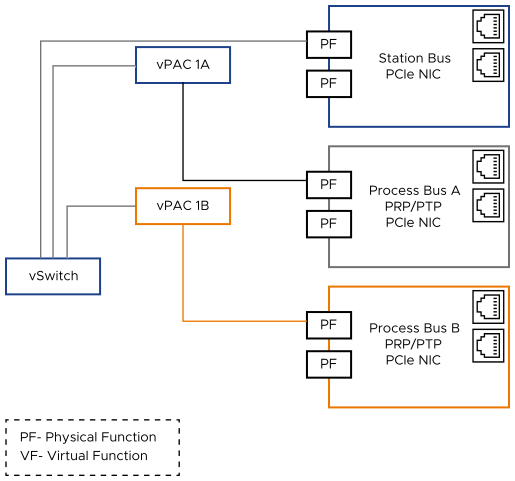

PCI Passthrough: Direct access to hardware devices from a software-defined application. This is the method which the vPAC Ready Infrastructure validated solution used for the applications being tested (ABB SSC600 SW and Kalkitech VPR).

Figure 1. NIC PCI Pass-Through

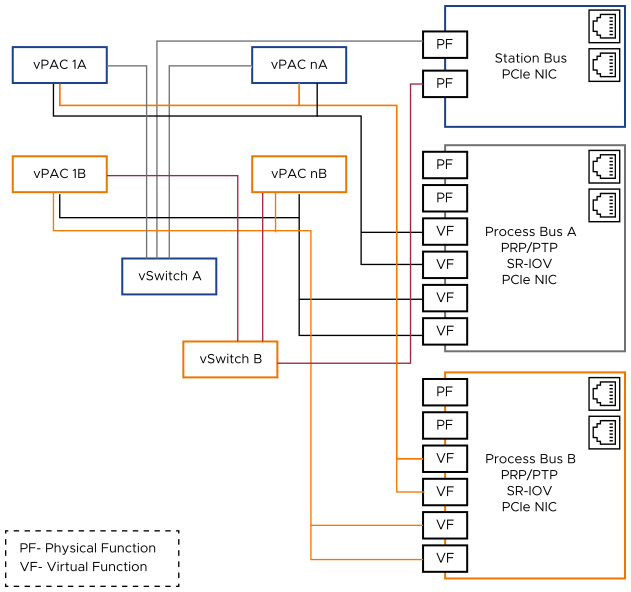

SR-IOV: Single Root I/O Virtualization is an extension of the PCIe specification that allows separate access to Physical Functions (PFs) and Virtual Functions (VFs). VFs appear to software-defined applications as independent, directly accessible devices that leverage resources on board the NIC.

Figure 2. NIC SR-IOV

These direct networking methods are used for ingress or egress traffic for two reasons: to deliver traffic predictably and with low latency to the supported applications, and to deliver industry-specific protocols that might not currently be supported by the infrastructure’s virtual switches.

Traffic is expected to be carried on different networks, separated either physically (separate Local Area Networks, LANs) or virtually (Virtual LANs, VLANs). For a utility, these are split into two: a station bus and a process bus (both defined in Glossary of Terms).

As shown in the preceding diagrams, the station bus, which carries less critical traffic, is routed through vSwitches. The protocols making up this traffic likely include routable types. Process bus traffic typically consists exclusively of the non-routable portions of the IEC 61850 standard and related protocols (for example, IEC 61869-9).
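The split between routable station bus traffic and non-routable process bus traffic can be illustrated at the frame level. The following is a minimal sketch, not part of the validated solution: it reads the EtherType of a raw Ethernet frame (skipping an optional 802.1Q VLAN tag) and reports whether the payload is a Layer 2-only protocol such as GOOSE, Sampled Values, or PTP over Ethernet, or a routable IP protocol. The function name `classify_frame` and the sample frame are assumptions made for illustration.

```python
import struct

# Registered EtherType values.
ETHERTYPE_VLAN = 0x8100   # IEEE 802.1Q VLAN tag
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
ETHERTYPE_GOOSE = 0x88B8  # IEC 61850 GOOSE
ETHERTYPE_SV = 0x88BA     # IEC 61850 Sampled Values
ETHERTYPE_PTP = 0x88F7    # IEEE 1588 PTP over Ethernet

LAYER2_ONLY = {ETHERTYPE_GOOSE: "GOOSE", ETHERTYPE_SV: "SV", ETHERTYPE_PTP: "PTP"}
ROUTABLE = {ETHERTYPE_IPV4: "IPv4", ETHERTYPE_IPV6: "IPv6"}


def classify_frame(frame: bytes):
    """Return (vlan_id, protocol, routable) for a raw Ethernet frame.

    vlan_id is None for untagged frames; routable is None when the
    EtherType is not one of the protocols listed above.
    """
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    vlan_id = None
    if ethertype == ETHERTYPE_VLAN:
        if len(frame) < 18:
            raise ValueError("truncated 802.1Q tag")
        tci, ethertype = struct.unpack_from("!HH", frame, 14)
        vlan_id = tci & 0x0FFF  # low 12 bits of the tag control information
    if ethertype in LAYER2_ONLY:
        return vlan_id, LAYER2_ONLY[ethertype], False
    if ethertype in ROUTABLE:
        return vlan_id, ROUTABLE[ethertype], True
    return vlan_id, f"0x{ethertype:04X}", None


# Illustrative frame: zeroed MAC addresses, VLAN 100 with priority 4, SV payload.
sv_frame = bytes(12) + struct.pack("!HHH", 0x8100, (4 << 13) | 100, 0x88BA) + bytes(46)
print(classify_frame(sv_frame))  # (100, 'SV', False)
```

A check like this only looks at headers. It says nothing about how a vSwitch or a passthrough NIC handles the frame, which remains a property of the infrastructure.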

When infrastructure is combined (station bus + process bus), or when time-sensitive process bus traffic is extended beyond the local LANs (for example, through tunneling or by using R-GOOSE or R-SV per IEC 61850-90-5), security outside the scope of the virtual environment might be desirable. IEC 62351-6 defines measures to secure IEC 61850 communications, and multiple vendors already offer symmetric security key solutions. Note, however, that the NERC CIP-005 standards provide a special exemption for this type of traffic, so any extra layer of security is at the discretion of the individual entity.

All vPAC workloads are deployed to a vSphere cluster and, if compatible, use VLAN-backed port groups. Standard vSwitches are used in the reference architecture, but for improved management and isolation of workloads, distributed vSwitches are recommended for station bus and northbound traffic.

The following table details physical networking configuration design decisions.

| Decision ID | Design Decision | Design Justification | Design Implication |
|---|---|---|---|
| VPAC-PNET-001 | Combined or segregated station bus (L3 traffic) and process bus (L2 traffic). | Administration of segregated networks might prove simpler procedurally for some organizations. However, combined networks can still leverage VLANs to filter and direct traffic. | Separated station and process buses might require additional hardware (switches and NICs) with more host connections. Redundancy choices must be made separately for each network. |
| VPAC-PNET-002 | Process bus network interface cards passthrough/SR-IOV VM connections. | NICs supporting industry standard PRP and PTP protocols must be utilized for each vPAC VM to ensure consistent real-time performance. | A specialty NIC for each VM must be procured, to deliver process bus communications carrying PRP and PTP capabilities. |
| VPAC-PNET-003 | PTP distribution through local process bus traffic or through routed station bus in a Wide Area Network (WAN) configuration. | PTP delivered by a local clock is simpler to implement, but less resilient and less efficient when compared to WAN. Dispersing multiple grandmasters off-premises provides greater redundancy (leveraging PTP’s BMCA). | Local NIC connections at the host must facilitate PTP for real-time workloads and VMware sync agent. PTP WAN might not be feasible for organizations without high-speed networking between end points requiring PTP, and specific protocol support (for example, power profile) must be enabled at each connection point. |
| VPAC-PNET-004 | Management network redundancy, for remote maintenance and vSAN/vMotion connections, if used. | Prevent network outages for singular cable or NIC hardware failures. | At least two separate physical NIC ports must be assigned to the Management vSwitch to enable network redundancy of the management traffic. |
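Decision VPAC-PNET-003 relies on PTP's Best Master Clock Algorithm (BMCA) to choose among multiple grandmasters. As a rough illustration, the sketch below compares announced clock attributes in the order IEEE 1588 uses for grandmaster selection (priority1, clockClass, clockAccuracy, offsetScaledLogVariance, priority2, then clockIdentity as the tie-breaker), with lower values winning. It deliberately omits the topology part of the data set comparison (steps removed and port identities). The clock names and attribute values are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Grandmaster:
    name: str              # hypothetical label, not part of PTP
    priority1: int
    clock_class: int       # 6 = locked to a primary reference, 7 = holdover
    clock_accuracy: int
    variance: int          # offsetScaledLogVariance
    priority2: int
    clock_identity: bytes  # 8-byte identity, final tie-breaker

    def rank(self):
        # Lower tuples win; the field order mirrors the BMCA comparison order.
        return (self.priority1, self.clock_class, self.clock_accuracy,
                self.variance, self.priority2, self.clock_identity)


def best_master(candidates):
    """Return the candidate that a simplified BMCA would select."""
    return min(candidates, key=Grandmaster.rank)


local = Grandmaster("local", 128, 6, 0x21, 0x4E5D, 127,
                    bytes.fromhex("0000000000000001"))
remote = Grandmaster("remote-wan", 128, 6, 0x21, 0x4E5D, 128,
                     bytes.fromhex("0000000000000002"))

print(best_master([local, remote]).name)     # local

# If the local clock loses its reference and falls back to holdover,
# the off-premises grandmaster takes over.
holdover = replace(local, clock_class=7)
print(best_master([holdover, remote]).name)  # remote-wan
```

The second call shows the redundancy argument in the table: a degraded local clock announces a worse clockClass, so a healthy remote grandmaster is preferred without manual intervention.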

PRP and PTP-compatible NICs are used to facilitate industry standard protocols within the VVS reference architecture, and to achieve the highest performance with the lowest network latency. A few commercially available and tested PRP/PTP NICs are listed in the following table.

| Manufacturer | Part Number | PTP Power Profile Support |
|---|---|---|
| TT Tech | Flexibilis (FRC), 13205 | Yes |
| Welotech (SOC-e based) | HSR-PRP PCIe Card | Yes |
| Relyum (SOC-e based) | RELY-SYNC-HSR/PRP-PCIe | Yes |
| Advantech | ECU-P1522 | No |
| Advantech | ECU-P1524SPE/P1524PE | No |

Additional NICs that are more commonly available today are based on the Ethernet controllers indicated in Common Ethernet Controllers Supporting IEEE 1588 PTP. These cards are not likely to feature PRP, which might not be necessary for all deployments, particularly if any critical VMs requiring network redundancy have the built-in ability to act as a Doubly Attached Node (DAN).
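To make the role of a PRP DAN more concrete: a sender transmits every frame on both LAN A and LAN B with a sequence number in the redundancy trailer, and the receiver delivers the first copy and discards the second. The following is a simplified sketch of that duplicate-discard step, assuming a bounded table keyed by source MAC address and 16-bit sequence number. Real implementations also age entries by time, which is omitted here. The class name and the sample MAC address are hypothetical.

```python
from collections import OrderedDict


class DuplicateDiscard:
    """Simplified PRP-style duplicate discard keyed by (source MAC, sequence)."""

    def __init__(self, window: int = 1024):
        self.window = window        # maximum number of remembered frames
        self._seen = OrderedDict()  # insertion-ordered set of keys

    def accept(self, source_mac: str, sequence: int) -> bool:
        """Return True for the first copy of a frame, False for its duplicate."""
        key = (source_mac, sequence & 0xFFFF)  # sequence numbers are 16 bits
        if key in self._seen:
            return False
        self._seen[key] = True
        if len(self._seen) > self.window:
            self._seen.popitem(last=False)     # forget the oldest entry
        return True


# Copies of frames 7 and 8 arrive over both LANs in interleaved order.
arrivals = [("A", 7), ("B", 7), ("B", 8), ("A", 8)]
table = DuplicateDiscard()
delivered = [seq for _lan, seq in arrivals if table.accept("00:11:22:33:44:55", seq)]
print(delivered)  # [7, 8]
```

Whether this logic runs on a specialty NIC or inside the VM is the trade-off the paragraph above describes: a VM with built-in DAN capability can perform it itself over two ordinary ports.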

You must evaluate each NIC before use to ensure that it meets the requirements of the environment. This includes both the physical surroundings (such as temperature and ESD protection) and the architectural requirements (such as SFP compatibility, bandwidth capabilities, and specific protocol features).

Ethernet controllers known to support VMware ESXi PTP time provider VM are also noted here.

| General Ethernet Controllers w/IEEE 1588 Support | +ESXi PTP Time Synchronization |
|---|---|
| Intel-based chipsets | |
| i210 | - |
| i350 (+ SR-IOV) | - |
| x550 (+ SR-IOV) | - |
| x710 (+ SR-IOV) | - |
| e810 (+ SR-IOV) | Yes |

Specific requirements for vMotion and vSAN management networks can be found in the VMware ECS Overview documentation. For the two-node cluster represented in VVS Reference Architecture, the following network considerations are made:

- An ESXi NIC compatible with ESXi PTP time synchronization (Intel x710 Ethernet controller).
- ESXi NIC PF ports for management and vSAN/vMotion that support at least 10 Gbps and connect the local hosts back-to-back on physically separate network cards.
- NICs 1 and 2 feature PRP DAN and PTP power profile protocol support.
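The considerations above, together with decision VPAC-PNET-004, can be expressed as a simple pre-deployment check. The sketch below is illustrative only: the `Port` record, the role names, and the sample inventory are hypothetical, and "physically separate network cards" is interpreted here as the management and vSAN/vMotion ports spanning at least two cards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Port:
    name: str          # hypothetical port label, for example "vmnic0"
    card: str          # physical card the port belongs to
    speed_gbps: float
    role: str          # "management", "vsan-vmotion", or "process-bus"


def check_host(ports):
    """Return a list of problems found in a host's port inventory."""
    problems = []
    management = [p for p in ports if p.role == "management"]
    if len(management) < 2:
        problems.append("management vSwitch needs at least two physical NIC ports")
    backbone = [p for p in ports if p.role in ("management", "vsan-vmotion")]
    for port in backbone:
        if port.speed_gbps < 10:
            problems.append(f"{port.name} is slower than 10 Gbps")
    if len({p.card for p in backbone}) < 2:
        problems.append("management and vSAN/vMotion ports share a single card")
    return problems


inventory = [
    Port("vmnic0", "card-1", 10, "management"),
    Port("vmnic1", "card-2", 10, "management"),
    Port("vmnic2", "card-1", 25, "vsan-vmotion"),
    Port("vmnic3", "card-3", 1, "process-bus"),
]
print(check_host(inventory))  # []
```

An empty list means none of the three checks failed. Process bus ports are ignored here because their requirements (PRP and PTP power profile support) depend on the NIC model rather than on speed or placement.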