A container encapsulates an application in a form that is portable and easy to deploy. Containers can run without changes on any compatible system, in any public or private cloud, in a traditional enterprise computing environment, or on an edge computing device.

Containers consume resources efficiently, enabling high density and resource utilization. Although containers can be used with almost any application, they are frequently associated with microservices, in which multiple containers run separate application components or services. The containers that make up microservices are typically coordinated and managed using a container orchestration platform, such as Kubernetes.

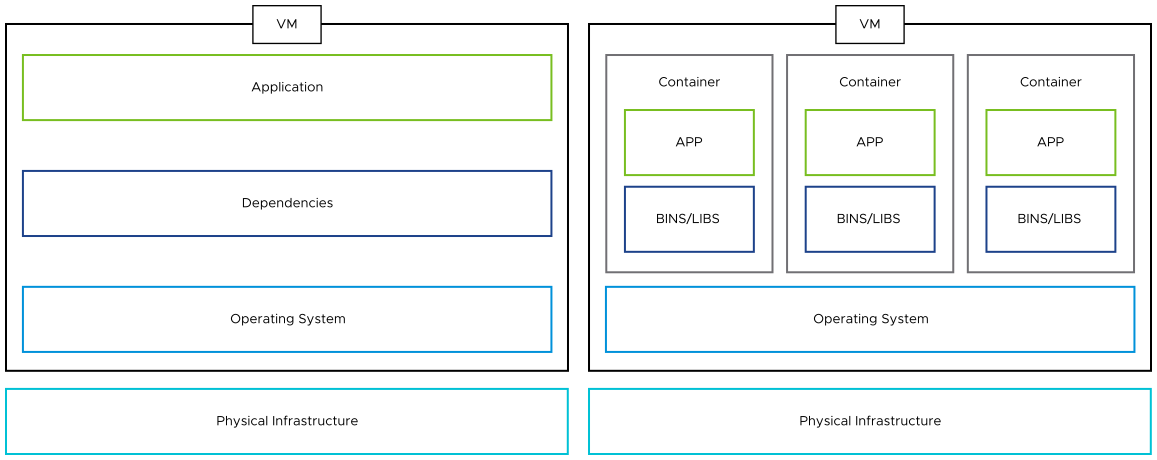

Containers and Virtual Machines

At the simplest level, the difference between a VM and a container is that every VM runs a full or partial instance of an operating system, whereas multiple containers share a single operating system instance. A container is a lightweight, standalone, executable package that, together with the host system, provides everything needed to run an application, such as code, runtime, system tools, system libraries, and settings. This enables multiple containerized applications to run independently on a single host system. Because multiple containers can run inside a VM, the benefits of both approaches can be combined.

Running multiple containers inside lightweight VMs increases security and isolation. The VM adds an infrastructure-level boundary around its containers, which limits how far an attacker can reach if a service is compromised.

Therefore, when containers run within a VM, how they are used and which container runtime is chosen become less critical to overall isolation.

Container Runtime

A container runtime is the software that configures and manages the components required to run containers, making it easier to run containers securely and deploy them efficiently. A container is not a first-class object in the Linux kernel. Instead, it is a combination of several kernel features: namespaces (which control what a process can see), cgroups (which limit how much CPU and memory it can use), and Linux Security Modules (LSMs, such as SELinux or AppArmor).
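To make the cgroup part concrete: on a host using cgroup v2, a container's CPU and memory limits are exposed as plain files, typically `cpu.max` and `memory.max` under `/sys/fs/cgroup` when viewed from inside the container. The following is a minimal sketch, assuming cgroup v2 (cgroup v1 uses different files), that converts their contents into CPU cores and bytes; the function names are illustrative.

```python
import math
from pathlib import Path


def parse_cpu_max(text):
    """Convert cgroup v2 'cpu.max' content ("$QUOTA $PERIOD") to CPU cores."""
    quota, period = text.split()
    if quota == "max":
        return math.inf  # no CPU limit configured
    return int(quota) / int(period)  # e.g. "50000 100000" -> 0.5 cores


def parse_memory_max(text):
    """Convert cgroup v2 'memory.max' content (bytes or "max") to bytes."""
    value = text.strip()
    return math.inf if value == "max" else int(value)


def read_limits(cgroup_dir="/sys/fs/cgroup"):
    """Read the limits of the cgroup at cgroup_dir (Linux with cgroup v2 only)."""
    base = Path(cgroup_dir)
    return {
        "cpu_cores": parse_cpu_max((base / "cpu.max").read_text()),
        "memory_bytes": parse_memory_max((base / "memory.max").read_text()),
    }
```

A result of `inf` for either value means no limit was set for that resource.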

Open Container Initiative (OCI) runtimes are low-level runtimes responsible for configuring the kernel resources needed to run a container; runc is a common example. Instead of relying only on native kernel constructs for isolation, some OCI runtimes use sandboxing (for example, gVisor) or virtualization (for example, Kata Containers) to create stricter separation between the container and its host.
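A low-level OCI runtime such as runc reads its instructions from a `config.json` file in the container's bundle directory. The sketch below builds a deliberately incomplete configuration to show how namespaces and cgroup limits are expressed. The field names follow the OCI runtime specification, but a real bundle needs more (for example, mounts such as `/proc` and a hostname), and `runc spec` generates a fuller template. The argument values are placeholders.

```python
import json


def minimal_oci_config(args, memory_limit_bytes, cpu_quota_us, cpu_period_us=100_000):
    """Build an illustrative (incomplete) OCI runtime config as a dict."""
    return {
        "ociVersion": "1.0.2",
        "root": {"path": "rootfs", "readonly": True},
        "process": {
            "args": list(args),
            "cwd": "/",
            "user": {"uid": 1000, "gid": 1000},  # non-root UID inside the container
            "noNewPrivileges": True,  # exec'd binaries (e.g. setuid) cannot gain privileges
        },
        "linux": {
            # A new namespace of each listed type isolates the process from the host.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
            # cgroup limits: memory in bytes, CPU as quota per period (microseconds).
            "resources": {
                "memory": {"limit": memory_limit_bytes},
                "cpu": {"quota": cpu_quota_us, "period": cpu_period_us},
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sleep", "60"], 256 * 1024 * 1024, 50_000), indent=2))
```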

Another type of runtime implements the Container Runtime Interface (CRI). These high-level runtimes, such as containerd and CRI-O, give orchestrators like Kubernetes a uniform way to manage the container lifecycle and monitor running containers.

Containers, like VMs, provide a consistent runtime environment for processes and isolate processes from each other and from the underlying operating system. However, unlike VMs, which each run a separate OS, containers generally share the host’s OS kernel and rely on kernel namespaces and control groups (cgroups) for isolation and resource management. This soft isolation is configurable, but nothing about it inherently guarantees isolation; its strength depends on how each container is configured. Administrators must be aware of the level of privilege each container has on the server it runs on, and of the resulting risk of an escape from a compromised container to its hosting server and the rest of the cluster.

Pod

Pods are the smallest deployable units of computing that can be created and managed in Kubernetes.

A pod is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A pod's contents are always co-located and co-scheduled, and run in a shared context. A pod models an application-specific logical host: it contains one or more application containers that are tightly coupled.
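As an illustration, the following sketch builds a two-container pod manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The containers share an `emptyDir` volume and, because they share the pod's network namespace, could also reach each other on `localhost`. The pod name, container names, images, and paths are hypothetical.

```python
import json

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-log-shipper"},
    "spec": {
        # Pod-level volume: every container that mounts it sees the same directory.
        "volumes": [{"name": "shared-logs", "emptyDir": {}}],
        "containers": [
            {
                "name": "web",
                "image": "nginx:1.25",
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/var/log/nginx"}],
            },
            {
                "name": "log-shipper",
                "image": "busybox:1.36",
                # Follows the log file the web container writes to the shared volume.
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "shared-logs", "mountPath": "/logs"}],
            },
        ],
    },
}

if __name__ == "__main__":
    print(json.dumps(pod, indent=2))
```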

Common Pod Configurations and Associated Risks

Privileged containers: Although they might run in separate namespaces, these containers have all the capabilities of a root user and full access to the host’s kernel and devices. In terms of security, they do not offer isolation and must be treated as privileged host processes.

Run as user: Unless the container image or the pod specification sets a different user, processes in containers run as the root user (user ID 0), which, unless user namespaces are in use, is also the root user on the host. Although namespace and cgroup isolation still apply to those processes, the risk of container escape through an exploited vulnerability is much higher for processes running as root. Running a container as root, while very easy to do, is almost always a misconfiguration.

Capabilities: A privileged container has all the capabilities provided by the Linux kernel, and a container running as root still receives the container runtime's default set of capabilities. Often, a container requires only a specific capability, such as CAP_NET_ADMIN for configuring the networking subsystem. It is better to run the container as a non-root user, drop all capabilities, and add back only the ones it requires, if any.

Host namespace: In general, each container should run in its own network, Process ID (PID), and Inter-Process Communication (IPC) namespaces. However, containers can be configured to share one or more of these namespaces with the host. Although occasionally useful, this configuration increases the chance of container escape and lateral movement. For example, in Kubernetes, containers running in the host’s network namespace generally cannot be isolated using network policies.

Lack of resource quotas: By default, there is no limit on the amount of CPU or memory that processes in a container can use. Without explicit limits, a compromised (or simply misbehaving) container can consume all of the host’s resources, starving processes in other containers and on the host itself, and disrupting the stability of the host and, in some cases, the entire cluster.
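Each of the configurations above can be checked mechanically. The following is a minimal sketch that operates on a pod manifest already parsed into a Python dict and flags the listed risks. The function name and finding messages are illustrative; a real checker would also inspect init containers, other workload types such as Deployments and DaemonSets, and many more fields.

```python
def audit_pod(pod):
    """Return human-readable findings for risky settings in a Pod manifest dict."""
    findings = []
    spec = pod.get("spec", {})
    # Sharing host namespaces weakens isolation (and, for hostNetwork, network policy).
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            findings.append(f"pod: {field} shares the host namespace")
    pod_sc = spec.get("securityContext", {})
    for c in spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        sc = c.get("securityContext", {})
        if sc.get("privileged"):
            findings.append(f"{name}: privileged container")
        # Container-level settings override pod-level ones.
        uid = sc.get("runAsUser", pod_sc.get("runAsUser"))
        non_root = sc.get("runAsNonRoot", pod_sc.get("runAsNonRoot"))
        # With no explicit user, the image's default user (often root) applies.
        if uid == 0 or (uid is None and not non_root):
            findings.append(f"{name}: may run as root")
        added = sc.get("capabilities", {}).get("add", [])
        if added:
            findings.append(f"{name}: adds capabilities {', '.join(sorted(added))}")
        limits = c.get("resources", {}).get("limits", {})
        for resource in ("cpu", "memory"):
            if resource not in limits:
                findings.append(f"{name}: no {resource} limit")
    return findings
```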

Enforcing Best Practices and Policies

PodSecurityPolicy is a resource type offered by Kubernetes to enforce policies and best practices for new pods in a cluster. Most rules in these policies concern the pod’s runtime attributes, such as running privileged, sharing the host’s namespaces, and adding Linux capabilities. Other rules address the volumes mounted to the pod. (PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25; its built-in successor is Pod Security Admission.)

PodSecurityPolicy can also be used to define more secure defaults for new pods, such as preventing privilege escalation or turning off some default capabilities. When applying these kinds of rules, the PodSecurityPolicy validates and mutates the pod’s configuration.
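The validate-and-mutate behavior can be sketched as two steps: fill in hardened defaults wherever the pod left a field unset, then reject whatever remains non-compliant. This loosely mirrors the intent of PodSecurityPolicy fields such as `defaultAllowPrivilegeEscalation` and `requiredDropCapabilities`; it is a hypothetical illustration, not how the PodSecurityPolicy admission controller is implemented.

```python
import copy


def apply_secure_defaults(pod):
    """Mutate step: return a copy of the pod with hardened defaults for unset fields."""
    mutated = copy.deepcopy(pod)  # never modify the caller's object
    for c in mutated.get("spec", {}).get("containers", []):
        sc = c.setdefault("securityContext", {})
        sc.setdefault("allowPrivilegeEscalation", False)  # only if not set explicitly
        sc.setdefault("capabilities", {}).setdefault("drop", ["ALL"])
    return mutated


def validate(pod):
    """Validate step: return (allowed, reason)."""
    for c in pod.get("spec", {}).get("containers", []):
        sc = c.get("securityContext", {})
        if sc.get("privileged"):
            return False, f"container {c.get('name')!r} is privileged"
        if sc.get("allowPrivilegeEscalation", True):
            return False, f"container {c.get('name')!r} allows privilege escalation"
    return True, ""


def admit(pod):
    """Mutate first, then validate the mutated pod."""
    mutated = apply_secure_defaults(pod)
    allowed, reason = validate(mutated)
    return allowed, reason, mutated
```

Mutating before validating means a pod that simply omits a setting is accepted with the secure default, while a pod that explicitly requests something unsafe is still rejected.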

Although the PodSecurityPolicy is a powerful tool that is offered natively in Kubernetes, it has some drawbacks that limit its adoption:

It only applies to pods. It does not cover other kinds of resources such as services, nodes, or roles.

Scope is hard to define. The only way to define which policies apply to a pod is through Role-Based Access Control (RBAC), by granting the user or service account that creates the pod permission to use a given policy.

Pod rejection events can be hard to find and understand.

There is no audit-only mode. There is no way to test which pod might fail a policy check before actually applying that policy and re-creating the pods.

There is no way to add new rules other than updating Kubernetes itself.

To overcome the limitations of PodSecurityPolicy, Kubernetes supports custom admission control through validating admission webhooks. In this approach, one or more webhook servers (known as admission controllers) are defined that approve or reject Kubernetes API calls. The Kubernetes API server is configured to send an admission request to each of these servers for some or all incoming API calls. If every webhook approves the request, the API call proceeds; otherwise, it is rejected.
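A validating webhook is an HTTPS endpoint that receives an `AdmissionReview` object from the API server and returns one whose `response` echoes the request's `uid` and sets `allowed`. The decision logic can be sketched as a pure function; serving it over TLS and registering it with a `ValidatingWebhookConfiguration` are omitted. The privileged-container rule and the `api_server_decision` helper, which only simulates the all-must-approve behavior described above, are illustrative assumptions.

```python
def review_pod(admission_review):
    """Return an admission.k8s.io/v1 AdmissionReview response for a Pod request."""
    request = admission_review["request"]
    pod = request.get("object") or {}
    privileged = [
        c.get("name", "<unnamed>")
        for c in pod.get("spec", {}).get("containers", [])
        if c.get("securityContext", {}).get("privileged")
    ]
    # The response must carry the same uid as the request it answers.
    response = {"uid": request["uid"], "allowed": not privileged}
    if privileged:
        # status.message is reported back to the client whose call was rejected.
        response["status"] = {
            "code": 403,
            "message": "privileged containers are not allowed: " + ", ".join(privileged),
        }
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}


def api_server_decision(reviews):
    """Simulation only: the API call proceeds only if every webhook allowed it."""
    return all(r["response"]["allowed"] for r in reviews)
```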

A validating admission webhook configured to enforce workload security best practices, combined with a tight RBAC policy, is the best way to minimize the security and operational risks introduced to a cluster through the Kubernetes API.

Securing Container Network Communications

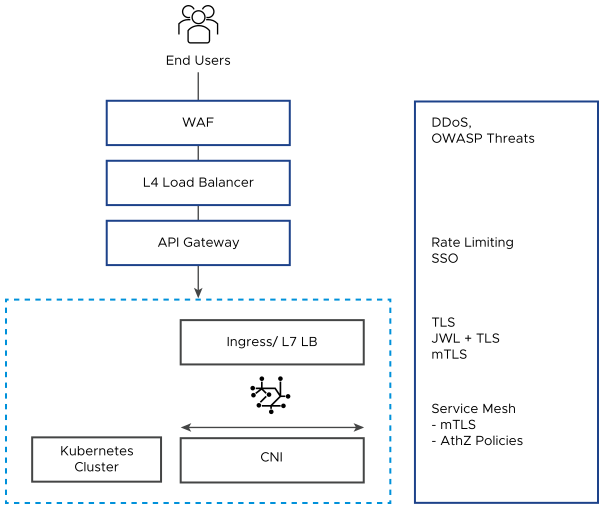

External access to an application running on a Kubernetes cluster is gained by exposing it through a Service of type NodePort or LoadBalancer, through the Ingress API, or through an external API gateway or Ingress gateway.

The basic security configuration provided by the Kubernetes Ingress API uses Transport Layer Security (TLS) to secure ingress traffic and assumes that TLS is terminated at the Ingress. As described in the Kubernetes documentation, an Ingress is secured by referencing a Secret that contains a TLS private key and certificate. The Secret (of type kubernetes.io/tls) must contain keys named tls.crt and tls.key holding the certificate and private key to use for TLS. Referencing this Secret in the Ingress tells the Ingress controller to secure communication from the client to the load balancer using TLS. The certificate used to create the Secret must identify the host by its Fully Qualified Domain Name (FQDN), traditionally in the Common Name (CN); modern clients also expect the FQDN in the certificate's Subject Alternative Name (SAN) extension.
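The Secret and Ingress described above can be sketched as Python dicts that serialize to valid manifests. The resource names, host, and backend service are hypothetical placeholders, and the PEM bytes would come from your own certificate and key files.

```python
import base64


def tls_secret(name, cert_pem, key_pem):
    """Secret of type kubernetes.io/tls; values under 'data' must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "kubernetes.io/tls",
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


def tls_ingress(name, host, secret_name, service_name, service_port):
    """Ingress that terminates TLS for `host` using the named Secret."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # The host listed here should match the FQDN in the certificate.
            "tls": [{"hosts": [host], "secretName": secret_name}],
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service_name,
                                            "port": {"number": service_port}}},
                }]},
            }],
        },
    }
```

In practice, `kubectl create secret tls example-tls --cert=tls.crt --key=tls.key` produces an equivalent Secret without hand-encoding the data.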

Depending on the Ingress controller used, other TLS modes are also supported, including mutual TLS (mTLS) and SSL passthrough, in which encrypted traffic is forwarded to the backend without being terminated at the Ingress. Because TLS requires certificates, it is important to consider options that let users manage these certificates easily and automatically, such as cert-manager, a Kubernetes-native controller that automatically provisions and renews TLS certificates for services.

Implementing Container Authentication and Authorization

Apart from traffic encryption, incoming requests must also be authenticated and authorized based on the end user before access to a service or API is granted. Various methods are supported, depending on the Ingress controller used or, in some cases, through API or Ingress gateways. These methods include Basic Auth, API keys, Open Authorization (OAuth), and OpenID Connect (OIDC).
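As an example of the simplest of these methods, the sketch below checks an HTTP Basic Auth `Authorization` header, whose value is `Basic ` followed by the base64 encoding of `user:password`. It compares against a plaintext credential for brevity; real Ingress controllers typically store hashed credentials (for example, in an htpasswd-format Secret). Because base64 is an encoding rather than encryption, Basic Auth should only be used over TLS. The function name is hypothetical.

```python
import base64
import binascii
import hmac


def check_basic_auth(header, expected_user, expected_password):
    """Return True if an 'Authorization: Basic ...' value matches the credentials."""
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return False
    try:
        decoded = base64.b64decode(encoded, validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False  # malformed header
    user, sep, password = decoded.partition(":")
    if not sep:
        return False  # no "user:password" separator
    # compare_digest avoids leaking how many leading characters matched.
    user_ok = hmac.compare_digest(user.encode(), expected_user.encode())
    pass_ok = hmac.compare_digest(password.encode(), expected_password.encode())
    return user_ok and pass_ok
```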

Configuring Ingress Security

Additional security-related features can be configured at the Ingress layer. Some of these features are included in advanced Ingress controllers, while others might require a separate gateway, such as an API gateway. Examples include a Web Application Firewall (WAF) and rate limiting.
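Rate limiting is often implemented with a token bucket: a bucket refills at a fixed rate up to a maximum burst size, and each request spends one token. The sketch below shows the algorithm for a single bucket. It is a generic illustration, not the algorithm of any particular Ingress controller; a real deployment would keep one bucket per client key (such as source IP or API key) and share state across replicas. The injectable `clock` parameter exists only to make the sketch testable.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilled at `rate` tokens/second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller would typically answer HTTP 429 Too Many Requests
```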

Depending on the Ingress controller or API gateway implementation, where each security function runs, and in what order, can vary. Some implementations, such as VMware NSX Advanced Load Balancer, consolidate some or all of these functions in a single ingress solution.

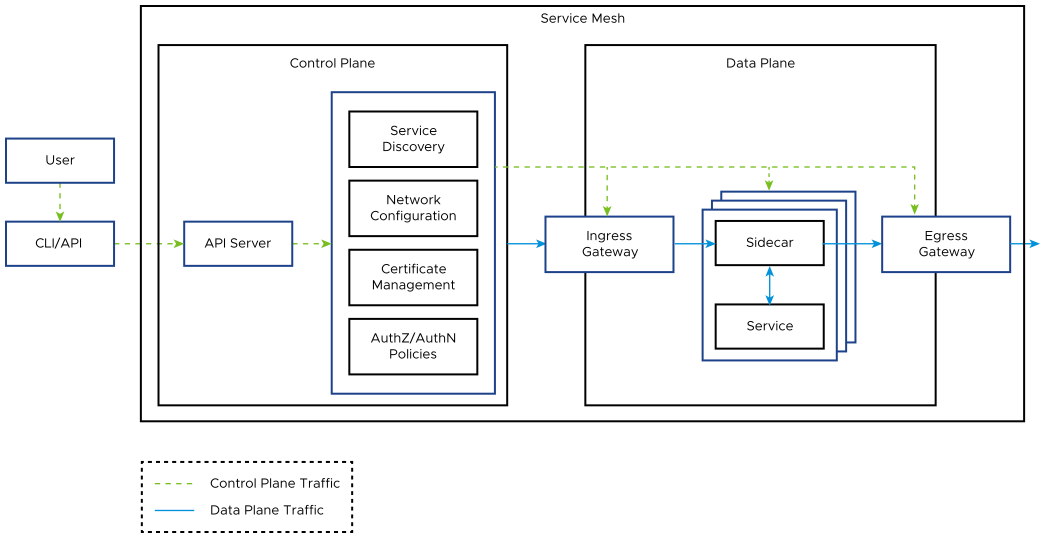

Managing and Securing Traffic between Container Microservices

With a microservices-based architecture, securing communication between the services within an application becomes as important as securing ingress traffic, particularly because these containers can be deployed across multiple clusters and clouds. This is where a service mesh comes in.

A service mesh is a platform layer that provides applications with features such as service discovery, resilience, observability, and security, without requiring developers to integrate these features into their code. These features are abstracted away from developers by an architecture in which all communication between services passes through a sidecar proxy deployed alongside each service; together, these proxies form the service mesh. An example of a service mesh solution is VMware Tanzu Service Mesh. In most service mesh implementations, a centralized control plane manages and configures the sidecars for traffic routing and policy enforcement. In Kubernetes, the sidecar is typically injected alongside the application container automatically (for example, by a mutating admission webhook), transparently to the user.

A service mesh provides secure connectivity by encrypting the traffic between services. It also manages authentication and authorization of service communications at scale. Authentication policies are provided at two levels: service-to-service authentication (mTLS) and request authentication (at ingress).
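In a mesh, the sidecar performs mTLS on the application's behalf, but the mechanism is ordinary TLS with a certificate required on both sides. The sketch below uses Python's standard `ssl` module to show the settings that make TLS mutual: the server demands a client certificate, and each side verifies the other against a trusted certificate authority. The function names are hypothetical and the file paths passed to `load_workload_identity` are placeholders; in a service mesh, the control plane typically issues and rotates these certificates automatically.

```python
import ssl


def mtls_server_context():
    """Server side: require and verify a client certificate (the 'mutual' part)."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED  # reject peers without a valid certificate
    return ctx


def mtls_client_context():
    """Client side: PROTOCOL_TLS_CLIENT verifies the server cert and hostname by default."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def load_workload_identity(ctx, cert_file, key_file, ca_file):
    """Attach this workload's certificate and the CA used to verify its peers."""
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)  # presented to the peer
    ctx.load_verify_locations(cafile=ca_file)  # trust anchor for the peer's certificate
    return ctx
```

Both contexts need `load_workload_identity` before use; the client presents its own certificate only because one was loaded, and the server refuses the handshake if none arrives.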