This section provides information about configuring the ESXi cluster.

The following ESXi cluster features are used:

vSphere Distributed Resource Scheduler (DRS) - Disabled

Normally DRS is very desirable for vSAN since it provides initial placement assistance of VMs and load balances the environment when there is an imbalance. It also migrates virtual machines to their correct site according to the VM or host affinity rules.

vSphere High Availability (HA) - Enabled

Host Monitoring - Enabled

Failure conditions and responses:

Host failure - Restart VMs (restart VMs using VM restart priority ordering)

Proactive HA - Disabled (because DRS is disabled).

Host Isolation - Disabled (VMs on isolated hosts remain powered on).

Datastore with Permanent Device Loss - Power off and restart VMs (Datastore protection enabled, always attempt to restart VMs).

Datastore with All Paths Down - Power off and restart VMs (Datastore protection enabled, ensure resources are available before restarting VMs).

Guest not heartbeating - Reset VMs (VM monitoring enabled, VMs reset).

vSAN Services - Enabled

Deduplication and compression - Enabled (reduces the data stored on the physical disks; deduplication and compression work only on all-flash disk groups).

Encryption - Disabled (data-at-rest encryption).

Performance Service - Enabled (the performance service collects and analyzes statistics and displays the data in a graphical format).

vSAN iSCSI Target Service - Disabled (allows the exposure of vSAN storage capacity to hosts outside of this cluster).

File Service - Disabled (allows a vSphere admin to provision a file share from their vSAN cluster; the file share can be accessed using NFS).

Virtual Machine Configuration for Performance Testing

Because the vPAC PoC applications require a real-time operating system, they run inside a virtual machine on VMware Photon OS with the real-time kernel.

When assigning resources to real-time applications, do not overcommit memory or CPU on the ESXi hosts.

Test Virtual Machines (VMs) configuration:

Four VMs each with:

Four vCPUs

16 GB RAM

40 GB disk using vSAN storage or local storage
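The no-overcommit requirement can be checked with simple arithmetic before deploying the test VMs. The following is a minimal sketch: `fits_without_overcommit` is a hypothetical helper, and the host capacities (32 physical cores, 256 GB RAM) are placeholder assumptions, not values from this test environment.

```shell
# Succeeds when vm_count * per_vm does not exceed the host capacity.
# Hypothetical helper; all three arguments are plain integers.
fits_without_overcommit() {
    vm_count=$1
    per_vm=$2
    host_capacity=$3
    [ $((vm_count * per_vm)) -le "$host_capacity" ]
}

# Four test VMs with four vCPUs and 16 GB RAM each.
# The host figures below are assumed placeholders; use your host's values.
fits_without_overcommit 4 4 32 && echo "vCPUs fit without overcommit"
fits_without_overcommit 4 16 256 && echo "memory fits without overcommit"
```

Leave additional headroom for the hypervisor itself when you choose the host capacity figures.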

The following software was installed on the VMs:

Photon 5.0 (x86_64) – Kernel 6.1.75-1.ph5-rt

VMware Tools version 11296

Cyclictest v2.7

Update Photon to the latest real-time kernel version:

Log in to the VM OS as root and enter the following commands at the command prompt:

tdnf update libsolv

tdnf update linux-rt

Reboot the VM twice in succession.

Keep Photon OS updated to the latest kernel version, because optimizations that can improve latency are made frequently.
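After the reboots, you can confirm that the guest is running a real-time kernel. The sketch below assumes that Photon real-time kernel release strings end in `-rt`, as in the `6.1.75-1.ph5-rt` version listed above; `is_rt_kernel` is a hypothetical helper name.

```shell
# Succeeds when the given kernel release string ends in "-rt".
# Assumption: Photon real-time kernels use this suffix.
is_rt_kernel() {
    case "$1" in
        *-rt) return 0 ;;
        *)    return 1 ;;
    esac
}

# Check the kernel that is currently running.
if is_rt_kernel "$(uname -r)"; then
    echo "Real-time kernel is running: $(uname -r)"
else
    echo "Not a real-time kernel: $(uname -r)"
fi
```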

The following settings are changed to provide the best performance for VMs. These settings can be changed in ESXi or vSphere Client from the VM Edit Settings menu (when the VM is powered off).

Configure vCPUs / Cores per Socket / Number of Sockets:

Go to , select 1 for Cores per Socket, and click OK.

Deactivate logging on the VM:

Go to , deselect Enable logging, and click OK.

Enable backing up guest vRAM:

Go to , click Edit Configuration, and click Add Configuration Parameters.

sched.cpu.affinity.exclusiveNoStats="TRUE"

monitor.forceEnableMPTI="TRUE"

timeTracker.lowLatency="TRUE"

Click OK.
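If you have shell access to a copy of the VM's .vmx file, you can check that the parameters were saved. This is a sketch only: `vmx_has_param` is a hypothetical helper, it assumes the usual `key = "value"` line format of .vmx files, and the file path in the usage comment is a placeholder.

```shell
# Succeeds when the .vmx file contains: key = "value"
# Matching is case-insensitive; whitespace around "=" is optional.
vmx_has_param() {
    vmx_file=$1
    key=$2
    value=$3
    # Escape the dots in the key so they match literally in the regex.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')
    grep -Eiq "^[[:space:]]*${key_re}[[:space:]]*=[[:space:]]*\"${value}\"" "$vmx_file"
}

# Usage (placeholder path, substitute your own):
#   for key in sched.cpu.affinity.exclusiveNoStats \
#              monitor.forceEnableMPTI timeTracker.lowLatency; do
#       vmx_has_param /path/to/test-vm.vmx "$key" TRUE || echo "missing: $key"
#   done
```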

Set VM Latency Sensitivity to High:

Set CPU Reservation: Go to , enter the number of MHz to reserve, and click OK.

Set Memory Reservation: Go to , select the Reserve all guest memory (All locked) checkbox, and click OK.

Set Latency Sensitivity: Go to , select High, and click OK.

Note: Setting the VM Latency Sensitivity to High removes major sources of extra latency imposed by the virtualization layer, which achieves low response time and low jitter. This setting provides exclusive access to physical resources to avoid resource contention due to sharing.

The Latency Sensitivity setting works together with the CPU and memory reservations. The CPU Reservation reserves the appropriate amount of MHz so that the ESXi scheduler can determine the best processors on which to run the workload. In effect, the ESXi scheduler pins the CPUs for the user, so additional CPU Scheduling Affinity is not required. The Memory Reservation ensures that all guest memory allocated for the VM and workload is always available.
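As a rough illustration of the CPU reservation arithmetic, the sketch below assumes a full reservation, that is, the number of vCPUs multiplied by the nominal frequency of one physical core. `cpu_reservation_mhz` is a hypothetical helper, and the 2400 MHz value is a placeholder; read the actual core frequency from your host.

```shell
# Prints the MHz to reserve for a full CPU reservation.
# Assumption: reservation = vCPU count * nominal core frequency in MHz.
cpu_reservation_mhz() {
    vcpus=$1
    core_mhz=$2
    echo $((vcpus * core_mhz))
}

# Four vCPUs (as in the test VMs) on an assumed 2400 MHz core.
cpu_reservation_mhz 4 2400
```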

Within the Linux guest OS, Tuned is a profile-based system tuning tool that offers predefined profiles for common use cases such as high throughput, power saving, or low latency.

Update Tuned:

Log in to the VM as root user.

To start Tuned, run this command: systemctl start tuned

To enable Tuned to start every time the machine boots, run this command: systemctl enable tuned

To update, run these commands:

rpm -qa tuned

tdnf update tuned

Isolate vCPUs to run the workload or Cyclictest using Tuned:

Edit the following file with vi: vi /etc/tuned/realtime-variables.conf

Follow the examples provided and add the following line: isolated_cores=1

Save the changes and close the file.
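The same edit can be scripted instead of made in vi. The following is a minimal sketch: `set_isolated_cores` is a hypothetical helper that removes any existing uncommented `isolated_cores=` line and appends the new value. The path in the usage comment assumes the variables file that Tuned ships for its realtime profile.

```shell
# Sets isolated_cores=<cores> in the given Tuned variables file.
# Commented example lines in the file are left untouched.
set_isolated_cores() {
    conf_file=$1
    cores=$2
    tmp_file=$(mktemp) || return 1
    # Keep every line except an existing uncommented isolated_cores entry.
    grep -v '^[[:space:]]*isolated_cores=' "$conf_file" > "$tmp_file" || true
    printf 'isolated_cores=%s\n' "$cores" >> "$tmp_file"
    # Write back in place so file ownership and permissions are preserved.
    cat "$tmp_file" > "$conf_file" && rm -f "$tmp_file"
}

# Usage (run as root; path is an assumption, adjust if yours differs):
#   set_isolated_cores /etc/tuned/realtime-variables.conf 1
```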

Note: Isolating vCPUs is different from pinning CPUs.

Pinning CPUs tells the scheduler to use specific physical CPUs on the host for a particular VM (performed with ESXi configuration noted earlier).

Isolating vCPUs tells the VM to use specific vCPUs for the workload, which leaves the remaining vCPUs for the guest OS to utilize.

Use Tuned to set the OS profile to real-time:

To view the available installed profiles, run this command: tuned-adm list

To view the currently activated profile, run this command: tuned-adm active

To select or activate a profile, run this command: tuned-adm profile realtime

To verify that the real-time profile is active, run this command: tuned-adm active

To reboot the guest OS, run this command: reboot

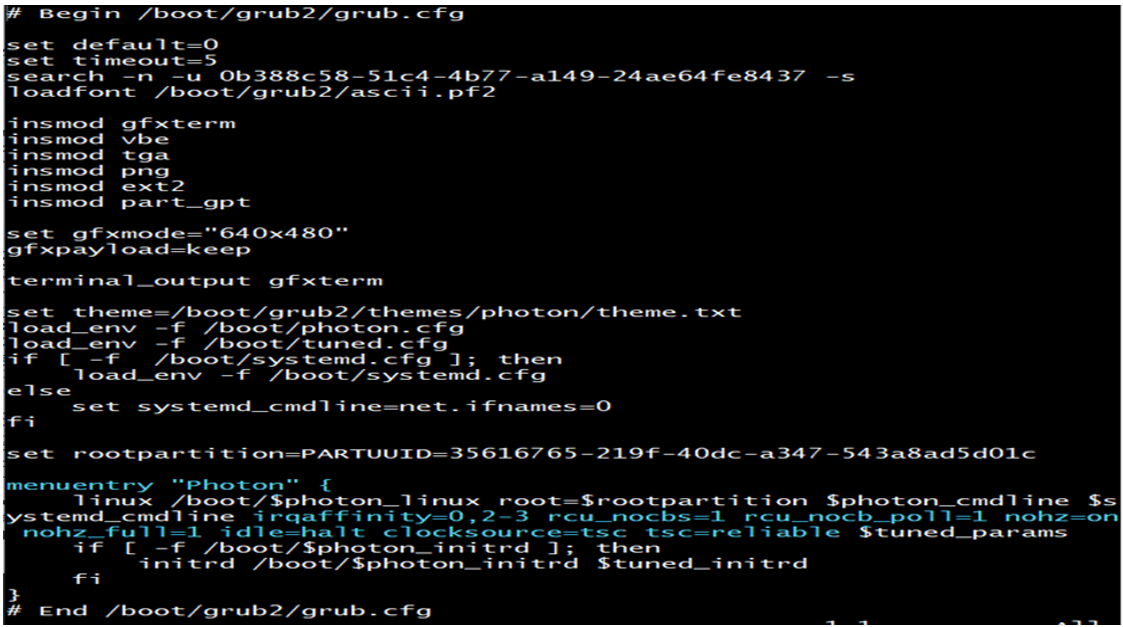
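The verification step can also be scripted. This sketch assumes that `tuned-adm active` prints a line of the form `Current active profile: <name>`; `profile_is_active` is a hypothetical helper that reads that output on standard input.

```shell
# Succeeds when the tuned-adm output on stdin names the expected profile.
profile_is_active() {
    expected=$1
    # Strip the label, then require an exact, literal match of the name.
    sed -n 's/^Current active profile: //p' | grep -qxF "$expected"
}

# Usage (substitute the profile name you activated):
#   tuned-adm active | profile_is_active realtime && echo "profile is active"
```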

Additional parameters to set for Photon OS within the grub.cfg file:

Edit the file using vi: vi /boot/grub2/grub.cfg

Add the following parameters on the menu entry line:

Table 1. Photon OS Grub.cfg File Tuning Parameters

| Parameters | Example | Comments |
| --- | --- | --- |
| irqaffinity=<list of non-isolated vCPUs> | irqaffinity=0,2-3 | Interrupt affinity – steer interrupts away from isolated vCPUs |
| rcu_nocbs=<list of isolated vCPUs> | rcu_nocbs=1 | RCU callbacks – steer movable OS overhead away from isolated CPUs |
| rcu_nocb_poll=1 | rcu_nocb_poll=1 | RCU callbacks – steer movable OS overhead away from isolated CPUs |
| nohz=on | nohz=on | Eliminating periodic timer – avoid periodic timer interrupts |
| nohz_full=<list of isolated vCPUs> | nohz_full=1 | Eliminating periodic timer – avoid periodic timer interrupts |
| idle=halt | idle=halt | CPU idle – Prevent CPUs from going into deep sleep states |
| clocksource=tsc | clocksource=tsc | Timer skew detection |
| tsc=reliable | tsc=reliable | Timer skew detection |

Save changes and reboot.

Figure 1. Grub.cfg File

The grub.cfg file has a single menu entry line for the Photon OS, as shown in the following image (identified in blue text), and is kept independent of the kernel RPM. Any changes made to this file remain valid and applicable, even if the kernel version is changed or updated. The parameters must be appended to the same line, without introducing any new line characters.

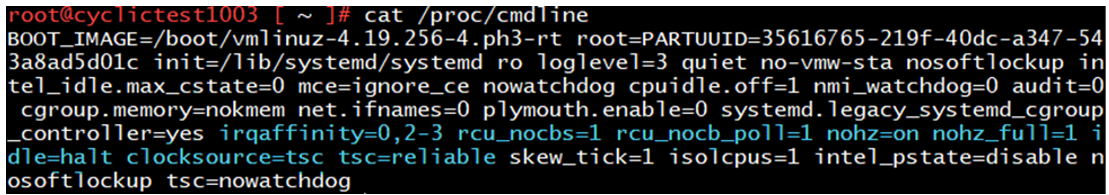
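Because the parameters must sit on one line, it can help to generate the string first and then paste it onto the menu entry line. The sketch below builds that string from the Table 1 parameters; `rt_boot_params` is a hypothetical helper, and the vCPU lists in the example call are the table's example values.

```shell
# Prints the Table 1 parameters as a single space-separated line.
#   $1 = isolated vCPU list (for example "1")
#   $2 = non-isolated vCPU list (for example "0,2-3")
rt_boot_params() {
    isolated=$1
    housekeeping=$2
    printf '%s\n' "irqaffinity=$housekeeping rcu_nocbs=$isolated rcu_nocb_poll=1 nohz=on nohz_full=$isolated idle=halt clocksource=tsc tsc=reliable"
}

# Example for a four-vCPU VM with vCPU 1 isolated.
rt_boot_params 1 0,2-3
```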

Verify that the variables are added:

Figure 2. Verify Variables Added with cat /proc/cmdline
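Instead of reading the /proc/cmdline output by eye, you can check it with a short script. The following is a sketch: `missing_boot_params` is a hypothetical helper that prints every expected parameter that is absent from the given kernel command line, and the parameter values in the usage comment are the example values from Table 1.

```shell
# Prints the expected parameters that are missing from the command line.
#   $1 = kernel command line, remaining arguments = expected parameters
missing_boot_params() {
    cmdline=$1
    shift
    missing=""
    for param in "$@"; do
        case " $cmdline " in
            *" $param "*) ;;                    # present as a whole word
            *) missing="$missing $param" ;;     # not found
        esac
    done
    # Drop the leading space; prints an empty line when nothing is missing.
    printf '%s\n' "${missing# }"
}

# Usage inside the guest after the reboot:
#   missing_boot_params "$(cat /proc/cmdline)" irqaffinity=0,2-3 rcu_nocbs=1 \
#       rcu_nocb_poll=1 nohz=on nohz_full=1 idle=halt clocksource=tsc tsc=reliable
```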

Precision Time Protocol

Follow these instructions to configure time synchronization between VMs and a centralized clock source using a PTP host.

The PTP and NTP services cannot run simultaneously. To enable PTP, ensure that the NTP service is disabled first. Additionally, when the PTP service is enabled, the manual time configuration becomes inactive.

Change the NTP and PTP service status on the host manually:

In the vSphere Client home page, go to

Select a host.

On the Configure tab, select .

Change the status of the NTP or PTP service.

Table 2. NTP/PTP Service Stop and Start

| Option | Description |
| --- | --- |
| Change the NTP service status | Select NTP Daemon and click Stop. |
| Change the PTP service status | Select PTP Daemon and click Start. |

Use PTP servers for time and date synchronization of a host:

From the vSphere Client home page, go to

Select a host.

On the Configure tab, select .

In the Precision Time Protocol pane, click Edit.

In the Edit Precision Time Protocol dialog box, edit the precision time protocol settings.

Select Enable.

From the Network interface drop-down menu, select a network interface.

Click OK.

Go to , select Synchronize time periodically, and click OK.

ESXi includes PTP configuration enhancements that enable more accurate time synchronization throughout the stack (see this blog post for details).