VMware Greenplum is a massively parallel processing (MPP) database server designed to manage large-scale analytic data warehouses and business intelligence workloads. Apache Spark is a fast, general-purpose computing system for distributed, large-scale data processing.

The VMware Greenplum Connector for Apache Spark (the Connector) provides high-speed, parallel data transfer between Greenplum Database and Apache Spark clusters to support:

- Interactive data analysis

- In-memory analytics processing

- Batch ETL

- Continuous ETL pipeline (streaming)

Architecture

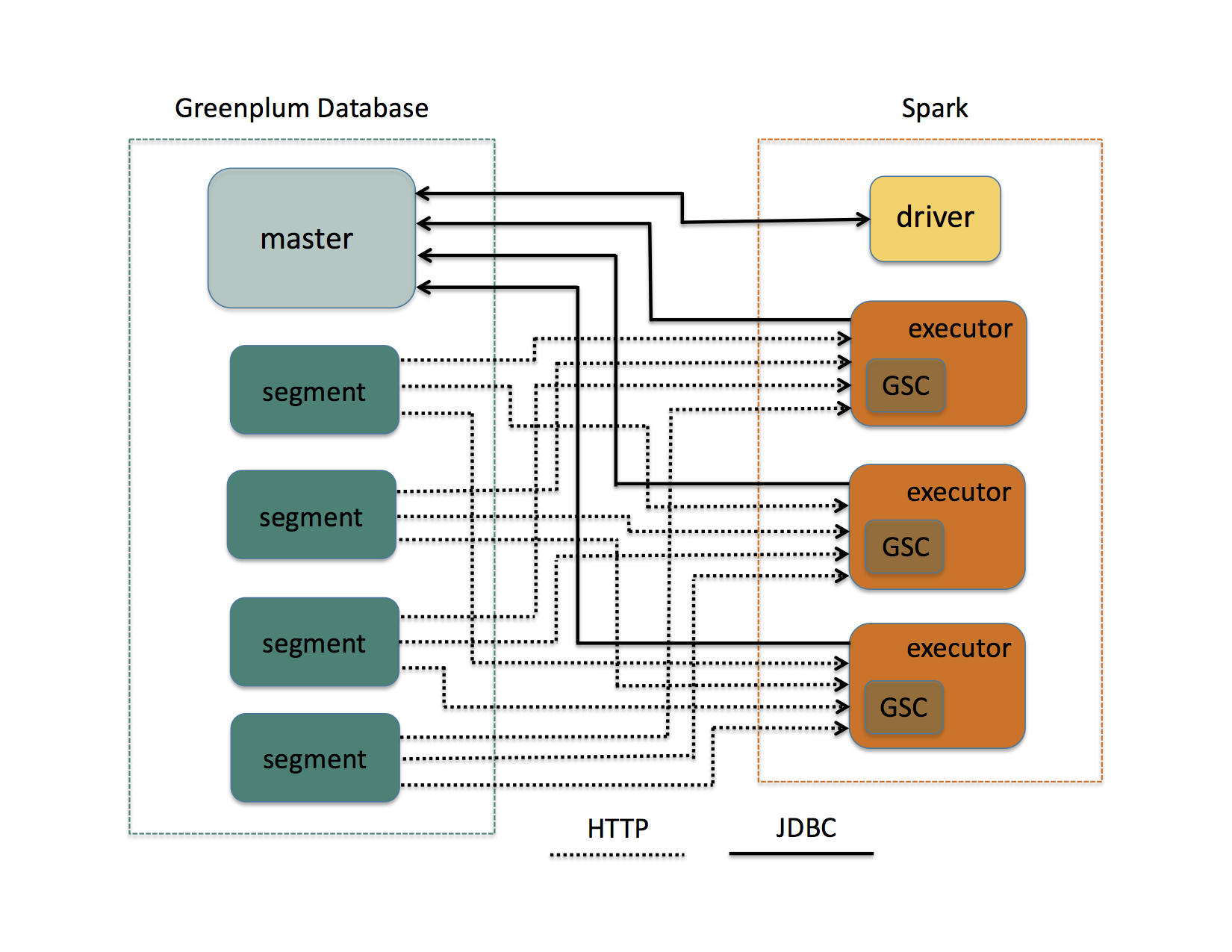

A Spark application consists of a driver program and executor processes running on worker nodes in your Spark cluster. When an application uses the Connector to load a Greenplum Database table into Spark, the driver program initiates communication with the Greenplum Database master node via JDBC to request metadata. This metadata helps the Connector determine where the table data is stored in Greenplum Database, and how to divide the data and the work efficiently among the available Spark worker nodes.
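To make the driver-side setup concrete, the following is a minimal sketch of how an application might assemble the settings it passes to the Connector. The helper `build_read_options` and all host, database, and table names are hypothetical. The option keys (`url`, `dbschema`, `dbtable`, `partitionColumn`) and the `greenplum` format name are assumptions about the Connector's read interface and should be checked against the Connector reference before use.

```python
def build_read_options(host, port, database, schema, table,
                       user, password, partition_column):
    """Collect the settings a Spark driver would hand to the Connector.

    The key names below are assumptions, not a confirmed API.
    """
    return {
        # JDBC URL the driver uses to reach the Greenplum master node
        "url": f"jdbc:postgresql://{host}:{port}/{database}",
        "user": user,
        "password": password,
        "dbschema": schema,
        "dbtable": table,
        # Column whose values decide how rows map to Spark partitions
        "partitionColumn": partition_column,
    }


# Hypothetical values for illustration only
opts = build_read_options(
    host="gpmaster.example.com", port=5432, database="testdb",
    schema="public", table="orders",
    user="gpuser", password="changeme", partition_column="order_id",
)

# With a live SparkSession named `spark` and the Connector JAR on the
# classpath, the load would look roughly like this (not executed here):
# df = spark.read.format("greenplum").options(**opts).load()
```

Keeping the options in a plain dictionary makes it easy to reuse the same connection settings across several table loads.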

Greenplum Database stores table data across segments. A Spark application using the Connector to load a Greenplum Database table identifies a specific table column as a partition column. The Connector uses the data values in this column to assign specific table data rows on each Greenplum Database segment to one or more Spark partitions.
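The mapping from partition column values to Spark partitions can be illustrated with a small, self-contained sketch. It assumes an integer partition column and a simple equal-width range split. This is an illustrative scheme, not necessarily the algorithm the Connector uses, and the function names are hypothetical.

```python
from collections import defaultdict


def partition_bounds(min_value, max_value, num_partitions):
    """Split the integer range [min_value, max_value] into contiguous
    half-open ranges [lo, hi), one per Spark partition."""
    span = max_value - min_value + 1
    step = -(-span // num_partitions)  # ceiling division
    bounds = []
    lo = min_value
    for _ in range(num_partitions):
        hi = min(lo + step, max_value + 1)
        bounds.append((lo, hi))
        lo = hi
    return bounds


def assign_rows(rows_by_segment, column, num_partitions):
    """Map the rows held on each segment to Spark partitions by column value.

    Returns a dict keyed by (segment_id, partition_id).
    """
    values = [row[column] for rows in rows_by_segment.values() for row in rows]
    bounds = partition_bounds(min(values), max(values), num_partitions)
    assignment = defaultdict(list)
    for segment_id, rows in rows_by_segment.items():
        for row in rows:
            for partition_id, (lo, hi) in enumerate(bounds):
                if lo <= row[column] < hi:
                    assignment[(segment_id, partition_id)].append(row)
                    break
    return dict(assignment)


# Two segments, each holding part of the table (made-up data)
rows_by_segment = {
    0: [{"id": 1}, {"id": 6}],
    1: [{"id": 3}, {"id": 8}],
}
assignment = assign_rows(rows_by_segment, column="id", num_partitions=2)
```

In this example, each segment contributes rows to both Spark partitions, which mirrors the statement that rows on a segment can be assigned to one or more partitions.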

Within a Spark worker node, each application launches its own executor process. The executor of an application using the Connector spawns a task for each Spark partition. A read task communicates with the Greenplum Database master via JDBC to create and populate an external temporary table with the data rows managed by its Spark partition. Each Greenplum Database segment then transfers this table data via HTTP directly to its Spark task. This communication occurs across all segments in parallel.
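The parallel transfer step can be modeled with a toy simulation. In the sketch below, threads stand in for Greenplum Database segments, and a function call stands in for the HTTP transfer. All names are hypothetical and no Greenplum or Spark APIs are involved. It only shows the shape of the flow: one read task per Spark partition, fed by every segment concurrently.

```python
from concurrent.futures import ThreadPoolExecutor


def segment_send(segment_rows, lo, hi, column):
    """Stand-in for one segment sending the rows that belong to a
    Spark partition covering column values in [lo, hi)."""
    return [row for row in segment_rows if lo <= row[column] < hi]


def read_task(segments, lo, hi, column="id"):
    """Stand-in for one Spark read task: receive matching rows from
    all segments, with the segments working in parallel."""
    with ThreadPoolExecutor(max_workers=max(1, len(segments))) as pool:
        futures = [
            pool.submit(segment_send, rows, lo, hi, column)
            for rows in segments
        ]
        # Collect results in segment order so the output is deterministic
        return [row for future in futures for row in future.result()]


# Made-up table data spread over two segments
segments = [
    [{"id": 1}, {"id": 6}],
    [{"id": 3}, {"id": 8}],
]
partition_0 = read_task(segments, lo=1, hi=5)
partition_1 = read_task(segments, lo=5, hi=9)
```

Because every segment serves its share of a partition directly, no single node has to funnel the whole table, which is the point of the architecture described above.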

Figure: Connector Architecture