Each Tanzu Greenplum Text/Apache Solr node is a Java Virtual Machine (JVM) process and is allocated memory at startup. The maximum amount of memory the JVM will use is set with the -Xmx parameter on the Java command line. Performance problems and out of memory failures can occur when the nodes have insufficient memory.

Other performance problems can result from resource contention between the Greenplum Database, Solr, and ZooKeeper clusters.

This topic discusses Tanzu Greenplum Text use cases that stress Solr JVM memory in different ways and the best practices for preventing or alleviating performance problems from insufficient JVM memory and other causes.

Indexing Large Numbers of Documents

Indexing documents consumes Solr JVM memory. When the index is committed, parts of the memory are released, but some data remains in memory to support fast search. By default, Solr performs an automatic soft commit when 1,000,000 documents are indexed or 20 minutes (1,200,000 milliseconds) have passed. A soft commit pushes documents from memory to the index, freeing JVM memory, and makes the documents visible in searches. A soft commit does not, however, make the index updates durable; it is still necessary to commit the index with the gptext.commit() user-defined function.

You can configure an index to perform a more frequent automatic soft commit by editing the solrconfig.xml file for the index:

$ gptext-config edit -f solrconfig.xml -i <db>.<schema>.<index-name>

The <autoSoftCommit> element is a child of the <updateHandler> element. Edit the <maxDocs> and <maxTime> values to reduce the time between automatic soft commits. For example, the following settings perform an automatic soft commit every 100,000 documents or 10 minutes (600,000 milliseconds).

<autoSoftCommit>
  <maxDocs>100000</maxDocs>
  <maxTime>600000</maxTime>
</autoSoftCommit>
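Because <maxTime> is expressed in milliseconds, it is easy to mistype the value. The following Python sketch, which is not part of Tanzu Greenplum Text, renders the element from a document count and an interval in minutes. The function name auto_soft_commit_xml is hypothetical; paste the output into solrconfig.xml by hand.

```python
import xml.etree.ElementTree as ET


def auto_soft_commit_xml(max_docs, max_minutes):
    """Render an <autoSoftCommit> element; maxTime is written in milliseconds."""
    element = ET.Element("autoSoftCommit")
    ET.SubElement(element, "maxDocs").text = str(max_docs)
    ET.SubElement(element, "maxTime").text = str(int(max_minutes * 60 * 1000))
    return ET.tostring(element, encoding="unicode")


# Settings from the example above: 100,000 documents or 10 minutes.
print(auto_soft_commit_xml(100_000, 10))
```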

Indexing Very Large Documents

Indexing very large documents can use a large amount of JVM memory. To manage this, you can set the gptext.idx_buffer_size configuration parameter to reduce the size of the indexing buffer.

See Changing Tanzu Greenplum Text Server Configuration Parameters for instructions to change configuration parameter values.

Determining the Number of Tanzu Greenplum Text Nodes to Deploy

A Tanzu Greenplum Text node is a Solr instance managed by Tanzu Greenplum Text. The Solr nodes can be deployed on the Greenplum Database cluster hosts or on separate hosts accessible to the Greenplum Database cluster. The number of nodes is configured during Tanzu Greenplum Text installation.

The maximum recommended number of Solr nodes you can deploy is the number of Greenplum Database primary segments. However, the best practice recommendation is to deploy fewer nodes with more memory rather than to divide the memory available to Tanzu Greenplum Text among the maximum number of nodes. Use the JAVA_OPTS installation parameter to set memory size for the nodes.

A single Solr node per host can easily handle several indexes. Each additional node consumes additional CPU and memory resources, so it is desirable to limit the number of nodes per host. For most Tanzu Greenplum Text installations, a single Solr node per host is sufficient.

If the JVM has a very large amount of memory, however, garbage collection can cause long pauses while the JVM reorganizes memory. Also, the JVM employs a memory address optimization that cannot be used when JVM memory exceeds 32GB, so at more than 32GB, a Solr node loses capacity and performance. Therefore, no node should have more than 32GB of memory.
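The guidance above (prefer fewer nodes with more memory, but keep each node at or below 32GB) can be sketched as a small Python calculation. This is an illustration under stated assumptions, not a Tanzu Greenplum Text tool: the function plan_nodes and the sample memory budgets are hypothetical, and the printed -Xmx value is only a suggestion to place in JAVA_OPTS.

```python
import math

MAX_HEAP_GB = 32  # per-node ceiling recommended in the text above


def plan_nodes(solr_memory_gb, max_heap_gb=MAX_HEAP_GB):
    """Return (nodes, heap_gb) for one host, using the fewest nodes
    whose heap stays within the ceiling."""
    nodes = max(1, math.ceil(solr_memory_gb / max_heap_gb))
    heap_gb = math.floor(solr_memory_gb / nodes)
    return nodes, heap_gb


# Hypothetical per-host memory budgets for Solr, in GB.
for budget in (24, 48, 100):
    nodes, heap = plan_nodes(budget)
    print(f"{budget}GB for Solr: {nodes} node(s), each with -Xmx{heap}g")
```

A budget that fits under the ceiling yields a single node, which matches the recommendation that one Solr node per host is sufficient for most installations.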

Calculating the Memory Configuration for Solr Nodes

When you determine the number of Solr nodes to deploy and how much memory to allocate to them, it is useful to estimate how much memory Solr will need to create and query your indexes. This is important because if you do not allow enough memory, Solr nodes may crash with out of memory errors. This section helps to estimate the resources Solr will need on each host based on the number and characteristics of the documents you index and how many queries you plan to execute concurrently. When you know how much memory you need per host for your indexes, you can decide how many Solr nodes to deploy.

You can estimate the total memory required per host using the following formula.

TotalMem = TermDictIndexMem + DocMem + IndexBufMem + (PerQueryMem * ConcurrentQueries) + CacheMem

The terms in this formula are defined as follows:

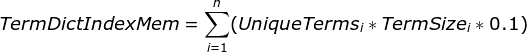

TermDictIndexMem Memory for the Solr term dictionary index.

- n - number of fields to index

- UniqueTermsi - estimated number of unique terms expected for field number i.

- TermSizei - average term length for field number i.

- 0.1 - assumed compression ratio for the term dictionary index.

DocMem Memory for each document's metadata.

DocNum * 1

- DocNum - total number of indexed documents. Each document has one byte of metadata.

IndexBufMem Memory for the replica index buffer.

100 * 1024 * 1024 * R

- R - Number of replicas on each host. (Up to 100MB for each replica's buffer.)

PerQueryMem Memory for each running query.

8 * 1024 * 1024 * Rows / 10000

- Rows - the rows parameter in the gptext.search() function. Use an estimate of 8MB for 10000 query results.

ConcurrentQueries Number of concurrent queries.

CacheMem Memory for the Solr cache.

256 * PostingLen * 8

- PostingLen - The average length of the posting list. The posting list records the locations of a term within a document. Terms that occur more frequently in a document have longer posting lists.
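The formula and its terms can be collected into one Python function. This is a minimal sketch for planning purposes only: the function name estimate_host_memory and the sample workload are hypothetical, and it assumes the inputs describe a single host's share of the terms, documents, and replicas.

```python
MB = 1024 * 1024  # bytes per megabyte, as used in the formulas above


def estimate_host_memory(fields, doc_num, replicas, rows, concurrent_queries,
                         posting_len=0, compression=0.1):
    """Return the formula's components, in bytes, plus their total.

    fields is a list of (unique_terms, avg_term_size_bytes) pairs,
    one pair per indexed field.
    """
    parts = {
        "TermDictIndexMem": sum(u * s for u, s in fields) * compression,
        "DocMem": doc_num * 1,
        "IndexBufMem": 100 * MB * replicas,
        "QueryMem": 8 * MB * rows / 10000 * concurrent_queries,
        "CacheMem": 256 * posting_len * 8,
    }
    parts["TotalMem"] = sum(parts.values())
    return parts


# Hypothetical workload for one host.
estimate = estimate_host_memory(fields=[(5_000_000, 10)], doc_num=2_000_000,
                                replicas=4, rows=10_000, concurrent_queries=5)
for name, size in estimate.items():
    print(f"{name}: {size / MB:.1f}MB")
```

QueryMem here is PerQueryMem multiplied by ConcurrentQueries, so the returned TotalMem matches the TotalMem formula term by term.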

Example

A 4-host cluster has 2 Tanzu Greenplum Text indexes.

- Index 1 has 10 million documents with 1 indexed field. The field has about 20 million unique terms with an average length of 20 bytes.

- Index 2 has 100 million documents with 1 indexed field. The field has about 150 million unique terms with an average length of 5 bytes.

- Each index has 64 replicas (there are 16 replicas on each host).

- Search queries limit rows to 250000:

gptext.search("index_name", "search_word", "filters", "rows=250000")

- Ten concurrent queries.

- No Solr cache.

The memory units in these calculations are bytes unless qualified with a unit specifier.

TermDictIndexMem =

(20000000 * 20 + 150000000 * 5) * 0.1 = 115000000

DocMem =

10000000 + 100000000 = 110000000

The initial memory used after Solr starts up is (115000000 + 110000000) bytes, or about 215MB, for the whole cluster. Divided across the 4 hosts, this is about 54MB on each host.

IndexBufMem =

100 * 1024 * 1024 * 16 * 2 = 3200MB

Each host holds 16 replicas for each of the 2 indexes, so R is 32. The maximum additional memory used during a Tanzu Greenplum Text index operation is 3200MB.

PerQueryMem =

8 * 1024 * 1024 * 250000 / 10000 = 200MB

ConcurrentQueries =

10

CacheMem =

0

The maximum additional memory used for Tanzu Greenplum Text searches is 200 * 10 = 2000MB. This memory is consumed on each Solr node.

The total memory needed per Solr node depends on how many nodes are deployed on each host.

Total Memory =

54MB + 3200MB + (2000MB * SolrNodeNum)

One Solr node deployed on each host needs (54MB + 3200MB + 2000MB * 1) = 5254MB, or about 5.3GB of memory per node.

Two Solr nodes on each host need (54MB + 3200MB + 2000MB * 2) / 2 = 3627MB, or about 3.6GB of memory per node.

Both of these plans could use a JVM size of 8GB or 16GB.
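The per-node arithmetic for the two plans can be checked with a short Python sketch. It takes the three per-host figures from the example as given; the function name per_node_mb and the candidate JVM sizes are used for illustration only.

```python
INITIAL_MB = 54       # term dictionary index and document metadata, per host
INDEX_BUF_MB = 3200   # index buffers for the 32 replicas on each host
SEARCH_MB = 2000      # ten concurrent queries, consumed on each Solr node


def per_node_mb(nodes_per_host):
    """Memory each Solr node needs when a host runs nodes_per_host nodes."""
    host_total = INITIAL_MB + INDEX_BUF_MB + SEARCH_MB * nodes_per_host
    return host_total / nodes_per_host


for nodes in (1, 2):
    need = per_node_mb(nodes)
    # Candidate JVM sizes from the text, compared in MB.
    fits = [gb for gb in (8, 16) if gb * 1024 >= need]
    print(f"{nodes} node(s) per host: {need:.0f}MB per node, fits JVM sizes {fits}GB")
```

Adding a second node lowers the per-node requirement but raises the host total, because the search memory is consumed on every node.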

Manage Indexing and Search Loads

With high indexing or search load, JVM garbage collection pauses can cause the Solr overseer queue to back up. For a heavily loaded Tanzu Greenplum Text system, you can prevent some performance problems by scheduling document indexing for times when search activity is low.

Terms Queries and Out of Memory Errors

The gptext.terms() function retrieves term vectors from documents that match a query. An out of memory error may occur if the documents are large, or if the query matches a large number of documents on each node. Other factors can contribute to out of memory errors when running a gptext.terms() query, including the maximum memory available to the Solr nodes (-Xmx value in JAVA_OPTS) and concurrent queries.

If you experience out of memory errors with gptext.terms(), you can set a lower value for the term_batch_size Tanzu Greenplum Text configuration variable. The default value is 1000. For example, you could try running the failing query with term_batch_size set to 500. Lowering the value may prevent out of memory errors, but performance of terms queries can be affected.

See Tanzu Greenplum Text Configuration Parameters for help setting Tanzu Greenplum Text configuration parameters.

ZooKeeper Best Practices

Good Tanzu Greenplum Text performance requires fast responses for ZooKeeper requests. Under heavy load, ZooKeeper can become slow or pause during JVM garbage collections. This can cause SolrCloud timeouts and degrade Tanzu Greenplum Text query performance, so ensuring good ZooKeeper performance is crucial for the Tanzu Greenplum Text cluster.

Suggestions for optimizing ZooKeeper performance:

- Install ZooKeeper on separate hosts. (The nodes can be small.)

- If you put ZooKeeper on the same hosts with Greenplum Database and Solr nodes, try to put ZooKeeper on less loaded hosts, perhaps the master and standby master hosts, or ETL hosts.

- Put the ZooKeeper data directory on disks separate from the Greenplum Database data disks.

- Use a ZooKeeper cluster of 3 or 5 nodes. Do not deploy more than 5 ZooKeeper nodes.

- ZooKeeper performs best when its database is cached so it does not have to go to disk for lookups. If you find that ZooKeeper JVMs have frequent disk accesses, look for ways to improve file caching or move ZooKeeper disks to faster storage.