This topic describes the workflow of the Greenplum Database upgrade process. Learn what each `gpupgrade` phase involves so that you can estimate how long the upgrade takes and identify the requirements your environment must meet for a successful upgrade.

Note: In this documentation, we refer to the existing Greenplum Database 5 installation as the source cluster and to the new Greenplum Database 6 installation as the target cluster. Both clusters reside on the same set of hosts.

High Level Workflow

- You upgrade the source cluster to the latest Greenplum Database 5.x version.

- You install the latest `gpupgrade` and Greenplum Database 6.x version for the target cluster.

- `gpupgrade` initializes a fresh target cluster on the same set of hosts as the source cluster.

- `gpupgrade` uses `pg_upgrade` to upgrade the data and catalog in place into the target cluster.

- The target cluster replaces the source cluster.

gpupgrade Workflow

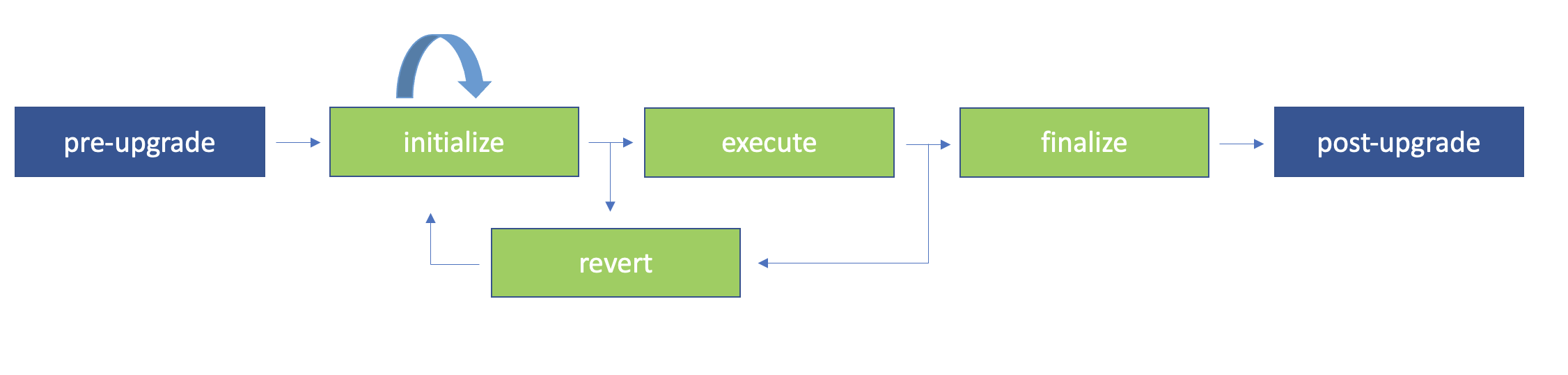

The following diagram shows the different phases of the Greenplum upgrade process flow:

Important: The upgrade process requires downtime. We refer to the downtime required to perform the upgrade as the upgrade window.

`gpupgrade` allows you to perform some of the steps that prepare your cluster for the upgrade before you enter the upgrade window. Read the documentation carefully to understand how the process works.

Pre-upgrade

- Perform the pre-upgrade preparatory actions a few weeks before the upgrade date.

- During this phase, you review the relevant documentation pages, upgrade the source cluster to the latest Greenplum 5.x version, and download and install the latest Greenplum 6.x version and the latest `gpupgrade` utility software.

- For detailed steps in this phase, see Perform the Pre-Upgrade Actions.
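Before the upgrade window, it can help to confirm which Greenplum versions are actually installed on the source and target sides. The sketch below is a hypothetical helper, not part of `gpupgrade`. It assumes that `postgres --gp-version` prints a line such as `postgres (Greenplum Database) 5.28.10 build ...` and that the first `N.N.N` token in that line is the Greenplum version. It only extracts and compares the major version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the major version found in a Greenplum
# version string such as
#   "postgres (Greenplum Database) 5.28.10 build commit:abc"
# Assumes the first token shaped like N.N.N is the Greenplum version.
gp_major_version() {
  local version_string="$1"
  local version
  # grep -o prints each match on its own line; head keeps the first one.
  version=$(printf '%s\n' "$version_string" | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)
  if [ -z "$version" ]; then
    return 1
  fi
  # Everything before the first dot is the major version.
  printf '%s\n' "${version%%.*}"
}

# Hypothetical check: succeed only if the version string reports the
# expected major version (5 for the source, 6 for the target).
check_major_version() {
  local expected="$1" version_string="$2"
  [ "$(gp_major_version "$version_string")" = "$expected" ]
}
```

For example, `check_major_version 6 "$(/usr/local/greenplum-db-6.24.0/bin/postgres --gp-version)"` assumes the install location used in the Example section. Adjust the path for your hosts.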

Initialize

- During this phase, the source cluster is still running.

- You run the command `gpupgrade initialize`, which generates scripts that collect statistics and check for catalog inconsistencies between the source and target clusters.

- Running the scripts requires downtime. You may schedule maintenance windows for this purpose before you continue with the upgrade during the upgrade window.

- You may cancel the process during this phase by reverting the upgrade.

- The `gpupgrade initialize` command starts the hub and agent processes, initializes the target cluster, and runs checks against the source cluster, including `pg_upgrade --check`.

- For information about this phase, see Initialize the Upgrade (gpupgrade initialize).
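`gpupgrade initialize` reads its settings from the configuration file passed with `--file`, as in the Example section. The sketch below writes a minimal file that contains only the three required parameters named in this topic: `source_gphome`, `target_gphome`, and `source_master_port`. It assumes the `key = value` layout of the example `gpupgrade_config` file. The function name `write_gpupgrade_config` and the sample paths and port are hypothetical. In practice, copying and editing the example file that ships with `gpupgrade` keeps its optional settings and comments.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a minimal gpupgrade configuration file.
# Assumes the "key = value" layout used by the example gpupgrade_config.
# Arguments: output file, source GPHOME, target GPHOME, source master port.
write_gpupgrade_config() {
  local out_file="$1" source_gphome="$2" target_gphome="$3" source_port="$4"
  # Create the parent directory (for example $HOME/gpupgrade) if needed.
  mkdir -p "$(dirname "$out_file")"
  cat > "$out_file" <<EOF
source_gphome = ${source_gphome}
target_gphome = ${target_gphome}
source_master_port = ${source_port}
EOF
}

# Example usage (paths and port are placeholders):
#   write_gpupgrade_config "$HOME/gpupgrade/gpupgrade_config" \
#     /usr/local/greenplum-db-5.28.10 /usr/local/greenplum-db-6.24.0 5432
#   gpupgrade initialize --verbose --file "$HOME/gpupgrade/gpupgrade_config"
```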

Execute

- You must perform the tasks in this phase within the upgrade window.

- You run the command `gpupgrade execute`, which stops the source cluster and upgrades the master and primary segments using `pg_upgrade`.

- You verify the target cluster before you choose to finalize or revert the upgrade.

- For information about this phase, see Run the Upgrade (gpupgrade execute).

Finalize

- You must perform the tasks in this phase within the upgrade window.

- You run the `gpupgrade finalize` command, which upgrades the segment mirrors and standby master.

- You cannot revert the upgrade once you enter this phase.

- For information about this phase, see Finalize the Upgrade (gpupgrade finalize).
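Because Finalize cannot be undone, some teams add an explicit confirmation step between verification and `gpupgrade finalize`. The sketch below is a hypothetical guard, not part of `gpupgrade`. It only calls `finalize --verbose`, as shown in the Example section. The `GPUPGRADE` variable is an added assumption that lets you dry-run the guard by setting it to `echo`.

```shell
#!/usr/bin/env bash
# Hypothetical guard: run "gpupgrade finalize" only after explicit confirmation,
# because the upgrade cannot be reverted once finalize starts.
# GPUPGRADE defaults to the real binary; set GPUPGRADE=echo for a dry run.
confirm_finalize() {
  local gpupgrade_cmd="${GPUPGRADE:-gpupgrade}"
  local answer
  printf 'Target cluster verified? Type "finalize" to continue: ' >&2
  # Read one line from standard input; treat end of input as "no".
  read -r answer || answer=""
  if [ "$answer" != "finalize" ]; then
    echo "Finalize skipped; you can still run gpupgrade revert." >&2
    return 1
  fi
  "$gpupgrade_cmd" finalize --verbose
}
```

Any answer other than the exact word `finalize` leaves the cluster in the post-Execute state, where reverting is still possible.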

Post-upgrade

- After the upgrade completes, you edit configuration and user-defined files and perform other cleanup tasks.

- For information about this phase, see Perform the Post-Upgrade Actions.

Revert

- You must perform the tasks in this phase within the upgrade window.

- You run the command `gpupgrade revert`, which restores the cluster to the state it was in before you ran `gpupgrade`.

- You may run `gpupgrade revert` after the Initialize or Execute phases, but not after Finalize.

- The revert speed depends on when you run the command and on the upgrade mode you chose.

- If you use `link` mode and the source cluster has no standby master and segment mirrors, you cannot revert after the Execute phase.

- For information about this phase, see Reverting the Upgrade (gpupgrade revert).
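The conditions under which `gpupgrade revert` is still possible can be summarized as a small decision function. This is a hypothetical helper that encodes only the rules stated in this topic. Revert works after Initialize or Execute and never after Finalize. It also does not work after Execute in `link` mode when the source cluster has no standby master and segment mirrors. The helper does not query `gpupgrade` for the real cluster state.

```shell
#!/usr/bin/env bash
# Hypothetical helper encoding the revert rules described in this topic.
# Arguments:
#   $1 last completed phase: initialize | execute | finalize
#   $2 upgrade mode: copy | link
#   $3 "yes" if the source cluster has a standby master and segment mirrors
# Prints "yes" or "no"; returns 2 for an unknown phase.
can_revert() {
  local phase="$1" mode="$2" has_mirrors="$3"
  case "$phase" in
    initialize)
      echo yes ;;
    execute)
      # link mode without a standby master and mirrors cannot be reverted.
      if [ "$mode" = "link" ] && [ "$has_mirrors" != "yes" ]; then
        echo no
      else
        echo yes
      fi ;;
    finalize)
      echo no ;;
    *)
      echo "unknown phase: $phase" >&2
      return 2 ;;
  esac
}
```

For example, `can_revert execute link no` prints `no`, matching the `link` mode restriction above.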

Example

The following example illustrates how to use the `gpupgrade` utility in an environment with minimal setup. It assumes that the source cluster runs Greenplum 5.28.10 and has no extensions installed. The commands read host names from an `all_hosts` file that lists every host in the cluster.

- Install the latest target Greenplum version on all hosts:

  ```shell
  gpscp -f all_hosts greenplum-db-6.24.0*.rpm =:/tmp
  gpssh -f all_hosts -v -e 'sudo yum install -y /tmp/greenplum-db-6.24.0*.rpm'
  ```

- Install `gpupgrade` on all hosts:

  ```shell
  gpscp -f all_hosts gpupgrade*.rpm =:/tmp
  gpssh -f all_hosts -v -e 'sudo yum install -y /tmp/gpupgrade*.rpm'
  ```

- Create the `$HOME/gpupgrade/` directory, copy the example config file into it, and then update the required parameters `source_gphome`, `target_gphome`, and `source_master_port`:

  ```shell
  mkdir -p $HOME/gpupgrade
  cp /usr/local/bin/greenplum/gpupgrade/gpupgrade_config $HOME/gpupgrade/
  ```

- Run `gpupgrade initialize`:

  ```shell
  gpupgrade initialize --verbose --file $HOME/gpupgrade/gpupgrade_config
  ```

- Run `gpupgrade execute`:

  ```shell
  gpupgrade execute --verbose
  ```

- Run `gpupgrade finalize`:

  ```shell
  gpupgrade finalize --verbose
  ```

- Update the Greenplum symlink on all hosts so that it points to the new installation:

  ```shell
  gpssh -f all_hosts -v -e 'sudo rm /usr/local/greenplum-db && sudo ln -s /usr/local/greenplum-db-6.24.0 /usr/local/greenplum-db'
  ```

- In a new shell, start the target cluster:

  ```shell
  source /usr/local/greenplum-db-6.24.0/greenplum_path.sh
  gpstart -a
  ```
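The symlink step above runs `rm` followed by `ln -s` on every host. On a single host, the same switch can be sketched with `ln -sfn`, which replaces an existing symbolic link in one command. The function name `switch_greenplum_link` is hypothetical. The sketch is written so that you can try it against temporary directories before you run it against `/usr/local/greenplum-db`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the Greenplum symlink at a new installation.
# Arguments: symlink path (e.g. /usr/local/greenplum-db), new install directory.
switch_greenplum_link() {
  local link_path="$1" new_home="$2"
  # Refuse to continue if the new installation directory is missing.
  if [ ! -d "$new_home" ]; then
    echo "not a directory: $new_home" >&2
    return 1
  fi
  # Refuse to replace a real file or directory; only swap a symlink.
  if [ -e "$link_path" ] && [ ! -L "$link_path" ]; then
    echo "not a symlink: $link_path" >&2
    return 1
  fi
  # -s: symbolic link; -f: replace an existing link;
  # -n: do not follow an existing link to a directory (otherwise ln would
  #     create the new link inside the old installation directory).
  ln -sfn "$new_home" "$link_path"
}
```

Writing to `/usr/local` needs elevated privileges. The equivalent plain command for each host is `sudo ln -sfn /usr/local/greenplum-db-6.24.0 /usr/local/greenplum-db`.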