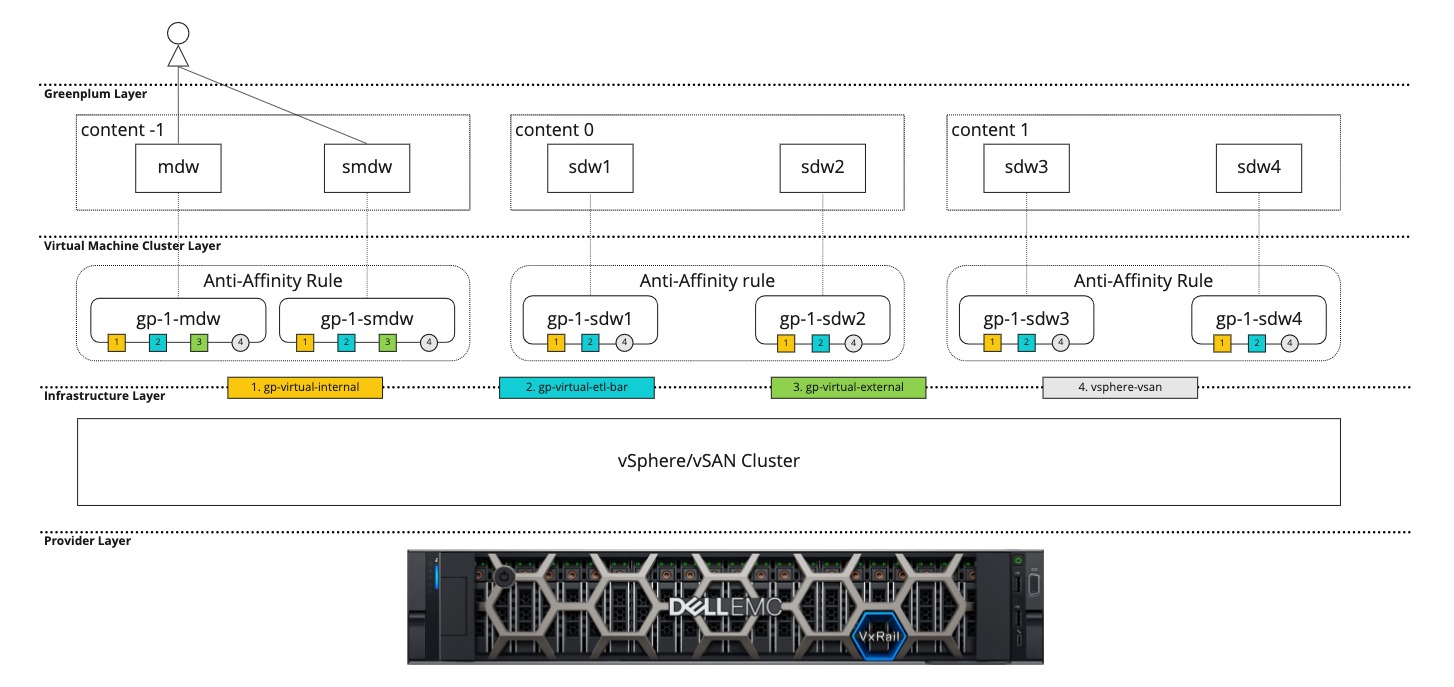

Layered Architecture

As shown in the architecture diagram, VMware Greenplum on vSphere is made up of four abstraction layers, from the underlying hardware up to the Greenplum Database software. Although this topic assumes a Dell EMC VxRail reference architecture (see Dell EMC VxRail Reference Architecture), the layers above the infrastructure layer apply conceptually to other VMware vSphere environments, provided that their provider and infrastructure layers offer similar or better resources.

Provider Layer

This layer represents the resource provider, which can be based on physical hardware or a cloud provider. This layer includes the physical hosts running VMware ESXi software that form the basis of a vSphere and vSAN cluster. For this reference architecture, the resource provider is Dell EMC VxRail.

Infrastructure Layer

The infrastructure layer defines the networks and the data storage used by the virtual machines in the layer above. In a VMware vSphere cluster environment managed by vCenter, the networks are defined as distributed port groups. The Greenplum cluster uses three networks defined in this layer: gp-virtual-external, gp-virtual-internal, and gp-virtual-etl-bar.

For this reference architecture, the underlying data storage is a vSAN cluster. An additional network, vsphere-vsan, is defined for vSAN cluster communication.

Virtual Machine Cluster Layer

A Greenplum cluster uses two types of virtual machines:

- Virtual Machines for Coordinator Hosts are used to run the Greenplum coordinator instances.

- Virtual Machines for Segment Hosts are used to run Greenplum segment instances.

The vSAN cluster provides reliable storage for all coordinator and segment host virtual machines, which store their Greenplum data files on the data storage mounted at /gpdata.

The coordinator virtual machines are connected to the gp-virtual-internal network, which carries mirroring and interconnect traffic as well as management operations run through Greenplum utilities such as gpstart and gpstop. They are also connected to the gp-virtual-etl-bar network to support Extract, Transform, Load (ETL) and Backup and Restore (BAR) operations. In addition, the coordinator virtual machines are connected to the gp-virtual-external network, which routes external traffic into the Greenplum cluster.

The virtual machines for segment hosts are connected to the gp-virtual-internal network, which is used to handle mirroring and interconnect traffic. They are also connected to the gp-virtual-etl-bar network for Extract, Transform, Load (ETL) and Backup and Restore (BAR) operations.
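The network assignments above can be captured in a small data model, for example to sanity-check a virtual machine's port group attachments before deployment. This is a minimal Python sketch under stated assumptions: the role names and the check_vm helper are hypothetical, and the code does not call any vSphere or vCenter API; it only compares sets of the network names used in this topic.

```python
# Hypothetical model of the Greenplum VM network plan described in this topic.
# It compares sets of port group names only; it does not call vSphere/vCenter.

# Distributed port groups each Greenplum VM role should attach to.
# vsphere-vsan is omitted: it is used for vSAN cluster communication,
# not for Greenplum traffic.
VM_NETWORKS: dict[str, set[str]] = {
    "coordinator": {"gp-virtual-internal", "gp-virtual-etl-bar", "gp-virtual-external"},
    "segment": {"gp-virtual-internal", "gp-virtual-etl-bar"},
}


def check_vm(role: str, attached: set[str]) -> list[str]:
    """List missing or unexpected networks for a VM of the given role."""
    expected = VM_NETWORKS[role]
    problems = [f"missing {net}" for net in sorted(expected - attached)]
    problems += [f"unexpected {net}" for net in sorted(attached - expected)]
    return problems


# A segment VM wired to the external network instead of the ETL/BAR network:
print(check_vm("segment", {"gp-virtual-internal", "gp-virtual-external"}))
# ['missing gp-virtual-etl-bar', 'unexpected gp-virtual-external']
```

An empty list means the virtual machine matches the plan for its role.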

For more information on the different networks and their configuration, see Setting Up VMware vSphere Network.

Greenplum Layer

This layer is what a Greenplum Database administrator normally interacts with. The Greenplum node names follow the traditional Greenplum node naming convention:

- cdw for the Greenplum coordinator.

- scdw for the Greenplum standby coordinator.

- sdw* for the Greenplum segments, both primaries and mirrors.
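The naming convention can be expressed as a short helper, for example when scripting virtual machine provisioning. This is a minimal Python sketch; the function name is hypothetical, and it assumes the sdw* pattern is numbered sdw1, sdw2, and so on, starting from 1.

```python
def greenplum_hostnames(segment_hosts: int, standby: bool = False) -> list[str]:
    """Return node names in the cdw / scdw / sdw<N> convention.

    Assumption: segment hosts are numbered sdw1, sdw2, ... starting at 1.
    """
    if segment_hosts < 1:
        raise ValueError("a cluster needs at least one segment host")
    names = ["cdw"]  # coordinator
    if standby:
        names.append("scdw")  # standby coordinator, only when one is configured
    names += [f"sdw{i}" for i in range(1, segment_hosts + 1)]  # segment hosts
    return names


print(greenplum_hostnames(3, standby=True))
# ['cdw', 'scdw', 'sdw1', 'sdw2', 'sdw3']
```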

Unlike Greenplum clusters deployed on bare-metal infrastructure, where many segment instances run on a single segment host, VMware Greenplum on vSphere runs fewer segment instances per host. We recommend 1 to 4 segment instances per segment host virtual machine.
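To size the segment tier, divide the total number of segment instances by the number of instances per virtual machine and round up. This is a minimal Python sketch; the helper name is hypothetical, and the 1 to 4 bound simply encodes the recommendation in this topic.

```python
def segment_host_vms(segment_instances: int, per_vm: int) -> int:
    """Number of segment host VMs needed, rounding up.

    per_vm is limited to the recommended 1-4 instances per VM.
    """
    if not 1 <= per_vm <= 4:
        raise ValueError("recommended range is 1-4 segment instances per VM")
    if segment_instances < 1:
        raise ValueError("need at least one segment instance")
    return (segment_instances + per_vm - 1) // per_vm  # integer ceiling


print(segment_host_vms(16, 4))  # 4
print(segment_host_vms(10, 4))  # 3 (the last VM would hold only 2)
```

When the division is uneven, as in the second call, it is usually simpler to choose a total that divides evenly so that every segment host VM runs the same number of instances.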

High Availability

This design uses the VMware vSphere HA and DRS features to provide high availability at the virtual machine cluster layer, so that VMware vSphere can ensure high availability at the application level. Benefits of this configuration include:

- Centralized storage.

- Dynamically balanced load based on the current state of the cluster.

- Virtual machines can be moved among ESXi hosts without affecting Greenplum high availability.

- Simplified mirroring placement.

- Better elasticity as the number of ESXi hosts grows, because DRS can move virtual machines individually across hosts to rebalance the load.

We also recommend configuring your vSAN storage policy with RAID to provide data fault tolerance.
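A RAID-based storage policy trades raw capacity for fault tolerance. As a rough sizing aid, the following minimal Python sketch shows that arithmetic for mirroring and for erasure coding with a given number of data and parity components. The function names are hypothetical, and the sketch ignores vSAN slack space, metadata, deduplication, and compression, so treat its results as approximations only.

```python
def raw_capacity_mirror(usable_tb: float, failures_to_tolerate: int) -> float:
    """RAID-1 mirroring keeps failures_to_tolerate + 1 full copies of the data."""
    return usable_tb * (failures_to_tolerate + 1)


def raw_capacity_erasure(usable_tb: float, data: int, parity: int) -> float:
    """Erasure coding (RAID-5/6 style) adds parity components to each data stripe."""
    return usable_tb * (data + parity) / data


print(raw_capacity_mirror(12, 1))      # 24
print(raw_capacity_erasure(12, 3, 1))  # 16.0, e.g. 3 data + 1 parity components
```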

VMware Greenplum also provides high availability through segment and coordinator mirroring. Because VMware vSphere and vSAN already provide built-in protection, Greenplum mirroring is less necessary, so we recommend running VMware Greenplum on vSphere in a mirrorless configuration for better cost efficiency. If you need additional high availability, you can configure Greenplum with segment mirroring and a standby coordinator. Note that this uses additional compute and storage resources in your cluster.
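The extra resource cost of the mirrored configuration can be made concrete by counting Greenplum instances. This is a minimal Python sketch; the helper name is hypothetical, and it only counts instances, assuming one mirror per primary segment plus a single standby coordinator.

```python
def instance_count(primary_segments: int, mirrored: bool) -> dict[str, int]:
    """Count Greenplum instances for a mirrorless or mirrored layout.

    A mirrored layout adds one mirror per primary segment plus a standby coordinator.
    """
    return {
        "coordinator": 1,
        "standby_coordinator": 1 if mirrored else 0,
        "primary_segments": primary_segments,
        "mirror_segments": primary_segments if mirrored else 0,
    }


for mirrored in (False, True):
    counts = instance_count(16, mirrored)
    print(mirrored, sum(counts.values()))
# False 17
# True 34
```

With 16 primaries, mirroring roughly doubles the number of instances that need compute and storage.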

When running in a mirrored configuration, Greenplum primary and mirror segments are mapped to virtual machines using anti-affinity rules, so that the virtual machines serving the same content ID are never placed on the same ESXi host. This architecture further protects Greenplum availability against the failure of a single ESXi host.
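The placement property that the anti-affinity rules enforce can be checked offline against a snapshot of where each virtual machine runs. This is a minimal Python sketch: the function name, VM names, and ESXi host names are hypothetical, and the code only verifies a placement; the enforcement itself is done by the vSphere anti-affinity rules.

```python
def anti_affinity_violations(
    pairs: dict[int, tuple[str, str]],
    vm_host: dict[str, str],
) -> list[int]:
    """Return content IDs whose primary and mirror VMs share an ESXi host.

    pairs maps a content ID to (primary VM, mirror VM); vm_host maps each
    VM name to the ESXi host it currently runs on.
    """
    return sorted(
        content_id
        for content_id, (primary_vm, mirror_vm) in pairs.items()
        if vm_host[primary_vm] == vm_host[mirror_vm]
    )


# Hypothetical placement: sdw2 and sdw3 ended up on the same ESXi host.
pairs = {0: ("sdw1", "sdw2"), 1: ("sdw2", "sdw3")}
vm_host = {"sdw1": "esxi-a", "sdw2": "esxi-b", "sdw3": "esxi-b"}
print(anti_affinity_violations(pairs, vm_host))  # [1]
```

A non-empty result identifies content IDs that a single ESXi host failure would take down entirely.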