Checklist B is written for HCX deployments that target VMware Cloud on AWS, where HCX is installed automatically when the service is enabled. (In private cloud HCX deployments, the user handles the full HCX deployment and configuration for the destination environment.)

This document uses an on-premises environment as the source and a VMware Cloud on AWS SDDC as the destination. All the checklist tables assume the following:

The on-premises vSphere environment contains the existing workloads and networks to be migrated. This environment can run a legacy or a modern vSphere version.

The destination environment is a VMware Cloud on AWS SDDC instance.

HCX Use Cases and POC Success Criteria

As the following table describes, understanding a few key concepts helps you get started and deploy HCX successfully.

| Key Concepts | Examples |
|---|---|
| ▢ Plan the success criteria for the HCX proof of concept in your environment. | Clearly define the success criteria. For example: |
| ▢ Ensure that the required features are available with the trial or full licenses you obtained. | See VMware HCX System Services. |

Collect vSphere Environment Details

This section identifies vSphere-related information about both environments that is relevant to HCX deployments.

| Environment Detail | On-premises Environment | VMware Cloud on AWS SDDC |
|---|---|---|
| ▢ vSphere Version: | | |
| ▢ Distributed Switches and Connected Clusters | | |
| ▢ ESXi Cluster Networks | | |
| ▢ NSX version and configurations: | | |
| ▢ Review and ensure all Software Version Requirements are satisfied. | | |
| ▢ vCenter Server URL: | | |
| ▢ Administrative accounts | | |
| ▢ NSX Manager URL: | | |
| ▢ NSX admin or equivalent account. | | |
| ▢ Destination vCenter SSO URL: | | |
| ▢ DNS Server: | | |
| ▢ NTP Server: | | |
| ▢ HTTP Proxy Server: | | |
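Because most cells in this table are filled in by hand, it can help to record each column as data and sanity-check it before deployment. The following Python sketch is one hypothetical way to do that: the dictionary keys (`vcenter_url`, `dns_server`, and so on) are illustrative names chosen here, not HCX fields, and the checks only confirm that the URLs use `https://` and that the DNS and NTP entries are present and look like an IP address or a host name.

```python
import ipaddress
from urllib.parse import urlparse


def validate_environment(details: dict) -> list:
    """Return the problems found in one column of the environment checklist.

    The keys are hypothetical names for the checklist rows, not HCX settings.
    """
    problems = []
    # URL rows: require an https:// URL with a host component.
    for key in ("vcenter_url", "nsx_manager_url", "sso_url"):
        value = details.get(key, "").strip()
        parsed = urlparse(value)
        if parsed.scheme != "https" or not parsed.hostname:
            problems.append(f"{key}: expected an https:// URL, got {value!r}")
    # Server rows: accept an IP address or a dotted host name.
    for key in ("dns_server", "ntp_server"):
        value = details.get(key, "").strip()
        if not value:
            problems.append(f"{key}: missing")
            continue
        try:
            ipaddress.ip_address(value)
        except ValueError:
            # Not an IP literal; reject only host names with empty labels (e.g. "a..b").
            if any(not label for label in value.split(".")):
                problems.append(f"{key}: {value!r} is neither an IP address nor a host name")
    return problems
```

Running the function once per column (on-premises and SDDC) gives a quick list of rows that are still blank or malformed.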

Planning for the HCX Manager Deployment

This section identifies information for deploying the HCX Manager system on-premises. The HCX Manager at the VMware Cloud on AWS SDDC is deployed automatically when the service is enabled.

| | Source HCX Manager (type: Connector) | Destination HCX Manager (type: Cloud) |
|---|---|---|
| ▢ HCX Manager Placement/Zoning: | | |
| ▢ HCX Manager Installer OVA: | | |
| ▢ HCX Manager Hostname / FQDN: | | |
| ▢ HCX Manager Internal IP Address: | | |
| ▢ HCX Manager External Name / Public IP Address: | | |
| ▢ HCX Manager admin / root password: | | |
| ▢ Verify external access for the HCX Manager: | | |
| ▢ HCX Activation / Licensing: | | |
| Proxy requirements | If a proxy server is configured, all HTTPS connections are sent to the proxy. An exclusion configuration is mandatory to allow HCX Manager to connect to local systems. The exclusions can be entered as supernets and wildcard domain names. The configuration can encompass these settings. For example: `10.0.0.0/8, *.internal_domain.com` | Not applicable. |
| Configuration and Service limits | Review the HCX configuration and operational limits: VMware Configuration Maximums. | Review the HCX configuration and operational limits: VMware Configuration Maximums. |
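The proxy exclusion list above mixes supernets and wildcard domain names. The following sketch shows, under stated assumptions, how such a list can be evaluated: an IP literal bypasses the proxy when it falls inside a listed network, and a host name bypasses it when it matches a shell-style wildcard (Python's `fnmatch`), compared case-insensitively. It illustrates the concept only and is not how HCX Manager implements exclusion matching.

```python
import ipaddress
from fnmatch import fnmatch


def parse_exclusions(config: str):
    """Split a comma-separated exclusion string into networks and domain patterns."""
    networks, patterns = [], []
    for entry in (e.strip() for e in config.split(",")):
        if not entry:
            continue
        try:
            # strict=False tolerates host bits, e.g. "10.1.2.3/8".
            networks.append(ipaddress.ip_network(entry, strict=False))
        except ValueError:
            # Anything that is not a network is treated as a wildcard domain pattern.
            patterns.append(entry.lower())
    return networks, patterns


def bypasses_proxy(target: str, config: str) -> bool:
    """Return True if a connection to `target` should go direct instead of via the proxy."""
    networks, patterns = parse_exclusions(config)
    try:
        addr = ipaddress.ip_address(target)
    except ValueError:
        # Not an IP literal: compare the host name against the wildcard patterns.
        return any(fnmatch(target.lower(), p) for p in patterns)
    # IP literal: check membership in each excluded supernet.
    return any(addr in net for net in networks)
```

With the example exclusions `10.0.0.0/8, *.internal_domain.com`, a local vCenter Server at `vcenter.internal_domain.com` or `10.20.30.40` is reached directly, while other destinations go through the proxy.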

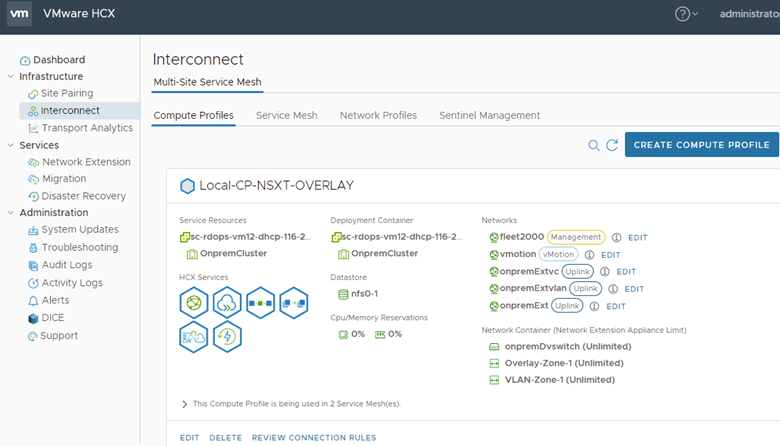
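For the "Verify external access for the HCX Manager" row, a basic TCP reachability test from a machine on the same network segment can catch firewall problems early. The following sketch uses only the Python standard library; port 443 is assumed as the default because HCX Manager traffic uses HTTPS. A successful connection shows only that the port is open, not that TLS, the proxy path, or the HCX service itself is working, and a direct test does not exercise the proxy when one is configured.

```python
import socket


def can_reach(host: str, port: int = 443, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the name and tries each returned address.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `can_reach("hcx-manager.example.com")` (a placeholder host name) returns `True` only if a TCP handshake to port 443 completes within five seconds.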

Planning the Compute Profile Configuration

A Compute Profile contains the catalog of HCX services and allows in-scope infrastructure to be planned and selected prior to deploying the Service Mesh. The Compute Profile describes how HCX deploys services and service appliances when a Service Mesh is created.

A Compute Profile is required in the on-premises HCX Connector.

A Compute Profile is pre-created in the VMware Cloud on AWS SDDC as part of enabling the HCX Add-on.

Review the table for a checklist of Compute Profile configuration items. For each row, the table describes how that information is used in the source and the destination Compute Profile.

| | On-premises Compute Profile | SDDC Compute Profile |
|---|---|---|
| ▢ Compute Profile Name | | |
| ▢ Services to activate | | |
| ▢ Service Resources (Data Center or Cluster) | | |
| ▢ Deployment Resources (Cluster or Resource Pool) | | |
| ▢ Deployment Resources (Datastore) | | |
| ▢ Distributed Switches or NSX Transport Zone for Network Extension | | |
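To make the Compute Profile rows concrete, the following sketch models one column of the table as a Python dataclass and reports which items are still unplanned. The class and field names are hypothetical and do not correspond to any HCX API. The sketch also encodes one assumption worth confirming against the HCX documentation: when the Network Extension service is activated, at least one distributed switch or NSX transport zone must be selected for it.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeProfilePlan:
    """Hypothetical planning record for one Compute Profile (not an HCX API object)."""

    name: str
    services: set = field(default_factory=set)
    # Data centers or clusters whose VMs are in scope for HCX services.
    service_resources: list = field(default_factory=list)
    # Cluster or resource pool where HCX service appliances are deployed.
    deployment_cluster: str = ""
    deployment_datastore: str = ""
    # Distributed switches or NSX transport zones used by Network Extension.
    extension_switches: list = field(default_factory=list)

    def missing_items(self) -> list:
        """Return the checklist items that still need a value, in table order."""
        missing = []
        if not self.services:
            missing.append("services")
        if not self.service_resources:
            missing.append("service_resources")
        if not self.deployment_cluster:
            missing.append("deployment_cluster")
        if not self.deployment_datastore:
            missing.append("deployment_datastore")
        # Assumption: Network Extension needs at least one switch or transport zone.
        if "Network Extension" in self.services and not self.extension_switches:
            missing.append("extension_switches")
        return missing
```

One plan object per column (on-premises and SDDC) mirrors the two columns of the table; because the SDDC Compute Profile is pre-created, its object mainly serves as a record of what was reviewed.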

Planning the Network Profile Configurations

A Network Profile contains information about the underlying networks and allows networks and IP addresses to be pre-allocated before a Service Mesh is created. Review and understand the information in Network Profile Considerations and Concepts before creating Network Profiles for HCX.

| Network Profile Type | On-premises Details | VMware Cloud on AWS SDDC Details |
|---|---|---|
| ▢ HCX Uplink | | |
| ▢ HCX Management | | |
| ▢ HCX vMotion | | |
| ▢ HCX Replication | | |
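Each Network Profile reserves a pool of addresses that HCX appliances draw from when a Service Mesh is created. The following sketch checks one pool against its gateway and prefix length using Python's standard `ipaddress` module. The number of addresses required (`needed`) depends on how many appliances the Service Mesh will deploy on that network and is an input you supply; the function and its checks are illustrative, not an HCX validation routine.

```python
import ipaddress


def check_ip_pool(gateway: str, prefix_length: int, first: str, last: str, needed: int) -> list:
    """Return problems with an IP pool [first, last] on the gateway's subnet."""
    gw = ipaddress.ip_address(gateway)
    # Derive the subnet from the gateway and prefix length (host bits allowed).
    subnet = ipaddress.ip_network(f"{gateway}/{prefix_length}", strict=False)
    start, end = ipaddress.ip_address(first), ipaddress.ip_address(last)
    problems = []
    if start not in subnet or end not in subnet:
        problems.append("range is outside the subnet")
    if start > end:
        problems.append("range start is after range end")
    if start <= gw <= end:
        problems.append("gateway falls inside the pool")
    # Inclusive range size; zero if the range is reversed.
    size = max(0, int(end) - int(start) + 1)
    if size < needed:
        problems.append(f"pool has {size} addresses, {needed} needed")
    return problems
```

An empty result means the pool is internally consistent; it does not confirm that the addresses are unused on the network.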

Service Mesh Planning Diagram

The illustration summarizes HCX service mesh component planning.

Site to Site Connectivity

▢ Bandwidth for Migrations |

|

▢ Public IPs & NAT |

|

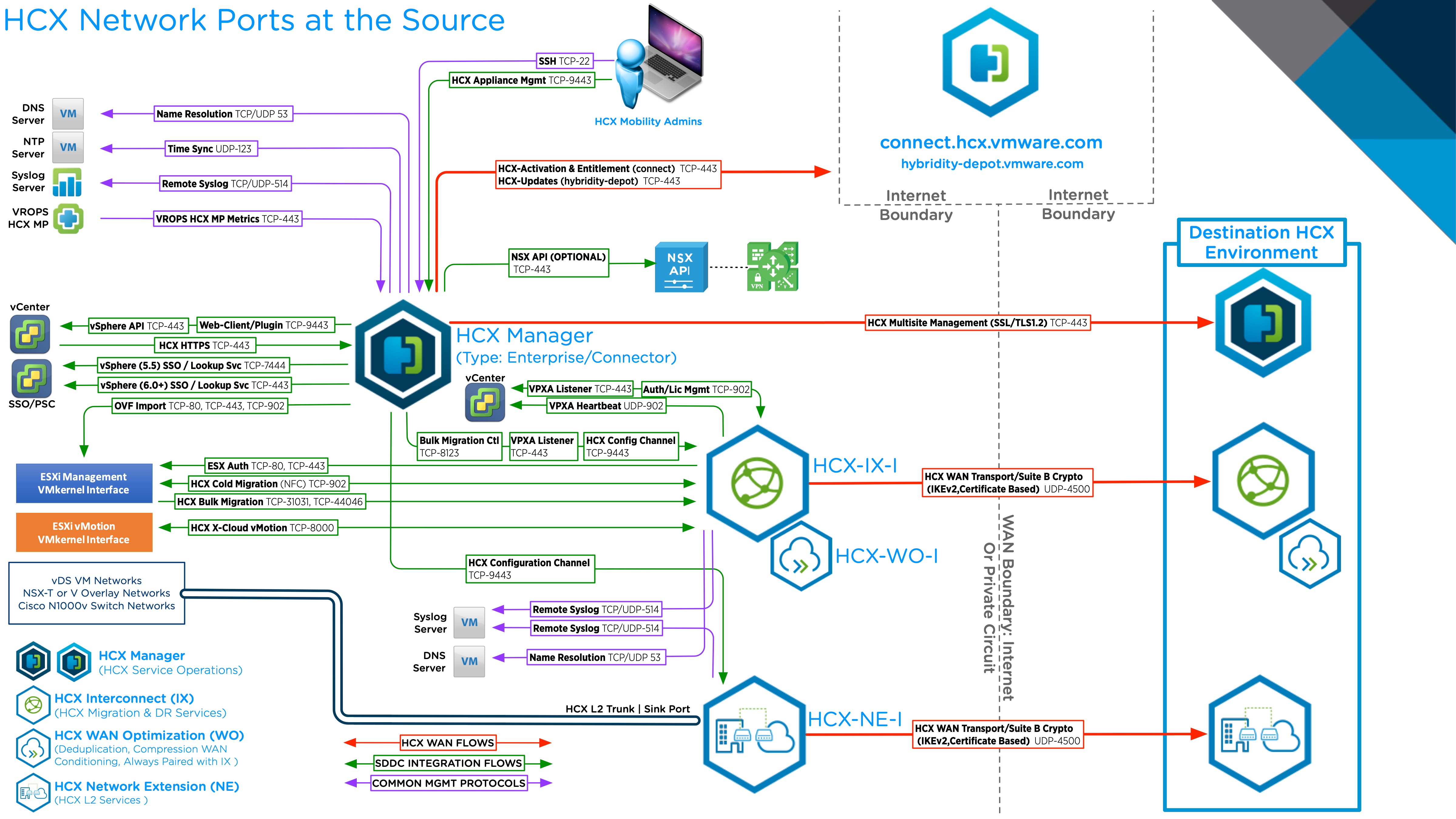

▢ Source HCX to Destination HCX Network Ports |

|

▢ Other HCX Network Ports |

|
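A rough transfer-time estimate helps when filling in the "Bandwidth for Migrations" row. The following sketch converts a data volume in GiB and a link speed in Mbit/s into hours. The `efficiency` factor, the fraction of the link assumed to be available to migration traffic, defaults to an illustrative 0.7 and is not an HCX figure; actual throughput also depends on the migration type, WAN optimization, and competing traffic.

```python
def transfer_hours(data_gib: float, link_mbps: float, efficiency: float = 0.7) -> float:
    """Estimate hours to move `data_gib` GiB over a `link_mbps` Mbit/s link.

    `efficiency` is an assumed fraction of the link usable by migration traffic.
    """
    if link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_mbps must be positive and efficiency must be in (0, 1]")
    bits = data_gib * 1024**3 * 8  # GiB -> bytes -> bits
    seconds = bits / (link_mbps * 1_000_000 * efficiency)  # Mbit/s -> bit/s
    return seconds / 3600
```

Under these assumptions, moving 1,024 GiB over a 1,000 Mbit/s link takes roughly 3.5 hours.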

HCX Network Ports On-Premises