The Compute Profile is a sub-component of the Service Mesh. The Compute Profile describes which HCX services run, and how they are deployed when the Service Mesh is created.

Introduction to Compute Profiles

A Compute Profile configuration is required for Service Mesh deployments. It defines the deployment parameters and the services that are allowed. For configuration procedures, see Creating a Compute Profile. A Compute Profile consists of the following elements:

- Services

  The HCX services that are activated when a Service Mesh is created (only licensed services can be activated).

- Service Clusters

  At the HCX source, the Service Clusters contain the virtual machines for migration. For Network Extension, only Distributed Switches connected to the selected Service Clusters are displayed. A Datacenter container can be used to automatically include the clusters within it. The Compute Profile is adjusted automatically when clusters are added to or removed from the Datacenter container.

  At the HCX destination, the Service Clusters can be used as the target for migrations.

- Deployment Clusters

  The Cluster (or Resource Pool) and Datastore that host the Service Mesh appliances.

  For migrations to be successful, the Deployment Cluster must be connected such that the Service Cluster vMotion and Management/Replication VMkernel networks are reachable from the HCX-IX appliance.

  For Network Extension to be successful, the Deployment Cluster must be connected to a Distributed Switch that has full access to the VM network broadcast domains.

- Management Network Profile

  The Network Profile that HCX uses for management connections.

- Uplink Network Profile

  The Network Profile that HCX uses for HCX to HCX traffic.

- vMotion Network Profile

  The Network Profile that HCX uses for vMotion-based connections with the ESXi cluster.

- Replication Network Profile

  The Network Profile that HCX uses for Replication-based connections with the ESXi cluster.

- Distributed Switches for Network Extension

  The Distributed Switches containing the virtual machine networks for extension.

- Guest Network Profile for OSAM

  The Network Profile that HCX uses to receive connections from the Sentinel agents.
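To make the relationship between these elements easier to see, the following is a minimal sketch of a Compute Profile as a plain data structure. It is an illustration only: the class, the field names, and the example values (such as `cp-site-a` and `np-uplink`) are assumptions made for this sketch and do not correspond to HCX API objects or names.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ComputeProfile:
    """Illustrative model only; field names are assumptions, not HCX API names."""

    name: str
    services: List[str]                    # HCX services to activate (licensed only)
    service_clusters: List[str]            # clusters, or a Datacenter container
    deployment_cluster: str                # cluster or resource pool for the appliances
    deployment_datastore: str              # datastore for the appliances
    management_network_profile: str
    uplink_network_profile: str
    vmotion_network_profile: str
    replication_network_profile: str
    network_extension_switches: List[str]  # Distributed Switches with networks to extend
    guest_network_profile: str             # receives Sentinel agent connections (OSAM)

    def network_profiles(self) -> Dict[str, str]:
        """Map each traffic role to the Network Profile that serves it."""
        return {
            "management": self.management_network_profile,
            "uplink": self.uplink_network_profile,
            "vmotion": self.vmotion_network_profile,
            "replication": self.replication_network_profile,
            "guest": self.guest_network_profile,
        }


# Hypothetical example values, chosen only for illustration.
example_cp = ComputeProfile(
    name="cp-site-a",
    services=["interconnect", "network-extension"],
    service_clusters=["Cluster-1", "Cluster-2"],
    deployment_cluster="Cluster-1",
    deployment_datastore="ds-shared",
    management_network_profile="np-mgmt",
    uplink_network_profile="np-uplink",
    vmotion_network_profile="np-vmotion",
    replication_network_profile="np-mgmt",
    network_extension_switches=["dvs-workload"],
    guest_network_profile="np-guest",
)
```

In this sketch the same Network Profile (`np-mgmt`) serves both the management and replication roles, which mirrors the Management/Replication pairing mentioned for the Deployment Cluster.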

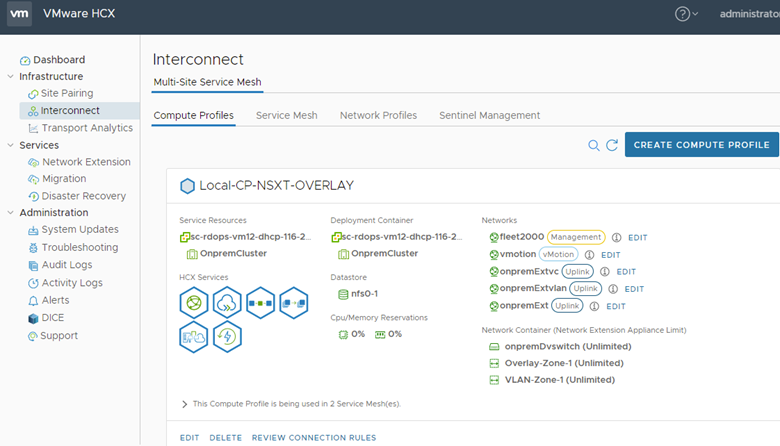

Characteristics of Compute Profiles

An HCX Manager system must have at least one Compute Profile.

The Compute Profile references clusters and inventory within the vCenter Server that is registered in HCX Manager (other vCenter Servers require their own HCX Manager).

Creating a Compute Profile does not deploy the HCX appliances (Compute Profiles can be created and not used).

Creating a Service Mesh deploys appliances using the settings defined in the source and the destination Compute Profiles.

A Compute Profile is "in use" when it is used in a Service Mesh configuration.

Changes to a Compute Profile are not reflected in the Service Mesh until a Service Mesh Re-Sync action is triggered.
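The characteristics above can be sketched as a small model in which a Service Mesh keeps its own copy of the Compute Profile settings and refreshes that copy only on a re-sync. This is a conceptual sketch, not HCX code: the `ComputeProfile` and `ServiceMesh` classes, the `resync` method, the `in_use` helper, and all example names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ComputeProfile:
    """Hypothetical stand-in: creating one deploys nothing."""

    name: str
    settings: Dict[str, str]


class ServiceMesh:
    """Hypothetical stand-in for a Service Mesh built from two Compute Profiles."""

    def __init__(self, name: str, source_cp: ComputeProfile,
                 destination_cp: ComputeProfile) -> None:
        self.name = name
        self.source_cp = source_cp
        self.destination_cp = destination_cp
        self.applied: Dict[str, Dict[str, str]] = {}
        self.resync()  # "deployment" copies the current profile settings

    def resync(self) -> None:
        """Copy the current Compute Profile settings into the mesh."""
        self.applied = {
            "source": dict(self.source_cp.settings),
            "destination": dict(self.destination_cp.settings),
        }


def in_use(cp: ComputeProfile, meshes: List[ServiceMesh]) -> bool:
    """A profile is in use when any Service Mesh references it."""
    return any(cp is m.source_cp or cp is m.destination_cp for m in meshes)


source = ComputeProfile("cp-source", {"deployment_cluster": "Cluster-1"})
destination = ComputeProfile("cp-dest", {"deployment_cluster": "Cluster-A"})
unused = ComputeProfile("cp-unused", {"deployment_cluster": "Cluster-9"})
mesh = ServiceMesh("mesh-1", source, destination)

# Editing the profile does not change what the mesh has applied...
source.settings["deployment_cluster"] = "Cluster-2"
before_resync = mesh.applied["source"]["deployment_cluster"]

# ...until a re-sync is triggered.
mesh.resync()
after_resync = mesh.applied["source"]["deployment_cluster"]
```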

Compute Profiles and HCX Service Appliances

- HCX Interconnect (HCX-IX)

- Network Extension (HCX-NET-EXT)

- WAN Optimization (WAN-OPT)

- Sentinel Gateway (HCX-SGW)

- Sentinel Data Receiver (HCX-SDR)

The following Compute Profile considerations apply to these appliances.

- For the HCX dataplane appliances (HCX-NET-EXT, WAN-OPT, HCX-SGW, and HCX-SDR), you can use Compute vMotion from vCenter Server to relocate the appliances within the same Deployment Cluster that is configured in the Compute Profile.

- Using Compute vMotion, you can relocate HCX dataplane appliances to a cluster other than the Deployment Cluster, with the following restrictions:

  - The host/cluster must have a port group association with all networks defined in the HCX Network Profiles. For example, this can include the Uplink, Management, and vMotion networks.

  - When relocating the WAN-OPT appliance in an NSX environment, the fleet network (hcx-UUID) must be mapped to all hosts/clusters.

- During Upgrade or Redeploy operations, HCX redeploys appliances into the same Deployment Cluster that is configured in the Compute Profile, and removes previously deployed appliances from other clusters.

- During a Service Mesh Resync operation, HCX relocates (vMotion) the appliances back to the same Deployment Cluster that is configured in the Compute Profile.

Note: For Storage vMotion, the target datastore must have an association/reachability with all hosts/clusters to which the appliances relocate. Relocating Network Extension (NE) appliances that are carrying data traffic over an extended datapath might cause some packet drops. This applies to relocation (vMotion) within the same cluster or to a different cluster, depending on the Compute Profile configuration.
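The relocation restrictions above amount to a pre-check on the target cluster: every network used by the HCX Network Profiles needs a port group there, and the target datastore must be reachable. The following sketch expresses that check over plain data. The `TargetCluster` type, the `relocation_blockers` function, and all network and datastore names are hypothetical; the sketch does not query vCenter Server or HCX, and it does not model the WAN-OPT fleet network requirement.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class TargetCluster:
    """Hypothetical summary of a candidate relocation target."""

    name: str
    port_group_networks: FrozenSet[str]   # networks with a port group on the cluster
    reachable_datastores: FrozenSet[str]  # datastores the cluster hosts can reach


def relocation_blockers(target: TargetCluster,
                        profile_networks: Iterable[str],
                        datastore: str) -> List[str]:
    """Return the reasons a relocation would violate the stated restrictions."""
    blockers = [
        f"{target.name}: no port group for network '{net}'"
        for net in sorted(set(profile_networks) - target.port_group_networks)
    ]
    if datastore not in target.reachable_datastores:
        blockers.append(f"{target.name}: datastore '{datastore}' is not reachable")
    return blockers


# Hypothetical inventory data for illustration.
profile_networks = ["management", "uplink", "vmotion"]
cluster_ok = TargetCluster(
    "Cluster-2",
    frozenset({"management", "uplink", "vmotion", "vm-net"}),
    frozenset({"ds-shared"}),
)
cluster_bad = TargetCluster(
    "Cluster-3",
    frozenset({"management"}),
    frozenset({"ds-local"}),
)

ok_result = relocation_blockers(cluster_ok, profile_networks, "ds-shared")
bad_result = relocation_blockers(cluster_bad, profile_networks, "ds-shared")
```

An empty list means that none of the modeled restrictions is violated; it does not guarantee that a relocation will succeed.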

Compute Profiles and Clusters

The examples that follow depict the configuration flexibility when using Compute Profiles to design HCX Service Mesh deployments. Each example is depicted in the context of inventory within a single vCenter Server connected to HCX. The configuration variations are decision points that apply uniquely to each environment.

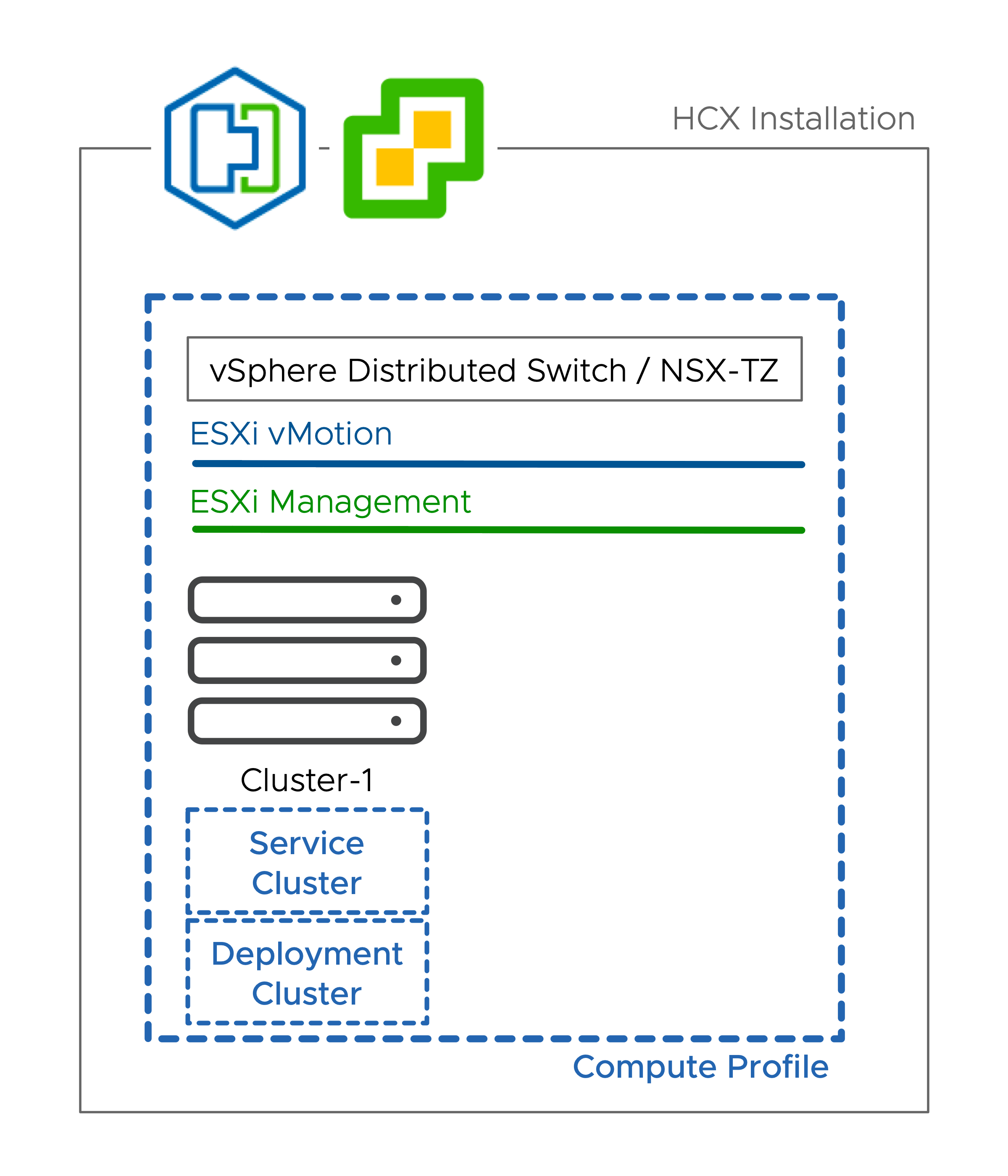

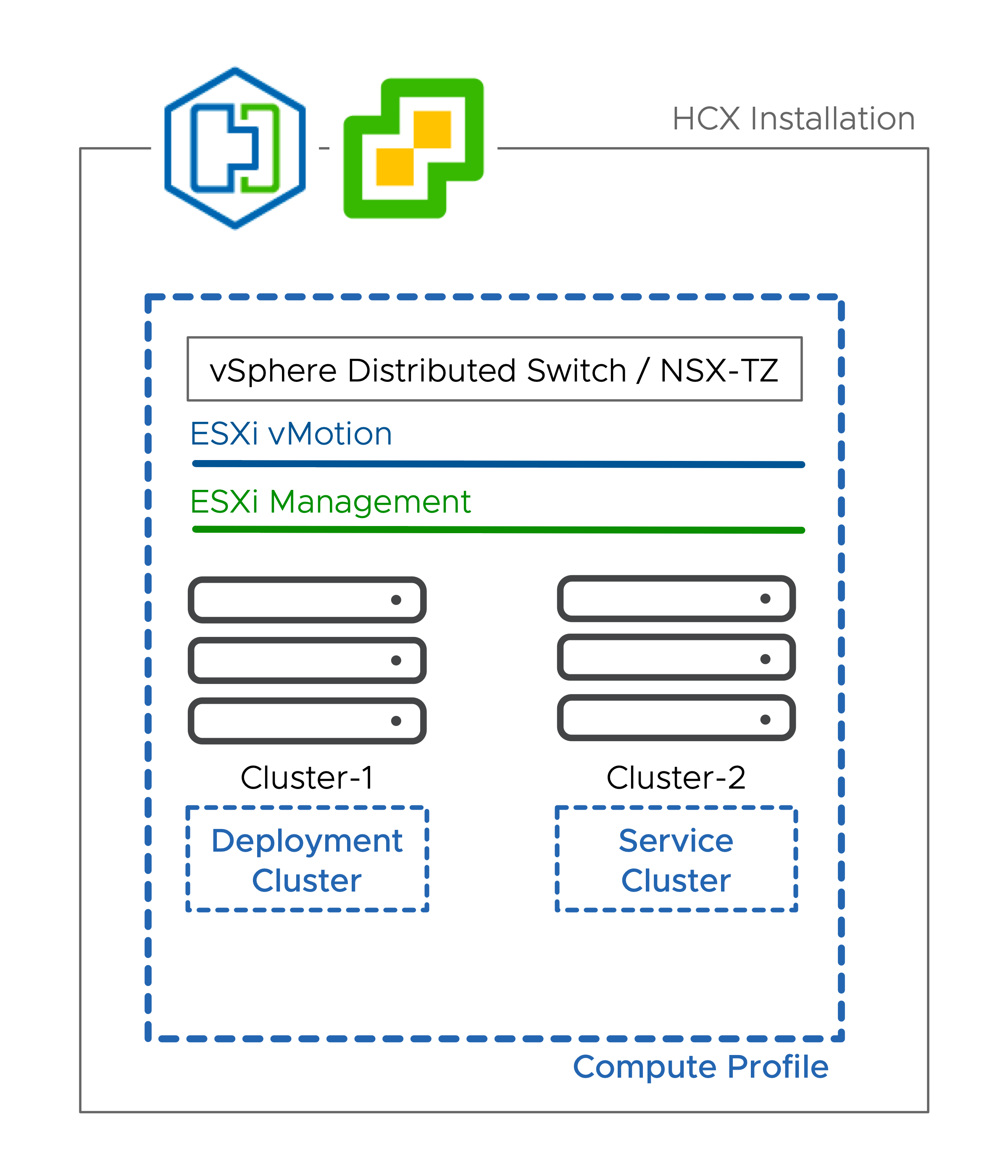

CP Configuration 1 - Single Cluster Deployments

In the illustrated example, Cluster-1 is both the Deployment Cluster and Service Cluster.

Single cluster deployments use a single Compute Profile (CP).

In the CP, the one cluster is designated as a Service Cluster and as the Deployment Cluster.

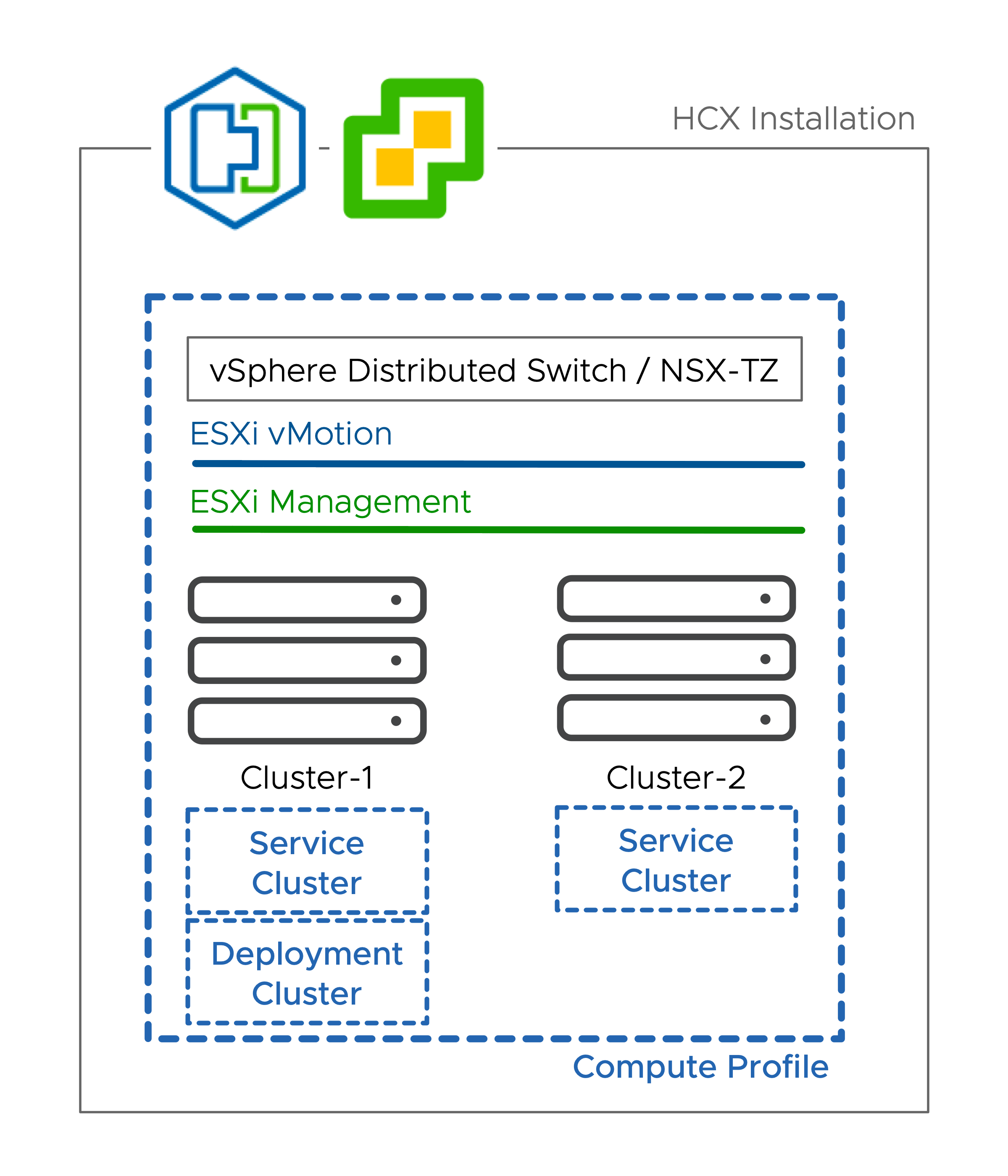

CP Configuration 2 - Multicluster (Simple CP)

In the illustrated example, Cluster-1 is the Deployment Cluster. Both Cluster-1 and Cluster-2 are Service Clusters.

- In this CP configuration, one cluster is designated as the Deployment Cluster, and all clusters (including the Deployment Cluster) are designated as Service Clusters.

- All the Service Clusters must be similarly connected (that is, same vMotion/Replication networks).

- When the Service Mesh is instantiated, one HCX-IX is deployed for all clusters.

- In larger deployments where clusters might change, a Datacenter container can be used (instead of individual clusters) so HCX automatically manages the Service Clusters.

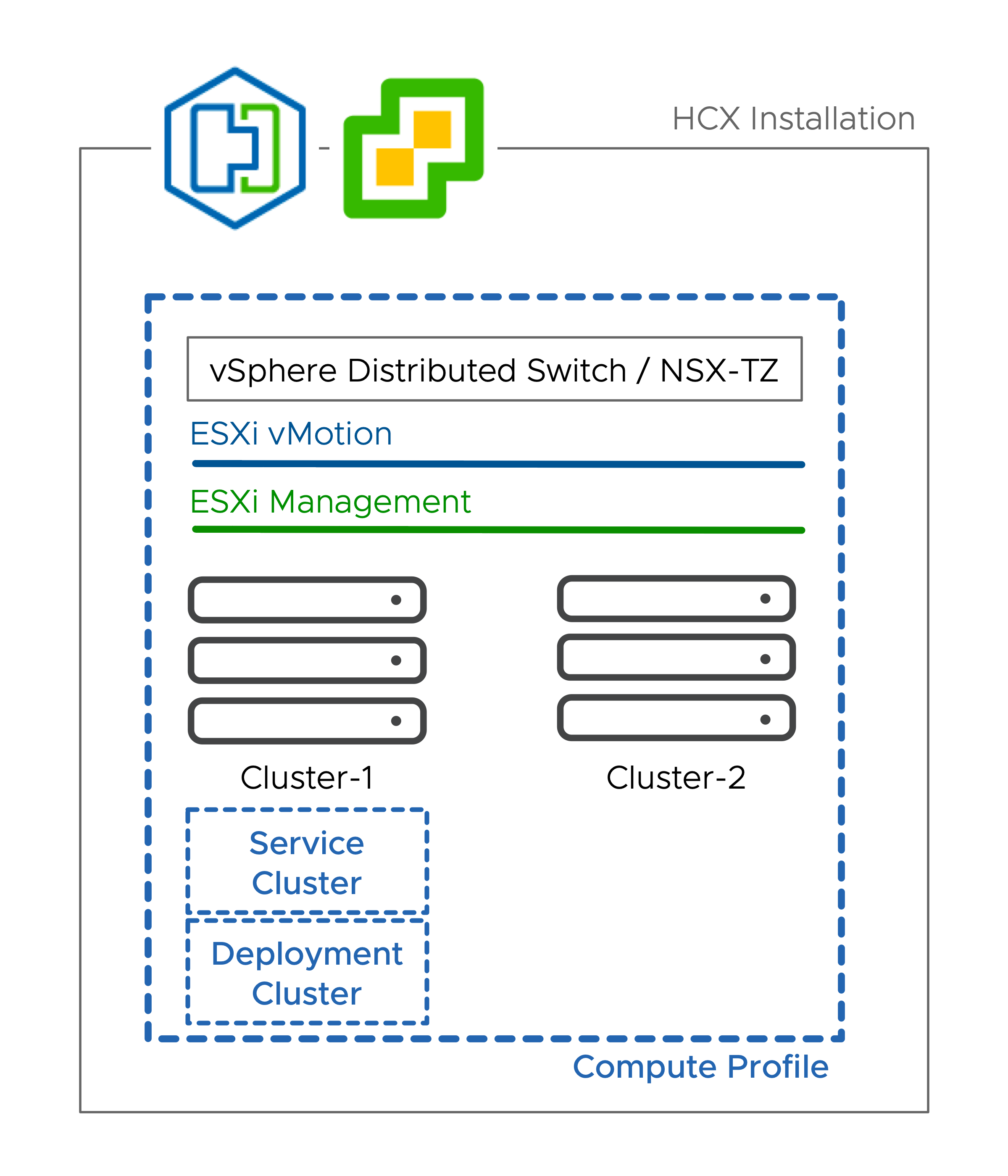

CP Configuration 3 - Multicluster (Dedicated Deployment Cluster)

In the illustrated example, Cluster-1 is the Deployment Cluster and Cluster-2 is the Service Cluster.

- In this CP configuration, one cluster is designated as the Deployment Cluster and is not a Service Cluster. All other clusters are designated as Service Clusters.

- This CP configuration can be used to dedicate resources to the HCX functions.

- This CP configuration can be used to control site-to-site migration egress traffic.

- This CP configuration can be used to provide a limited scope vSphere Distributed Switch in environments that heavily leverage the vSphere Standard Switch.

- For HCX migrations, this CP configuration requires the Service Cluster VMkernel networks to be reachable from the Deployment Cluster, where the HCX-IX is deployed.

- For HCX extension, this CP configuration requires the Deployment Cluster hosts to be within the workload networks' broadcast domain (Service Cluster workload networks must be available in the Deployment Cluster Distributed Switch).

- When the Service Mesh is instantiated, one HCX-IX is deployed for all clusters.

CP Configuration 4 - Cluster Exclusions

In the illustrated example, Cluster-2 is not included as a Service Cluster.

In this CP configuration, one or more clusters have been excluded from the Service Cluster configuration.

This configuration can be used to prevent portions of infrastructure from being eligible for HCX services. Virtual machines in clusters that are not designated as a Service Cluster cannot be migrated using HCX (migrations will fail).
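The effect of an exclusion can be sketched as a simple membership test: a virtual machine is eligible for HCX migration only if its cluster is listed as a Service Cluster. The function name, the inventory mapping, and the VM names below are hypothetical and used only for illustration.

```python
from typing import Dict, List, Set, Tuple


def split_by_eligibility(vm_to_cluster: Dict[str, str],
                         service_clusters: Set[str]) -> Tuple[List[str], List[str]]:
    """Split VMs into (eligible, excluded) by Service Cluster membership."""
    eligible: List[str] = []
    excluded: List[str] = []
    for vm, cluster in sorted(vm_to_cluster.items()):
        if cluster in service_clusters:
            eligible.append(vm)
        else:
            excluded.append(vm)
    return eligible, excluded


# Hypothetical inventory: Cluster-2 is not designated as a Service Cluster.
inventory = {
    "vm-app01": "Cluster-1",
    "vm-db01": "Cluster-2",
    "vm-web01": "Cluster-1",
}
eligible, excluded = split_by_eligibility(inventory, {"Cluster-1"})
```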

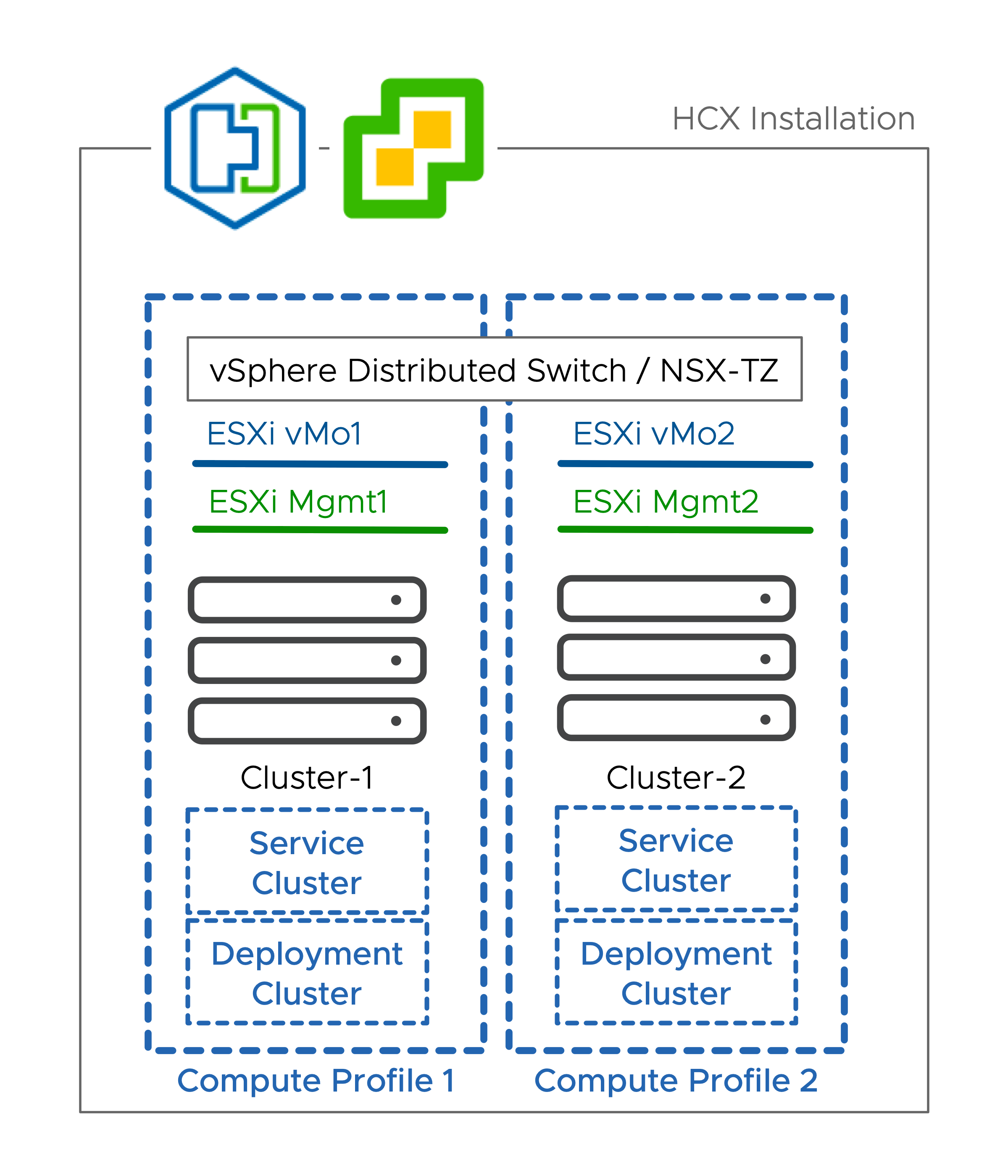

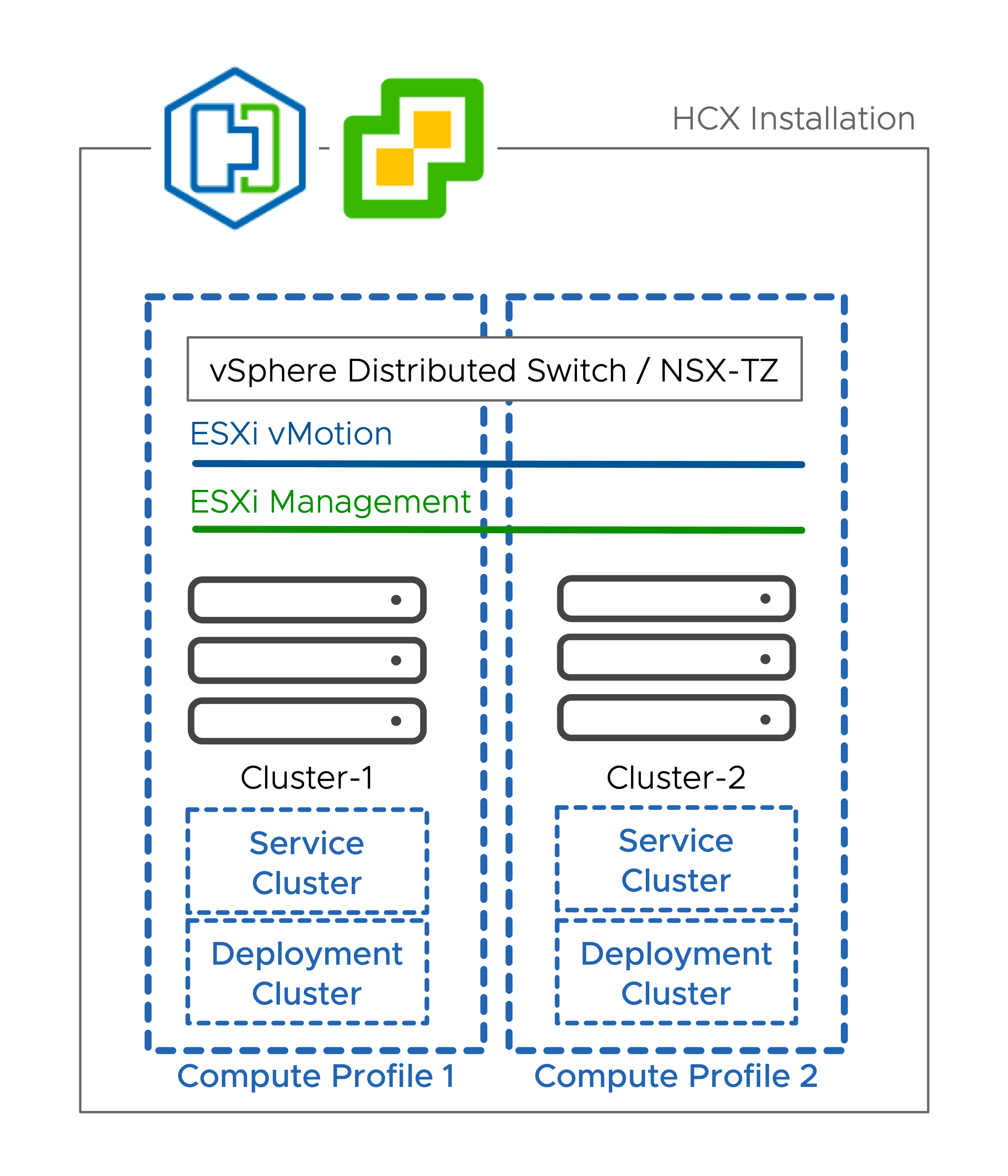

CP Configuration 5 - Multiple Compute Profiles (Optional, for Scale)

In the illustrated example, CP-1 has been created for Cluster-1, and CP-2 has been created for Cluster-2.

In the illustrated example, the VMkernel networks are the same. Creating additional Compute Profiles is optional (for scaling purposes).

In this Compute Profile configuration, Service Clusters are "carved" into Compute Profiles.

Every Compute Profile requires a Deployment Cluster, resulting in a dedicated Service Mesh configuration for each Compute Profile.

As an expanded example, if there were 5 clusters in a vCenter Server, you might have Service Clusters carved out as follows:

- CP-1: 1 Service Cluster, CP-2: 4 Service Clusters

- CP-1: 2 Service Clusters, CP-2: 3 Service Clusters

- CP-1: 1 Service Cluster, CP-2: 2 Service Clusters, CP-3: 2 Service Clusters

- CP-1: 1 Service Cluster, CP-2: 1 Service Cluster, CP-3: 1 Service Cluster, CP-4: 1 Service Cluster, CP-5: 1 Service Cluster

Note that distinct Compute Profile configurations can use the same Network Profiles for ease of configuration.
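A carve-out like the ones listed above can be sanity-checked with a short script. The sketch below counts one Service Mesh per Compute Profile, as described above, and reports clusters that appear in more than one Compute Profile or in none. Treating an overlap as something to flag is an assumption of this sketch (the text only says the clusters are "carved" into profiles), and an unassigned cluster may be intentional, as in the cluster exclusion configuration. The function name and the cluster-to-profile assignment are hypothetical.

```python
from collections import Counter
from typing import Dict, List


def carve_out_report(all_clusters: List[str],
                     carve_out: Dict[str, List[str]]) -> Dict[str, object]:
    """Summarize a mapping of Compute Profile name -> Service Clusters."""
    counts = Counter(c for clusters in carve_out.values() for c in clusters)
    return {
        # One dedicated Service Mesh configuration per Compute Profile.
        "service_meshes": len(carve_out),
        # Clusters listed under more than one Compute Profile.
        "duplicated": sorted(c for c, n in counts.items() if n > 1),
        # Clusters not listed under any Compute Profile.
        "unassigned": sorted(set(all_clusters) - set(counts)),
    }


clusters = [f"Cluster-{i}" for i in range(1, 6)]

# The "1 + 2 + 2" split, with a hypothetical assignment of clusters.
option = {
    "CP-1": ["Cluster-1"],
    "CP-2": ["Cluster-2", "Cluster-3"],
    "CP-3": ["Cluster-4", "Cluster-5"],
}
report = carve_out_report(clusters, option)
```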

CP Configuration 6 - Multiple Compute Profiles (with Dedicated Network Profiles)

In the illustrated example, Cluster-1 uses vMotion and Mgmt network 1. Cluster-2 uses vMotion and Mgmt network 2.

In the illustrated example, the VMkernel networks are different, and isolated from each other. Creating dedicated Network Profiles (NPs) and dedicated Compute Profiles (CPs) is required.

In this CP configuration, the Service Clusters are "carved up" into distinct Compute Profiles. The Compute Profiles reference cluster-specific Network Profiles.

The Service Mesh HCX-IX appliance connects directly to the cluster vMotion network. For this reason, any time the Replication and vMotion networks differ between clusters, create cluster-specific Network Profiles and assign them to cluster-specific Compute Profiles. Each of these Compute Profiles is then instantiated using a cluster-specific Service Mesh.
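One way to decide how many dedicated Compute Profiles this configuration calls for is to group clusters by the VMkernel networks they use: clusters that share the same networks can share a Compute Profile, while each distinct combination needs its own Network Profiles and Compute Profile. The sketch below assumes each cluster is described by a pair of network names; the function name and all network names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (vMotion network, Management/Replication network); names are illustrative.
NetworkPair = Tuple[str, str]


def group_by_networks(
    cluster_networks: Dict[str, NetworkPair],
) -> Dict[NetworkPair, List[str]]:
    """Group clusters that use identical VMkernel networks."""
    groups: Dict[NetworkPair, List[str]] = defaultdict(list)
    for cluster in sorted(cluster_networks):
        groups[cluster_networks[cluster]].append(cluster)
    return dict(groups)


# Isolated networks: each cluster lands in its own group.
isolated = {
    "Cluster-1": ("vmotion-1", "mgmt-1"),
    "Cluster-2": ("vmotion-2", "mgmt-2"),
}

# Shared networks: both clusters fall into one group.
shared = {
    "Cluster-1": ("vmotion-1", "mgmt-1"),
    "Cluster-2": ("vmotion-1", "mgmt-1"),
}

isolated_groups = group_by_networks(isolated)
shared_groups = group_by_networks(shared)
```

Each group in the result corresponds to one set of Network Profiles and at least one Compute Profile. With shared networks, a single Compute Profile is enough, and additional profiles remain optional for scale.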