VMware Integrated OpenStack with Kubernetes supports VDS, NSX-V, and NSX-T backend networking.

Networking Support

The container network, the load balancer used for Kubernetes Services, and network policy support all depend on the backend networking.

| Backend Networking | Container Network | Load Balancer | Network Policy |
|---|---|---|---|
| VDS | Flannel | Kubernetes Nginx Ingress Controller | Unsupported |
| NSX-V | Flannel | NSX Edge | Unsupported |
| NSX-T | NSX-T Container Plug-in | NSX-T Edge | Supported |
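
The table can also be read as a simple lookup from backend to default components. The following is a minimal sketch of that lookup in Python, for illustration only; the names `BackendDefaults`, `BACKEND_DEFAULTS`, and `defaults_for` are hypothetical and are not part of VMware Integrated OpenStack with Kubernetes.

```python
from typing import NamedTuple


class BackendDefaults(NamedTuple):
    """Default networking components for one backend (hypothetical type)."""
    container_network: str
    load_balancer: str
    network_policy_supported: bool


# Values mirror the support table; the structure itself is an illustration.
BACKEND_DEFAULTS = {
    "VDS": BackendDefaults("Flannel", "Kubernetes Nginx Ingress Controller", False),
    "NSX-V": BackendDefaults("Flannel", "NSX Edge", False),
    "NSX-T": BackendDefaults("NSX-T Container Plug-in", "NSX-T Edge", True),
}


def defaults_for(backend: str) -> BackendDefaults:
    """Return the default components for a backend name, ignoring case and padding."""
    key = backend.strip().upper()
    if key not in BACKEND_DEFAULTS:
        raise ValueError(f"unknown backend: {backend!r}")
    return BACKEND_DEFAULTS[key]


if __name__ == "__main__":
    for name in BACKEND_DEFAULTS:
        print(name, defaults_for(name))
```

A check such as `defaults_for("NSX-T").network_policy_supported` makes it explicit that network policies are available only on the NSX-T backend.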

- The container network is provided by a Container Network Interface (CNI) plugin, which manages container networking. The plugin can allocate IP addresses, create a firewall, and create ingress for the pods.

- Flannel is a network fabric for containers and is the default for VDS and NSX-V networking.

- NSX-T Container Plug-in (NCP) is a software component that sits between NSX Manager and the Kubernetes API server. It monitors changes to Kubernetes objects and creates networking constructs based on the changes reported by the Kubernetes API. NCP includes native support for containers. It is optimized for NSX-T networking and is the default container network for the NSX-T backend.

- The load balancer is a type of service that you can create in Kubernetes. To access the load balancer, you specify the external IP address defined for the service.

- The Kubernetes Nginx Ingress Controller is deployed on VDS by default but can be deployed on any backend platform. The Ingress Controller is a daemon, deployed as a Kubernetes Pod. It watches the ingress endpoint of the API server for updates to the ingress resource so that it can satisfy requests for ingress. Because the load balancer service type is not supported in a VDS environment, the Nginx Ingress Controller is used to expose the service externally.

- The NSX Edge load balancer distributes network traffic across multiple servers to optimize resource use and provide redundancy. It is the default for NSX-V and is used for Kubernetes Services.

- The NSX-T Edge load balancer functions the same as the NSX Edge load balancer and is the default for NSX-T. However, when using NCP, you cannot specify a static IP address for the load balancer.

- By default, pods accept network traffic from any source. Network policies provide a way of restricting the communication between pods and with other network endpoints. See https://kubernetes.io/docs/concepts/services-networking/network-policies/.
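
To make the last point concrete, the sketch below builds a Kubernetes `NetworkPolicy` manifest that admits traffic to a set of pods only from pods carrying a given label. It is a minimal illustration, not a recommended policy: the function name `allow_from_label_policy` and the labels, namespace, and port in the example are assumptions chosen for the demonstration. The manifest fields follow the upstream `networking.k8s.io/v1` API. Per the table above, such a policy is enforced only on the NSX-T backend.

```python
import json


def allow_from_label_policy(name, namespace, target_labels, allowed_labels, port):
    """Build a NetworkPolicy dict (hypothetical helper).

    Pods matching target_labels accept ingress only from pods in the same
    namespace that match allowed_labels, and only on the given TCP port.
    """
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods the policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only these source pods are admitted.
                    "from": [{"podSelector": {"matchLabels": allowed_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    # Example values are assumptions, not taken from the product.
    policy = allow_from_label_policy(
        "db-allow-api", "default", {"app": "db"}, {"app": "api"}, 5432
    )
    print(json.dumps(policy, indent=2))
```

Because `kubectl` accepts JSON manifests, the printed output could be piped to `kubectl apply -f -` on a cluster where network policies are supported.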

VDS Backend

With the VDS backend, VMware Integrated OpenStack with Kubernetes deploys Kubernetes cluster nodes directly on the OpenStack provider network. The OpenStack cloud administrator must verify that the provider network is accessible from outside the vSphere environment. VDS networking does not include native load balancing functionality for the cluster nodes, so VMware Integrated OpenStack with Kubernetes deploys HAProxy nodes outside the Kubernetes cluster to provide load balancing.

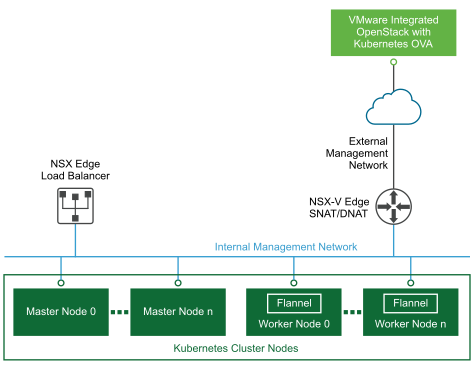

NSX-V Backend

With the NSX-V backend, VMware Integrated OpenStack with Kubernetes deploys multiple nodes within a single cluster behind the native NSX Edge load balancer. The NSX Edge load balancer manages up to 32 worker nodes. Every node within the Kubernetes cluster is attached to an internal network, and the internal network is attached to a router whose default gateway is set to the external management network.

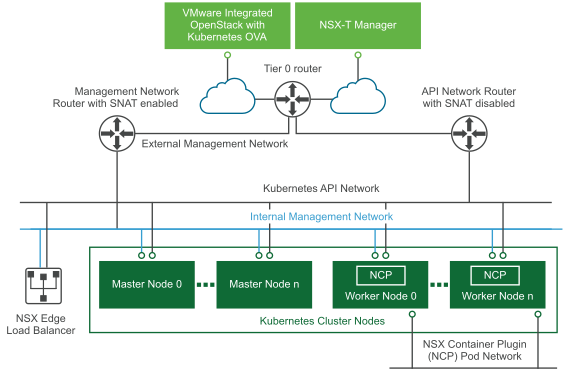
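
On a backend that supports the load balancer service type, a workload requests a load balancer by creating a Kubernetes Service of type `LoadBalancer`. The sketch below builds such a manifest in Python. It is an illustration under stated assumptions: the helper name `load_balancer_service` and the example name, selector, and ports are hypothetical, and how the Edge load balancer is provisioned behind the Service is handled by the platform and is not shown here.

```python
import json


def load_balancer_service(name, selector, port, target_port):
    """Build a Service manifest of type LoadBalancer (hypothetical helper)."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            # Pods with these labels receive the traffic.
            "selector": selector,
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


if __name__ == "__main__":
    # Example values are assumptions chosen for the demonstration.
    service = load_balancer_service("web", {"app": "web"}, 80, 8080)
    print(json.dumps(service, indent=2))
```

After the Service is created, the external IP address assigned to it is reported under `status.loadBalancer.ingress` and appears in the `EXTERNAL-IP` column of `kubectl get service`.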

NSX-T Backend

With the NSX-T backend, each Kubernetes node has three NICs:

- One NIC connects to the NCP Pod network, an internal network with no routing outside the vSphere environment. When NCP is enabled, this NIC is dedicated to Pod traffic. The IP addresses used for translating Pod IPs with SNAT rules and for exposing ingress controllers with SNAT/DNAT rules are referred to as external IPs.

- One NIC connects to the Kubernetes API network, which is attached to a router with SNAT disabled. Special Pods such as KubeDNS can access the API server using this network.

- One NIC connects to the internal management network. This NIC is accessible from outside the vSphere environment through a floating IP that is assigned by the external management network.

NSX-T networking does not include native load balancing functionality for the cluster nodes, so VMware Integrated OpenStack with Kubernetes creates two separate load balancer nodes. These nodes connect to the management network and the API network.