This topic explains how to provision and configure NSX Advanced Load Balancer in Google Cloud Platform (GCP) with support for Bring Your Own IP Address (BYOIP).

Linux Server Cloud and GCP IPAM on GCP are not supported by NSX Advanced Load Balancer. For more details on Internal Load Balancer and BYOIP, see GCP VIP as Internal Load Balancer and BYOIP.

Public IP Support for BYOIP in GCP

Google has introduced public IP support for Internal Load Balancing in GCP, which lets you bring your own public IP addresses to Google Cloud. NSX Advanced Load Balancer supports this feature: you create a load balancer with a VIP from the GCP VPC subnet, and then create a static route in GCP with the public IP as the destination and the VIP as the next hop.

BYOIP in GCP

GCP offers Internal Load Balancing for TCP and UDP traffic. This enables you to operate and scale your services behind a private load balancing IP address that is accessible only to your internal virtual machine instances.

Using the load balancer you can configure a private load balancing IP address to be the front end for your private back end instances. With this, you will not need a public IP address for your load balancing service. The internal client requests stay internal to your VPC network and the region, resulting in lower latency, as all load-balanced traffic will be restricted within the Google network.

Features

Works with auto mode VPC networks, custom mode VPC networks, and legacy networks.

Allows autoscaling across a region, where it can be implemented within the regional managed instance groups, thus making services immune to zonal failures.

Supports traditional 3-tier web services, where the web tier uses external load balancers such as HTTP, HTTPS, or TCP/UDP network load balancing, and the instances running the application tier or the back end databases are deployed behind the Internal Load Balancer.

Load Balancing and Health Check Support

Supports load balancing TCP and UDP traffic to an internal IP address. You can also configure the Internal Load Balancing IP from within your VPC network.

Supports load balancing across instances in a region. This allows instantiating instances in multiple availability zones within the same region.

Provides fully managed load balancing service that scales as required, to handle client traffic.

GCP health check system monitors the back end instances. You can configure TCP, SSL (TLS), HTTP, or HTTPS health check for these instances.

Considerations

Health check probes originate from the address ranges 130.211.0.0/22 and 35.191.0.0/16. Add firewall rules to allow traffic from these ranges.

If all instances are unhealthy in the back-end service, the ILB load balances the traffic among all instances.
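When auditing firewall rules or packet captures, it can help to test whether a source address belongs to the health check probe ranges listed above. The following is a minimal sketch that treats 130.211.0.0/22 and 35.191.0.0/16 as two separate CIDR blocks. The function name is hypothetical and not part of any NSX Advanced Load Balancer or GCP tool.

```python
import ipaddress

# Source ranges of the GCP health check probes, as listed under Considerations.
HEALTH_CHECK_RANGES = [
    ipaddress.ip_network("130.211.0.0/22"),
    ipaddress.ip_network("35.191.0.0/16"),
]


def is_health_check_source(address: str) -> bool:
    """Return True if the address falls inside one of the probe ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in HEALTH_CHECK_RANGES)
```

For example, `is_health_check_source("35.191.200.7")` is true, while an address from a private subnet such as `10.0.0.1` is not.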

VIP - Internal Load Balancer (ILB)

The VIP is reachable through an Internal Load Balancer (ILB). The VIP is allocated from a GCP subnet and is the front end IP of the ILB. The ILB back ends are the SEs on which the virtual service is placed.

The ILB works across projects in a shared VPC deployment. Only auto-allocation of the VIP is supported.

You can create all the GCP resources for the ILB in the SE’s project. However, you cannot update the forwarding rule port after the rule is created.

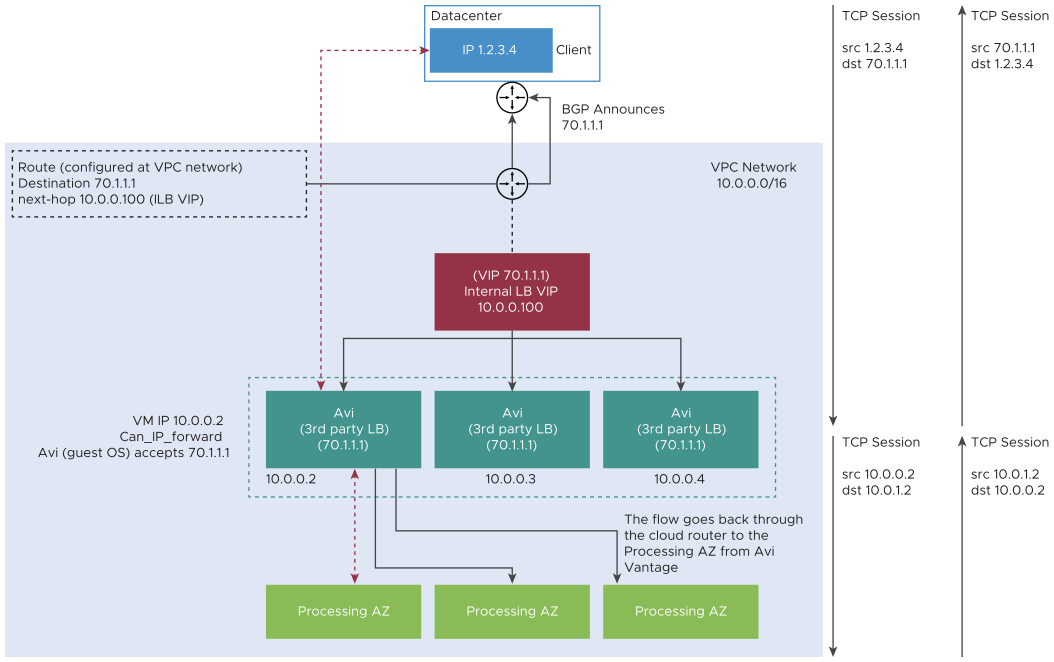

Floating or Public IP - GCP Route with ILB as next-hop

The floating IP is a GCP Route with destination IP as the public IP (BYOIP or GCP allocated) and next hop as the ILB.

You can configure GCP cloud routers to advertise the FIP routes through BGP.
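To make the shape of such a route concrete, the following sketch builds a route definition with the public IP as a /32 destination and the ILB as the next hop. The field names (`destRange`, `nextHopIlb`, `network`, `priority`) follow the Compute Engine routes resource. The function name, the default priority, and the sample resource paths are illustrative assumptions, and the sketch does not claim to reproduce the exact request the Controller sends.

```python
import ipaddress


def build_fip_route(name, network_url, public_ip, ilb_forwarding_rule_url,
                    priority=1000):
    """Build a route body that sends one public IPv4 address to an ILB."""
    # Validate the floating IP and express it as a single-host destination.
    dest = ipaddress.ip_network(f"{ipaddress.IPv4Address(public_ip)}/32")
    return {
        "name": name,
        "network": network_url,
        "destRange": str(dest),
        "nextHopIlb": ilb_forwarding_rule_url,
        "priority": priority,  # assumed default, adjust as required
    }


# Hypothetical resource paths, for illustration only.
route = build_fip_route(
    "fip-route-1",
    "projects/net1/global/networks/gcp_vcp",
    "203.0.113.10",
    "projects/se-project/regions/us-central1/forwardingRules/ilb-rule-1",
)
```

Building the body separately from the API call keeps the validation step (a malformed IP raises `ValueError`) independent of any credentials or network access.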

Limitations

ILB supports UDP and TCP. However, for UDP the health check type is not UDP (a Google limitation), so the failover time is higher.

The virtual IP cannot be shared by other virtual services as the forwarding ports cannot be updated. A new forwarding rule with the same IP but different port is not allowed in GCP.

A virtual service can have up to 5 ports only, because a forwarding rule can have only 5 ports.

The health check is done on the same port as the VIP and not on the instance IP.

If the Service Engine is a back end in N backend services, it receives N health check probes per health check interval.

Ensure that the VIP is not configured in the same subnet as that of the Service Engine.
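Two of the limitations above lend themselves to a pre-deployment check: the 5-port limit of a forwarding rule, and the requirement that the VIP is outside the Service Engine subnet. The following sketch validates a planned virtual service against both. The function and constant names are hypothetical, and the check is not part of the product.

```python
import ipaddress

MAX_FORWARDING_RULE_PORTS = 5  # limit stated in the Limitations list


def check_virtual_service(vip, ports, se_subnet):
    """Return a list of problems found; an empty list means none."""
    problems = []
    unique_ports = sorted(set(ports))
    if len(unique_ports) > MAX_FORWARDING_RULE_PORTS:
        problems.append(
            f"{len(unique_ports)} ports requested, "
            f"limit is {MAX_FORWARDING_RULE_PORTS}"
        )
    if ipaddress.ip_address(vip) in ipaddress.ip_network(se_subnet):
        problems.append(f"VIP {vip} is inside the SE subnet {se_subnet}")
    return problems
```

For instance, `check_virtual_service("10.20.0.5", [80, 443], "10.10.0.0/24")` returns an empty list, whereas a VIP of `10.10.0.5` with six ports reports both problems.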

Deploying BYOIP

With BYOIP, you can configure a private RFC 1918 address as the load balancing IP address and configure back end instance groups to handle requests that are sent to the load balancing IP address from client instances.

The back end instance groups can be zonal or regional, which enables you to configure instance groups according to your availability requirements.

The traffic sourced from the client instances to the ILB must belong to the same VPC network and region, but can be in different subnets as compared to the load balancing IP address and the back end.

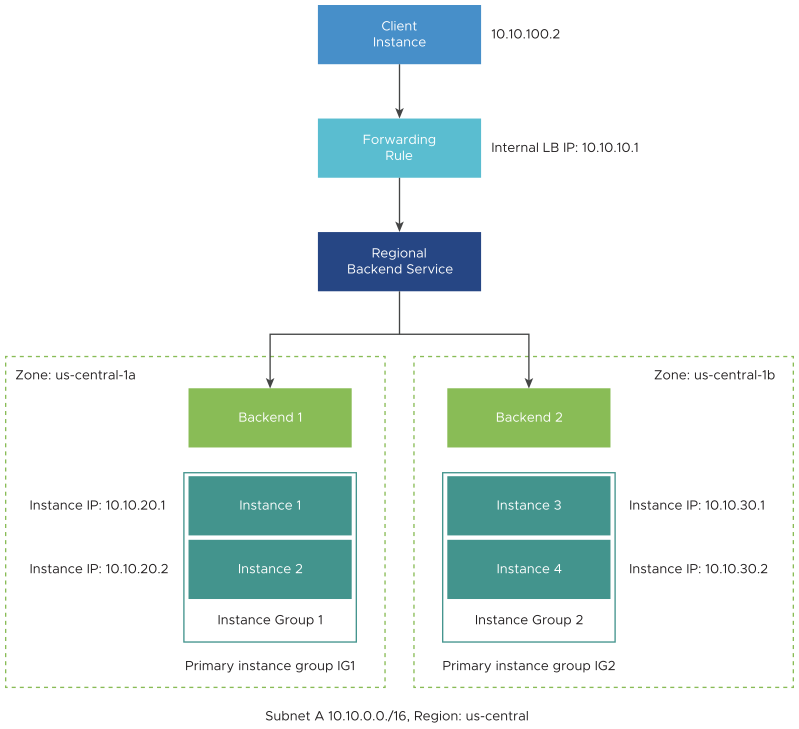

The following figure represents a sample deployment:

Provisioning Multi-Project

A virtual private cloud (VPC) is a global private isolated virtual network partition that provides managed networking functionality to the GCP resources. BYOIP in GCP supports shared VPC, also known as XPN, that allows you to connect resources from multiple projects to a common VPC network.

When using a shared VPC, you must designate one project as the host project and one or more projects as service projects.

- Host Project: This contains one or more shared VPC networks. One or more service projects can be attached to the host project to use the shared network resources.

- Service Project: This is any project that participates in a shared VPC by being attached to the host project. You can configure new IPAM fields in NSX Advanced Load Balancer and cross-project deployment is also supported.

Reach out to your Google support contact for allowing specific projects.

GCP Settings

The NSX Advanced Load Balancer Controller, Service Engines, and the network can all be in different projects.

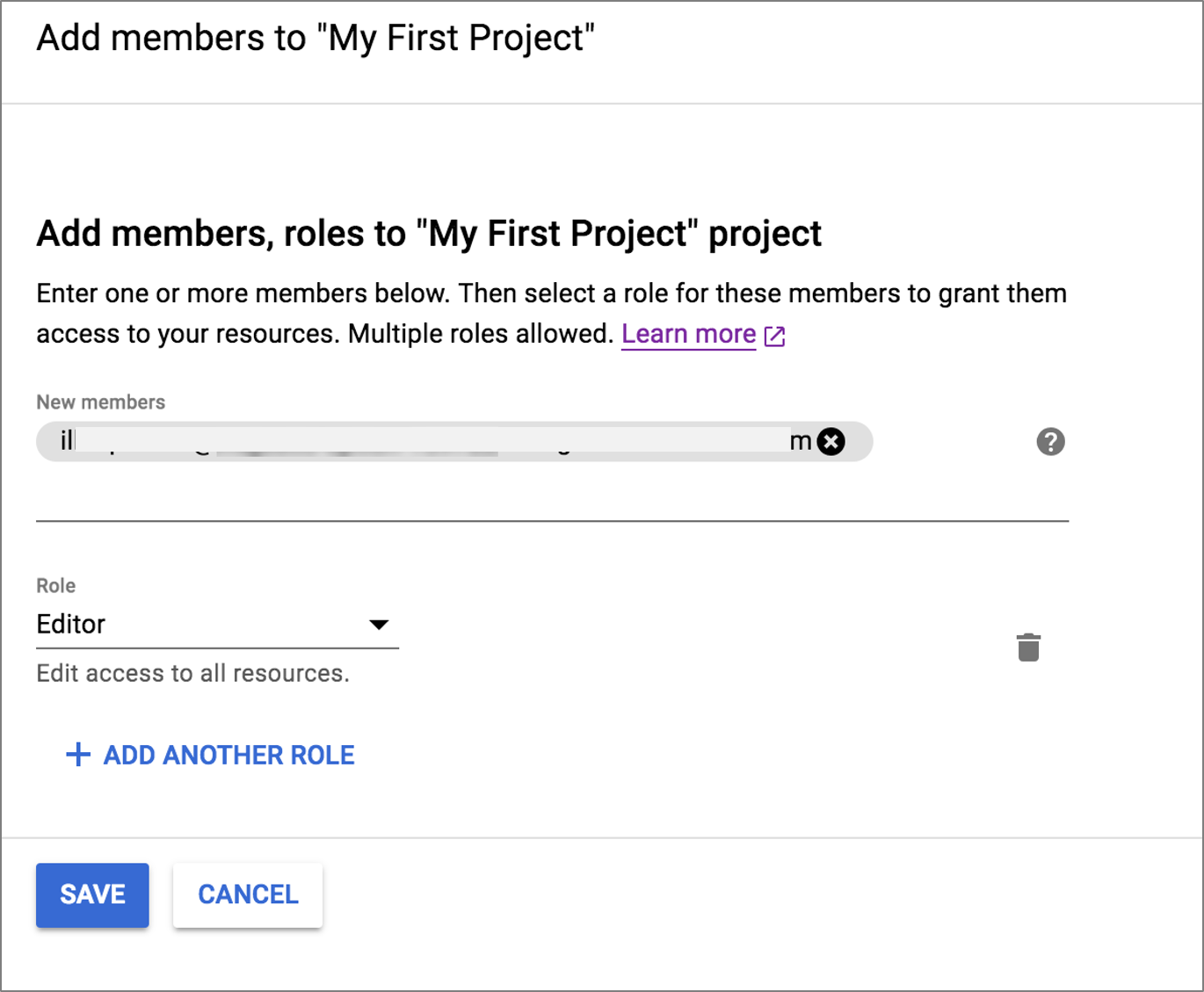

By default, GCP adds a service account to each virtual machine in the following format: [PROJECT_NUMBER]-compute@developer.gserviceaccount.com.

For integration with NSX Advanced Load Balancer:

- Set up the service account with the Editor role for the Controller VM to call the Google Cloud APIs.

- Add this service account as a member to the Service Engines and XPN project.

- Map this account to the virtual machines created, so that the required permissions are allotted.

The following is an example of creating a new service account:

[email protected] in the Controller project:

NSX Advanced Load Balancer Configuration

Follow the steps below to configure NSX Advanced Load Balancer BYOIP support in GCP:

Setting up the Controller.

Configuring GCP IPAM.

Attaching GCP IPAM.

Setting up Service Engine.

Configuring Virtual Service.

Setting up the NSX Advanced Load Balancer Controller VM

Follow the instructions outlined in Installing NSX Advanced Load Balancer Controller in a Linux Server Cloud to install or run the Controller on the previously created instance. Also ensure that the Service Engine status is active, indicated as Green.

Configuring GCP IPAM Using CLI

You can configure GCP IPAM using the commands as shown below.

    [admin:10-1-1-1]: > configure ipamdnsproviderprofile gcp-ipam
    [admin:10-1-1-1]: ipamdnsproviderprofile> type ipamdns_type_gcp
    [admin:10-1-1-1]: ipamdnsproviderprofile> gcp_profile
    [admin:10-1-1-1]: ipamdnsproviderprofile:gcp_profile> use_gcp_network
    [admin:10-1-1-1]: ipamdnsproviderprofile:gcp_profile> vpc_network_name gcp_vcp
    [admin:10-1-1-1]: ipamdnsproviderprofile:gcp_profile> region_name us-central1
    [admin:10-1-1-1]: ipamdnsproviderprofile:gcp_profile> network_host_project_id net1
    [admin:10-1-1-1]: ipamdnsproviderprofile:gcp_profile> save
    [admin:10-1-1-1]: ipamdnsproviderprofile> save
    +-------------------------+-------------------------------------------------------------+
    | Field                   | Value                                                       |
    +-------------------------+-------------------------------------------------------------+
    | uuid                    | ipamdnsproviderprofile-e39d51e5-2170-415d-b4ac-7a82068b2bc5 |
    | name                    | gcp-ipam                                                    |
    | type                    | IPAMDNS_TYPE_GCP                                            |
    | gcp_profile             |                                                             |
    | match_se_group_subnet   | False                                                       |
    | use_gcp_network         | True                                                        |
    | region_name             | us-central1                                                 |
    | vpc_network_name        | gcp_vcp                                                     |
    | allocate_ip_in_vrf      | False                                                       |
    | network_host_project_id | net1                                                        |
    | tenant_ref              | admin                                                       |
    +-------------------------+-------------------------------------------------------------+

The field use_gcp_network is not available in the UI. This option can be enabled only through the CLI.
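For automation or review purposes, the same profile can be written as a structured object. The sketch below only mirrors the field names and values shown in the CLI output above. The helper name is hypothetical, and whether this object can be submitted unchanged to the Controller API is an assumption that should be checked against the API documentation for your release.

```python
import json


def gcp_ipam_profile(name, vpc_network_name, region_name,
                     network_host_project_id):
    """Assemble a GCP IPAM profile using the field names from the CLI output."""
    return {
        "name": name,
        "type": "IPAMDNS_TYPE_GCP",
        "gcp_profile": {
            # Set through the CLI in the example above; not exposed in the UI.
            "use_gcp_network": True,
            "vpc_network_name": vpc_network_name,
            "region_name": region_name,
            "network_host_project_id": network_host_project_id,
        },
    }


profile = gcp_ipam_profile("gcp-ipam", "gcp_vcp", "us-central1", "net1")
print(json.dumps(profile, indent=2))
```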

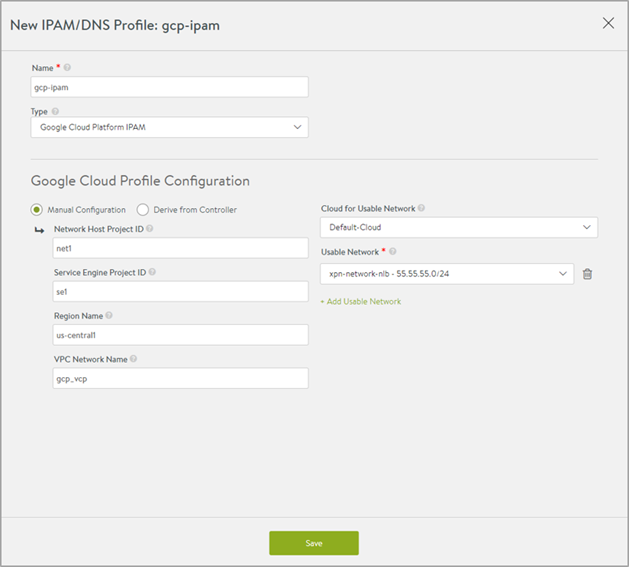

Configuring GCP IPAM Through UI

Navigate to and click Create.

Choose the Type as Google Cloud Platform IPAM from the drop-down menu.

For the Profile Configuration, click Manual Configuration, to enter the details for:

Network Host Project ID

Service Engine Project ID

Region Name

VPC Network Name

You can alternatively select the option for Derive from Controller, to obtain parameters for the profile configuration.

Click Add Usable Network to specify the network details.

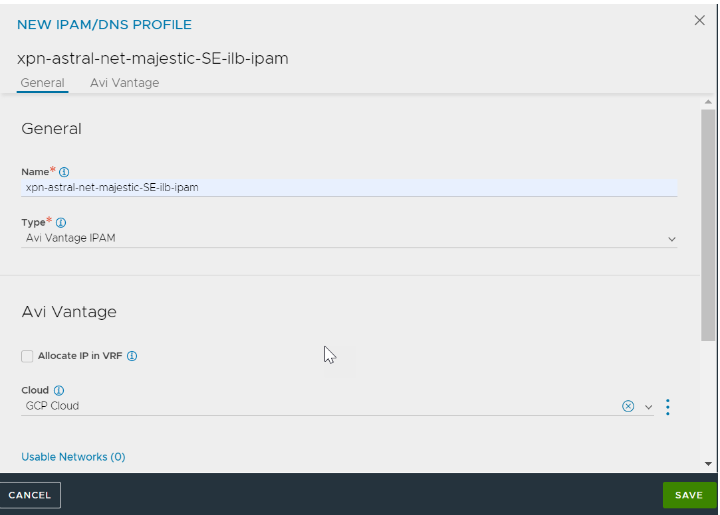

Starting with NSX Advanced Load Balancer 22.1.3, the following UI is available:

In the NEW IPAM/DNS PROFILE screen, specify the profile name.

Select Avi Vantage IPAM from the Type drop-down menu and select a previously created cloud for the Cloud field.

Add Usable Networks and click SAVE.

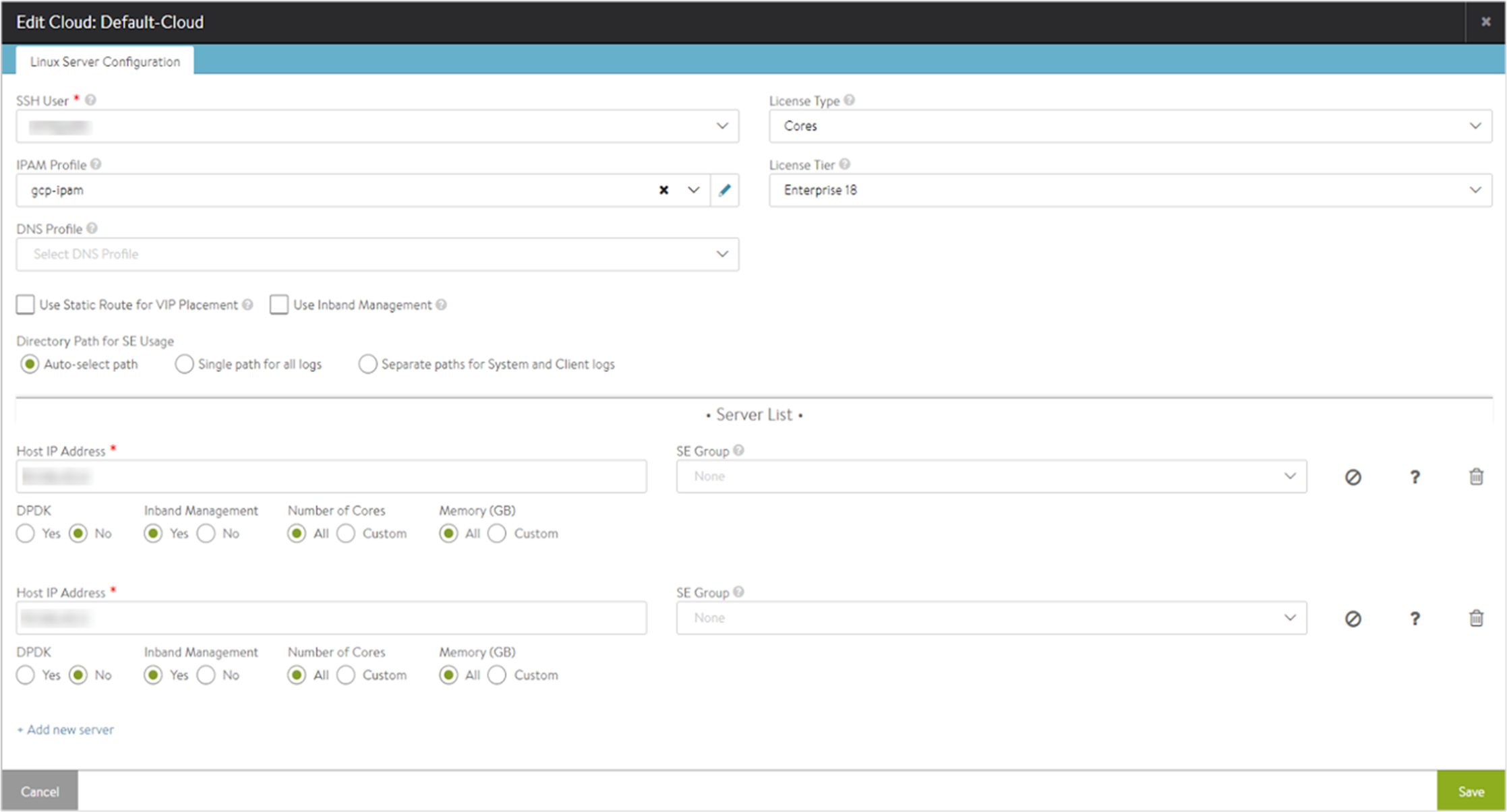

Attaching GCP IPAM

To attach GCP IPAM to a Linux Server cloud, edit the Default-Cloud. Choose the GCP IPAM provider.

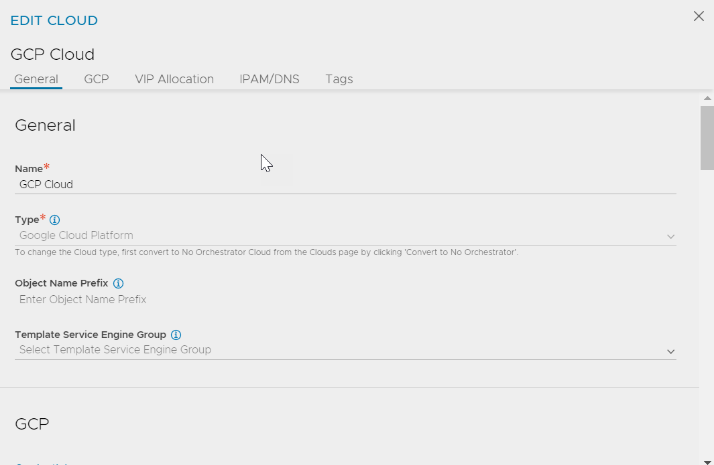

Starting with NSX Advanced Load Balancer 22.1.3, the following UI is available:

You can add the IPAM profile while editing a cloud as follows:

Edit an existing cloud.

Navigate to IPAM/DNS tab. In the IPAM Profile field, select the created IPAM profile from the drop-down menu.

Setting up Service Engine

Create Service Engines.

Add the created Service Engines to the Linux Server cloud.

Configure the Linux server cloud using the IP addresses of the Service Engine instances.

Configuring Virtual Service

Follow the instructions in Creating Virtual Service in the NSX Advanced Load Balancer Deployment Guide for Google Cloud Platform (GCP) to create virtual services.

Ensure that the configured VIP is not in the same subnet as the Service Engines.

Cloud Router Integration

Google Cloud Router enables dynamic exchange of routes between the Virtual Private Cloud (VPC) and on-premises networks by using Border Gateway Protocol (BGP).

The GCP Cloud Router will be updated by the Controller.

Prerequisites

The following permissions are required for updating the Cloud Router:

For more information, see Creating Custom Roles.

Limitations

Multiple clusters cannot share the same cloud router as the update cannot be coordinated between them.

Multiple NSX Advanced Load Balancer clouds cannot share the same Cloud Router.

If a Cloud Router is removed from the cloud configuration, the Controller no longer manages that router. The NSX Advanced Load Balancer floating IPs (FIPs) on that Cloud Router must be deleted manually from the Cloud Router in GCP.
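Because a Cloud Router must not be shared between clouds, a quick check over the planned configuration can catch conflicts before they are applied. The following sketch takes a mapping of cloud names to Cloud Router names and reports any router that appears under more than one cloud. The function name and the input format are assumptions made for illustration.

```python
from collections import defaultdict


def find_shared_routers(cloud_routers):
    """Map each router name used by more than one cloud to those clouds."""
    users = defaultdict(set)
    for cloud, routers in cloud_routers.items():
        for router in routers:
            users[router].add(cloud)
    return {
        router: sorted(clouds)
        for router, clouds in users.items()
        if len(clouds) > 1
    }
```

An empty result means that no router is shared. The same idea extends to comparing configurations exported from multiple Controller clusters.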

Configuring Cloud Routers

Create the Cloud Router in the network project and in the region where the Service Engine VMs are created. For more information, see Creating Cloud Routers.

Add the Cloud Router names in the custom_tags field of the cloud as shown in the configuration below:

    [admin:10-152-134-17]: > configure cloud Default-Cloud
    [admin:10-152-134-17]: cloud> custom_tags
    New object being created
    [admin:10-152-134-17]: cloud:custom_tags> tag_key cloud_router_ids
    [admin:10-152-134-17]: cloud:custom_tags> tag_val "router-1, router-2"
    [admin:10-152-134-17]: cloud:custom_tags> save
    [admin:10-152-134-17]: cloud> save
    +--------------------------------+--------------------------------------------+
    | Field                          | Value                                      |
    +--------------------------------+--------------------------------------------+
    | uuid                           | cloud-1a388500-1d6c-45e0-a557-f7b79af6f362 |
    | name                           | Default-Cloud                              |
    | vtype                          | CLOUD_LINUXSERVER                          |
    | apic_mode                      | False                                      |
    | linuxserver_configuration      |                                            |
    | se_sys_disk_size_GB            | 10                                         |
    | se_log_disk_size_GB            | 5                                          |
    | se_inband_mgmt                 | False                                      |
    | ssh_user_ref                   | user1                                      |
    | dhcp_enabled                   | False                                      |
    | mtu                            | 1500 bytes                                 |
    | prefer_static_routes           | False                                      |
    | enable_vip_static_routes       | False                                      |
    | license_type                   | LIC_CORES                                  |
    | ipam_provider_ref              | gcp-ipam                                   |
    | custom_tags[1]                 |                                            |
    | tag_key                        | cloud_router_ids                           |
    | tag_val                        | router-1, router-2                         |
    | state_based_dns_registration   | True                                       |
    | ip6_autocfg_enabled            | False                                      |
    | tenant_ref                     | admin                                      |
    | license_tier                   | ENTERPRISE_18                              |
    | autoscale_polling_interval     | 60 seconds                                 |
    +--------------------------------+--------------------------------------------+

Multiple Cloud Routers can be added for an NSX Advanced Load Balancer cloud and all the routers will be updated with the FIP.

Add a custom tag to the cloud with tag_key set to cloud_router_ids and tag_val set to a comma-separated list of Cloud Router names.
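When the list of Cloud Routers is generated by a script, the tag value can be assembled and read back as in the sketch below. The key `cloud_router_ids` and the "name, name" format come from the CLI example above. The helper names are hypothetical, and trimming whitespace around each name is an assumption based on that example.

```python
ROUTER_TAG_KEY = "cloud_router_ids"  # tag key used in the CLI example


def build_router_tag(router_names):
    """Return the custom tag for a list of Cloud Router names."""
    names = [name.strip() for name in router_names if name.strip()]
    return {"tag_key": ROUTER_TAG_KEY, "tag_val": ", ".join(names)}


def parse_router_tag(tag_val):
    """Split a tag_val string back into a list of Cloud Router names."""
    return [name.strip() for name in tag_val.split(",") if name.strip()]
```

`build_router_tag(["router-1", "router-2"])` yields the `tag_val` string `router-1, router-2`, matching the value shown in the CLI output.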

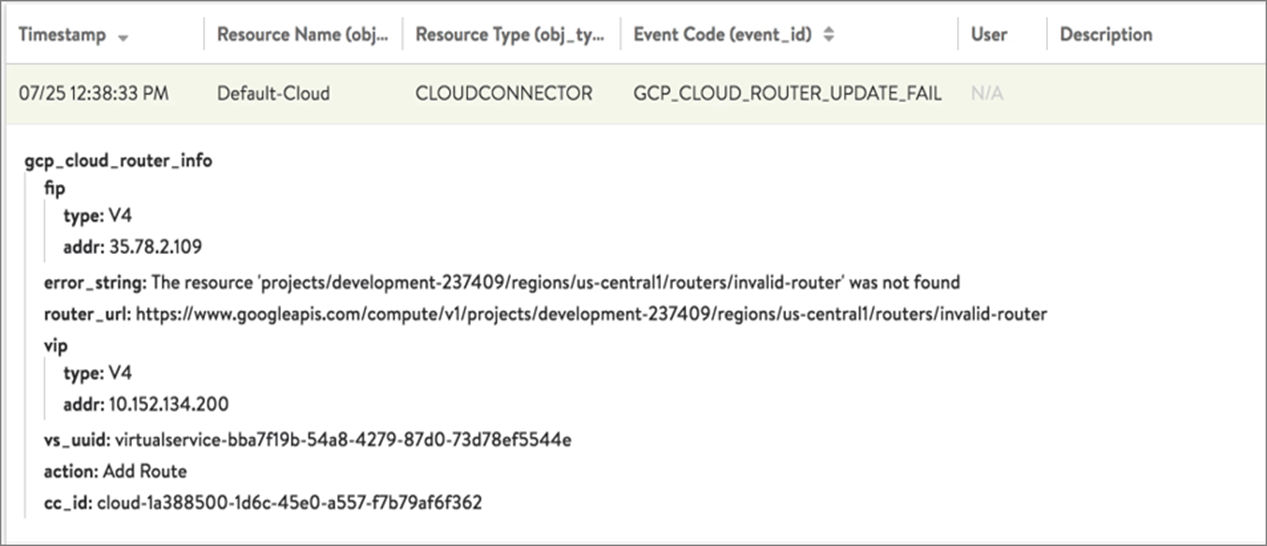

For each Cloud Router update, a corresponding event is generated as below:

- GCP_CLOUD_ROUTER_UPDATE_SUCCESS: An internal event generated each time the Cloud Router is successfully updated.

- GCP_CLOUD_ROUTER_UPDATE_FAIL: An external event generated when an update for the Cloud Router fails.

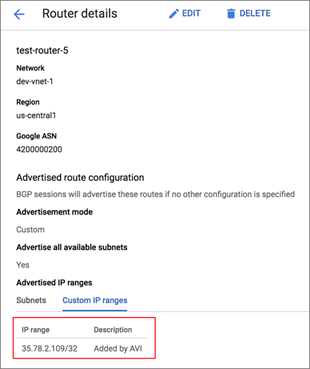

The Cloud Router can also have other IPs that were not created by NSX Advanced Load Balancer. These IPs remain as is. All the IPs that the Controller configures in the Cloud Router have the description Added by AVI.
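The description makes it possible to separate Controller-managed entries from entries added by other means. The sketch below assumes each custom range is available as a dictionary with `range` and `description` keys, which is how the Cloud Router resource lists advertised IP ranges. The function name is hypothetical, and matching the description text exactly is an assumption.

```python
AVI_DESCRIPTION = "Added by AVI"  # description text quoted above


def split_custom_ranges(ranges):
    """Split custom IP ranges into Controller-managed and other entries."""
    managed = [r for r in ranges if r.get("description") == AVI_DESCRIPTION]
    other = [r for r in ranges if r.get("description") != AVI_DESCRIPTION]
    return managed, other


# Sample data using documentation address ranges.
managed, other = split_custom_ranges([
    {"range": "203.0.113.10/32", "description": "Added by AVI"},
    {"range": "198.51.100.0/24", "description": "on-premises summary"},
])
```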

Troubleshooting

If a FIP is not getting advertised, view the Custom IP Ranges in Cloud Router Details.
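If the custom IP ranges have been exported as a list of strings, the same lookup can be scripted. The following is a minimal sketch that checks whether a FIP is covered by any of the ranges. The function name and the input format are assumptions and not part of the product.

```python
import ipaddress


def is_fip_advertised(fip, custom_ranges):
    """Return True if the FIP is covered by any custom IP range."""
    ip = ipaddress.ip_address(fip)
    return any(
        # strict=False tolerates ranges written with host bits set.
        ip in ipaddress.ip_network(candidate, strict=False)
        for candidate in custom_ranges
    )
```

For example, `is_fip_advertised("203.0.113.10", ["198.51.100.0/24", "203.0.113.10/32"])` is true, while `203.0.113.11` is not covered by the same list.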

To view the Cloud Router Details,

Navigate to .

Click the Cloud Router required.

Click Custom IP ranges tab to view the IP range and Description as shown below:

If the FIP is not listed under Custom IP ranges, view the Events page for the Controller in NSX Advanced Load Balancer.

Navigate to .

Click the GCP_CLOUD_ROUTER_UPDATE_FAIL event to view the error message as shown below: