This section describes the installation design of NSX Advanced Load Balancer on NSX-T managed vSphere environments (vCenter + ESXi). NSX Advanced Load Balancer supports over-the-top, manual deployment in the NSX-T environment.

Deployment Mode

The following are the recommended deployment modes for NSX Advanced Load Balancer on top of NSX-T managed infrastructure:

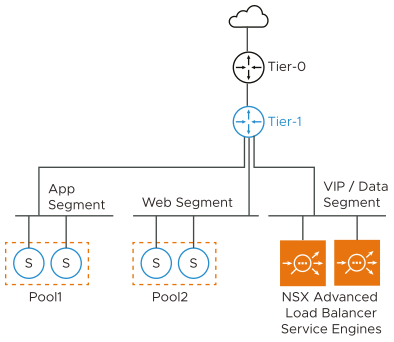

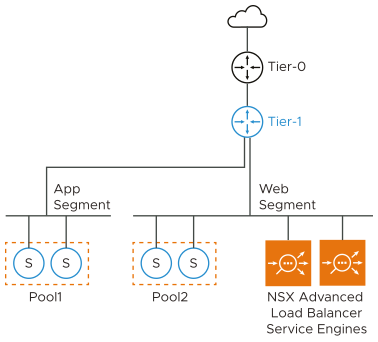

One Arm Mode with overlay VIP Segment - Single Tier 1

The diagrammatic representation of a typical NSX Advanced Load Balancer deployment on a simple NSX-T environment with all server segments connected to a single Tier 1 router is as follows:

You need to create the VIP/data segment manually. The network adapter 1 of the Service Engine VM is reserved for management connectivity. You can connect only one of the remaining nine data interfaces (network adapter 2-10) of the Service Engine VM to the VIP/data segment. The rest of the interfaces must be left disconnected. The Service Engines are deployed in one-arm mode; i.e. the same interface is used for client and backend server traffic. The SE routes to backend servers through the Tier 1 router.

You can allocate the VIPs from the same subnet as the VIP/data interface of the Service Engine. Reserve a range of static IP addresses in the subnet assigned to the VIP/data segment for use as VIPs. A dedicated VIP segment lets you manage the VIP IP ranges independently of the other subnets.
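As a rough illustration of reserving a static VIP range inside the VIP/data subnet, the following sketch uses Python's standard `ipaddress` module. The subnet `10.10.20.0/24`, the offsets, and the helper name `reserve_vip_range` are assumptions made up for this example; they are not values or functions defined by NSX Advanced Load Balancer.

```python
import ipaddress

def reserve_vip_range(subnet_cidr, first_offset, count):
    """Return `count` consecutive addresses of the subnet, starting at `first_offset`."""
    subnet = ipaddress.ip_network(subnet_cidr)
    last_offset = first_offset + count - 1
    # Offsets 0 and num_addresses - 1 are the network and broadcast addresses.
    if first_offset < 1 or last_offset > subnet.num_addresses - 2:
        raise ValueError("VIP range does not fit inside the usable host range")
    return [subnet[first_offset + i] for i in range(count)]

# Hypothetical VIP/data segment subnet and SE data interface address.
vips = reserve_vip_range("10.10.20.0/24", first_offset=100, count=20)
se_data_ip = ipaddress.ip_address("10.10.20.11")

# The SE data interface address must stay outside the reserved VIP range.
assert se_data_ip not in vips
print(f"Reserved VIP range: {vips[0]} - {vips[-1]}")
```

Keeping the reserved block away from the addresses used by the SE data interfaces (and from any DHCP range on the segment) avoids address conflicts when new virtual services are created.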

Optionally, you can place the data interface of the Service Engine on one of the server segments if you do not need a separate VIP segment. The subnet assigned to the server segment must have enough free static IP addresses to be used as VIPs.

In both cases, the SE-to-pool-server traffic is handled by the Tier 1 DR (distributed router), which is present on all ESXi transport nodes. Hence, there is no significant performance difference between using a dedicated segment and a shared segment for VIP/data.

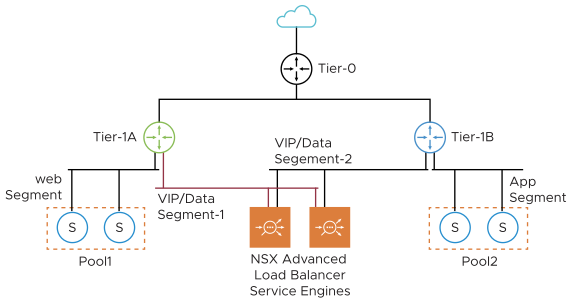

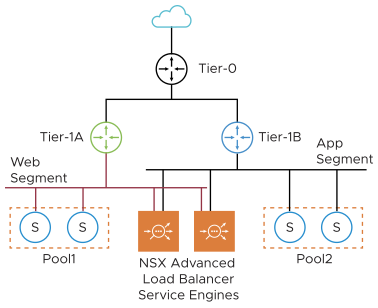

One Arm Mode with Overlay VIP Segment, Multiple Tier 1

In NSX-T environments, where web servers of different applications are connected to their individual Tier 1 routers, you need to create a VIP/data segment on each Tier 1.

The network adapter 1 of the Service Engine VM is reserved for management connectivity. One data interface (network adapter 2) is connected to VIP/data segment-1, and one data interface (network adapter 3) is connected to VIP/Data segment-2. The rest of the interfaces are kept disconnected.

You can allocate the VIPs from the same subnet as the VIP/data interface of the SE. Reserve a range of static IP addresses in the subnet assigned to each VIP/data segment for use as VIPs. A dedicated VIP segment lets you manage the VIP IP ranges independently of the other server subnets.

You need to configure a separate VRF on NSX Advanced Load Balancer for each Tier 1 and add each data interface to the VRF that corresponds to the Tier 1 whose segment the interface is connected to. For instance, in the above diagram, you should configure VRF-A and VRF-B for Tier 1 A and Tier 1 B. Also, you should add the SE interface connected to VIP/data segment-1 to VRF-A and the interface connected to VIP/data segment-2 to VRF-B. While creating the virtual service for a pool, you should choose the corresponding VRF.

For instance, select VRF-A while creating a virtual service for Pool1 and VRF-B while creating a virtual service for Pool2. This way, the VIP of the virtual service managing Pool1 is on VIP/data segment-1, and the VIP of the virtual service managing Pool2 is on VIP/data segment-2. Separate VRFs are required for two reasons: the SE-to-pool-server traffic can then be routed through the Tier 1 DR without hairpinning through Tier 0, and logical segments on different Tier 1 routers can be configured with the same subnet. Hence, the traffic of each Tier 1 must be contained in its own VRF.
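The relationship between Tier 1 routers, VRFs, SE data interfaces, and pools can be sketched as plain data. This is only a model of the mapping described above, not NSX Advanced Load Balancer configuration syntax; the identifiers (`Tier1-A`, `vip-data-segment-1`, `placement_for_pool`, and so on) are hypothetical names chosen to mirror the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class DataInterface:
    adapter: int   # SE VM network adapter number
    segment: str   # VIP/data segment the adapter is connected to
    tier1: str     # Tier 1 router that the segment is attached to

# Hypothetical names mirroring the example in the text.
TIER1_TO_VRF = {"Tier1-A": "VRF-A", "Tier1-B": "VRF-B"}
INTERFACES = [
    DataInterface(adapter=2, segment="vip-data-segment-1", tier1="Tier1-A"),
    DataInterface(adapter=3, segment="vip-data-segment-2", tier1="Tier1-B"),
]
POOL_TO_TIER1 = {"Pool1": "Tier1-A", "Pool2": "Tier1-B"}

def vrf_for_interface(interface):
    """Each data interface belongs to the VRF of the Tier 1 behind its segment."""
    return TIER1_TO_VRF[interface.tier1]

def placement_for_pool(pool):
    """Return the VRF to select for the pool's virtual service and its VIP segments."""
    vrf = TIER1_TO_VRF[POOL_TO_TIER1[pool]]
    segments = [i.segment for i in INTERFACES if vrf_for_interface(i) == vrf]
    return vrf, segments

for pool in ("Pool1", "Pool2"):
    print(pool, placement_for_pool(pool))
```

Because every lookup goes through the Tier 1, two Tier 1 routers that reuse the same subnet never share a VRF in this model, which is the containment property the text asks for.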

Optionally, if a separate VIP segment is not required, you can place the data interfaces of the Service Engine on one of the server segments. The subnet assigned to the server segment must have enough free static IP addresses to be used as VIPs.

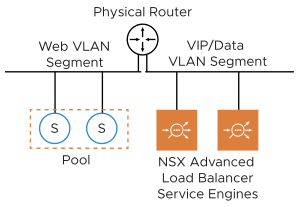

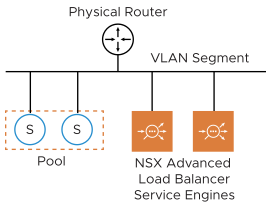

One Arm Mode with VLAN VIP Segment

NSX Advanced Load Balancer SEs can also be deployed in cases where the NSX-T logical segment is of VLAN type.

You need to create the VIP/data segment manually. The network adapter 1 of the Service Engine VM is reserved for management connectivity. You can connect only one of the remaining nine data interfaces (network adapter 2-10) of the SE VM to the VIP/data segment. The rest of the interfaces must be left disconnected. The SEs are deployed in one-arm mode; i.e. the same interface is used for client and backend server traffic. The SE routes to backend servers through the external physical router.

In this mode, you can either allocate the VIPs from the same subnet as the VIP/data interface of the SE or allocate the VIPs from a completely different subnet (one that is not used anywhere else in the network). In the first case, you need to reserve a range of static IP addresses in the subnet assigned to each VIP/data segment to be used as VIPs.

In the second case, you can configure the SEs to peer with the physical router by configuring BGP on NSX Advanced Load Balancer (provided the router supports BGP). The SEs advertise the VIP routes with the SE data interface IP as the next hop. If the VIP is scaled out, the router performs ECMP across the SEs.

When you allocate the VIPs from the same subnet as the VIP/data interface of the SE, reserve a range of static IP addresses in the subnet assigned to the VIP/data segment for use as VIPs. A dedicated VIP segment lets you manage the VIP IP ranges independently of the other subnets.
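The BGP case above can be pictured with a small model: every SE that hosts a scaled-out VIP advertises a host route for it with its own data interface IP as the next hop, and the router spreads flows across those next hops. The sketch below is a simplified illustration only. The addresses are hypothetical, and the SHA-256 flow hash is a stand-in chosen for this example; real routers use their own, vendor-specific ECMP hashing.

```python
import hashlib
import ipaddress

def advertise(vip, se_data_ips):
    """Build a toy routing table: one /32 per VIP, one next hop per advertising SE."""
    prefix = ipaddress.ip_network(f"{vip}/32")
    return {prefix: sorted(se_data_ips, key=ipaddress.ip_address)}

def ecmp_next_hop(rib, dst_ip, flow):
    """Pick a next hop for a flow by hashing it (stand-in for a router's ECMP hash)."""
    dst = ipaddress.ip_address(dst_ip)
    for prefix, next_hops in rib.items():
        if dst in prefix:
            digest = hashlib.sha256(repr(flow).encode()).digest()
            index = int.from_bytes(digest[:4], "big") % len(next_hops)
            return next_hops[index]
    return None  # no route for this destination

# Hypothetical VIP from a subnet not used elsewhere, scaled out across two SEs.
rib = advertise("192.168.100.10", ["10.10.20.12", "10.10.20.11"])
flow = ("203.0.113.7", 51514, "192.168.100.10", 443, "tcp")
print(ecmp_next_hop(rib, "192.168.100.10", flow))
```

Hashing on the flow tuple keeps all packets of one connection on the same SE, which is why a deterministic hash is used here instead of a random choice.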

Optionally, you can place the data interface of the SE on one of the server segments if you do not need a separate VIP segment. The subnet assigned to the server segment must have enough free static IP addresses to be used as VIPs.

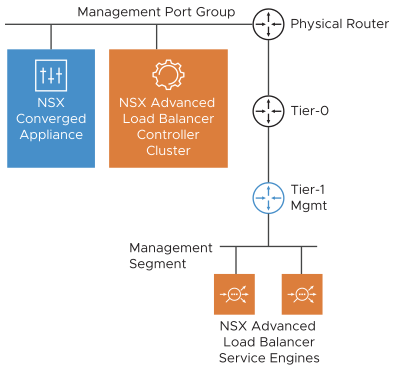

NSX Advanced Load Balancer Controller Deployment and Management Connectivity

You should deploy the NSX Advanced Load Balancer Controller cluster VMs adjacent to NSX-T, connected to the management port group. You should also create a separate Tier 1 with a connected management segment for NSX Advanced Load Balancer SE management connectivity.

The network interface 1 of the SE VM is connected to the management segment. You should configure the management Tier 1 to redistribute the connected subnet routes to the Tier 0. Tier 0 must advertise the VIP to the external peer using BGP.

NSX Security Configuration

Exclusion List

When using L2 scale-out mode, the primary NSX Advanced Load Balancer SE redirects traffic to the secondary SEs. This leads to asymmetric traffic, which the Distributed Firewall can block because it is stateful. Hence, to ensure that traffic is not dropped when a virtual service scales out, you should add the SE interfaces connected to the VIP/data segment to the exclusion list.

This can be done by creating a group (NSG) on NSX-T and adding the VIP/data segment as a member. You can then add this NSG to the exclusion list. This way, if a new SE is deployed, its VIP/data interface is dynamically added to the exclusion list. If the SEs are connected to a server segment, adding the segment to the exclusion list is not an option because that would put all the servers in the list too. In that case, you need to add the individual SE VMs as members of the NSG.
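The membership decision described above can be summarized in a few lines. This sketch only models the choice between segment-based and VM-based membership; it does not call or imitate the NSX-T API, and the function name, tuple layout, and object names are hypothetical.

```python
def exclusion_group_members(se_vms, data_segment, segment_shared_with_servers):
    """Return the members to place in the group that goes on the exclusion list."""
    if segment_shared_with_servers:
        # Excluding the whole segment would also exclude the servers on it,
        # so list each SE VM individually instead.
        return [("vm", vm) for vm in se_vms]
    # Dedicated VIP/data segment: new SEs on the segment are covered automatically.
    return [("segment", data_segment)]

# Hypothetical object names.
print(exclusion_group_members(["se-1", "se-2"], "vip-data-segment", False))
print(exclusion_group_members(["se-1", "se-2"], "web-server-segment", True))
```

The trade-off is visible in the return values: segment membership needs no maintenance as SEs are added, while VM membership must be updated for every new SE.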

Distributed Firewall

The NSX Advanced Load Balancer Controller and the SEs require certain protocols and ports to be allowed for management traffic, as listed in the Ports and Protocols section. If the distributed firewall (DFW) is enabled with a default rule of block/reject all, create the following allow rules on the DFW:

Controller UI Access

Source — Any (can be changed to restrict the UI access)

Destination — NSX Advanced Load Balancer Controller management IPs and Cluster IP

Service — TCP(80,443)

Action — Allow

This rule is required only if NSX Advanced Load Balancer Controller is connected to NSX-T managed segment.

Controller Cluster Communication

Source — NSX Advanced Load Balancer Controller management IPs

Destination — NSX Advanced Load Balancer Controller management IPs

Service — TCP(22, 8443)

Action — Allow

This rule is required only if NSX Advanced Load Balancer Controller is connected to NSX-T managed segment.

SE to Controller Secure Channel

Source — NSX Advanced Load Balancer SE management IPs

Destination — NSX Advanced Load Balancer Controller management IPs

Service — TCP(22, 8443), UDP(123)

Action — Allow

SE initiates TCP connection for the secure channel to the Controller IP.

SE to Backend

Source — NSX Advanced Load Balancer SE data IPs

Destination — Backend server IPs

Service — Any (can be restricted to service port, for instance, TCP 80)

Action — Allow

Client-to-VIP traffic does not require a DFW rule because the VIP interface is in the exclusion list. Front-end security can be enforced for each VIP using network security policies on the virtual service.
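To sanity-check the allow rules listed above before entering them in the DFW, they can be written down as data and evaluated against sample flows. The sketch below is a simplified first-match model with a default block, not an NSX-T API client. All IP addresses are hypothetical, and the `Rule` class and `first_allow_rule` helper are names invented for this example.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    sources: tuple        # CIDR strings, or "any"
    destinations: tuple   # CIDR strings, or "any"
    services: tuple       # (protocol, port) pairs; empty tuple means any service

# Hypothetical addressing, for illustration only.
CONTROLLERS = ("10.0.0.11/32", "10.0.0.12/32", "10.0.0.13/32")
CLUSTER_IP = ("10.0.0.10/32",)
SE_MGMT = ("10.0.1.0/24",)
SE_DATA = ("10.10.20.11/32", "10.10.20.12/32")
BACKENDS = ("10.10.30.0/24",)

RULES = [
    Rule("Controller UI Access", ("any",), CONTROLLERS + CLUSTER_IP,
         (("tcp", 80), ("tcp", 443))),
    Rule("Controller Cluster Communication", CONTROLLERS, CONTROLLERS,
         (("tcp", 22), ("tcp", 8443))),
    Rule("SE to Controller Secure Channel", SE_MGMT, CONTROLLERS,
         (("tcp", 22), ("tcp", 8443), ("udp", 123))),
    Rule("SE to Backend", SE_DATA, BACKENDS, ()),
]

def _matches(ip, cidrs):
    """True if the address falls in any listed CIDR, or the list contains "any"."""
    addr = ipaddress.ip_address(ip)
    return any(c == "any" or addr in ipaddress.ip_network(c) for c in cidrs)

def first_allow_rule(src, dst, proto, port):
    """Return the name of the first matching allow rule, or None (default block)."""
    for rule in RULES:
        if (_matches(src, rule.sources) and _matches(dst, rule.destinations)
                and (not rule.services or (proto, port) in rule.services)):
            return rule.name
    return None

print(first_allow_rule("10.0.1.21", "10.0.0.11", "tcp", 8443))
print(first_allow_rule("10.10.30.5", "10.0.0.11", "tcp", 22))
```

The first call models an SE opening the secure channel to a Controller; the second models a backend server attempting SSH to a Controller, which no rule permits.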

North-South VIP

If a VIP requires north-south access so that external clients can reach the application, additional configuration is required on NSX-T Manager.

You should configure Tier 1 to redistribute the VIP IP (/32) to Tier 0.

Tier 0 must advertise the VIP to the external peer using BGP.

If all VIPs require north-south access, or for simplicity of configuration, you can configure Tier 1 to redistribute the entire VIP range to Tier 0. You can then configure Tier 0 to advertise all learned routes in that range to the external peer. This way, whenever a new VIP is created, it is automatically advertised to the external peer.
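The difference between redistributing individual /32 routes and redistributing the whole VIP range can be illustrated with Python's standard `ipaddress` module. The addresses below are hypothetical, and the helper names are invented for this sketch; the actual redistribution and advertisement are configured on the Tier 1 and Tier 0 gateways, not in code.

```python
import ipaddress

def host_routes(vips):
    """Per-VIP option: one /32 route for each VIP that needs north-south access."""
    return [ipaddress.ip_network(f"{vip}/32") for vip in vips]

def range_prefixes(first, last):
    """Whole-range option: the smallest set of prefixes covering the VIP range."""
    return list(ipaddress.summarize_address_range(
        ipaddress.ip_address(first), ipaddress.ip_address(last)))

def is_advertised(vip, prefixes):
    """True if the VIP is covered by one of the advertised prefixes."""
    addr = ipaddress.ip_address(vip)
    return any(addr in prefix for prefix in prefixes)

# Hypothetical reserved VIP range on the VIP/data segment.
prefixes = range_prefixes("10.10.20.128", "10.10.20.191")
print(prefixes)

# A VIP created later inside the range is covered without further changes.
print(is_advertised("10.10.20.150", prefixes))
print(is_advertised("10.10.20.150", host_routes(["10.10.20.130"])))
```

With the per-VIP option, each new north-south VIP needs its own redistribution entry; with the range option, any VIP allocated from the reserved range is already covered.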