The VXLAN network is used for Layer 2 logical switching across hosts, potentially spanning multiple underlying Layer 3 domains. You configure VXLAN on a per-cluster basis, where you map each cluster that is to participate in NSX to a vSphere distributed switch (VDS). When you map a cluster to a distributed switch, each host in that cluster is enabled for logical switches. The settings chosen here will be used in creating the VMkernel interface.

If you need logical routing and switching, all clusters that have NSX Data Center for vSphere VIBs installed on the hosts should also have VXLAN transport parameters configured. If you plan to deploy distributed firewall only, you do not need to configure VXLAN transport parameters.

When you configure VXLAN networking, you must provide a vSphere Distributed Switch, a VLAN ID, an MTU size, an IP addressing mechanism (DHCP or IP pool), and a NIC teaming policy.

The MTU for each switch must be set to 1550 or higher. By default, the VXLAN MTU is set to 1600. If the vSphere Distributed Switch MTU is larger than the VXLAN MTU, the vSphere Distributed Switch MTU is not adjusted down. If it is smaller, it is raised to match the VXLAN MTU. For example, if the vSphere Distributed Switch MTU is set to 2000 and you accept the default VXLAN MTU of 1600, no changes are made to the vSphere Distributed Switch MTU. If the vSphere Distributed Switch MTU is 1500 and the VXLAN MTU is 1600, the vSphere Distributed Switch MTU is changed to 1600.
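The MTU adjustment rule can be summarized in a few lines of Python. This is only an illustrative sketch of the behavior described above, not NSX code; the function name `resulting_vds_mtu` and the constant names are assumptions made for the example.

```python
MIN_VXLAN_MTU = 1550      # minimum stated in this topic
DEFAULT_VXLAN_MTU = 1600  # default stated in this topic


def resulting_vds_mtu(vds_mtu: int, vxlan_mtu: int = DEFAULT_VXLAN_MTU) -> int:
    """Return the VDS MTU after VXLAN is configured (hypothetical helper)."""
    if vxlan_mtu < MIN_VXLAN_MTU:
        raise ValueError(
            f"VXLAN MTU must be at least {MIN_VXLAN_MTU}, got {vxlan_mtu}"
        )
    # A larger VDS MTU is left unchanged; a smaller one is raised to the VXLAN MTU.
    return max(vds_mtu, vxlan_mtu)


print(resulting_vds_mtu(2000))  # VDS MTU stays at 2000
print(resulting_vds_mtu(1500))  # VDS MTU is raised to 1600
```

The two calls mirror the two examples in the paragraph above: a VDS MTU of 2000 is kept, and a VDS MTU of 1500 is raised to 1600.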

VTEPs have an associated VLAN ID. You can, however, specify VLAN ID = 0 for VTEPs, meaning frames will be untagged.

You might want to use different IP address settings for your management clusters and your compute clusters. This would depend on how the physical network is designed, and likely won't be the case in small deployments.

Prerequisites

- All hosts within the cluster must be attached to a common vSphere Distributed Switch.

- NSX Manager must be installed.

- The NSX Controller cluster must be installed, unless you are using multicast replication mode for the control plane.

- Plan your NIC teaming policy. Your NIC teaming policy determines the load balancing and failover settings of the vSphere Distributed Switch.

Do not mix teaming policies across portgroups on a vSphere Distributed Switch such that some portgroups use EtherChannel, LACPv1, or LACPv2 and others use a different teaming policy. If uplinks are shared between these different teaming policies, traffic will be interrupted. If logical routers are present, there will be routing problems. Such a configuration is not supported and should be avoided.

The best practice for IP hash-based teaming (EtherChannel, LACPv1, or LACPv2) is to use all uplinks on the vSphere Distributed Switch in the team, and to avoid having portgroups on that vSphere Distributed Switch with different teaming policies. For more information and further guidance, see the NSX Network Virtualization Design Guide at https://communities.vmware.com/docs/DOC-27683.

- Plan the IP addressing scheme for the VXLAN tunnel endpoints (VTEPs). VTEPs are the source and destination IP addresses used in the outer IP header to uniquely identify the ESX hosts originating and terminating the VXLAN encapsulation of frames. You can use either DHCP or manually configured IP pools for VTEP IP addresses.

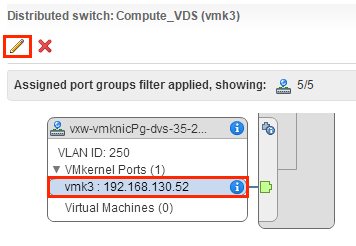

If you want a specific IP address to be assigned to a VTEP, you can either 1) use a DHCP fixed address or reservation that maps a MAC address to a specific IP address in the DHCP server or 2) use an IP pool and then manually edit the VTEP IP address assigned to the vmknic in .

Note: If you are manually editing the IP address, make sure that the IP address is NOT similar to the original IP pool range. For example:

- For clusters that are members of the same VDS, the VLAN ID for the VTEPs and the NIC teaming must be the same.

- As a best practice, export the vSphere Distributed Switch configuration before preparing the cluster for VXLAN. See http://kb.vmware.com/kb/2034602.
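The prerequisite that clusters sharing a VDS must use the same VTEP VLAN ID and NIC teaming can be checked against a planning worksheet before you start. The following is a minimal sketch under stated assumptions: `ClusterVxlanPlan` and `find_vds_conflicts` are hypothetical names, the records are plain planning data rather than NSX objects, and the teaming strings are arbitrary placeholders, not NSX policy identifiers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterVxlanPlan:
    """Hypothetical planning record for one cluster (not an NSX object)."""
    cluster: str
    vds: str
    vlan_id: int   # 0 means VTEP frames are untagged
    teaming: str   # placeholder label for the planned teaming policy


def find_vds_conflicts(plans):
    """Return {vds: sorted (vlan_id, teaming) pairs} for each VDS whose clusters disagree."""
    settings_by_vds = defaultdict(set)
    for plan in plans:
        settings_by_vds[plan.vds].add((plan.vlan_id, plan.teaming))
    # A VDS is consistent only if all of its clusters share one (VLAN, teaming) pair.
    return {
        vds: sorted(settings)
        for vds, settings in settings_by_vds.items()
        if len(settings) > 1
    }


plans = [
    ClusterVxlanPlan("compute-a", "vds-compute", 0, "failover"),
    ClusterVxlanPlan("compute-b", "vds-compute", 150, "failover"),
    ClusterVxlanPlan("mgmt", "vds-mgmt", 150, "failover"),
]
print(find_vds_conflicts(plans))
```

In this sample data, the two compute clusters share `vds-compute` but plan different VLAN IDs, so that switch is reported. An empty result means every VDS in the worksheet has a single VLAN ID and teaming setting across its clusters.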

Procedure

Results

For example:

For more information on troubleshooting VXLAN, refer to the NSX Troubleshooting Guide.