Distributed logical router (DLR) kernel modules in the host perform routing between VXLAN networks, and between virtual and physical networks. An NSX Edge Appliance provides dynamic routing ability if needed. A universal distributed logical router provides east-west routing between universal logical switches.

- NSX Data Center for vSphere 6.2 and later allows logical router-routed logical interfaces (LIFs) to be connected to a VXLAN that is bridged to a VLAN.

- Logical router interfaces and bridging interfaces cannot be connected to a dvPortgroup with the VLAN ID set to 0.

- A given logical router instance cannot be connected to logical switches that exist in different transport zones. This is to ensure that all logical switches and logical router instances are aligned.

- A logical router cannot be connected to VLAN-backed port groups if that logical router is connected to logical switches spanning more than one vSphere distributed switch (VDS). This is to ensure correct alignment of logical router instances with logical switch dvPortgroups across hosts.

- Logical router interfaces must not be created on two different distributed port groups (dvPortgroups) with the same VLAN ID if the two networks are in the same vSphere distributed switch.

- Logical router interfaces should not be created on two different dvPortgroups with the same VLAN ID if two networks are in different vSphere distributed switches, but the two vSphere distributed switches share identical hosts. In other words, logical router interfaces can be created on two different networks with the same VLAN ID if the two dvPortgroups are in two different vSphere distributed switches, as long as the vSphere distributed switches do not share a host.

- If VXLAN is configured, logical router interfaces must be connected to distributed port groups on the vSphere Distributed Switch where VXLAN is configured. Do not connect logical router interfaces to port groups on other vSphere Distributed Switches.

- Dynamic routing protocols (BGP and OSPF) are supported only on uplink interfaces.

- Firewall rules are applicable only on uplink interfaces and are limited to control and management traffic that is destined to the Edge virtual appliance.

- For more information about the DLR Management Interface, see the Knowledge Base Article "Management Interface Guide: DLR Control VM - NSX" http://kb.vmware.com/kb/2122060.
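The two VLAN ID rules in the list above can be checked mechanically before logical router interfaces are created. The following Python sketch is illustrative only: the `PortGroup` record, the `vds_hosts` mapping, the function name, and the sample inventory are hypothetical and do not correspond to any NSX API. It assumes that two vSphere distributed switches "share hosts" when they have at least one host in common.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class PortGroup:
    """Hypothetical record for a dvPortgroup that would back a LIF."""
    name: str
    vds: str
    vlan_id: int


def vlan_conflicts(port_groups, vds_hosts):
    """Return (name_a, name_b, reason) for each pair that breaks the VLAN ID rules.

    vds_hosts maps a VDS name to the set of hosts attached to it.
    """
    conflicts = []
    for a, b in combinations(port_groups, 2):
        if a.vlan_id != b.vlan_id:
            continue
        if a.vds == b.vds:
            conflicts.append((a.name, b.name, "same VLAN ID on the same VDS"))
        elif vds_hosts.get(a.vds, set()) & vds_hosts.get(b.vds, set()):
            # Assumption: any common host counts as "sharing hosts".
            conflicts.append((a.name, b.name, "same VLAN ID on VDSes that share a host"))
    return conflicts


# Made-up inventory for illustration.
groups = [
    PortGroup("pg-web", "VDS-A", 100),
    PortGroup("pg-app", "VDS-A", 100),
    PortGroup("pg-db", "VDS-B", 100),
    PortGroup("pg-mgmt", "VDS-C", 100),
]
hosts = {"VDS-A": {"host-24", "host-25"}, "VDS-B": {"host-25"}, "VDS-C": {"host-30"}}

for name_a, name_b, reason in vlan_conflicts(groups, hosts):
    print(f"{name_a} / {name_b}: {reason}")
```

In this made-up inventory, `pg-mgmt` is acceptable because VDS-C shares no host with the other switches, while the remaining three port groups conflict with each other.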

If you enable high availability on an NSX Edge in a cross-vCenter NSX environment, both the active and standby NSX Edge Appliances must reside in the same vCenter Server. If you migrate one of the appliances of an NSX Edge HA pair to a different vCenter Server, the two HA appliances no longer operate as an HA pair, and you might experience traffic disruption.

Prerequisites

- You must be assigned the Enterprise Administrator or NSX Administrator role.

- You must create a local segment ID pool, even if you have no plans to create logical switches.

- Make sure that the controller cluster is up and available before creating or changing a logical router configuration. A logical router cannot distribute routing information to hosts without the help of NSX controllers. A logical router relies on NSX controllers to function, while Edge Services Gateways (ESGs) do not.

- If a logical router is to be connected to VLAN dvPortgroups, ensure that all hypervisor hosts with a logical router appliance installed can reach each other on UDP port 6999. Communication on this port is required for logical router VLAN-based ARP proxy to work.

- Determine where to deploy the logical router appliance.

  - The destination host must be part of the same transport zone as the logical switches connected to the new logical router's interfaces.

  - Avoid placing the appliance on the same host as one or more of its upstream ESGs if you use ESGs in an ECMP setup. You can use DRS anti-affinity rules to enforce this practice, reducing the impact of host failure on logical router forwarding. This guideline does not apply if you have one upstream ESG by itself or in HA mode. For more information, see the NSX Network Virtualization Design Guide at https://communities.vmware.com/docs/DOC-27683.

- Verify that the host cluster on which you install the logical router appliance is prepared for NSX Data Center for vSphere. See "Prepare Host Clusters for NSX" in the NSX Installation Guide.

- Determine if you need to enable local egress. Local egress allows you to selectively send routes to hosts. You may want this ability if your NSX deployment spans multiple sites. See Cross-vCenter NSX Topologies for more information. You cannot enable local egress after the universal logical router has been created.

Procedure

Results

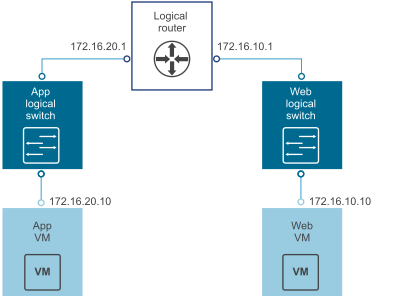

In the following example topology, the default gateway of app VM is 172.16.20.1. The default gateway of web VM is 172.16.10.1. Make sure the VMs can ping their default gateways and each other.
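Before testing with ping, it can help to confirm that each VM address sits in the same subnet as its default gateway. The following is a minimal Python sketch using the standard `ipaddress` module. The /24 prefix lengths and the VM addresses are taken from the interface-summary and ARP table output shown later in this section; the dictionary layout and function name are illustrative assumptions.

```python
import ipaddress

# Gateway LIF addresses, as listed by the interface-summary output in this section.
lifs = {
    "web": ipaddress.ip_interface("172.16.10.1/24"),
    "app": ipaddress.ip_interface("172.16.20.1/24"),
}
# VM addresses, as they appear in the controller ARP tables in this section.
vms = {
    "web": ipaddress.ip_address("172.16.10.10"),
    "app": ipaddress.ip_address("172.16.20.10"),
}


def gateway_on_link(tier):
    """Return True if the VM address is inside the subnet of its gateway LIF."""
    return vms[tier] in lifs[tier].network


for tier in vms:
    print(tier, vms[tier], "gateway", lifs[tier].ip, "on-link:", gateway_on_link(tier))
```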

- List all logical router instance information.

      nsxmgr-l-01a> show logical-router list all
      Edge-id             Vdr Name                      Vdr id              #Lifs
      edge-1              default+edge-1                0x00001388          3

- List the hosts that have received routing information for the logical router from the controller cluster.

      nsxmgr-l-01a> show logical-router list dlr edge-1 host
      ID                   HostName
      host-25              192.168.210.52
      host-26              192.168.210.53
      host-24              192.168.110.53

  The output includes all hosts from all host clusters that are configured as members of the transport zone that owns the logical switch that is connected to the specified logical router (edge-1 in this example).

- List the routing table information that is communicated to the hosts by the logical router. Routing table entries should be consistent across all the hosts.

      nsx-mgr-l-01a> show logical-router host host-25 dlr edge-1 route

      VDR default+edge-1 Route Table
      Legend: [U: Up], [G: Gateway], [C: Connected], [I: Interface]
      Legend: [H: Host], [F: Soft Flush] [!: Reject] [E: ECMP]

      Destination      GenMask          Gateway          Flags    Ref Origin   UpTime     Interface
      -----------      -------          -------          -----    --- ------   ------     ---------
      0.0.0.0          0.0.0.0          192.168.10.1     UG       1   AUTO     4101       138800000002
      172.16.10.0      255.255.255.0    0.0.0.0          UCI      1   MANUAL   10195      13880000000b
      172.16.20.0      255.255.255.0    0.0.0.0          UCI      1   MANUAL   10196      13880000000a
      192.168.10.0     255.255.255.248  0.0.0.0          UCI      1   MANUAL   10196      138800000002
      192.168.100.0    255.255.255.0    192.168.10.1     UG       1   AUTO     3802       138800000002
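The consistency check on routing table entries can be scripted once the route output has been collected from each host. The following Python sketch is one possible approach, not an NSX tool: the function names are hypothetical, and the outputs for host-24 and host-26 are derived from the host-25 sample purely for illustration. It assumes that the UpTime and Ref columns may legitimately differ between hosts, so it ignores them.

```python
HOST_25 = """\
Destination      GenMask          Gateway          Flags    Ref Origin   UpTime     Interface
-----------      -------          -------          -----    --- ------   ------     ---------
0.0.0.0          0.0.0.0          192.168.10.1     UG       1   AUTO     4101       138800000002
172.16.10.0      255.255.255.0    0.0.0.0          UCI      1   MANUAL   10195      13880000000b
172.16.20.0      255.255.255.0    0.0.0.0          UCI      1   MANUAL   10196      13880000000a
192.168.10.0     255.255.255.248  0.0.0.0          UCI      1   MANUAL   10196      138800000002
192.168.100.0    255.255.255.0    192.168.10.1     UG       1   AUTO     3802       138800000002
"""
# Illustrative variants: host-24 differs only in UpTime, host-26 lacks the last route.
HOST_24 = HOST_25.replace("4101", "9999")
HOST_26 = "\n".join(HOST_25.splitlines()[:-1])


def parse_routes(output):
    """Parse the route table rows into a set of comparable tuples."""
    routes = set()
    in_table = False
    for line in output.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0].startswith("---"):
            in_table = True  # rows start after the dashed separator
            continue
        if in_table and len(fields) == 8:
            dest, mask, gateway, flags, _ref, origin, _uptime, interface = fields
            routes.add((dest, mask, gateway, flags, origin, interface))
    return routes


def inconsistent_hosts(outputs_by_host):
    """Return the hosts whose route set differs from that of the first host."""
    parsed = {host: parse_routes(text) for host, text in outputs_by_host.items()}
    if not parsed:
        return []
    reference = next(iter(parsed.values()))
    return sorted(host for host, routes in parsed.items() if routes != reference)


print(inconsistent_hosts({"host-25": HOST_25, "host-24": HOST_24, "host-26": HOST_26}))
```

Any host named in the result holds a route set that differs from the reference host and is worth inspecting with the verbose command.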

- List additional information about the router from the point of view of one of the hosts. This output is helpful to learn which controller is communicating with the host.

      nsx-mgr-l-01a> show logical-router host host-25 dlr edge-1 verbose

      VDR Instance Information :
      ---------------------------

      Vdr Name:                   default+edge-1
      Vdr Id:                     0x00001388
      Number of Lifs:             3
      Number of Routes:           5
      State:                      Enabled
      Controller IP:              192.168.110.203
      Control Plane IP:           192.168.210.52
      Control Plane Active:       Yes
      Num unique nexthops:        1
      Generation Number:          0
      Edge Active:                No

Check the Controller IP field in the output of the show logical-router host host-25 dlr edge-1 verbose command.
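When this check is repeated across many hosts, the Controller IP field can be extracted from the verbose output with a few lines of Python. This is a minimal sketch that assumes the output keeps the `Key: value` layout shown above; the function name is hypothetical.

```python
SAMPLE = """\
VDR Instance Information :
---------------------------

Vdr Name:                   default+edge-1
Vdr Id:                     0x00001388
Number of Lifs:             3
Number of Routes:           5
State:                      Enabled
Controller IP:              192.168.110.203
Control Plane IP:           192.168.210.52
Control Plane Active:       Yes
Num unique nexthops:        1
Generation Number:          0
Edge Active:                No
"""


def parse_vdr_verbose(output):
    """Turn 'Key: value' lines into a dict, skipping lines that carry no value."""
    info = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            info[key.strip()] = value.strip()
    return info


info = parse_vdr_verbose(SAMPLE)
print("Controller IP:", info["Controller IP"])
print("Control Plane Active:", info["Control Plane Active"])
```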

    192.168.110.202 # show control-cluster logical-switches vni 5000
    VNI      Controller      BUM-Replication ARP-Proxy Connections
    5000     192.168.110.201 Enabled         Enabled   0

The output for VNI 5000 shows zero connections and lists controller 192.168.110.201 as the owner for VNI 5000. Log in to that controller to gather further information for VNI 5000.

    192.168.110.201 # show control-cluster logical-switches vni 5000
    VNI      Controller      BUM-Replication ARP-Proxy Connections
    5000     192.168.110.201 Enabled         Enabled   3

The output on 192.168.110.201 shows three connections. Check additional VNIs.

    192.168.110.201 # show control-cluster logical-switches vni 5001
    VNI      Controller      BUM-Replication ARP-Proxy Connections
    5001     192.168.110.201 Enabled         Enabled   3

    192.168.110.201 # show control-cluster logical-switches vni 5002
    VNI      Controller      BUM-Replication ARP-Proxy Connections
    5002     192.168.110.201 Enabled         Enabled   3

Because 192.168.110.201 owns all three VNI connections, we expect to see zero connections on the other controller, 192.168.110.203.

    192.168.110.203 # show control-cluster logical-switches vni 5000
    VNI      Controller      BUM-Replication ARP-Proxy Connections
    5000     192.168.110.201 Enabled         Enabled   0

- Before checking the MAC and ARP tables, ping from one VM to the other VM.

  From app VM to web VM:

      vmware@app-vm$ ping 172.16.10.10
      PING 172.16.10.10 (172.16.10.10) 56(84) bytes of data.
      64 bytes from 172.16.10.10: icmp_req=1 ttl=64 time=2.605 ms
      64 bytes from 172.16.10.10: icmp_req=2 ttl=64 time=1.490 ms
      64 bytes from 172.16.10.10: icmp_req=3 ttl=64 time=2.422 ms

  Check the MAC tables.

      192.168.110.201 # show control-cluster logical-switches mac-table 5000
      VNI      MAC               VTEP-IP         Connection-ID
      5000     00:50:56:a6:23:ae 192.168.250.52  7

      192.168.110.201 # show control-cluster logical-switches mac-table 5001
      VNI      MAC               VTEP-IP         Connection-ID
      5001     00:50:56:a6:8d:72 192.168.250.51  23

  Check the ARP tables.

      192.168.110.201 # show control-cluster logical-switches arp-table 5000
      VNI      IP              MAC               Connection-ID
      5000     172.16.20.10    00:50:56:a6:23:ae 7

      192.168.110.201 # show control-cluster logical-switches arp-table 5001
      VNI      IP              MAC               Connection-ID
      5001     172.16.10.10    00:50:56:a6:8d:72 23
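The VNI ownership reasoning earlier in this step (only the controller that owns a VNI should report connections for it) can be verified with a short script once the `vni` output has been collected from each controller. The following Python sketch is illustrative: the function names and the dictionary keyed by queried controller are assumptions, and the table rows are copied from the sample output in this section.

```python
HEADER = "VNI Controller BUM-Replication ARP-Proxy Connections\n"

# Output rows keyed by the controller on which the command was run.
outputs = {
    "192.168.110.202": HEADER + "5000 192.168.110.201 Enabled Enabled 0\n",
    "192.168.110.201": HEADER
    + "5000 192.168.110.201 Enabled Enabled 3\n"
    + "5001 192.168.110.201 Enabled Enabled 3\n"
    + "5002 192.168.110.201 Enabled Enabled 3\n",
    "192.168.110.203": HEADER + "5000 192.168.110.201 Enabled Enabled 0\n",
}


def parse_vni_rows(output):
    """Extract VNI, owning controller, and connection count from each data row."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 5 and fields[0].isdigit():
            vni, owner, _bum, _arp, connections = fields
            rows.append({"vni": int(vni), "owner": owner, "connections": int(connections)})
    return rows


def unexpected_connections(outputs_by_controller):
    """Return (queried_controller, vni, connections) where a non-owner reports connections."""
    problems = []
    for queried, text in outputs_by_controller.items():
        for row in parse_vni_rows(text):
            if queried != row["owner"] and row["connections"] != 0:
                problems.append((queried, row["vni"], row["connections"]))
    return problems


print(unexpected_connections(outputs))
```

An empty list means that every non-owning controller reports zero connections, which matches the expectation stated above.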

Check the logical router information. Each logical router instance is served by one of the controller nodes.

The instance subcommand of the show control-cluster logical-routers command displays a list of logical routers that are connected to this controller.

The interface-summary subcommand displays the LIFs that the controller learned from the NSX Manager. This information is sent to the hosts that are in the host clusters managed under the transport zone.

- List all logical routers connected to this controller.

      controller # show control-cluster logical-routers instance all
      LR-Id      LR-Name            Universal Service-Controller Egress-Locale
      0x1388     default+edge-1     false     192.168.110.201    local

  Note the LR-Id and use it in the following command.

      controller # show control-cluster logical-routers interface-summary 0x1388
      Interface                        Type   Id           IP[]
      13880000000b                     vxlan  0x1389       172.16.10.1/24
      13880000000a                     vxlan  0x1388       172.16.20.1/24
      138800000002                     vxlan  0x138a       192.168.10.2/29

      controller # show control-cluster logical-routers routes 0x1388
      Destination        Next-Hop[]      Preference  Locale-Id                            Source
      192.168.100.0/24   192.168.10.1    110         00000000-0000-0000-0000-000000000000 CONTROL_VM
      0.0.0.0/0          192.168.10.1    0           00000000-0000-0000-0000-000000000000 CONTROL_VM

      [root@comp02a:~] esxcfg-route -l
      VMkernel Routes:
      Network          Netmask          Gateway          Interface
      10.20.20.0       255.255.255.0    Local Subnet     vmk1
      192.168.210.0    255.255.255.0    Local Subnet     vmk0
      default          0.0.0.0          192.168.210.1    vmk0

- Display the controller connections to the specific VNI.

      192.168.110.203 # show control-cluster logical-switches connection-table 5000
      Host-IP         Port  ID
      192.168.110.53  26167 4
      192.168.210.52  27645 5
      192.168.210.53  40895 6

      192.168.110.202 # show control-cluster logical-switches connection-table 5001
      Host-IP         Port  ID
      192.168.110.53  26167 4
      192.168.210.52  27645 5
      192.168.210.53  40895 6

  These Host-IP addresses are vmk0 interfaces, not VTEPs. Connections between ESXi hosts and controllers are created on the management network. The port numbers here are ephemeral TCP ports that are allocated by the ESXi host IP stack when the host establishes a connection with the controller.

- On the host, you can view the controller network connection matched to the port number.

      [[email protected]:~] # esxcli network ip connection list | grep 26167
      tcp   0   0   192.168.110.53:26167   192.168.110.101:1234   ESTABLISHED   96416   newreno   netcpa-worker
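Matching the port from the controller's connection table to the host's connection list can also be done in a script. The following Python sketch assumes both outputs have already been captured as text in the layout shown in this section; the function names are hypothetical, and the sample rows are copied from the output above.

```python
CONNECTION_TABLE = """\
Host-IP         Port  ID
192.168.110.53  26167 4
192.168.210.52  27645 5
192.168.210.53  40895 6
"""

ESXCLI_CONNECTIONS = """\
tcp 0 0 192.168.110.53:26167 192.168.110.101:1234 ESTABLISHED 96416 newreno netcpa-worker
"""


def parse_connection_table(output):
    """Map each Host-IP in the controller connection table to its TCP port."""
    table = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) == 3 and fields[1].isdigit():
            host_ip, port, _conn_id = fields
            table[host_ip] = int(port)
    return table


def find_host_connection(esxcli_output, host_ip, port):
    """Return the connection line whose local address is host_ip:port, or None."""
    local = f"{host_ip}:{port}"
    for line in esxcli_output.splitlines():
        fields = line.split()
        if len(fields) >= 5 and fields[3] == local:
            return line
    return None


ports = parse_connection_table(CONNECTION_TABLE)
host_ip = "192.168.110.53"
print(find_host_connection(ESXCLI_CONNECTIONS, host_ip, ports[host_ip]))
```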

- Display active VNIs on the host. Observe how the output is different across hosts. Not all VNIs are active on all hosts. A VNI is active on a host if the host has a VM that is connected to the logical switch.

      [[email protected]:~] # esxcli network vswitch dvs vmware vxlan network list --vds-name Compute_VDS
      VXLAN ID  Multicast IP               Control Plane                        Controller Connection  Port Count  MAC Entry Count  ARP Entry Count  VTEP Count
      --------  -------------------------  -----------------------------------  ---------------------  ----------  ---------------  ---------------  ----------
          5000  N/A (headend replication)  Enabled (multicast proxy,ARP proxy)  192.168.110.203 (up)            1                0                0           0
          5001  N/A (headend replication)  Enabled (multicast proxy,ARP proxy)  192.168.110.202 (up)            1                0                0           0

  Note: To enable the vxlan namespace in vSphere 6.0 and later, run the /etc/init.d/hostd restart command.

  For logical switches in hybrid or unicast mode, the esxcli network vswitch dvs vmware vxlan network list --vds-name <vds-name> command contains the following output:

  - Control Plane is enabled.

  - Multicast proxy and ARP proxy are listed. ARP proxy is listed even if you disabled IP discovery.

  - A valid controller IP address is listed and the connection is up.

  - If a logical router is connected to the ESXi host, the Port Count is at least 1, even if there are no VMs on the host connected to the logical switch. This one port is the vdrPort, which is a special dvPort connected to the logical router kernel module on the ESXi host.
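The four expectations listed above can be turned into an automated check on each row of the VXLAN network list. The following Python sketch is an assumption-laden illustration: the regular expression is fitted to the sample rows in this section and might need adjusting for other output, the function name is hypothetical, and the failing row (including the `(down)` state string) is made up for the example. The Port Count check applies only when a logical router is connected to the host.

```python
import ipaddress
import re

# Pattern fitted to the sample rows in this section (an assumption, not a spec).
ROW = re.compile(
    r"^\s*(?P<vni>\d+)\s+"
    r"(?P<multicast>.+?)\s+"
    r"(?P<control_plane>\w+(?:\s*\([^)]*\))?)\s+"
    r"(?P<controller>\S+)\s+\((?P<state>\w+)\)\s+"
    r"(?P<ports>\d+)\s+(?P<macs>\d+)\s+(?P<arps>\d+)\s+(?P<vteps>\d+)\s*$"
)


def check_row(line):
    """Return the list of failed checks for one VNI row (empty means all passed)."""
    match = ROW.match(line)
    if match is None:
        return ["row not recognized"]
    failures = []
    control_plane = match["control_plane"]
    if not control_plane.startswith("Enabled"):
        failures.append("control plane not enabled")
    for proxy in ("multicast proxy", "ARP proxy"):
        if proxy not in control_plane:
            failures.append(f"{proxy} not listed")
    try:
        ipaddress.ip_address(match["controller"])
    except ValueError:
        failures.append("controller IP not valid")
    if match["state"] != "up":
        failures.append("controller connection not up")
    if int(match["ports"]) < 1:
        failures.append("port count below 1")
    return failures


good = "5000 N/A (headend replication) Enabled (multicast proxy,ARP proxy) 192.168.110.203 (up) 1 0 0 0"
# Made-up failing row for illustration.
bad = "5002 N/A (headend replication) Enabled (multicast proxy,ARP proxy) 0.0.0.0 (down) 0 0 0 0"

print(check_row(good))
print(check_row(bad))
```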

- First ping from one VM to another VM on a different subnet, and then display the MAC table. Note that the Inner MAC is the VM entry while the Outer MAC and Outer IP refer to the VTEP.

      ~ # esxcli network vswitch dvs vmware vxlan network mac list --vds-name=Compute_VDS --vxlan-id=5000
      Inner MAC          Outer MAC          Outer IP        Flags
      -----------------  -----------------  --------------  --------
      00:50:56:a6:23:ae  00:50:56:6a:65:c2  192.168.250.52  00000111

      ~ # esxcli network vswitch dvs vmware vxlan network mac list --vds-name=Compute_VDS --vxlan-id=5001
      Inner MAC          Outer MAC          Outer IP        Flags
      -----------------  -----------------  --------------  --------
      02:50:56:56:44:52  00:50:56:6a:65:c2  192.168.250.52  00000101
      00:50:56:f0:d7:e4  00:50:56:6a:65:c2  192.168.250.52  00000111

What to do next

When you install an NSX Edge Appliance, NSX enables automatic VM startup/shutdown on the host if vSphere HA is disabled on the cluster. If the appliance VMs are later migrated to other hosts in the cluster, the new hosts might not have automatic VM startup/shutdown enabled. For this reason, when you install NSX Edge Appliances on clusters that have vSphere HA disabled, you should check all hosts in the cluster to make sure that automatic VM startup/shutdown is enabled. See "Edit Virtual Machine Startup and Shutdown Settings" in vSphere Virtual Machine Administration.

After the logical router is deployed, double-click the logical router ID to configure additional settings, such as interfaces, routing, firewall, bridging, and DHCP relay.