In an NSX domain, VMware NSX® Virtual Switch™ is the software that operates in server hypervisors to form a software abstraction layer between servers and the physical network.

NSX Virtual Switch is based on vSphere Distributed Switches (VDSs), which provide uplinks for host connectivity to the top-of-rack (ToR) physical switches. As a best practice, VMware recommends that you plan and prepare your vSphere Distributed Switches before installing NSX Data Center for vSphere.

NSX services are not supported on vSphere Standard Switch. VM workloads must be connected to vSphere Distributed Switches to use NSX services and features.

A single host can be attached to multiple VDSs. A single VDS can span multiple hosts across multiple clusters. For each host cluster that will participate in NSX, all hosts within the cluster must be attached to a common VDS.

For example, suppose you have a cluster with Host1 and Host2. Host1 is attached to VDS1 and VDS2. Host2 is attached to VDS1 and VDS3. Because VDS1 is the only switch common to both hosts, you can associate NSX only with VDS1 when you prepare the cluster. If you add another host (Host3) to the cluster and Host3 is not attached to VDS1, the configuration is invalid, and Host3 will not be ready for NSX functionality.
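The common-VDS rule can be checked with a set intersection. The following Python snippet is a minimal sketch, not an NSX or vSphere API call. The function names `common_vds` and `hosts_missing_vds` are hypothetical, and the attachment of Host3 to VDS2 is an assumption made only for illustration.

```python
def common_vds(host_to_vds):
    """Return the VDS names that every host in the cluster is attached to."""
    vds_sets = [set(names) for names in host_to_vds.values()]
    if not vds_sets:
        return set()
    return set.intersection(*vds_sets)


def hosts_missing_vds(host_to_vds, vds_name):
    """Return the hosts that are not attached to the given VDS."""
    return sorted(
        host for host, names in host_to_vds.items() if vds_name not in names
    )


# Attachments taken from the example above.
cluster = {
    "Host1": {"VDS1", "VDS2"},
    "Host2": {"VDS1", "VDS3"},
}
candidates = common_vds(cluster)

# Assumption: Host3 is attached only to VDS2 (the text does not say which
# switches Host3 uses, only that VDS1 is not among them).
cluster["Host3"] = {"VDS2"}
not_ready = hosts_missing_vds(cluster, "VDS1")

print(candidates)
print(not_ready)
```

In this sketch, `candidates` contains only VDS1 for the two-host cluster, and `not_ready` lists Host3 after it is added without an attachment to VDS1.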

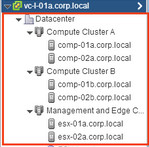

Often, to simplify a deployment, each cluster of hosts is associated with only one VDS, even though some of the VDSs span multiple clusters. For example, suppose your vCenter contains the following host clusters:

- Compute cluster A for app tier hosts

- Compute cluster B for web tier hosts

- Management and edge cluster for management and edge hosts

The following screen shows how these clusters appear in vCenter.

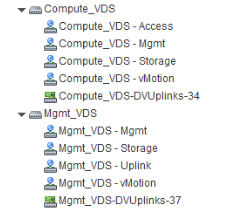

For a cluster design such as this, you might have two VDSs called Compute_VDS and Mgmt_VDS. Compute_VDS spans both of the compute clusters, and Mgmt_VDS is associated with only the management and edge cluster.
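As a planning aid, the design can be written down as a mapping from cluster to VDS and then inverted to see which clusters each VDS spans. This is a minimal Python sketch. The names `CLUSTER_TO_VDS` and `clusters_per_vds` are hypothetical and do not correspond to any vSphere object or API.

```python
from collections import defaultdict

# One VDS per cluster, as in the example design.
CLUSTER_TO_VDS = {
    "Compute cluster A": "Compute_VDS",
    "Compute cluster B": "Compute_VDS",
    "Management and edge cluster": "Mgmt_VDS",
}


def clusters_per_vds(cluster_to_vds):
    """Invert the mapping: for each VDS, list the clusters it spans."""
    spans = defaultdict(list)
    for cluster, vds in cluster_to_vds.items():
        spans[vds].append(cluster)
    return dict(spans)


spans = clusters_per_vds(CLUSTER_TO_VDS)
for vds, clusters in spans.items():
    print(vds, "spans", clusters)
```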

Each VDS contains distributed port groups for the different types of traffic that need to be carried. Typical traffic types include management, storage, and vMotion. Uplink and access ports are generally required as well. Normally, one port group for each traffic type is created on each VDS.

For example, the following screen shows how these distributed switches and port groups appear in vCenter.

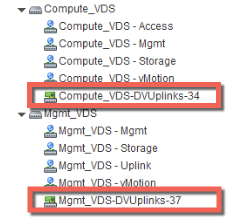

Each port group can, optionally, be configured with a VLAN ID. The following list shows an example of how VLANs can be associated with the distributed port groups to provide logical isolation between different traffic types:

- Compute_VDS - Access: VLAN 130

- Compute_VDS - Mgmt: VLAN 210

- Compute_VDS - Storage: VLAN 520

- Compute_VDS - vMotion: VLAN 530

- Mgmt_VDS - Uplink: VLAN 100

- Mgmt_VDS - Mgmt: VLAN 110

- Mgmt_VDS - Storage: VLAN 420

- Mgmt_VDS - vMotion: VLAN 430
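A VLAN plan such as this one can be sanity-checked before you create the port groups. The following Python sketch records the example assignments and looks for two problems: a VLAN ID reused by more than one port group, and an ID outside the range 1 to 4094. Both checks are assumptions about what a reasonable plan looks like, not NSX requirements, and the function names are hypothetical.

```python
from collections import defaultdict

# (VDS, port group) -> VLAN ID, copied from the example list.
PORT_GROUP_VLANS = {
    ("Compute_VDS", "Access"): 130,
    ("Compute_VDS", "Mgmt"): 210,
    ("Compute_VDS", "Storage"): 520,
    ("Compute_VDS", "vMotion"): 530,
    ("Mgmt_VDS", "Uplink"): 100,
    ("Mgmt_VDS", "Mgmt"): 110,
    ("Mgmt_VDS", "Storage"): 420,
    ("Mgmt_VDS", "vMotion"): 430,
}


def find_shared_vlans(mapping):
    """Return {vlan_id: [port groups]} for every VLAN ID used more than once."""
    by_vlan = defaultdict(list)
    for port_group, vlan in mapping.items():
        by_vlan[vlan].append(port_group)
    return {vlan: groups for vlan, groups in by_vlan.items() if len(groups) > 1}


def find_out_of_range(mapping, low=1, high=4094):
    """Return the port groups whose VLAN ID falls outside low..high."""
    return [pg for pg, vlan in mapping.items() if not low <= vlan <= high]


shared = find_shared_vlans(PORT_GROUP_VLANS)
invalid = find_out_of_range(PORT_GROUP_VLANS)
print("Shared VLAN IDs:", shared)
print("Out-of-range port groups:", invalid)
```

For the example plan, both results are empty, which means that each traffic type has its own VLAN ID.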

The DVUplinks port group is a VLAN trunk that is created automatically when you create a VDS. As a trunk port, it sends and receives tagged frames. By default, it carries all VLAN IDs (0-4094). This means that traffic with any VLAN ID can pass through the vmnic network adapters associated with the DVUplink slot. The hypervisor host then filters the traffic as the distributed switch determines which port group should receive it.
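The filtering step can be pictured as a lookup from a frame's VLAN tag to the port groups configured with that VLAN ID. The following Python sketch is a conceptual model only. It does not describe how the hypervisor implements the distributed switch, and the function name `receiving_port_groups` is hypothetical. The port group VLAN IDs are the Compute_VDS values from the example list.

```python
# Default trunk range of the DVUplinks port group: VLAN IDs 0-4094.
TRUNK_RANGE = range(0, 4095)

# Port group name -> VLAN ID for Compute_VDS in the example.
COMPUTE_VDS_PORT_GROUPS = {
    "Access": 130,
    "Mgmt": 210,
    "Storage": 520,
    "vMotion": 530,
}


def receiving_port_groups(frame_vlan, port_groups, trunk=TRUNK_RANGE):
    """Return the port groups that would receive a frame tagged with frame_vlan.

    The frame must first be allowed by the uplink trunk. It is then matched
    against the VLAN ID of each port group. An empty list means that no
    port group receives the frame.
    """
    if frame_vlan not in trunk:
        return []
    return [name for name, vlan in port_groups.items() if vlan == frame_vlan]


print(receiving_port_groups(520, COMPUTE_VDS_PORT_GROUPS))
print(receiving_port_groups(999, COMPUTE_VDS_PORT_GROUPS))
```

In this model, a frame tagged with VLAN 520 reaches only the Storage port group, and a frame tagged with VLAN 999 passes the trunk but matches no port group.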

If your existing vCenter environment contains vSphere Standard Switches instead of vSphere Distributed Switches, you can migrate your hosts to distributed switches.