Supported Topology

The NSX Migration for VMware Cloud Director tool supports the following topology.

- One or multiple organization VDCs from the same Organization can be migrated together.

- Zero, one, or multiple edge gateways, each connected to one or multiple external networks, in an organization VDC backed by NSX Data Center for vSphere.

- Direct, routed (including distributed), and isolated organization VDC networks.

Direct Networks

Direct organization VDC networks are connected directly to an external network that is backed by one or more vSphere Distributed Switch port groups (usually VLAN or VXLAN backed).

By default, two scenarios are distinguished and migrated differently:

- Colocation/MPLS use case: The external network is dedicated to a single tenant (organization VDC) and is VLAN backed. During the migration, an NSX-T logical segment is automatically created in the VLAN transport zone specified by the ImportedNetworkTransportZone YAML element and imported into the target organization VDC.

- Service network use case: The external network (VLAN backed) is shared across multiple tenants (more than one organization VDC has an organization VDC network directly connected to it). In this scenario, an identically named external network with the suffix -v2t, backed by an NSX-T segment (usually with the same VLAN as the source external network; otherwise bridging must be configured manually), must be created by the system administrator before the migration. The migration tool then creates, in the target organization VDC, an organization VDC network directly connected to the NSX-T segment backed external network. The administrator can apply the NSX-T distributed firewall to such a segment directly in NSX-T Manager (outside of VMware Cloud Director) to enforce tenant boundaries.

Note that prior to version 1.3.2 of the NSX Migration for VMware Cloud Director tool, the service network scenario was migrated differently: the migration reused the existing external network, scoped to both the source and target PVDC (backed by VLAN backed vSphere Distributed Switch port groups). If required, this legacy behavior can still be invoked with the YAML flag LegacyDirectNetwork: true.

Note Irrespective of the two use cases above or the use of the LegacyDirectNetwork YAML flag, direct Org VDC networks that are connected to a VXLAN backed external network are always created as overlay segments connected to an identically named external network with the suffix -v2t, backed by an NSX-T segment.

For both use cases above, layer 2 bridging will not be automatically configured by the migration tool with NSX-T (bridging) Edge Nodes. If required, the bridge should be configured manually.
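As an illustration of the two YAML elements named in this section, the fragment below sketches how they might appear in the Org VDC block of the user input file. Only the key names ImportedNetworkTransportZone and LegacyDirectNetwork come from the text; their placement in the file and the transport zone name are assumptions made for this example.

```yaml
# Hypothetical excerpt from the Org VDC block of the user input YAML.
# Placement and values are assumptions; only the key names are taken from the text above.
ImportedNetworkTransportZone: example-vlan-tz   # placeholder: VLAN transport zone for the imported segment
LegacyDirectNetwork: False                      # True would invoke the pre-1.3.2 service network behavior
```

LegacyDirectNetwork is shown as False only for contrast; the text implies that the legacy behavior applies only when the flag is explicitly set to true.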

Shared Networks

The shared networks are organization VDC networks shared with other Organization VDCs within the same organization.

An Org VDC network can be shared in one of two ways:

- Org VDC network is scoped to a Data Center Group: it is shared with all Org VDCs participating in the Data Center Group (maximum 16 Org VDCs).

- Org VDC network is enabled as shared (also called legacy sharing): it is shared across all Org VDCs of the Organization (constrained only by the transport zone scope span).

- When a routed or isolated shared Org VDC network is present in an organization VDC that is going to be migrated, all organization VDCs that have vApps connected to the shared network must be migrated together (the supported maximum is 16 Org VDCs). The system administrator must provide a list of the organization VDCs (and their specific parameters) to migrate together. NSX-T backed Provider VDCs use Data Center Groups to extend the organization VDC network scope across multiple organization VDCs. The migration tool automatically creates these Data Center Groups. All organization VDCs migrated together participate in these Data Center Groups, and the relevant organization VDC networks are scoped to them. The migration tool checks whether any organization VDCs other than those listed in the YAML have vApps/VMs connected to the shared network and, if so, warns that these Org VDCs must also be included in the migration.

- An organization VDC network that is directly connected to an external network also used by other organization VDCs (typically the service network use case) will not be migrated via the Data Center Group mechanism. Hence, the supported maximum of 16 Org VDCs does not apply in this case. An identically named external network with the suffix -v2t, backed by an NSX-T segment (usually with the same VLAN as the source external network; otherwise bridging must be configured manually), must be created by the system administrator before the migration. The migration tool then creates, in the target organization VDC, a legacy shared organization VDC network directly connected to the NSX-T segment backed external network. The administrator can apply the NSX-T distributed firewall to such a segment directly in NSX-T Manager (outside of VMware Cloud Director) to enforce tenant boundaries.

- An organization VDC network that is directly connected to a VXLAN backed external network (MPLS/service network use case) will not be migrated via the Data Center Group mechanism. Hence, the supported maximum of 16 Org VDCs does not apply in this case. An identically named external network with the suffix -v2t, backed by an NSX-T segment (usually with the same VLAN as the source external network; otherwise bridging must be configured manually), must be created by the system administrator before the migration. The migration tool then creates, in the target organization VDC, a legacy shared organization VDC network directly connected to the NSX-T segment backed external network.

Direct Network Migration Mechanism Summary

| Direct Network Backing (Source) | Default Migration Mechanism | Migration Mechanism with LegacyDirectNetwork = True |
|---|---|---|
| External network VLAN backed, used only by a single direct Org VDC network | Imported NSX-T VLAN segment automatically created during the migration process. | Imported NSX-T VLAN segment automatically created during the migration process. |
| External network VLAN backed, used by multiple direct Org VDC networks | Target direct organization VDC network is connected to an external network with the same name but appended with the -v2t suffix. This external network must be created before the migration (backed by NSX-T segments). | The same external network is used to connect the target direct organization VDC network and must be scoped to the target provider VDC. |
| External network VXLAN backed | Target direct organization VDC network is connected to an external network with the same name but appended with the -v2t suffix. This external network must be created before the migration (backed by NSX-T segments). | Target direct organization VDC network is connected to an external network with the same name but appended with the -v2t suffix. This external network must be created before the migration (backed by NSX-T segments). |

Note Shared networks migration is supported only with VMware Cloud Director version 10.3 or higher.

Route Advertisement

- BGP: When Route Redistribution is configured for BGP on the source NSX-V backed edge gateway, the migration tool will migrate all prefixes permitted by redistribution criteria that are either explicitly defined via Named IP Prefix, or implicitly via Allow learning from - Connected. Additionally, target gateway BGP IP Prefix Lists will be populated with specific IP Prefixes including allow/deny action. All such criteria are migrated into a single v-t migrated IP prefix list.

- Static Routing: VMware Cloud Director 10.4 supports only the configuration of internal static routes on an NSX-T backed Edge Gateway (set on the Tier-1 gateway), but not external static routes, which need to be configured on the Tier-0/VRF gateway. External routes can, however, be preconfigured on the Tier-0/VRF by the provider prior to the migration. The migration tool will advertise all connected Org VDC networks from the Tier-1 gateway to the Tier-0/VRF if the flag AdvertiseRoutedNetworks is set to True for the respective Edge Gateway in the user input file.
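A minimal sketch of the AdvertiseRoutedNetworks flag in context, following the per-gateway layout used in the granular edge gateway example elsewhere in this document. The gateway name is a placeholder, and the fragment omits all other parameters.

```yaml
# Hypothetical fragment; EdgeGateway1 stands in for the name of a real source edge gateway.
EdgeGateways:
    EdgeGateway1:
        AdvertiseRoutedNetworks: True   # advertise connected Org VDC networks from Tier-1 to Tier-0/VRF
```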

Automated IP Address Allocation Behavior

VMware Cloud Director (10.3.1 or older) does not preserve VM’s network interface IP address that is automatically allocated via Static – IP Pool IP Mode during migration or rollback. As a workaround, to retain the IP address of VMs, the migration tool will change the VM’s network interface IP mode from ‘Static – IP Pool’ to ‘Static - Manual’ with the specific originally allocated IP. This change persists after rollback as well.

Note VCD 10.3.2 resolved the issue of IP address reset while migrating a VM which was using a static IP address.

Routed vApp Networks

Starting with version 1.3.2, the migration tool supports the migration of routed vApp networks. vApp routers are deployed as standalone Tier-1 gateways connected to a single Org VDC network via service interface. Due to NSX-T service interface limitations, vApp routers can only be connected to overlay-backed Org VDC networks. This feature requires VMware Cloud Director 10.3.2.1 or newer.

Edge Gateway Rate Limits

Starting with version 1.3.2, the migration tool creates Gateway QoS profiles based on the source Org VDC gateway rate limit configuration and assigns them to the corresponding Tier-1 gateway. This feature requires VMware Cloud Director 10.3.2 or newer.

Multiple External Networks:

An NSX-V backed Org VDC gateway can have multiple external networks connected, and a specific rate limit can be set on each such external interface. An NSX-T backed Org VDC gateway supports only a single rate limit towards the Tier-0/VRF. In such a case, the target side will therefore use only the single most restrictive (lowest) limit after migration.

Support for Org VDC network routed via SR (not distributed)

Starting with version 10.3.2, VMware Cloud Director supports the configuration of an NSX-T Data Center edge gateway to allow non-distributed routing and to connect routed organization VDC networks directly to a tier-1 service router, forcing all VM traffic for a specific network through the service router. Starting with migration tool version 1.3.2, the migration of routed non-distributed Org VDC networks is supported.

A maximum of 9 Org VDC networks can use the non-distributed connection to a single NSX-T Data Center edge gateway. Sub-interface connected Org VDC networks are still migrated as distributed networks.

The two possible ways to enable non-distributed routing for Org VDC networks.

Explicit configuration via an optional Org VDC YAML parameter

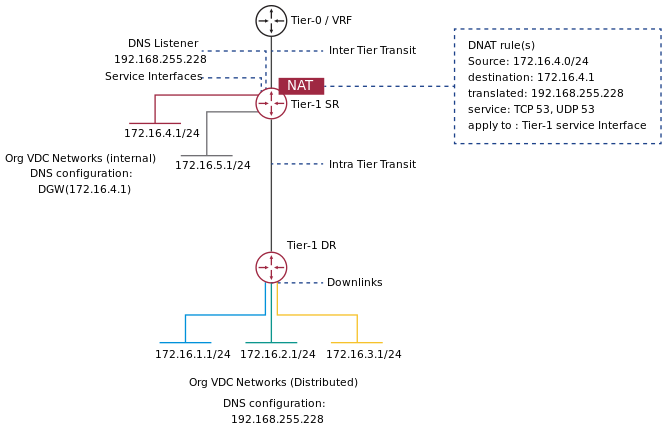

NonDistributedNetworksset toTrue. In such case all routed (via an internal interface, excluding distributed and sub-interface) Org VDC networks of the particular Org VDC will be created as non-distributed (SR connected).Implicit configuration - if the migration tool detects that the Org VDC network DNS configuration is identical to its gateway IP and DNS relay/forwarding is enabled on the NSX for vSphere backed edge gateway, such network will be migrated in the non-distributed mode.

If you were using your Org VDC network gateway address as a DNS server address before migration, you can use non-distributed routing to configure your Org VDC network that is backed by NSX-T Data Center to also use its network gateway's IP address as a DNS server address. To do that, the migration tool will additionally create a DNAT rule for DNS traffic translating the network default gateway IP to the DNS forwarder IP.

Note Two DNAT rules for each network are needed - one for TCP and another for UDP DNS traffic.
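For the explicit configuration, the parameter might be set as in the following sketch. The key name and value come from the text above; its exact position within the Org VDC block of the user input file is an assumption.

```yaml
# Hypothetical excerpt from the Org VDC block; placement is an assumption.
NonDistributedNetworks: True   # routed networks on internal interfaces are created as non-distributed (SR connected)
```

Keep in mind the limit stated earlier: at most 9 Org VDC networks can use the non-distributed connection to a single NSX-T Data Center edge gateway.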

Parallel Migrations

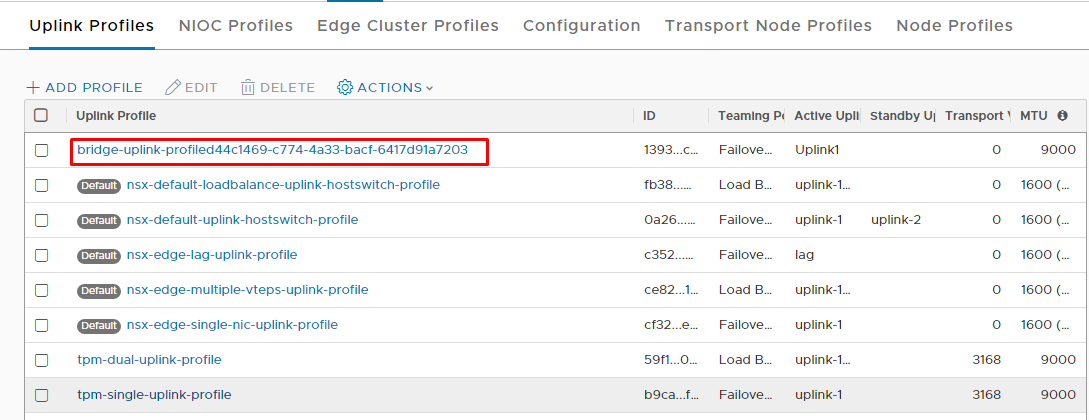

Starting with release 1.4.0, multiple parallel migrations that use a shared pool of bridging NSX-T Edge Node clusters are supported. This is achieved by defining a unique bridge profile for each migration instance, named in the format bridge-uplink-profileUUID.

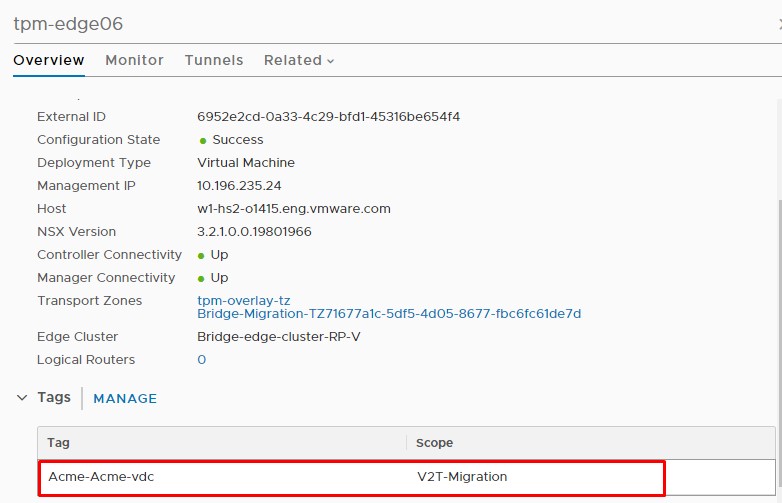

The migration tool reserves bridging edge nodes for concurrent migrations. It first checks that the required number of edge nodes is available for bridging. If so, it creates a tag in the format Organization-OrgVDC and attaches it to the bridge edge nodes, which keeps them reserved for the migration of that Org VDC.

To utilize bridge edge clusters and nodes efficiently, the bridging mechanism is enhanced in the following ways:

- Unique bridge/uplink/transport zone profile names (bridge-uplink-profileUUID).

- Reservation of edge nodes with an NSX-T edge node tag (Organization-OrgVDC).

- Validation during pre-check that the supplied bridge edge clusters have enough free (untagged) edge nodes for the networks that need to be bridged.

Verifying Bridge Uplink in NSX-T:

Verifying tagging on bridging nodes in NSX-T:

Verifying Transport zone on bridging nodes in NSX-T:

Granular edge gateway parameters

Starting with release 1.4, when multiple edge gateways are migrated, specific extended parameters can be defined in YAML for each gateway. If the granular edge gateway parameters are not provided, the tool uses the defaults from the Org VDC block configured in the user YAML.

Example for granular edge gateway field from user input YAML:

    EdgeGateways:
        EdgeGateway1:
            Tier0Gateways: tpm-externalnetwork
            NoSnatDestinationSubnet:
                - 10.102.0.0/16
                - 10.103.0.0/16
            ServiceEngineGroupName: Tenant1
            LoadBalancerVIPSubnet: 192.168.255.128/28
            LoadBalancerServiceNetwork: 192.168.155.1/25
            LoadBalancerServiceNetworkIPv6: fd0c:2fb3:9a78:d746:0000:0000:0000:0001/120
            AdvertiseRoutedNetworks: False
            NonDistributedNetworks: False
            serviceNetworkDefinition: 192.168.255.225/27
        EdgeGateway2:
            Tier0Gateways: tpm-externalnetwork2
            NoSnatDestinationSubnet:
                - 10.102.0.0/16
                - 10.103.0.0/16
            ServiceEngineGroupName: Tenant2
            LoadBalancerVIPSubnet: 192.168.255.128/28
            LoadBalancerServiceNetwork: 192.168.155.1/25
            LoadBalancerServiceNetworkIPv6: fd0c:2fb3:9a78:d746:0000:0000:0000:0001/120
            AdvertiseRoutedNetworks: False
            NonDistributedNetworks: False
            serviceNetworkDefinition: 192.168.255.225/27
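The fallback to Org VDC defaults might look like the following sketch. It assumes that the EdgeGateways block sits inside the Org VDC block and that a gateway not listed there takes the Org VDC level values; the text does not state the exact nesting, so treat the layout as illustrative only. Gateway names are placeholders.

```yaml
# Hypothetical Org VDC block excerpt (nesting is an assumption).
AdvertiseRoutedNetworks: False            # Org VDC level default
EdgeGateways:
    EdgeGateway1:
        AdvertiseRoutedNetworks: True     # granular value for this gateway only
    # A gateway that is not listed here would take the Org VDC level default above.
```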

NSX Advanced Load Balancer (Avi)

Starting with release 1.4.1, the migration tool supports VIPs and pools taken from Org VDC network subnets for IPv4. Migration tool 1.4.0 already supported VIPs from Org VDC networks, but only for IPv6. The following scenarios are to be considered:

With IPv4:

1.1. VIP in virtual services is from external network/Tier-0 subnets:

The provider needs to define the network subnet to be used for the Virtual IP configuration of the load balancer virtual service via the optional Org VDC YAML parameter LoadBalancerVIPSubnet. In this case, the migration tool will create a DNAT rule to translate the original external IP to the new VIP. This DNAT rule ensures that the same external IP can be used for the VIP and other services (NAT, VPN), which is supported by NSX-V but not by NSX-T Advanced Load Balancer.

1.2. VIP in virtual services is from Org VDC network subnets:

The migration tool will configure the same VIP as the source side. There is no need to define the optional Org VDC YAML parameter LoadBalancerVIPSubnet. The migration tool will not create any additional DNAT rules for this scenario.

With IPv6:

2.1. VIP in virtual services from external network/Tier-0 subnets or Org VDC network subnets:

The migration tool will configure the same VIP as the source side. There is no need to define the optional Org VDC YAML parameter LoadBalancerVIPSubnet. The migration tool will not create any additional DNAT rules for this scenario.
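For scenario 1.1, the parameter might be supplied as in the sketch below. The subnet value is reused from the granular edge gateway example in this document; the placement in the Org VDC block is an assumption. Scenarios 1.2 and 2.1 need no such entry.

```yaml
# Hypothetical excerpt from the Org VDC block; placement is an assumption.
LoadBalancerVIPSubnet: 192.168.255.128/28   # subnet used for the VIPs of migrated virtual services (scenario 1.1)
```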

Transparent Load Balancer:

Starting with the release of 1.4.1, the migration tool supports Transparent Load Balancing.

In transparent load balancing, the migration tool will configure the same VIP as the source side. There is no need to define the optional Org VDC YAML parameter LoadBalancerVIPSubnet. The migration tool will not create any additional DNAT rules for the transparent load balancer. Only in-line topology is supported. For pool members and virtual service, only IPv4 is supported.

Prerequisites:

- Avi version must be at least 21.1.4.

- The service engine group must be in A/S mode (legacy HA).

- The LB service engine subnet must have at least 8 IPs available.

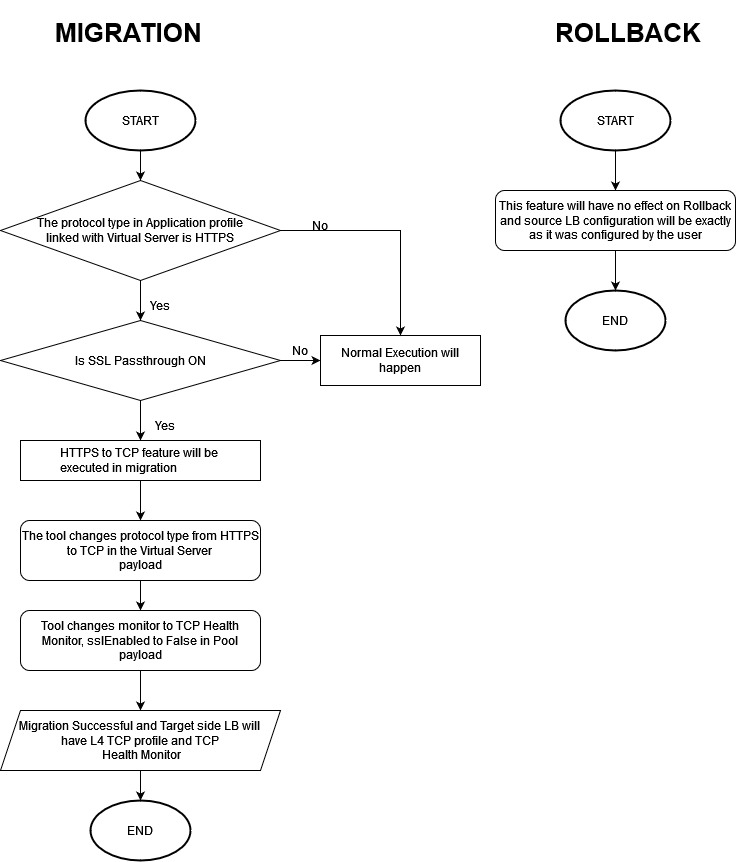

SSL Passthrough:

Starting with the release of 1.4.2, the migration tool supports SSL Passthrough in Load Balancer.

If SSL Passthrough is enabled with HTTPS protocol in LB, then after migration the target side LB will have L4 TCP protocol and TCP health monitor.

Note SSL Passthrough is only available for HTTPS protocol.

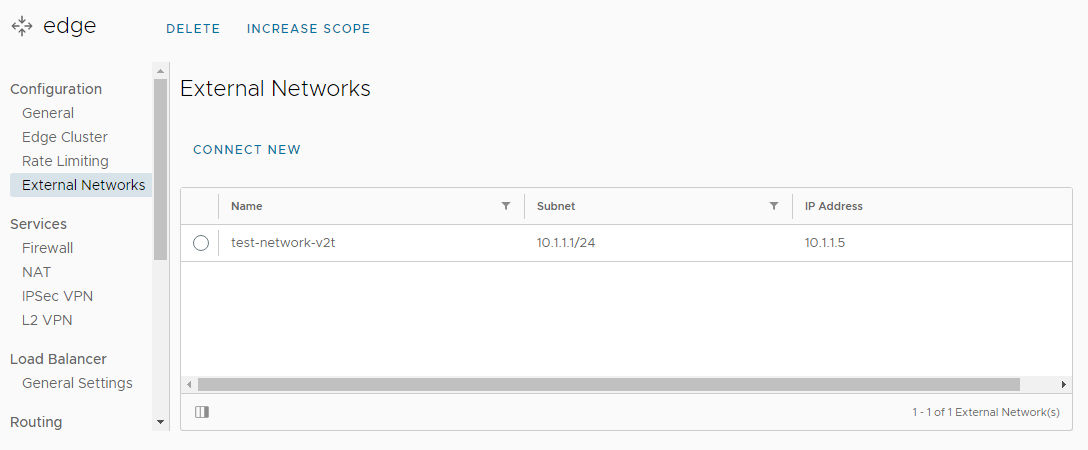

External Network to Edge Connection via the service port

Starting with release 1.4.1, the migration tool supports an NSX-T overlay or VLAN-backed external network connected to an edge gateway via a service interface connection, with VMware Cloud Director 10.4.1 onwards. This adds flexibility to the network topology, as external networks do not always need to be routed via a dedicated Tier-0/VRF gateway.

The service provider can decide if a particular external network should be routed via Tier-0/VRF or connected via the service interface directly to Tier-1 GW. Note that an Org VDC Gateway must always have a parent Tier-0/VRF even if it is routing all its external traffic to external networks connected directly via the service interface.

The migration tool searches for the external network subnets used by the NSX-V backed edge gateway on the Tier-0/VRF gateway specified in the input YAML file (Tier0Gateways field) or, if that gateway is IP Space enabled, in the internal scopes of the IP Space uplinks connected to it. If the subnet is not present on that Tier-0/VRF gateway, the migration tool searches for an NSX-T segment-backed external network with the same name as the external network to which the NSX-V edge gateway is connected, but with the -v2t suffix. When an NSX-T segment-backed external network is found that has the subnet used by the NSX-V backed edge gateway, the migration tool connects the Tier-1 gateway to that segment-backed external network directly via a service interface connection.

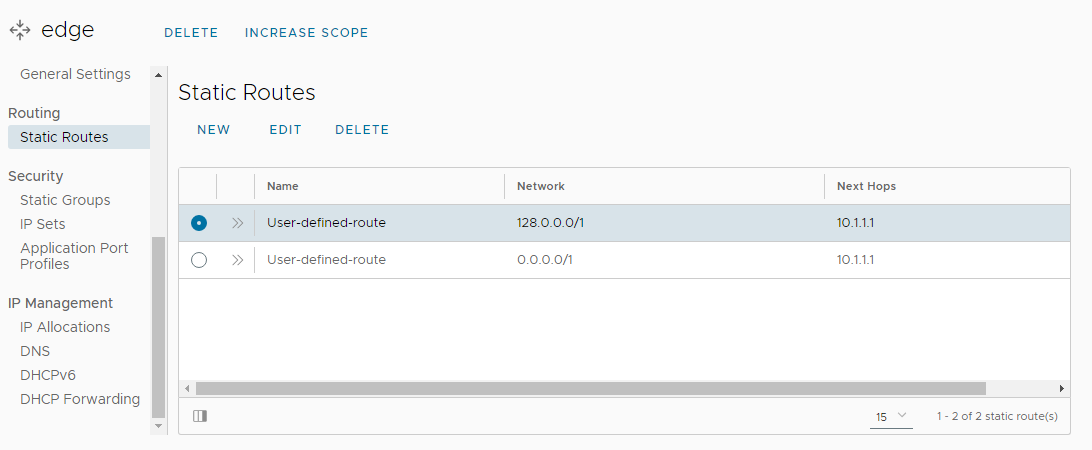

The migration tool will create necessary static routes when the default gateway is towards the external network directly connected to the edge gateway and disconnect the Tier-1 gateway from its parent Tier-0/VRF GW.

External static routes will be created on the Tier-1 gateway if they are scoped to a segment-backed external network directly connected to the gateway.

Note

- NSX-T backed edge gateway can be connected only to a single subnet of a given segment-backed external network.

- VLAN Segment backed external network can be connected only to one edge gateway directly.

- Ensure that the VLAN ID of an external network does not clash with the VLAN ID of the Tier-0 uplink interface on the same Edge Node where the Tier-1 gateway is instantiated.

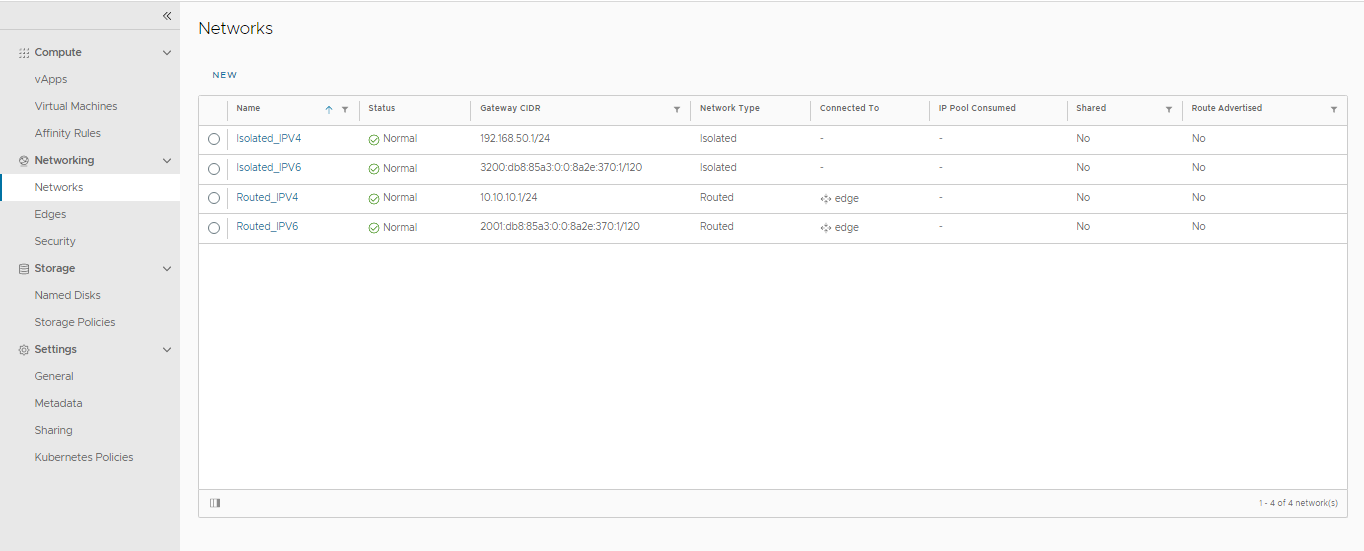

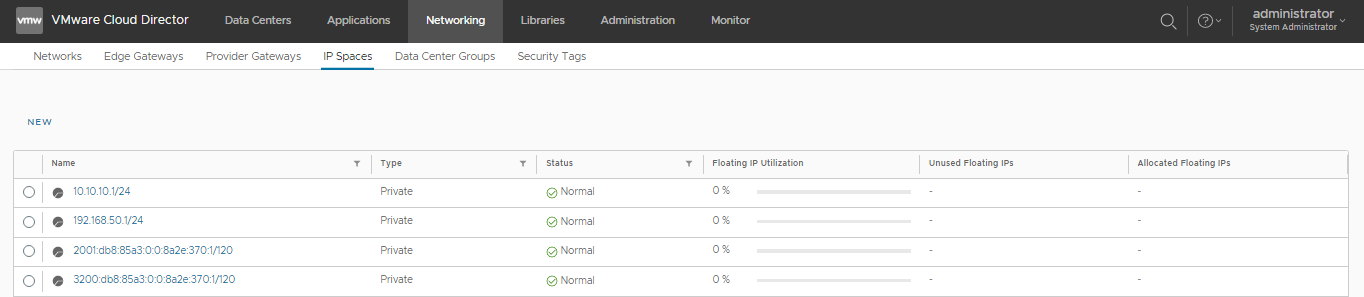

IP Spaces Support

Starting with release 1.4.2, the migration tool supports IP Space enabled Provider Gateways with VMware Cloud Director 10.4.2 onwards. The migration tool supports the creation of edge gateways connected to an IP Space enabled Provider Gateway. Sub-allocated IPs are migrated differently when the Tier-0/VRF is IP Space enabled: the migration tool does not extend the IP range at the provider gateway level but instead does so at the IP Space level.

The migration tool creates private IP Spaces whose internal scope and IP prefix are identical to the subnet of each Org VDC network connected to an edge gateway that is mapped to an IP Space enabled Tier-0/VRF Provider Gateway in the input YAML file. These private IP Spaces are used by the routed Org VDC networks connected to the target NSX-T edge gateway. The migration tool follows a 1:1 approach: one private IP Space per Org VDC subnet. The private IP Spaces created by the tool must be unique within the organization. The precheck validates that the internal scopes of private IP Spaces already existing in the target organization (for example, created by previous migrations) do not conflict with the to-be-migrated Org VDC networks connected to IP Space enabled provider gateways, and that these to-be-migrated Org VDC networks do not conflict with each other.

Note

Isolated networks in Org VDCs that have at least one edge gateway connected to an IP Space enabled Provider Gateway will use private IP Spaces in the same way as the routed networks connected to IP Space enabled edge gateways. All precheck validations related to IP Spaces that are applied to routed networks are also applied to such isolated networks. The reason for this implementation is that isolated networks using IP Spaces can be connected to an edge gateway connected to either an IP Space enabled Provider Gateway or a legacy Provider Gateway.

LoadBalancer VIP subnet is created as a private IP Space prefix and can be reused by multiple Edge Gateways from the same organization.

LoadBalancer VIPs from the Org VDC networks are not supported with Edge Gateways connected to IP Space enabled Provider Gateways.

BGP and Route Advertisement:

When BGP is enabled on the NSX-V edge and IP prefixes are present in the Route Redistribution section of BGP, with the target edge gateway mapped to an IP Space enabled Provider Gateway, the migration tool will search for BGP prefixes in internal scopes of public IP Space uplinks. If the BGP prefix belongs to the internal scope of the public IP Space uplink of the Provider Gateway, the migration tool will validate for any existing IP prefix conflict (the BGP prefix should not exist even if it is not allocated). If no conflict is present, the migration tool will add the BGP prefix to the public IP Space uplink of Provider Gateway. If the BGP prefix is not found in any public IP space uplink of the Provider Gateway, the migration tool will create Private IP Space with an internal scope and subsequent IP prefix similar to the BGP prefix and add it as an uplink to the Provider Gateway. This uplink name is prefixed with V2T-Migration. To add a private IP Space uplink to Provider Gateway, the Provider Gateway should be private to the tenant (otherwise private IP Space cannot be route advertised). The migration tool will throw the necessary error if the Provider Gateway is not private.

If the AdvertiseRoutedNetworks flag is set to True and the Provider Gateway to which the NSX-T edge gateway is connected is IP Space enabled, the migration tool will create private IP Spaces for the routed Org VDC networks connected to the edge gateway, as explained above, and mark them as route advertised.