Enhanced data path (ENS) is a networking stack mode that, when configured, provides superior network performance. It is primarily targeted at NFV workloads, which gain performance benefits by leveraging DPDK capabilities.

The N-VDS switch can be configured in the enhanced data path mode only on an ESXi host. ENS also supports traffic flowing through Edge VMs.

In the enhanced data path mode, both of the following traffic types are supported:

- Overlay traffic

- VLAN traffic

Supported VMkernel NICs

With NSX-T Data Center supporting multiple ENS host switches, the maximum number of VMkernel NICs supported per host is 32.

High-Level Process to Configure Enhanced Data Path

As a network administrator, before creating transport zones supporting N-VDS in the enhanced data path mode, you must prepare the network with the supported NIC cards and drivers. To improve network performance, you can make the Load Balanced Source teaming policy NUMA node aware.

The high-level steps are as follows:

- Use NIC cards that support the enhanced data path.

See the VMware Compatibility Guide for the NIC cards that support enhanced data path.

On the VMware Compatibility Guide page, under the IO devices category, select ESXi 6.7, set IO device Type to Network, and set Feature to N-VDS Enhanced Datapath.

- Download and install the latest NIC drivers from the My VMware page.

- Go to Drivers & Tools > Driver CDs.

- Download NIC drivers:

VMware ESXi 6.7 ixgben-ens 1.1.3 NIC Driver for Intel Ethernet Controllers 82599, x520, x540, x550, and x552 family

VMware ESXi 6.7 i40en-ens 1.1.3 NIC Driver for Intel Ethernet Controllers X710, XL710, XXV710, and X722 family

- To use the host as an ENS host, at least one ENS-capable NIC must be available on the system. If there are no ENS-capable NICs, the management plane does not allow the host to be added to ENS transport zones.

- List the ENS driver.

esxcli software vib list | grep -E "i40|ixgben"
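The grep pattern above matches both the inbox drivers (`i40en`, `ixgben`) and their ENS variants. As a minimal sketch, assuming the ENS driver VIBs carry the `-ens` suffix used by the package names in this section (`i40en-ens`, `ixgben-ens`) and that the VIB name is the first column of the `esxcli software vib list` output, the hypothetical helper below keeps only the ENS VIB names:

```shell
# Hypothetical helper: print installed VIB names that end in "-ens".
# Reads `esxcli software vib list` output on stdin. The VIB name is assumed
# to be the first whitespace-separated column, so header rows never match.
list_ens_vibs() {
  awk '$1 ~ /-ens$/ { print $1 }'
}
```

On the host this would be run as `esxcli software vib list | list_ens_vibs`; an empty result suggests that no ENS driver is installed yet.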

- Verify whether the NICs are capable of processing ENS datapath traffic.

esxcfg-nics -e

| Name | Driver | ENS Capable | ENS Driven | MAC Address | Description |
|---|---|---|---|---|---|
| vmnic0 | ixgben | True | False | e4:43:4b:7b:d2:e0 | Intel(R) Ethernet Controller X550 |
| vmnic1 | ixgben | True | False | e4:43:4b:7b:d2:e1 | Intel(R) Ethernet Controller X550 |
| vmnic2 | ixgben | True | False | e4:43:4b:7b:d2:e2 | Intel(R) Ethernet Controller X550 |
| vmnic3 | ixgben | True | False | e4:43:4b:7b:d2:e3 | Intel(R) Ethernet Controller X550 |
| vmnic4 | i40en | True | False | 3c:fd:fe:7c:47:40 | Intel(R) Ethernet Controller X710/X557-AT 10GBASE-T |
| vmnic5 | i40en | True | False | 3c:fd:fe:7c:47:41 | Intel(R) Ethernet Controller X710/X557-AT 10GBASE-T |
| vmnic6 | i40en | True | False | 3c:fd:fe:7c:47:42 | Intel(R) Ethernet Controller X710/X557-AT 10GBASE-T |
| vmnic7 | i40en | True | False | 3c:fd:fe:7c:47:43 | Intel(R) Ethernet Controller X710/X557-AT 10GBASE-T |
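To check the requirement that at least one ENS-capable NIC is present, the `esxcfg-nics -e` output can be filtered on its third column. This is a minimal sketch, assuming whitespace-separated columns in the order shown above (Name, Driver, ENS Capable, ENS Driven, MAC Address, Description) with a single header row; the function names are hypothetical:

```shell
# Hypothetical helpers for `esxcfg-nics -e` output read on stdin.
# Assumes one header row, then whitespace-separated columns in the order
# Name, Driver, ENS Capable, ENS Driven, MAC Address, Description.

# Print the names of NICs whose "ENS Capable" column is True.
list_ens_capable_nics() {
  awk 'NR > 1 && $3 == "True" { print $1 }'
}

# Succeed (exit status 0) if at least one NIC is ENS capable.
has_ens_capable_nic() {
  [ -n "$(list_ens_capable_nics)" ]
}
```

For example, `esxcfg-nics -e | has_ens_capable_nic || echo "no ENS-capable NIC"` flags a host that the management plane would refuse to add to an ENS transport zone.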

- Install the ENS driver.

esxcli software vib install -v file:///<DriverInstallerURL> --no-sig-check

- Alternatively, download the driver to the system and then install it.

wget <DriverInstallerURL>

esxcli software vib install -v file:///<DriverInstallerURL> --no-sig-check
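The download-then-install alternative can be wrapped in one function so that the `file://` URL passed to `esxcli` always points at the file that was just downloaded. A minimal sketch, assuming `wget -O` is available on the host and `/tmp` is an acceptable default download directory; `install_ens_vib`, `run`, and the `DRY_RUN` switch are hypothetical names, and `DRY_RUN=1` prints the commands instead of running them:

```shell
# Hypothetical helper: with DRY_RUN=1, print each command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical wrapper around the download-then-install steps above.
# $1: URL of the ENS driver VIB; $2: local directory (defaults to /tmp, an assumption).
install_ens_vib() {
  local url=$1
  local dest_dir=${2:-/tmp}
  local vib_path="$dest_dir/$(basename "$url")"
  run wget -O "$vib_path" "$url" || return 1
  # Install from the downloaded local file through a file:// URL.
  run esxcli software vib install -v "file://$vib_path" --no-sig-check
}
```

Running `DRY_RUN=1 install_ens_vib <DriverInstallerURL>` first shows the exact commands; the host still needs the reboot described in the next step.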

- Reboot the host to load the driver. Proceed to the next step.

- To unload the driver, follow these steps:

vmkload_mod -u i40en

ps | grep vmkdevmgr

kill -HUP "$(ps | grep vmkdevmgr | awk '{print $1}')"

ps | grep vmkdevmgr

kill -HUP <vmkdevmgrProcessID>

kill -HUP "$(ps | grep vmkdevmgr | awk '{print $1}')"
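The `ps | grep vmkdevmgr` pipeline can also match the `grep` process itself, in which case more than one ID would be passed to `kill`. A minimal sketch of a more defensive lookup, assuming (like the commands above) that the first `ps` column is the ID that `kill` accepts; `vmkdevmgr_pid` is a hypothetical name:

```shell
# Hypothetical helper: read `ps` output on stdin and print the first-column ID
# of the first vmkdevmgr line, skipping lines for grep or awk themselves.
vmkdevmgr_pid() {
  awk '/vmkdevmgr/ && !/grep|awk/ { print $1; exit }'
}
```

With this helper, the signal step becomes `kill -HUP "$(ps | vmkdevmgr_pid)"`.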

- To uninstall the ENS driver, run:

esxcli software vib remove --vibname=i40en-ens --force --no-live-install

- Create an uplink policy.

- Create a transport zone.

Note: For ENS transport zones configured for overlay traffic: For a Microsoft Windows virtual machine running a VMware Tools version earlier than 11.0.0 with a VMXNET3 vNIC, ensure that the MTU is set to 1500. For a Microsoft Windows virtual machine running on vSphere 6.7 U1 with VMware Tools version 11.0.0 or later, ensure that the MTU is set to a value less than 8900. For virtual machines running other supported operating systems, ensure that the MTU is set to a value less than 8900.
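The MTU rules in the note can be expressed as a small check. This is a minimal sketch under stated assumptions: the function name and the `windows`/`other` OS labels are hypothetical, a Windows VM with VMware Tools 11.0.0 or later is assumed to run on vSphere 6.7 U1, and because the note does not say what applies to a Windows VM with older Tools and a non-VMXNET3 vNIC, that case returns 2 ("not covered"):

```shell
# Hypothetical check of a VM's MTU against the note for ENS overlay transport zones.
# Arguments: OS family (windows|other), VMware Tools version (e.g. 10.3.5),
# vNIC type (e.g. vmxnet3), configured MTU.
# Returns 0 if compliant, 1 if not, 2 if the note does not cover the case.
check_vm_mtu() {
  local os=$1 tools=$2 vnic=$3 mtu=$4
  if [ "$os" = windows ]; then
    local tools_major=${tools%%.*}   # "11.0.0 or later" <=> major version >= 11
    if [ "$tools_major" -lt 11 ]; then
      [ "$vnic" = vmxnet3 ] || return 2
      [ "$mtu" -eq 1500 ]            # older Tools with VMXNET3: exactly 1500
      return
    fi
  fi
  # Windows with Tools 11.0.0+ (assumed vSphere 6.7 U1) and other OSes.
  [ "$mtu" -lt 8900 ]
}
```

For example, `check_vm_mtu windows 10.3.5 vmxnet3 9000` fails, while `check_vm_mtu other - vmxnet3 1500` succeeds.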

- Create a host transport node. Configure the N-VDS or VDS switch in Enhanced Datapath mode, and configure its logical cores and NUMA nodes.

Load Balanced Source Teaming Policy Mode Aware of NUMA

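The Load Balanced Source teaming policy mode defined for an enhanced data path N-VDS becomes NUMA aware when the following conditions are met:

- The Latency Sensitivity on VMs is High.

- The network adapter type used is VMXNET3.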

If the NUMA node location of either the VM or the physical NIC is not available, the Load Balanced Source teaming policy does not consider NUMA awareness when aligning VMs and NICs. The teaming policy operates without NUMA awareness in the following cases:

- The LAG uplink is configured with physical links from multiple NUMA nodes.

- The VM has affinity to multiple NUMA nodes.

- The ESXi host failed to define NUMA information for either VM or physical links.
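The conditions above amount to a simple decision. As a conceptual model only (not how ESXi implements it), the hypothetical function below succeeds when the Load Balanced Source teaming policy could align a VM and its NIC by NUMA node; the NUMA node counts are illustrative inputs, and 0 stands for a count the host could not determine:

```shell
# Hypothetical model of the NUMA-awareness conditions for Load Balanced Source.
# Arguments: VM latency sensitivity, vNIC adapter type, number of NUMA nodes the
# VM has affinity to, number of NUMA nodes spanned by the uplink's physical links.
# A count of 0 means the ESXi host could not determine the NUMA information.
lbs_numa_aware() {
  local latency=$1 adapter=$2 vm_nodes=$3 uplink_nodes=$4
  [ "$latency" = High ] || return 1      # Latency Sensitivity must be High
  [ "$adapter" = vmxnet3 ] || return 1   # adapter type must be VMXNET3
  [ "$vm_nodes" -eq 1 ] || return 1      # unknown, or affinity to multiple nodes
  [ "$uplink_nodes" -eq 1 ]              # unknown, or LAG links on multiple nodes
}
```

For instance, `lbs_numa_aware High vmxnet3 1 2` fails because the LAG uplink spans two NUMA nodes.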

ENS Support for Applications Requiring Traffic Reliability

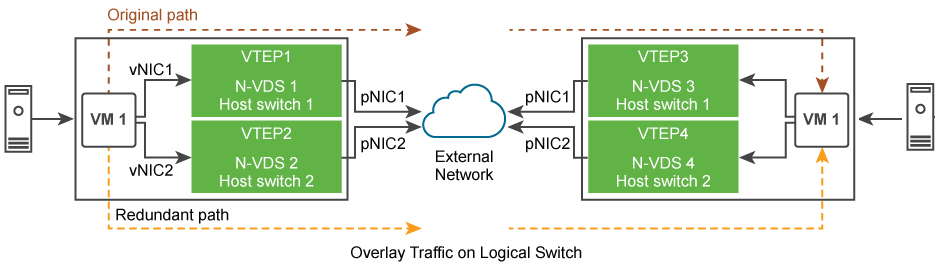

NFV workloads might use the multi-homing and redundancy features provided by Stream Control Transmission Protocol (SCTP) to increase the resiliency and reliability of the traffic running on applications. Multi-homing is the ability to support redundant paths from a source VM to a destination VM.

The number of redundant network paths available for a VM to send traffic to the target VM equals the number of physical NICs available as uplinks for an overlay or a VLAN network. The redundant paths are used when the pNIC pinned to a logical switch fails. The enhanced data path switch provides redundant network paths between the hosts.

The high-level tasks are:

- Prepare host as an NSX-T Data Center transport node.

- Prepare VLAN or Overlay Transport Zone with two N-VDS switches in Enhanced Data Path mode.

- On N-VDS 1, pin the first physical NIC to the switch.

- On N-VDS 2, pin the second physical NIC to the switch.

The N-VDS in enhanced data path mode ensures that if pNIC1 becomes unavailable, traffic from VM 1 is routed through the redundant path: vNIC 1 → tunnel endpoint 2 → pNIC 2 → VM 2.
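The failover described above can be pictured as choosing the first available pNIC in order of preference. This is a conceptual sketch only, not how the N-VDS selects paths; the `select_uplink` name and the `NAME:STATE` argument format are invented for illustration:

```shell
# Conceptual model (not NSX code): print the first pNIC whose state is "up".
# Each argument is NAME:STATE, listed in order of preference, for example
# the pinned pNIC first and the redundant pNIC second.
select_uplink() {
  local entry name state
  for entry in "$@"; do
    name=${entry%%:*}
    state=${entry#*:}
    if [ "$state" = up ]; then
      echo "$name"
      return 0
    fi
  done
  return 1   # no redundant path is left
}
```

With `select_uplink pnic1:down pnic2:up`, the model prints `pnic2`, matching the redundant path through pNIC 2 in the example above.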