Use a PXE server to automate the installation of NSX Edge on a bare metal server, or install NSX Edge on a bare metal server from an ISO file.

The NSX Edge bare metal node is a dedicated physical server that runs a special version of the NSX Edge software. The bare metal NSX Edge node requires a NIC that supports the Data Plane Development Kit (DPDK). VMware maintains a compatibility list of supported vendor NICs. See the Bare Metal Server System Requirements.

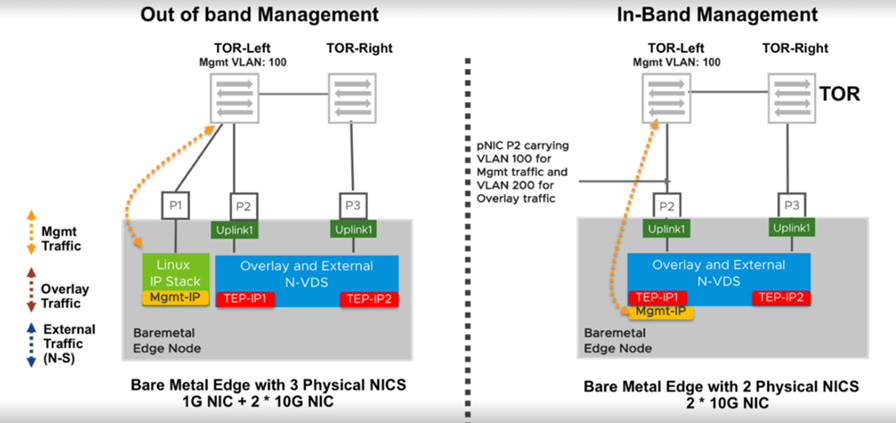

The NSX Edge bare metal node in the diagram is illustrated with a single N-VDS that has two datapath interfaces, and with two dedicated NICs for management plane high availability.

Bare metal NSX Edge nodes can be configured with more than two datapath interfaces, depending on the number of NICs available on the server. Bare metal NSX Edge nodes use pNICs as uplinks that connect directly to the top-of-rack switches. To provide high availability for bare metal edge management, configure two pNICs on the server as an active/standby Linux bond.

Each CPU on the edge is assigned either as a datapath (DP) CPU, which provides routing and stateful services, or as a services CPU, which provides load balancing and VPN services.

For bare metal edge nodes, all cores from the first NUMA node of a multi-NUMA server are assigned to the NSX-T Data Center datapath. If the bare metal server has only one NUMA node, 50% of its cores are assigned to the NSX-T Data Center datapath.
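The assignment rule above can be sketched as a small function. This is a minimal illustration, not the NSX-T implementation: the server is modeled as a hypothetical list of NUMA nodes, each holding core IDs, and for a single-NUMA server "50% of the cores" is assumed to mean the first half, rounded down. Which specific cores the product picks is not stated in the text.

```python
"""Sketch of the datapath core-assignment rule for bare metal NSX Edge nodes.

Assumptions (hypothetical, for illustration only):
- A server is described as a list of NUMA nodes, each a list of core IDs.
- For a single-NUMA server, "50% of the cores" is taken as the first half,
  rounded down; the real product may choose differently.
"""
from typing import List


def datapath_cores(numa_nodes: List[List[int]]) -> List[int]:
    """Return the core IDs that the rule would give to the datapath."""
    if not numa_nodes:
        raise ValueError("server must have at least one NUMA node")
    if len(numa_nodes) > 2:
        # Bare metal NSX Edge nodes support at most two NUMA nodes.
        raise ValueError("more than two NUMA nodes; is sub-NUMA clustering enabled?")
    if len(numa_nodes) > 1:
        # Multi-NUMA: every core on the first NUMA node goes to the datapath.
        return list(numa_nodes[0])
    # Single NUMA node: half of the cores go to the datapath.
    cores = numa_nodes[0]
    return list(cores[: len(cores) // 2])


if __name__ == "__main__":
    two_sockets = [[0, 1, 2, 3], [4, 5, 6, 7]]
    one_socket = [list(range(8))]
    print(datapath_cores(two_sockets))  # [0, 1, 2, 3]
    print(datapath_cores(one_socket))   # [0, 1, 2, 3]
```

In this model the cores that are not returned are the ones left for services CPUs.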

Bare metal NSX Edge nodes support a maximum of two NUMA nodes. Sub-NUMA clustering changes the heap memory layout from two NUMA domains to four NUMA domains. This change limits the size of the heap memory allocated to each socket and causes a shortage of the heap memory that the datapath requires. You must disable sub-NUMA clustering in the BIOS. Any change made to the BIOS requires a reboot.

To check whether sub-NUMA clustering is enabled, log in to the bare metal NSX Edge as root and run lscpu. The output is also captured in the support bundle. If there are more than two sockets per heap, sub-NUMA clustering is enabled and must be disabled.
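The check above can be scripted. This is a minimal sketch under one assumption: it uses the "NUMA node(s):" line that lscpu prints as the signal, treating a count above two as sub-NUMA clustering being enabled, because bare metal NSX Edge nodes support at most two NUMA nodes. It assumes lscpu runs with an English locale, and read_lscpu is a hypothetical helper that is not called by the example.

```python
"""Sketch: flag sub-NUMA clustering from lscpu output.

Assumption: the "NUMA node(s):" line printed by lscpu is used as the signal;
a count above two is treated as sub-NUMA clustering being enabled, since
bare metal NSX Edge nodes support at most two NUMA nodes.
"""
import re
import subprocess
from typing import Optional


def numa_node_count(lscpu_text: str) -> Optional[int]:
    """Return the NUMA node count from lscpu text, or None if absent."""
    match = re.search(r"^NUMA node\(s\):\s*(\d+)\s*$", lscpu_text, re.MULTILINE)
    return int(match.group(1)) if match else None


def sub_numa_suspected(lscpu_text: str) -> bool:
    """True when lscpu reports more NUMA nodes than the edge supports."""
    count = numa_node_count(lscpu_text)
    return count is not None and count > 2


def read_lscpu() -> str:
    """Run lscpu on the edge (as root) and return its output."""
    return subprocess.run(
        ["lscpu"], capture_output=True, text=True, check=True
    ).stdout


if __name__ == "__main__":
    # Sample text stands in for real lscpu output from a two-socket server.
    sample = "Socket(s):           2\nNUMA node(s):        4\n"
    print(sub_numa_suspected(sample))  # True
```

On a live edge, sub_numa_suspected(read_lscpu()) would apply the same check to the real output.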

- When configuring LACP LAG bonds on bare metal NSX Edge nodes, the datapath cores (backing NICs) should belong to the same NUMA node so that load balancing occurs across both devices. If the devices forming the bond span multiple NUMA nodes, the bond transmits packets only through the network device that is local to NUMA node 0. As a result, not all devices are used to balance the traffic sent out of the bond device.

In this case, failover still works: if the device attached to the local NUMA node goes down, the bond sends traffic to the other device even though that device is not NUMA-local.
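The transmit and failover behavior described above can be modeled in a few lines. This is a toy model, not NSX code: BondMember, spans_numa_nodes, tx_devices, and the NIC names nic0 and nic1 are hypothetical, and NUMA node 0 is treated as the local node, as the text states.

```python
"""Toy model of the LACP bond transmit behaviour on a bare metal edge.

Hypothetical: BondMember, spans_numa_nodes, and tx_devices are illustrative
names, not an NSX API. NUMA node 0 is treated as the local node.
"""
from dataclasses import dataclass
from typing import List


@dataclass
class BondMember:
    name: str
    numa_node: int
    up: bool = True


def spans_numa_nodes(members: List[BondMember]) -> bool:
    """True if the bond's member NICs sit on more than one NUMA node."""
    return len({m.numa_node for m in members}) > 1


def tx_devices(members: List[BondMember], local_node: int = 0) -> List[str]:
    """Return the devices the bond would balance transmit traffic across."""
    live = [m for m in members if m.up]
    if not spans_numa_nodes(members):
        # Same NUMA node: traffic is balanced across all live members.
        return [m.name for m in live]
    local = [m.name for m in live if m.numa_node == local_node]
    # Spanning NUMA nodes: only NUMA-local devices transmit; if none are up,
    # the bond fails over to the remaining (remote) devices.
    return local or [m.name for m in live]


if __name__ == "__main__":
    split = [BondMember("nic0", 0), BondMember("nic1", 1)]
    print(tx_devices(split))  # ['nic0']
    split[0].up = False
    print(tx_devices(split))  # ['nic1']
```

Placing both members on the same NUMA node makes tx_devices return both names, which corresponds to the balanced case the text recommends.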

Run the get datapath command to view the NUMA node associated with each datapath interface. Moving the NICs associated with the datapath to a single NUMA node requires physically reconfiguring the server through the BIOS.

When a bare metal Edge node is installed, a dedicated interface is retained for management. This configuration is called out-of-band management. If redundancy is required, two NICs can be used for management plane high availability. Bare metal Edge also supports in-band management, where management traffic shares an interface that is used for overlay or external (N-S) traffic, as shown in the diagram.

Prerequisites

- Disable sub-NUMA clustering by editing the BIOS settings. NSX-T Data Center does not support sub-NUMA clustering. For more details, see the KB article https://kb.vmware.com/s/article/91790.