An uplink is a link from an NSX Edge node or hypervisor node to a top-of-rack switch or an NSX logical switch. A link runs from a physical network interface on an NSX Edge node or hypervisor node to a switch.

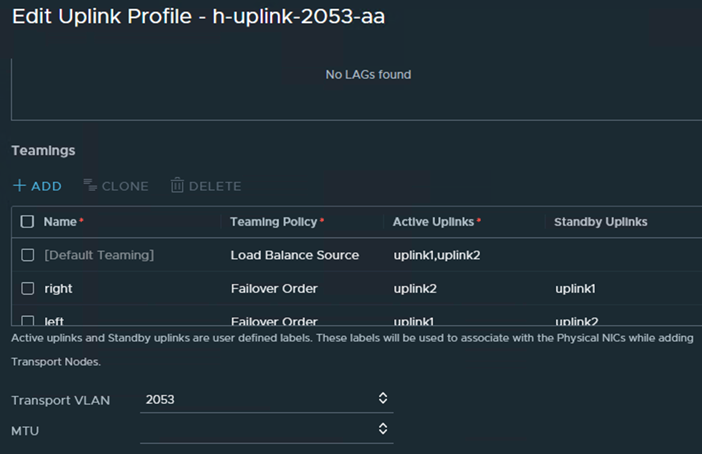

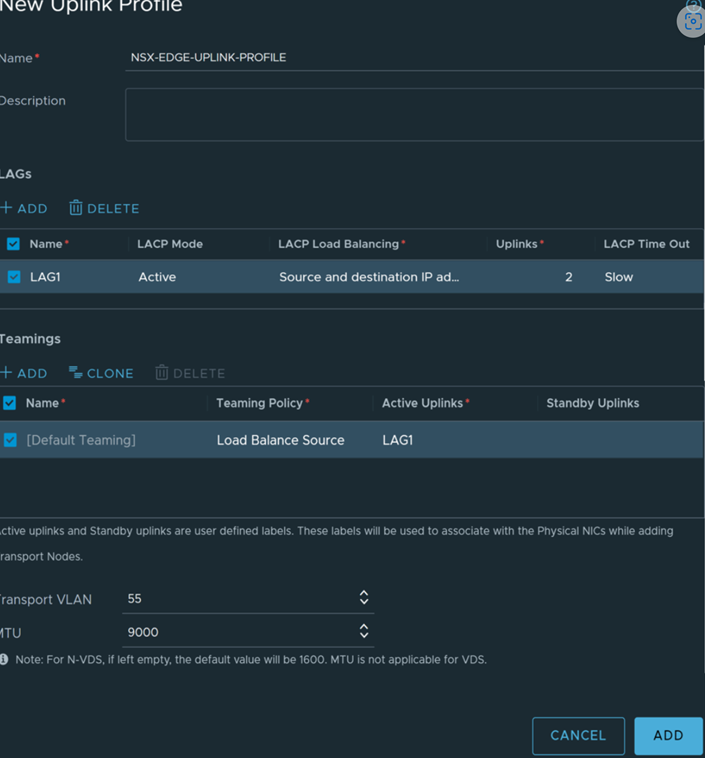

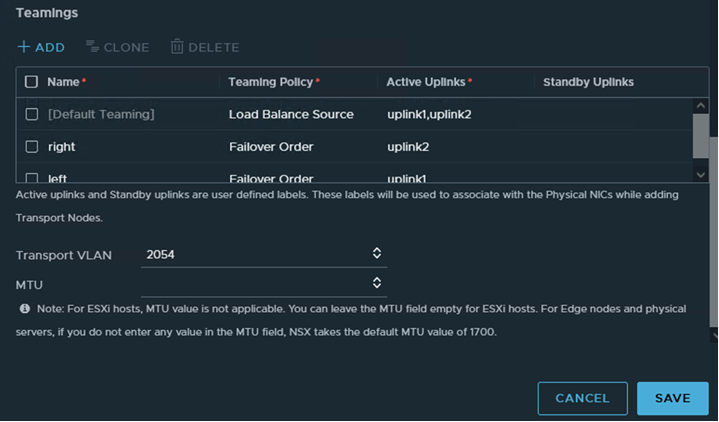

An uplink profile defines policies for the uplinks. The settings defined by an uplink profile can include teaming policies, active and standby links, the transport VLAN ID, and the MTU setting.

Consider the following points when configuring Failover Teaming Policy for VM appliance-based NSX Edge nodes and Bare Metal NSX Edge:

- For uplinks used by a teaming policy, you cannot use the same uplinks in a different uplink profile for a given NSX Edge transport node. Standby uplinks are not supported and must not be configured in the failover teaming policy. If the teaming policy uses more than one uplink (active/standby list), you cannot use the same uplinks in the same or a different uplink profile for a given NSX Edge transport node.

- Supported scenarios:

  - Bare Metal NSX Edge supports a single active uplink and a single standby uplink. It does not support multiple standby uplinks.

  - NSX Edge VMs do not support any standby uplinks, single or multiple.

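Consider the following points when configuring Load Balance Source for VM appliance-based NSX Edge nodes:

- Supports multiple active uplinks.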

- You cannot use a LAG to configure the teaming policy.

- In the Active Uplinks field, enter the uplink labels that will be associated with physical NICs when you prepare transport nodes, for example, uplink1 and uplink2. When you prepare the transport nodes, you associate uplink1 with pnic1 and uplink2 with pnic2.

- You must use the Load Balance Source teaming policy for traffic load balancing.
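The constraints in the lists above lend themselves to a pre-check before you apply a profile. The following Python sketch is a minimal illustration only: the `EdgeUplinkProfile` model, the `teaming_violations` helper, and the `"vm"`/`"bare_metal"` labels are hypothetical and are not part of any NSX API. It checks the standby-uplink limits for each Edge form factor, the no-LAG rule for NSX Edge VMs, and the rule that uplinks in a teaming policy are not reused in another uplink profile on the same NSX Edge transport node.

```python
from dataclasses import dataclass, field


# Hypothetical, simplified model of an uplink profile on one NSX Edge
# transport node. Class and field names are illustrative, not the NSX API.
@dataclass
class EdgeUplinkProfile:
    name: str
    active: list[str]
    standby: list[str] = field(default_factory=list)
    uses_lag: bool = False


def teaming_violations(profile, edge_form, uplinks_in_other_profiles):
    """Return the listed constraints that `profile` breaks.

    edge_form: "vm" or "bare_metal" (assumed labels for the two Edge form factors).
    uplinks_in_other_profiles: uplink labels already used by other uplink
    profiles on the same NSX Edge transport node.
    """
    problems = []
    # NSX Edge VMs: no standby uplinks at all.
    if edge_form == "vm" and profile.standby:
        problems.append("NSX Edge VMs do not support standby uplinks")
    # Bare Metal NSX Edge: at most one standby uplink.
    if edge_form == "bare_metal" and len(profile.standby) > 1:
        problems.append("Bare Metal NSX Edge supports at most one standby uplink")
    # NSX Edge VMs cannot use a LAG in the teaming policy.
    if edge_form == "vm" and profile.uses_lag:
        problems.append("NSX Edge VMs cannot use a LAG in the teaming policy")
    # Uplinks in this teaming policy must not appear in another uplink profile.
    reused = sorted(set(profile.active + profile.standby) & set(uplinks_in_other_profiles))
    if reused:
        problems.append("uplinks reused in another uplink profile: " + ", ".join(reused))
    return problems


vm_profile = EdgeUplinkProfile("edge-vm-uplinks", active=["uplink1", "uplink2"])
print(teaming_violations(vm_profile, "vm", []))  # []

bm_profile = EdgeUplinkProfile("bm-uplinks", active=["uplink1"], standby=["uplink2", "uplink3"])
print(teaming_violations(bm_profile, "bare_metal", ["uplink3"]))
```

The second call reports two problems: a second standby uplink on Bare Metal NSX Edge, and `uplink3` already being used by another uplink profile on the same node.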

Consider the following points when configuring Load Balance Source for Bare Metal NSX Edge:

- Supports multiple active uplinks.

- In the Active Uplinks field, you can enter LAGs or individual uplink labels, for example, LAG1, or uplink1 and uplink2.

- A LAG must have two physical NICs on the same N-VDS.

- The number of LAGs that you can use, and the number of physical NICs in each, depends on the capabilities of the underlying physical environment and the topology of the virtual network. For example, if the physical switch supports up to four ports in an LACP port channel, you can connect up to four physical NICs per host to a LAG.

- In the LACP section, Bare Metal NSX Edge only supports the Source and destination MAC address, IP address and TCP/UDP port load-balancing algorithm.

- If multiple LAG uplinks are configured on a Bare Metal NSX Edge, enter a unique LAG name for each LAG uplink profile.

- If a multi-VTEP uplink profile is used for Bare Metal NSX Edge or NSX Edge VMs, NSX only supports the Load Balance Source teaming policy.

- You must use the Load Balance Source teaming policy for traffic load balancing.
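The LAG rules for Bare Metal NSX Edge can be sketched as a similar pre-check. This is a minimal Python illustration under stated assumptions: the `Lag` model, `lag_violations`, `multi_vtep_policy_ok`, the N-VDS names, and the `fp-ethX` assignments are hypothetical; "two physical NICs" is treated as a minimum, bounded above by the switch's LACP port-channel size from the example; and `max_ports_per_channel` is an input you would take from your physical switch, not a value NSX provides.

```python
from dataclasses import dataclass

# The only LACP load-balancing option the list above allows on Bare Metal NSX Edge.
SUPPORTED_ALGORITHM = "Source and destination MAC address, IP address and TCP/UDP port"


# Hypothetical LAG model; names are illustrative, not the NSX API schema.
@dataclass
class Lag:
    name: str
    pnic_to_nvds: dict[str, str]  # physical NIC -> N-VDS it belongs to
    algorithm: str = SUPPORTED_ALGORITHM


def lag_violations(lags, max_ports_per_channel):
    """Check Bare Metal NSX Edge LAGs against the constraints listed above.

    max_ports_per_channel: assumed input, the most ports the physical switch
    allows in one LACP port channel.
    """
    problems = []
    names = [lag.name for lag in lags]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        problems.append("LAG names must be unique: " + ", ".join(duplicates))
    for lag in lags:
        nics = len(lag.pnic_to_nvds)
        if nics < 2:
            problems.append(f"{lag.name}: a LAG needs two physical NICs")
        if nics > max_ports_per_channel:
            problems.append(f"{lag.name}: exceeds the switch's LACP port-channel size")
        if len(set(lag.pnic_to_nvds.values())) > 1:
            problems.append(f"{lag.name}: physical NICs must be on the same N-VDS")
        if lag.algorithm != SUPPORTED_ALGORITHM:
            problems.append(f"{lag.name}: unsupported LACP load-balancing algorithm")
    return problems


def multi_vtep_policy_ok(teaming_policy, vtep_count):
    """A multi-VTEP profile (more than one VTEP) requires Load Balance Source."""
    return vtep_count <= 1 or teaming_policy == "Load Balance Source"


lag1 = Lag("LAG1", {"fp-eth0": "nvds-1", "fp-eth1": "nvds-1"})
lag2 = Lag("LAG1", {"fp-eth2": "nvds-1", "fp-eth3": "nvds-2"})
print(lag_violations([lag1], max_ports_per_channel=4))  # []
print(lag_violations([lag1, lag2], max_ports_per_channel=4))
print(multi_vtep_policy_ok("Failover Order", vtep_count=2))  # False
```

The second call flags both the repeated name `LAG1` and the second LAG spanning two N-VDS instances.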

Prerequisites

- See NSX Edge network requirements in NSX Edge Installation Requirements.

- Each uplink in the uplink profile must correspond to an up and available physical link on your hypervisor host or on the NSX Edge node.

For example, your hypervisor host has two physical links that are up: vmnic0 and vmnic1. Suppose vmnic0 is used for management and storage networks, while vmnic1 is unused. This might mean that vmnic1 can be used as an NSX uplink, but vmnic0 cannot. To use link teaming, you must have two unused physical links available, such as vmnic1 and vmnic2.
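The vmnic example above comes down to simple bookkeeping: only links that are up and not already carrying other traffic can back an uplink label. A minimal Python sketch, assuming hypothetical helpers `free_physical_links` and `map_uplinks` that pair uplink labels with free links in order (NSX does not assign them this way automatically; you choose the mapping when you prepare the transport node):

```python
def free_physical_links(links_up, links_in_use):
    """Physical links that are up and not already carrying other traffic."""
    return [nic for nic in links_up if nic not in links_in_use]


def map_uplinks(uplink_labels, links_up, links_in_use):
    """Pair each uplink label with a free physical link, in order.

    Raises ValueError when there are not enough free links, for example when
    a two-uplink teaming policy is requested but only one link is unused.
    """
    free = free_physical_links(links_up, links_in_use)
    if len(free) < len(uplink_labels):
        raise ValueError(f"need {len(uplink_labels)} free physical links, found {len(free)}: {free}")
    return dict(zip(uplink_labels, free))


in_use = {"vmnic0"}  # management and storage networks
print(free_physical_links(["vmnic0", "vmnic1"], in_use))     # ['vmnic1']
print(map_uplinks(["uplink1"], ["vmnic0", "vmnic1"], in_use))  # {'uplink1': 'vmnic1'}
print(map_uplinks(["uplink1", "uplink2"], ["vmnic0", "vmnic1", "vmnic2"], in_use))
# {'uplink1': 'vmnic1', 'uplink2': 'vmnic2'}
```

Requesting two uplinks on the two-link host raises `ValueError`, which matches the point that teaming needs two unused links.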

For an NSX Edge, tunnel endpoint and VLAN uplinks can use the same physical link. For example, vmnic0/eth0/em0 might be used for your management network and vmnic1/eth1/em1 might be used for your fp-ethX links.

Procedure

Results

In addition to the UI, you can also view the uplink profiles with the API call `GET /policy/api/v1/infra/host-switch-profiles`.
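A minimal Python sketch of that API call using only the standard library. The manager hostname and credentials are placeholders, HTTP basic authentication is assumed, and the `results`, `resource_type`, and `display_name` fields and the `PolicyUplinkHostSwitchProfile` type name are assumptions about the response format; check them against the NSX API reference for your version. `urlopen` verifies the manager's TLS certificate by default.

```python
import base64
import json
import urllib.request

PATH = "/policy/api/v1/infra/host-switch-profiles"


def build_request(manager, user, password):
    """Build the GET request with an HTTP basic-auth header (assumed auth method)."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{manager}{PATH}",
        method="GET",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


def uplink_profile_names(body):
    """Extract uplink profile names from the JSON list response (assumed field names)."""
    data = json.loads(body)
    return [
        p.get("display_name", p.get("id"))
        for p in data.get("results", [])
        if p.get("resource_type") == "PolicyUplinkHostSwitchProfile"
    ]


def list_uplink_profiles(manager, user, password):
    """Call the API and return the names of the uplink profiles it lists."""
    with urllib.request.urlopen(build_request(manager, user, password)) as resp:
        return uplink_profile_names(resp.read())
```

For example, `list_uplink_profiles("nsx-mgr.example.com", "admin", "<password>")` would return the uplink profile names, where `nsx-mgr.example.com` is a hypothetical manager address. Filtering on `resource_type` keeps other host switch profile types in the same response out of the result.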