This section describes how to install the VCG qcow on KVM.

Pre-Installation Considerations

KVM offers several ways to provide networking to virtual machines. Provision the networking in libvirt before you configure the VM. For the full list of libvirt network configuration options, see the following link:

https://libvirt.org/formatnetwork.html

From the full list of options, the following are recommended by VeloCloud:

- SR-IOV (This mode is required for the VCG to deliver the maximum throughput specified by VeloCloud)

- OpenVSwitch Bridge

Validating SR-IOV (Optional)

If you decide to use SR-IOV, you can quickly verify whether it is activated on your host machine by typing:

lspci | grep -i Ethernet

Verify that you have Virtual Functions:

01:10.0 Ethernet controller: Intel Corporation 82599 Ethernet Controller Virtual Function (rev 01)

If you decide to use SR-IOV mode, SR-IOV must be activated on KVM. For instructions, see Enable SR-IOV on KVM.
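The lspci check above can be scripted so that it returns a count instead of requiring a visual scan. The following is a minimal sketch, not part of the VeloCloud tooling: count_vfs and pf_vf_count are hypothetical helper names, and pf_vf_count assumes the PF driver exposes the standard sriov_numvfs attribute in sysfs.

```shell
# count_vfs: count the "Virtual Function" entries in lspci output read
# from stdin. Prints 0 (and still succeeds) when there are none.
count_vfs() {
    grep -ci 'Virtual Function' || true
}

# pf_vf_count PF_NAME: print how many VFs are currently enabled on a
# physical function, as reported by the kernel in sysfs.
pf_vf_count() {
    cat "/sys/class/net/$1/device/sriov_numvfs"
}

# Typical use on the KVM host (eth1 is a placeholder PF name):
#   lspci | count_vfs
#   pf_vf_count eth1
```

A count of 0 from either helper suggests that SR-IOV is not yet activated on the host.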

Installation Steps

- Copy the QCOW and the Cloud-init files created in the Cloud-Init Creation section to a new empty directory.

- Create the network pools that you are going to use for the device. Below are a sample pool using SR-IOV and a sample pool using OpenVSwitch.

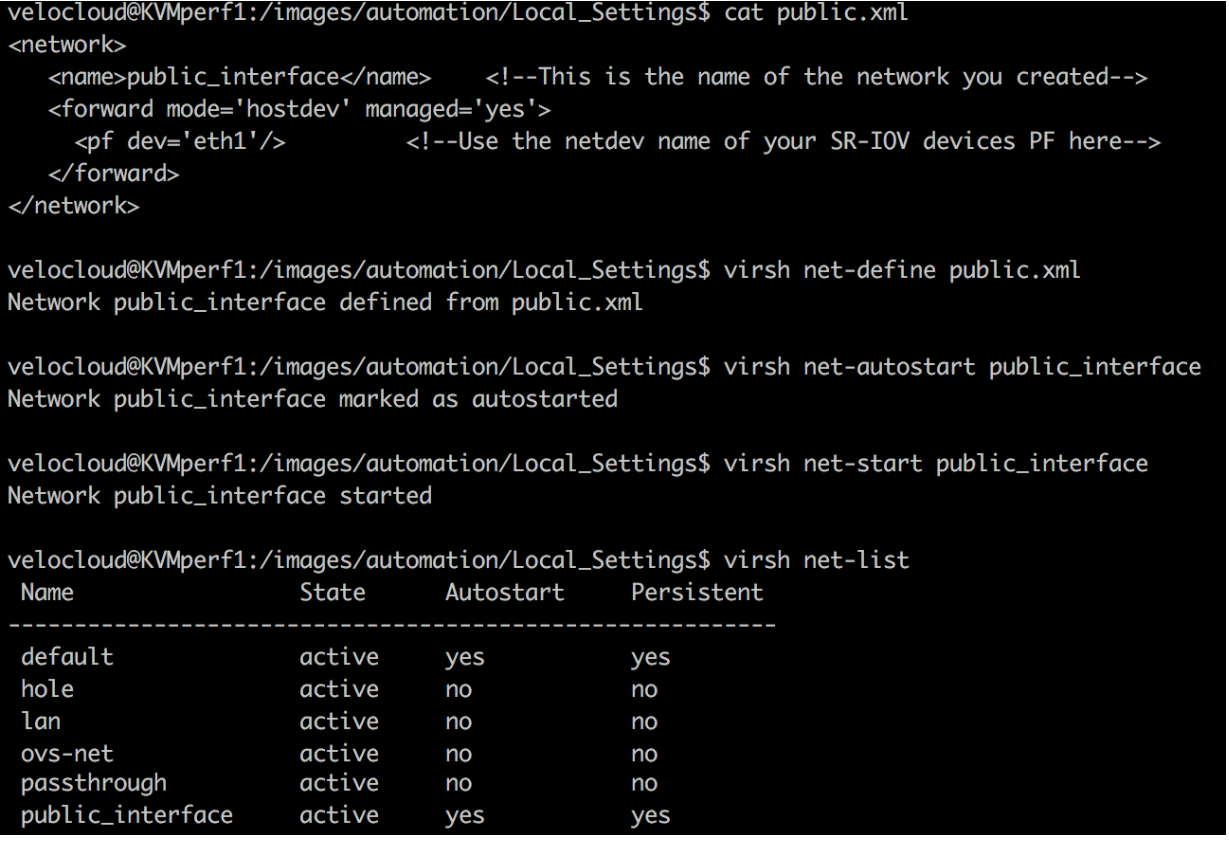

Using SR-IOV

git ./vcg/templates/KVM_NETWORKING_SAMPLES/template_outside_sriov.xml

<network>
  <name>public_interface</name> <!--This is the network name-->
  <forward mode='hostdev' managed='yes'>
    <pf dev='eth1'/> <!--Use the netdev name of your SR-IOV devices PF here-->
    <address type='pci' domain='0x0000' bus='0x06' slot='0x12' function='0x6'/>
    <address type='pci' domain='0x0000' bus='0x06' slot='0x13' function='0x0'/>
    <address type='pci' domain='0x0000' bus='0x06' slot='0x13' function='0x2'/>
  </forward>
</network>

Create a network for inside_interface.

git ./vcg/templates/KVM_NETWORKING_SAMPLES/template_inside_sriov.xml

<network>
  <name>inside_interface</name> <!--This is the network name-->
  <forward mode='hostdev' managed='yes'>
    <pf dev='eth2'/> <!--Use the netdev name of your SR-IOV devices PF here-->
    <address type='pci' domain='0x0000' bus='0x06' slot='0x12' function='0x0'/>
    <address type='pci' domain='0x0000' bus='0x06' slot='0x12' function='0x2'/>
    <address type='pci' domain='0x0000' bus='0x06' slot='0x12' function='0x4'/>
  </forward>
</network>

Using OpenVSwitch

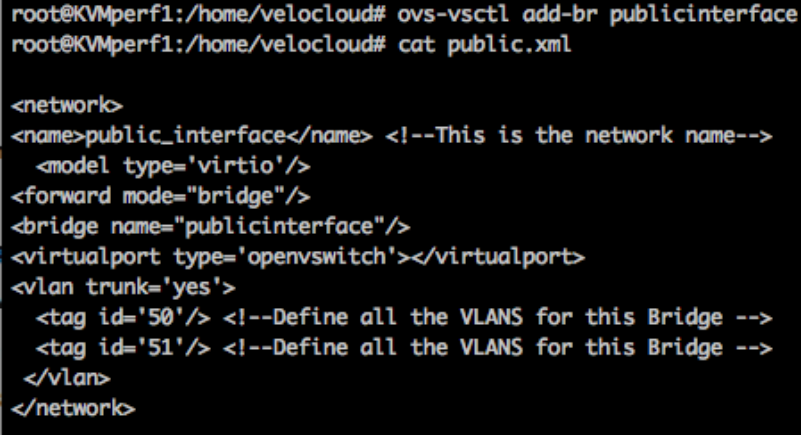

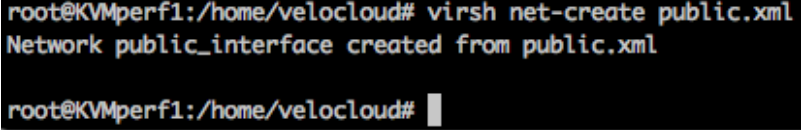

git ./vcg/templates/KVM_NETWORKING_SAMPLES/template_outside_openvswitch.xml

<?xml version="1.0" encoding="UTF-8"?>
<network>
  <name>public_interface</name> <!--This is the network name-->
  <model type="virtio" />
  <forward mode="bridge" />
  <bridge name="publicinterface" />
  <virtualport type="openvswitch" />
  <vlan trunk="yes">
    <tag id="50" /> <!--Define all the VLANS for this Bridge -->
    <tag id="51" /> <!--Define all the VLANS for this Bridge -->
  </vlan>
</network>

Create a network for inside_interface:

git ./vcg/templates/KVM_NETWORKING_SAMPLES/template_inside_openvswitch.xml

<network>
  <name>inside_interface</name> <!--This is the network name-->
  <model type='virtio'/>
  <forward mode="bridge"/>
  <bridge name="insideinterface"/>
  <virtualport type='openvswitch'></virtualport>
  <vlan trunk='yes'>
    <tag id='200'/> <!--Define all the VLANS for this Bridge -->
    <tag id='201'/> <!--Define all the VLANS for this Bridge -->
    <tag id='202'/> <!--Define all the VLANS for this Bridge -->
  </vlan>
</network>
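Once a network XML file such as the samples above has been saved, it still has to be loaded into libvirt. The following is a minimal sketch of one way to do that with the standard virsh net-define, net-start, and net-autostart subcommands. create_network is a hypothetical helper name, the file names in the usage comment are placeholders, and for the OpenVSwitch samples the sketch assumes the bridge named in the bridge element already exists on the host.

```shell
# create_network XML_FILE NET_NAME
# Define a persistent libvirt network from XML_FILE, start it, and mark
# it to start automatically. Stops at the first command that fails.
create_network() {
    virsh net-define "$1" &&
    virsh net-start "$2" &&
    virsh net-autostart "$2"
}

# Example (file names are placeholders for wherever the samples were saved):
#   create_network template_outside_sriov.xml public_interface
#   create_network template_inside_sriov.xml  inside_interface
```

NET_NAME must match the name element inside the XML file, because virsh net-start and net-autostart address the network by name rather than by file.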

- Edit the VM XML. There are multiple ways to create a virtual machine in KVM. This procedure uses the traditional approach: define the VM in an XML file and create it using libvirt. Below is a template that you can use for the XML file. You can create the XML file using:

vi my_vm.xml
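The template that follows marks site-specific values with placeholders of the form #name#, such as #domain_name#. As an alternative to editing them by hand, the substitution can be scripted. The sketch below is one possible approach, not part of the VeloCloud tooling: fill_template is a hypothetical helper, and it assumes the replacement values contain no |, &, or backslash characters, since those are special in a sed substitution.

```shell
# fill_template TEMPLATE_FILE KEY=VALUE...
# Print TEMPLATE_FILE with every "#KEY#" placeholder replaced by VALUE.
# Placeholders that are not named on the command line are left untouched.
fill_template() {
    ft_content="$(cat "$1")"
    shift
    for ft_pair in "$@"; do
        ft_key="${ft_pair%%=*}"    # text before the first "="
        ft_value="${ft_pair#*=}"   # text after the first "="
        ft_content="$(printf '%s\n' "$ft_content" |
            sed "s|#${ft_key}#|${ft_value}|g")"
    done
    printf '%s\n' "$ft_content"
}

# Example (all values are placeholders for your environment):
#   fill_template template.xml domain_name=test_vcg \
#       folder=/tmp/VeloCloudGateway qcow_root=vcg-root > my_vm.xml
```

Running grep '#' on the result is a quick way to spot placeholders that were missed.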

Copy the template below and replace the placeholders enclosed in # characters (for example, #domain_name#) with the values for your environment.

<?xml version="1.0" encoding="UTF-8"?>
<domain type="kvm">
  <name>#domain_name#</name>
  <memory unit="KiB">8388608</memory>
  <currentMemory unit="KiB">8388608</currentMemory>
  <vcpu>8</vcpu>
  <cputune>
    <vcpupin vcpu="0" cpuset="0" />
    <vcpupin vcpu="1" cpuset="1" />
    <vcpupin vcpu="2" cpuset="2" />
    <vcpupin vcpu="3" cpuset="3" />
    <vcpupin vcpu="4" cpuset="4" />
    <vcpupin vcpu="5" cpuset="5" />
    <vcpupin vcpu="6" cpuset="6" />
    <vcpupin vcpu="7" cpuset="7" />
  </cputune>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type>hvm</type>
  </os>
  <features>
    <acpi />
    <apic />
    <pae />
  </features>
  <cpu mode="host-passthrough" />
  <clock offset="utc" />
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/bin/kvm-spice</emulator>
    <disk type="file" device="disk">
      <driver name="qemu" type="qcow2" />
      <source file="#folder#/#qcow_root#" />
      <target dev="hda" bus="ide" />
      <alias name="ide0-0-0" />
      <address type="drive" controller="0" bus="0" target="0" unit="0" />
    </disk>
    <disk type="file" device="cdrom">
      <driver name="qemu" type="raw" />
      <source file="#folder#/#Cloud_INIT_ISO#" />
      <target dev="sdb" bus="sata" />
      <readonly />
      <alias name="sata1-0-0" />
      <address type="drive" controller="1" bus="0" target="0" unit="0" />
    </disk>
    <controller type="usb" index="0">
      <alias name="usb0" />
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x2" />
    </controller>
    <controller type="pci" index="0" model="pci-root">
      <alias name="pci.0" />
    </controller>
    <controller type="ide" index="0">
      <alias name="ide0" />
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x1" />
    </controller>
    <interface type="network">
      <source network="public_interface" />
      <vlan>
        <tag id="#public_vlan#" />
      </vlan>
      <alias name="hostdev1" />
      <address type="pci" domain="0x0000" bus="0x00" slot="0x11" function="0x0" />
    </interface>
    <interface type="network">
      <source network="inside_interface" />
      <alias name="hostdev2" />
      <address type="pci" domain="0x0000" bus="0x00" slot="0x12" function="0x0" />
    </interface>
    <serial type="pty">
      <source path="/dev/pts/3" />
      <target port="0" />
      <alias name="serial0" />
    </serial>
    <console type="pty" tty="/dev/pts/3">
      <source path="/dev/pts/3" />
      <target type="serial" port="0" />
      <alias name="serial0" />
    </console>
    <memballoon model="none" />
  </devices>
  <seclabel type="none" />
</domain>

- Launch the VM.

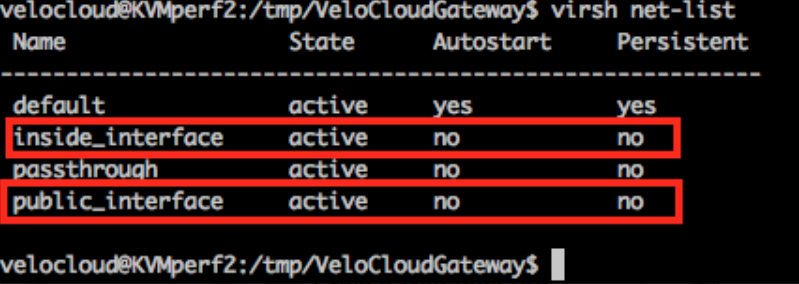

- Verify the basic networks are created and active.

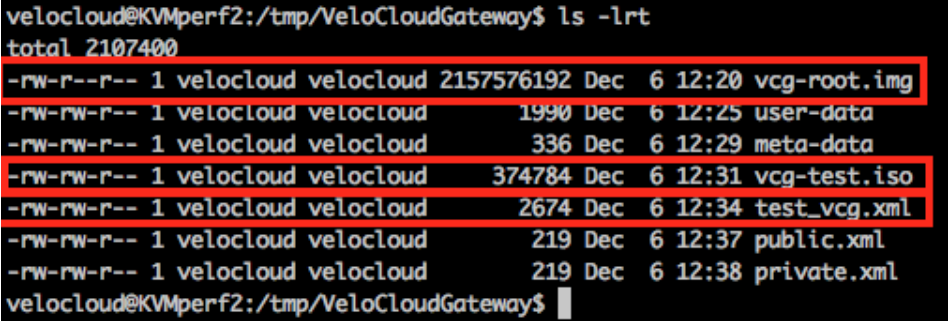
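The network check can also be done from a script. The following is a minimal sketch that reads the Active field printed by virsh net-info. network_is_active is a hypothetical helper name, and the network names in the usage comment are the ones used in the samples in this section.

```shell
# network_is_active NET_NAME
# Succeed only if libvirt knows the network and reports it as active.
network_is_active() {
    virsh net-info "$1" 2>/dev/null |
        awk '$1 == "Active:" { active = ($2 == "yes") } END { exit !active }'
}

# Example:
#   for net in public_interface inside_interface; do
#       network_is_active "$net" || echo "network $net is not active"
#   done
```

For an interactive overview of every defined network, including inactive ones, virsh net-list --all shows the same information in table form.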

Main Files

- vcg-root (qcow file)

- vcg-test.iso (cloud-init)

- test_vcg.xml (XML file that defines the VM)

Define VM

velocloud@KVMperf2:/tmp/VeloCloudGateway$ virsh define test_vcg.xml
Domain test_vcg defined from test_vcg.xml

Set VM to autostart

velocloud@KVMperf2:/tmp/VeloCloudGateway$ virsh autostart test_vcg

Start VM

velocloud@KVMperf2:/tmp/VeloCloudGateway$ virsh start test_vcg

- Console into the VM.

velocloud@KVMperf2$ virsh list
 Id    Name        State
----------------------------------------------------
 25    test_vcg    running

velocloud@KVMperf2$ virsh console 25
Connected to domain test_vcg
Escape character is ^]
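The define, autostart, and start steps can be chained so that a failure in one step stops the rest. The following is a minimal sketch using the standard virsh define, autostart, start, and domstate subcommands. deploy_domain is a hypothetical helper name, and the file and domain names in the usage comment are the ones from the Main Files list.

```shell
# deploy_domain XML_FILE DOMAIN_NAME
# Define the VM from XML_FILE, mark it to start with the host, start it,
# and print its state. Stops at the first command that fails.
deploy_domain() {
    virsh define "$1" &&
    virsh autostart "$2" &&
    virsh start "$2" &&
    virsh domstate "$2"
}

# Example:
#   deploy_domain test_vcg.xml test_vcg
```

DOMAIN_NAME must match the name element in the XML file. If the last line printed is not "running", the console step is unlikely to connect.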

Special Consideration for KVM Host

- Deactivate GRO (Generic Receive Offload) on physical interfaces (to avoid unnecessary re-fragmentation in VCG).

ethtool -K <interface> gro off tx off

- Deactivate CPU C-states (power states affect real-time performance). Typically, this can be done as part of the kernel boot options by appending

processor.max_cstate=1

or by deactivating C-states in the BIOS.

- For production deployment, vCPUs must be pinned to the instance. No oversubscription of the cores is allowed.
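The first two host settings above can be checked after a reboot with a short script. The following is a minimal sketch, not part of the VeloCloud tooling: gro_is_off and cstates_limited are hypothetical helper names. The first reads the feature list printed by ethtool -k (lowercase k queries, uppercase K sets), and the second looks for the boot option in /proc/cmdline. It cannot detect C-states that were deactivated only in the BIOS.

```shell
# gro_is_off IFACE
# Succeed if ethtool reports generic-receive-offload as off on IFACE.
gro_is_off() {
    ethtool -k "$1" | grep -q 'generic-receive-offload: off'
}

# cstates_limited [CMDLINE_FILE]
# Succeed if the kernel was booted with processor.max_cstate=1.
# Reads /proc/cmdline unless another file is given.
cstates_limited() {
    grep -qwF 'processor.max_cstate=1' "${1:-/proc/cmdline}"
}

# Example (eth1 and eth2 are placeholder interface names):
#   for nic in eth1 eth2; do
#       gro_is_off "$nic" || echo "GRO is still on for $nic"
#   done
#   cstates_limited || echo "processor.max_cstate=1 not on the kernel command line"
```

For the pinning requirement, virsh vcpupin followed by the domain name lists the current vCPU to CPU affinity of a running VM.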