This topic describes the Small Footprint VMware Tanzu Application Service for VMs (TAS for VMs) tile for Ops Manager.

The Small Footprint TAS for VMs is a repackaging of the TAS for VMs components into a smaller deployment with fewer virtual machines (VMs). For a description of the limitations that come with a smaller deployment, see Limitations.

Differentiate Small Footprint TAS for VMs and TAS for VMs

A standard TAS for VMs deployment must have at least 13 VMs, but Small Footprint TAS for VMs requires only four.

The following image displays a comparison of the number of VMs deployed by TAS for VMs and Small Footprint TAS for VMs.

Use cases

Use Small Footprint TAS for VMs for smaller Ops Manager deployments on which you intend to host 2500 or fewer apps, as described in Limitations. If you want to use Small Footprint TAS for VMs in a production environment, ensure the limitations described below are not an issue in your use case.

Small Footprint TAS for VMs is compatible with Ops Manager service tiles.

Small Footprint TAS for VMs is also ideal for the following use cases:

- Proof-of-concept installations: Deploy Ops Manager quickly and with a small footprint for evaluation or testing purposes.

- Sandbox installations: Use Small Footprint TAS for VMs as an Ops Manager operator sandbox for tasks such as testing compatibility.

- Service tile R&D: Test a service tile against Small Footprint TAS for VMs instead of a standard TAS for VMs deployment to increase efficiency and reduce cost.

Limitations

Small Footprint TAS for VMs has the following limitations:

- Number of app instances: The tile is not designed to support large numbers of app instances. You cannot scale the number of Compute VMs beyond 10 instances in the Resource Config pane. Small Footprint TAS for VMs is designed to support 2500 or fewer apps.

- Increasing platform capacity: You cannot upgrade the Small Footprint TAS for VMs tile to the standard TAS for VMs tile. If you expect platform usage to increase beyond the capacity of Small Footprint TAS for VMs, VMware recommends using the standard TAS for VMs tile.

- Management plane availability during tile upgrades: You may not be able to perform management plane operations like deploying new apps and accessing APIs for brief periods during tile upgrades. The management plane is located on the Control VM.

- App availability during tile upgrades: If you require availability during your upgrades, you must scale your Compute VMs to a highly available configuration. Ensure sufficient capacity exists to move app instances between Compute VM instances during the upgrade.
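The two numeric limits in the list above (at most 10 Compute VM instances and 2500 or fewer apps) can be checked against a capacity plan before you choose a tile. The following is a minimal sketch in POSIX shell, not part of the product: the function name `check_small_footprint_fit` and its two arguments are hypothetical, and only the two thresholds come from this page.

```shell
# Limits stated in the Limitations list on this page.
MAX_COMPUTE_INSTANCES=10
MAX_APPS=2500

# check_small_footprint_fit COMPUTE_INSTANCES APP_COUNT
# Hypothetical helper: prints "ok" and returns 0 when both planned values
# are within the limits. Otherwise prints each exceeded limit and returns 1.
check_small_footprint_fit() {
  compute_instances=$1
  app_count=$2
  rc=0
  if [ "$compute_instances" -gt "$MAX_COMPUTE_INSTANCES" ]; then
    echo "compute instances: $compute_instances exceeds $MAX_COMPUTE_INSTANCES"
    rc=1
  fi
  if [ "$app_count" -gt "$MAX_APPS" ]; then
    echo "apps: $app_count exceeds $MAX_APPS"
    rc=1
  fi
  if [ "$rc" -eq 0 ]; then
    echo "ok"
  fi
  return "$rc"
}
```

For example, `check_small_footprint_fit 6 1800` reports `ok`. A plan that exceeds either limit points toward the standard TAS for VMs tile, because the Small Footprint tile cannot be upgraded to it later.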

Architecture

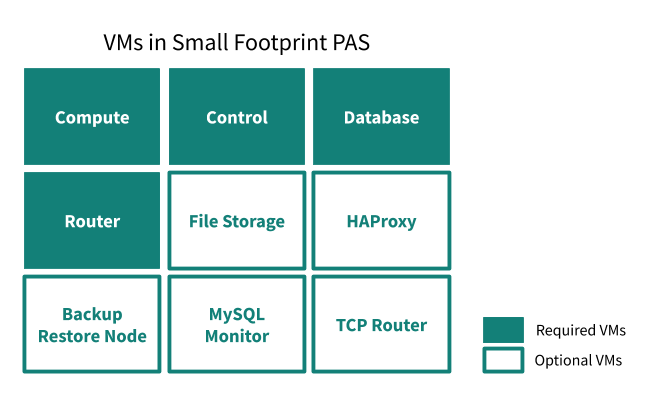

You can deploy Small Footprint TAS for VMs with a minimum of four VMs, as shown in the following image. The configuration shown here assumes that you are using an external blobstore.

To reduce the number of VMs required for Small Footprint TAS for VMs, the Control and Database VMs each colocate jobs that run on separate VMs in standard TAS for VMs. See the next sections for details.

For more information about the components mentioned on this page, see TAS for VMs Components.

Control VM

The Control VM includes the TAS for VMs jobs that handle management plane operations, app lifecycles, logging, and user authorization and authentication. Additionally, all errands run on the Control VM, eliminating the need for a VM for each errand and significantly reducing the time it takes to run errands.

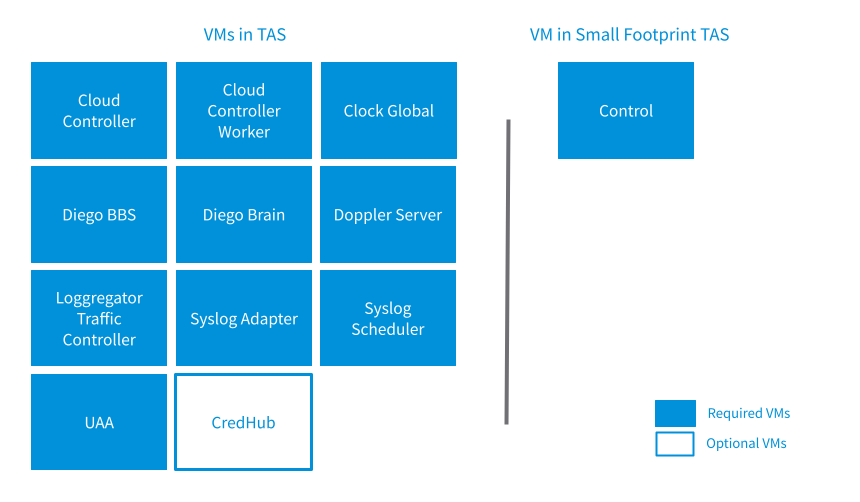

The following image shows all the jobs from TAS for VMs that are co-located on the Control VM in Small Footprint TAS for VMs. Small Footprint TAS for VMs co-locates 11 VMs onto 1 VM called Control. The 11 VMs are Cloud Controller, Cloud Controller Worker, Clock Global, Diego BBS, Diego Brain, Doppler Server, Loggregator Traffic Controller, Syslog Adapter, Syslog Scheduler, UAA, and optionally, CredHub.

Database VM

The Database VM includes the TAS for VMs jobs that handle internal storage and messaging.

The following image shows all the jobs from TAS for VMs that are co-located on the Database VM in Small Footprint TAS for VMs. Small Footprint TAS for VMs condenses the jobs from three VMs onto one VM called Database. The three VMs are NATS, MySQL Proxy, and MySQL Server. The MySQL Proxy and MySQL Server VMs are optional.

Compute VM

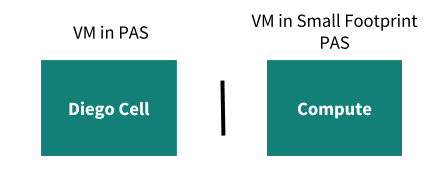

The Compute VM is the same as the Diego Cell VM in TAS for VMs.

Other VMs (unchanged)

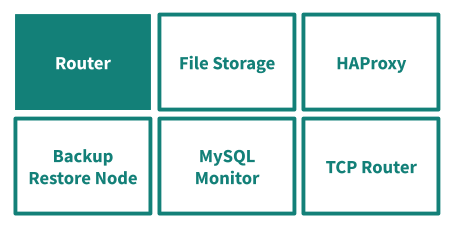

The following image shows the VMs performs the same functions in both versions of the TAS for VMs tile.
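The colocation described in the Control VM, Database VM, and Compute VM sections can be summarized as a lookup from a standard TAS for VMs VM to the Small Footprint VM that hosts its jobs. The following is a minimal sketch in POSIX shell. The function name and the lowercase identifiers are hypothetical labels chosen for this example; they are not guaranteed to match the names that BOSH or the Resource Config pane display.

```shell
# small_footprint_vm_for STANDARD_VM
# Prints the Small Footprint VM that hosts the jobs of the given standard
# TAS for VMs VM. Identifiers are illustrative labels, not BOSH names.
small_footprint_vm_for() {
  case "$1" in
    # The 11 VMs whose jobs are colocated on the Control VM.
    cloud_controller|cloud_controller_worker|clock_global) echo "control" ;;
    diego_bbs|diego_brain|doppler_server) echo "control" ;;
    loggregator_traffic_controller|syslog_adapter|syslog_scheduler) echo "control" ;;
    uaa|credhub) echo "control" ;;
    # The three VMs whose jobs are colocated on the Database VM.
    nats|mysql_proxy|mysql_server) echo "database" ;;
    # The Compute VM is the same as the Diego Cell VM.
    diego_cell) echo "compute" ;;
    # Any other VM is treated as unchanged between the two tiles.
    *) echo "unchanged" ;;
  esac
}
```

A lookup like this is mainly useful when you troubleshoot: `small_footprint_vm_for uaa` prints `control`, which tells you which VM to SSH into to find the UAA logs. Note that the fallback branch also reports `unchanged` for misspelled labels.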

Requirements

The following topics list the minimum resources needed to run Small Footprint TAS for VMs on the public IaaSes that Ops Manager supports:

- Installing Ops Manager on AWS

- Installing Ops Manager on Azure

- Installing Ops Manager on GCP

- Installing Ops Manager on vSphere

Installing Small Footprint TAS for VMs

To install Small Footprint TAS for VMs, see Architecture and Installation Overview and the installation and configuration topics for your IaaS.

Follow the same installation and configuration steps as for TAS for VMs, with these differences:

- Selecting a product in VMware Tanzu Network: When you navigate to the VMware Tanzu Application Service for VMs page on VMware Tanzu Network, select the Small Footprint release.

- Configuring resources:

  - The Resource Config pane in the Small Footprint TAS for VMs tile reflects the differences in VMs discussed in Architecture.

  - Small Footprint TAS for VMs does not default to a highly available configuration like TAS for VMs does. It defaults to a minimum configuration. To make Small Footprint TAS for VMs highly available, scale the VMs to the following instance counts:

    - Compute: 3

    - Control: 2

    - Database: 3

    - Router: 3

- Configuring load balancers: If you are using an SSH load balancer, you must enter its name in the Control VM row of the Resource Config pane. There is no Diego Brain row in Small Footprint TAS for VMs because the Diego Brain is colocated on the Control VM. You can still enter the appropriate load balancers in the Router and TCP Router rows as normal.
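The highly available instance counts listed under Configuring resources (Compute 3, Control 2, Database 3, Router 3) can be compared against a planned Resource Config before you apply changes. The following is a minimal sketch in POSIX shell. The function names are hypothetical, and treating the listed counts as minimums rather than exact values is an assumption of this example.

```shell
# ha_minimum VM_ROW
# Prints the instance count this page lists for a highly available
# configuration. Prints nothing for rows the page gives no count for.
ha_minimum() {
  case "$1" in
    Compute|Database|Router) echo 3 ;;
    Control) echo 2 ;;
  esac
}

# is_highly_available COMPUTE CONTROL DATABASE ROUTER
# Hypothetical helper: returns 0 only if every planned instance count meets
# the listed count, and prints one line for each row that falls short.
is_highly_available() {
  rc=0
  for row in Compute Control Database Router; do
    planned=$1
    shift
    minimum=$(ha_minimum "$row")
    if [ "$planned" -lt "$minimum" ]; then
      echo "$row: $planned planned, $minimum needed"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `is_highly_available 3 1 3 3` prints `Control: 1 planned, 2 needed` and returns a non-zero status, while `is_highly_available 3 2 3 3` prints nothing and succeeds.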

Troubleshooting co-located jobs using logs

To troubleshoot a job that runs on the Control or Database VMs:

- Follow the procedures in Advanced Troubleshooting with the BOSH CLI to log in to the BOSH Director for your deployment.

- Use BOSH to list the VMs in your Small Footprint TAS for VMs deployment. Run:

  `bosh -e BOSH-ENV -d TAS-DEPLOYMENT vms`

  Where:

  * `BOSH-ENV` is the name of your BOSH environment.
  * `TAS-DEPLOYMENT` is the name of your Small Footprint TAS for VMs deployment.

  Note: If you do not know the name of your deployment, you can run `bosh -e BOSH-ENV deployments` to list the deployments for your BOSH Director.

- Use BOSH to SSH into one of the Small Footprint TAS for VMs VMs. Run:

  `bosh -e BOSH-ENV -d TAS-DEPLOYMENT ssh VM-NAME/VM-GUID`

  Where:

  * `BOSH-ENV` is the name of your BOSH environment.
  * `TAS-DEPLOYMENT` is the name of your Small Footprint TAS for VMs deployment.
  * `VM-NAME` is the name of your VM.
  * `VM-GUID` is the GUID of your VM.

  For example, to SSH into the Control VM, run:

  `bosh -e example-env -d example-deployment ssh control/12b1b027-7ffd-43ca-9dc9-7f4ff204d86a`

- To act as a super user, run:

  `sudo su`

- To list the processes running on the VM, run:

  `monit summary`

  The following example output lists the processes running on the Control VM. The processes listed reflect the co-location of jobs as outlined in Architecture.

      control/12b1b027-7ffd-43ca-9dc9-7f4ff204d86a:/var/vcap/bosh_ssh/bosh_f9d2446b18b445e# monit summary
      The Monit daemon 5.2.5 uptime: 5d 21h 10m

      Process 'bbs' running
      Process 'metron_agent' running
      Process 'locket' running
      Process 'route_registrar' running
      Process 'policy-server' running
      Process 'silk-controller' running
      Process 'uaa' running
      Process 'statsd_injector' running
      Process 'cloud_controller_ng' running
      Process 'cloud_controller_worker_local_1' running
      Process 'cloud_controller_worker_local_2' running
      Process 'nginx_cc' running
      Process 'routing-api' running
      Process 'cloud_controller_clock' running
      Process 'cloud_controller_worker_1' running
      Process 'auctioneer' running
      Process 'cc_uploader' running
      Process 'file_server' running
      Process 'nsync_listener' running
      Process 'ssh_proxy' running
      Process 'tps_watcher' running
      Process 'stager' running
      Process 'loggregator_trafficcontroller' running
      Process 'reverse_log_proxy' running
      Process 'adapter' running
      Process 'doppler' running
      Process 'syslog_drain_binder' running
      System 'system_localhost' running

- To access logs, navigate to `/var/vcap/sys/log` by running:

  `cd /var/vcap/sys/log`

- To list the log directories for each process, run:

  `ls`

- Navigate to the directory of the process that you want to view logs for. For example, for the Cloud Controller process, run:

  `cd cloud_controller_ng/`

  From the directory of the process, you can list and view its logs. See the following example output:

      control/12b1b027-7ffd-43ca-9dc9-7f4ff204d86a:/var/vcap/sys/log/cloud_controller_ng# ls
      cloud_controller_ng_ctl.err.log  cloud_controller_ng.log.2.gz  cloud_controller_ng.log.6.gz  drain  pre-start.stdout.log
      cloud_controller_ng_ctl.log  cloud_controller_ng.log.3.gz  cloud_controller_ng.log.7.gz  post-start.stderr.log
      cloud_controller_ng.log  cloud_controller_ng.log.4.gz  cloud_controller_worker_ctl.err.log  post-start.stdout.log
      cloud_controller_ng.log.1.gz  cloud_controller_ng.log.5.gz  cloud_controller_worker_ctl.log  pre-start.stderr.log
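Because the Control VM runs many colocated processes, it can help to narrow the `monit summary` output to the processes that are not running before you decide which log directory to open. The following is a minimal sketch in POSIX shell. The function name `monit_not_running` is hypothetical, and the sketch assumes that each process line has the form shown in the example output above, with the status text following the quoted process name.

```shell
# monit_not_running
# Reads `monit summary` output on stdin and prints every process whose
# status is anything other than "running", one "name: status" per line.
# Header lines and the "System" line are ignored.
monit_not_running() {
  # Strip the single quotes around process names, then filter with awk.
  tr -d "'" | awk '
    $1 == "Process" && $3 != "running" {
      status = $3
      # Rebuild multi-word statuses from the remaining fields.
      for (i = 4; i <= NF; i++) status = status " " $i
      print $2 ": " status
    }'
}

# Usage inside the VM session, after `sudo su`:
#   monit summary | monit_not_running
```

Each name that the function prints corresponds to a process in the summary, so a reasonable next step is to look for a directory with a matching name under `/var/vcap/sys/log`. If every process is running, the function prints nothing.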

Release notes

The Small Footprint TAS for VMs tile releases alongside the TAS for VMs tile. For more information, see VMware Tanzu Application Service for VMs v2.13 Release Notes.