The general requirements for deploying and managing an installation of Tanzu Operations Manager and VMware Tanzu Application Service for VMs (TAS for VMs) are:

- A wildcard DNS record that points to your Gorouter or load balancer. Alternatively, you can use a service such as xip.io, for example, `203.0.113.0.xip.io`.

  - TAS for VMs gives each app its own hostname in your app domain.

  - With a wildcard DNS record, every hostname in your domain resolves to the IP address of your Gorouter or load balancer, and you do not have to configure an A record for each app hostname. For example, if you create a DNS record `*.example.com` pointing to your load balancer or Gorouter, every app deployed to the `example.com` domain resolves to the IP address of your Gorouter or load balancer.

- At least one wildcard TLS certificate that matches the DNS record you set up, for example, `*.example.com`.

- Sufficient IP allocation:

  - One static IP address for one of your Gorouters.

  - One static IP address for each job in the TAS for VMs tile. For a full list, see the Resource Config pane for each tile.

  - One static IP address for each of the following jobs:

    - Consul
    - NATS
    - File Storage
    - MySQL Proxy
    - MySQL Server
    - Backup Restore Node
    - Router
    - MySQL Monitor
    - Diego Brain
    - TCP Router

  - One IP address for each VM instance created by the service.

  - An additional IP address for each compilation worker. The formula for the total number of IPs needed is:

    `IPs needed = static IPs + VM instances + compilation workers`

  VMware recommends that you allocate at least 36 dynamic IP addresses when installing TAS for VMs. BOSH requires additional dynamic IP addresses during installation to compile and deploy VMs, install TAS for VMs, and connect to services.

- One or more NTP servers, if your IaaS does not already provide them.

- (Recommended) A network without DHCP available for deploying the TAS for VMs VMs.

  If you have DHCP, see Troubleshooting Deployment Problems in the Tanzu Operations Manager documentation for information about avoiding issues with your installation.

- (Optional) External storage. When you deploy TAS for VMs, you can select internal file storage or external file storage, either network-accessible or IaaS-provided, as an option in the TAS for VMs tile.

  VMware recommends using external storage whenever possible. For more information about how file storage location affects platform performance and stability during upgrades, see Configure File Storage in Configuring TAS for VMs for Upgrades.

- (Optional) External databases. When you install TAS for VMs, you can select internal or external databases for the BOSH Director and for TAS for VMs. VMware recommends using external databases in production deployments. An external database must be configured to use the UTC timezone.

- (Optional) External user stores. When you deploy TAS for VMs, you can select a SAML user store for Tanzu Operations Manager or a SAML or LDAP user store for TAS for VMs to integrate existing user accounts.

- The most recent version of the Cloud Foundry Command Line Interface (cf CLI).
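The wildcard DNS record and the wildcard TLS certificate in the list above follow slightly different matching rules. The following Python sketch illustrates both under stated simplifications: `resolve` is a toy DNS lookup in which an explicit A record wins and otherwise the nearest `*.<parent>` record answers (it ignores the closest-encloser details of RFC 4592), and `cert_covers` is a simplified RFC 6125-style check in which `*` must be the whole leftmost label and matches exactly one label. The names `APP_DOMAIN`, `ROUTER_IP`, `resolve`, and `cert_covers` are hypothetical and are not part of TAS for VMs.

```python
from typing import Dict, Optional

# Hypothetical values for illustration only: substitute your own app domain
# and the IP address of your Gorouter or load balancer.
APP_DOMAIN = "example.com"
ROUTER_IP = "203.0.113.10"


def resolve(hostname: str, records: Dict[str, str]) -> Optional[str]:
    """Simplified DNS lookup: an explicit A record wins; otherwise the nearest
    '*.<parent>' wildcard record answers. Ignores RFC 4592 closest-encloser rules."""
    name = hostname.lower().rstrip(".")
    if name in records:
        return records[name]
    labels = name.split(".")
    # Walk up the name: for a.b.example.com try *.b.example.com, then *.example.com, ...
    for i in range(1, len(labels)):
        wildcard = "*." + ".".join(labels[i:])
        if wildcard in records:
            return records[wildcard]
    return None


def cert_covers(pattern: str, hostname: str) -> bool:
    """Simplified RFC 6125-style check: '*' must be the entire leftmost label and
    matches exactly one label, so *.example.com does not cover example.com
    or a.b.example.com."""
    p_labels = pattern.lower().rstrip(".").split(".")
    h_labels = hostname.lower().rstrip(".").split(".")
    if len(p_labels) != len(h_labels):
        return False
    if p_labels[0] == "*":
        return h_labels[0] != "" and p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


if __name__ == "__main__":
    # One wildcard record and no per-app A records.
    zone = {"*." + APP_DOMAIN: ROUTER_IP}
    for app in ("my-app", "another-app"):
        host = f"{app}.{APP_DOMAIN}"
        print(host, resolve(host, zone), cert_covers("*." + APP_DOMAIN, host))
```

In this simplified model, a DNS wildcard can still answer for a multi-label name such as `a.b.example.com`, while a `*.example.com` certificate does not cover it, which is one reason to keep app hostnames to a single label under the app domain.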
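The IP allocation formula in the requirements above is simple addition, but it helps to keep the inputs separate when planning a network. The following Python sketch encodes the formula and the recommended minimum of 36 dynamic IP addresses. The function names and the example counts are hypothetical; take the real counts from the Resource Config pane and your own deployment plan.

```python
# Minimum dynamic IP pool that VMware recommends when installing TAS for VMs.
RECOMMENDED_MIN_DYNAMIC_IPS = 36


def total_ips_needed(static_ips: int, vm_instances: int, compilation_workers: int) -> int:
    """IPs needed = static IPs + VM instances + compilation workers."""
    counts = {
        "static_ips": static_ips,
        "vm_instances": vm_instances,
        "compilation_workers": compilation_workers,
    }
    # Reject negative inputs, which usually indicate a planning mistake.
    for name, value in counts.items():
        if value < 0:
            raise ValueError(f"{name} must be non-negative, got {value}")
    return static_ips + vm_instances + compilation_workers


def meets_dynamic_ip_recommendation(dynamic_ips: int) -> bool:
    """True if the planned dynamic IP pool has at least 36 addresses."""
    return dynamic_ips >= RECOMMENDED_MIN_DYNAMIC_IPS


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    needed = total_ips_needed(static_ips=12, vm_instances=20, compilation_workers=4)
    print(needed)  # 36
    print(meets_dynamic_ip_recommendation(30))  # False
```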

Differences in Small Footprint TAS for VMs

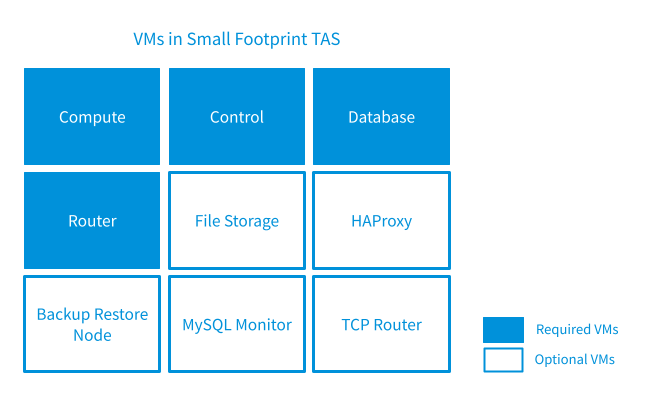

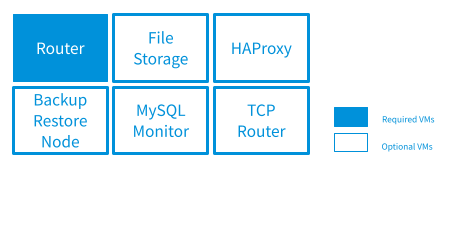

You can deploy Small Footprint TAS for VMs with a minimum of four VMs, as shown in the following image.

Note: The following image assumes that you are using an external blobstore.

To reduce the number of VMs required for Small Footprint TAS for VMs, the Control and Database VMs each co-locate jobs that run on separate VMs in TAS for VMs. For details, see the following sections.

For more information about the components mentioned on this page, see TAS for VMs Components.

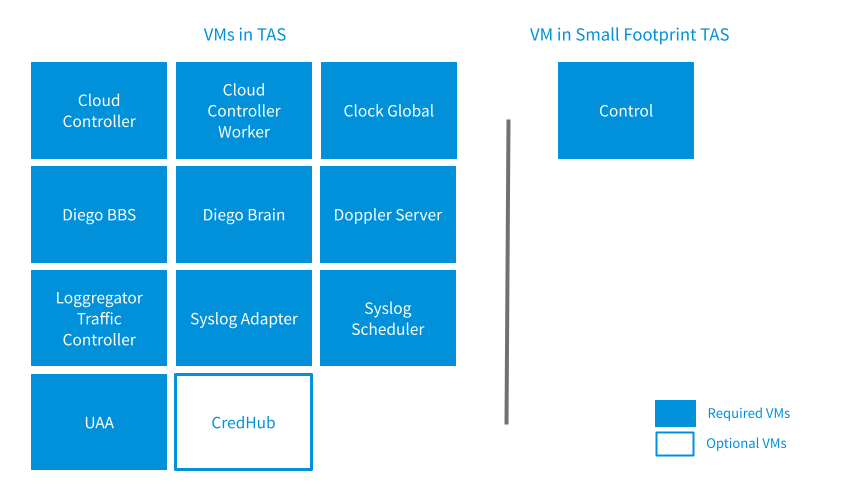

Control VM

The Control VM includes the TAS for VMs jobs that handle management plane operations, app life cycles, logging, and user authorization and authentication. Additionally, all errands run on the Control VM, eliminating the need for a VM for each errand and significantly reducing the time it takes to run errands.

The following image shows all the jobs from TAS for VMs that are co-located on the Control VM in Small Footprint TAS for VMs. Small Footprint TAS for VMs co-locates 11 VMs onto one VM called Control: Cloud Controller, Cloud Controller Worker, Clock Global, Diego BBS, Diego Brain, Doppler Server, Loggregator Traffic Controller, Syslog Adapter, Syslog Scheduler, UAA, and, optionally, CredHub.

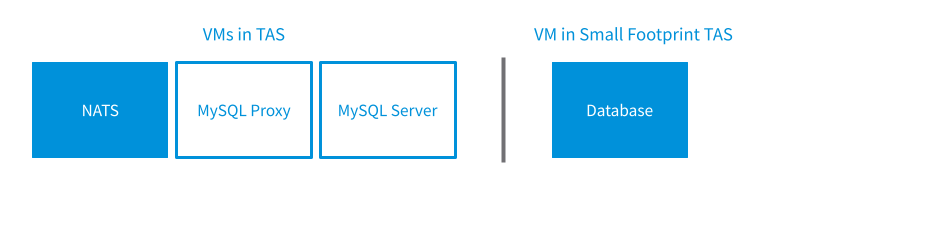

Database VM

The Database VM includes the TAS for VMs jobs that handle internal storage and messaging.

The following image shows all the jobs from TAS for VMs that are co-located on the Database VM in Small Footprint TAS for VMs. Small Footprint TAS for VMs co-locates three VMs onto one VM called Database: NATS, MySQL Proxy, and MySQL Server. The MySQL Proxy and MySQL Server VMs are optional.

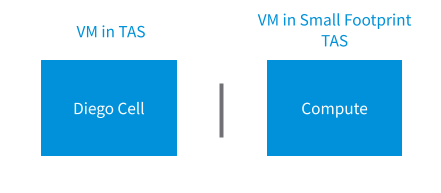

Compute VM

The Compute VM is the same as the Diego Cell VM in TAS for VMs.

Other VMs (unchanged)

The other VMs perform the same functions in both versions of the TAS for VMs tile. The Router, File Storage, Backup Restore Node, MySQL Monitor, and TCP Router VMs are the same in TAS for VMs and Small Footprint TAS for VMs.
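To make the co-location described in this section easy to query, the following Python sketch models the Small Footprint TAS for VMs layout as a plain dictionary and looks up which VM hosts a given job. The job lists come from this page. `MINIMAL_LAYOUT` is an assumption: it takes the four-VM minimum (with an external blobstore, so no File Storage VM) to be Control, Database, Compute, and Router. The names `SMALL_FOOTPRINT_VMS`, `UNCHANGED_VMS`, `MINIMAL_LAYOUT`, and `host_vm` are hypothetical and do not correspond to any TAS for VMs configuration file.

```python
from typing import Dict, List

# Jobs co-located on each Small Footprint TAS for VMs VM, as listed on this page.
SMALL_FOOTPRINT_VMS: Dict[str, List[str]] = {
    "Control": [
        "Cloud Controller", "Cloud Controller Worker", "Clock Global",
        "Diego BBS", "Diego Brain", "Doppler Server",
        "Loggregator Traffic Controller", "Syslog Adapter", "Syslog Scheduler",
        "UAA", "CredHub",  # CredHub is optional
    ],
    "Database": ["NATS", "MySQL Proxy", "MySQL Server"],  # MySQL jobs are optional
    "Compute": ["Diego Cell"],  # same as the Diego Cell VM in TAS for VMs
}

# VMs that are the same in TAS for VMs and Small Footprint TAS for VMs.
UNCHANGED_VMS: List[str] = [
    "Router", "File Storage", "Backup Restore Node", "MySQL Monitor", "TCP Router",
]

# Assumption: the four-VM minimum with an external blobstore.
MINIMAL_LAYOUT: List[str] = ["Control", "Database", "Compute", "Router"]


def host_vm(job: str) -> str:
    """Return the Small Footprint VM that runs the given TAS for VMs job."""
    for vm, jobs in SMALL_FOOTPRINT_VMS.items():
        if job in jobs:
            return vm
    if job in UNCHANGED_VMS:
        return job  # still runs on its own VM, as in TAS for VMs
    raise KeyError(f"job not covered by this sketch: {job}")


if __name__ == "__main__":
    for job in ("UAA", "NATS", "Diego Cell", "TCP Router"):
        print(job, "->", host_vm(job))
```

Keeping the layout as data rather than code makes it easy to compare against the Resource Config pane of an actual deployment.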

Instance number and scaling requirements

By default, TAS for VMs deploys the number of VM instances required to run a high availability (HA) configuration. If you are deploying a test or sandbox TAS for VMs environment that does not require HA, you can scale down the number of instances in your deployment.

For information about the number of instances required to run a minimal, non-HA TAS for VMs deployment, see Scaling TAS for VMs.