Connect to and Examine Workload Clusters

After you have deployed workload clusters, you use the tanzu cluster list and tanzu cluster kubeconfig get commands to obtain the list of running clusters and their credentials. Then, you can connect to the clusters by using kubectl and start working with your clusters.

List Deployed Workload Clusters

To list your workload clusters and the management cluster that manages them, use the tanzu cluster list command.

- To list all of the workload clusters that are running in the default namespace of the management cluster, run the tanzu cluster list command:

  tanzu cluster list

  The output lists all of the workload clusters in the default namespace, including the cluster names, their current status, the numbers of actual and requested control plane and worker nodes, and the Kubernetes version that the cluster is running.

  Clusters can be in the following states:

  - creating: The control plane is being created.
  - createStalled: The process of creating the control plane has stalled.
  - deleting: The cluster is in the process of being deleted.
  - failed: The creation of the control plane has failed.
  - running: The control plane has initialized fully.
  - updating: The cluster is in the process of rolling out an update or is scaling nodes.
  - updateFailed: The cluster update process failed.
  - updateStalled: The cluster update process has stalled.
  - No status: The creation of the cluster has not started yet.

  If a cluster is in a stalled state, check that there is network connectivity to the external registry, make sure that there are sufficient resources on the target platform for the operation to complete, and ensure that DHCP is issuing IPv4 addresses correctly.

- To list clusters in all of the namespaces that you have access to, specify the -A or --all-namespaces option.

  tanzu cluster list --all-namespaces

- To list only those clusters that are running in a given namespace, specify the --namespace option.

  tanzu cluster list --namespace=NAMESPACE

  Where NAMESPACE is the namespace in which the clusters are running.

  On vSphere with Tanzu, DevOps engineers must include a --namespace value when they run tanzu cluster list, to specify a namespace that they can access. See vSphere with Tanzu User Roles and Workflows in the VMware vSphere documentation.

- If you are logged in to a standalone management cluster, you can include the current standalone management cluster in the output of tanzu cluster list by specifying the --include-management-cluster -A options.

  tanzu cluster list --include-management-cluster -A

  In the output, the management cluster is running in the tkg-system namespace and has the management role.

  NAME                 NAMESPACE    STATUS    CONTROLPLANE   WORKERS   KUBERNETES         ROLES        PLAN   TKR
  workload-cluster-1   default      running   1/1            1/1       v1.27.5+vmware.1   <none>       dev    v1.27.5---vmware.1-tkg.1
  workload-cluster-2   default      running   1/1            1/1       v1.27.5+vmware.1   <none>       dev    v1.27.5---vmware.1-tkg.1
  mgmt-cluster         tkg-system   running   1/1            1/1       v1.27.5+vmware.1   management   dev    v1.27.5---vmware.1-tkg.1

- To see all of the management clusters and change the context of the Tanzu CLI to a different management cluster, run the tanzu context use command. See List Management Clusters and Change Context for more information.
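To check a long cluster list at a glance, the table output of tanzu cluster list can be scanned by a script. The following is a minimal sketch. It assumes the whitespace-separated layout shown in the example output above and that every column is populated. The function names and the "needs attention" rule (a stalled or failed state, or fewer actual than requested nodes) are hypothetical illustrations and are not part of the Tanzu CLI.

```python
from __future__ import annotations

# States from the list above that usually call for troubleshooting.
ATTENTION_STATES = {"createStalled", "failed", "updateFailed", "updateStalled"}


def parse_counts(value: str) -> tuple[int, int]:
    """Split an 'actual/requested' node count such as '1/3' into (1, 3)."""
    actual, requested = value.split("/")
    return int(actual), int(requested)


def clusters_needing_attention(table: str) -> list[str]:
    """Return the names of clusters that are stalled, failed, or short of nodes.

    Assumes the whitespace-separated layout of the example output and that
    every column is populated. A cluster with no status yet would shift the
    columns, and this sketch does not handle that case.
    """
    lines = [line for line in table.strip().splitlines() if line.strip()]
    header = lines[0].split()
    flagged = []
    for line in lines[1:]:
        # Map each header name (NAME, STATUS, ...) to the value in this row.
        row = dict(zip(header, line.split()))
        cp_actual, cp_requested = parse_counts(row["CONTROLPLANE"])
        w_actual, w_requested = parse_counts(row["WORKERS"])
        if (
            row["STATUS"] in ATTENTION_STATES
            or cp_actual < cp_requested
            or w_actual < w_requested
        ):
            flagged.append(row["NAME"])
    return flagged
```

You could pass it the stdout of tanzu cluster list --include-management-cluster -A, for example as captured with Python's subprocess.run(..., capture_output=True, text=True). A cluster that is in the updating state while it scales can briefly show fewer actual than requested nodes, so treat a flagged name as a prompt to look, not as a failure. Because a table layout can change between CLI versions, the JSON export described in the next section is a sturdier input for scripts.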

Export Workload Cluster Details to a File

You can export the details of the clusters that you have access to in either JSON or YAML format. You can save the JSON or YAML to a file so that you can use it in scripts to run bulk operations on clusters.

Export as a JSON File

To export cluster details as JSON, run tanzu cluster list with the --output option, specifying json:

tanzu cluster list --output json > clusters.json

The clusters.json file contains the cluster information as JSON:

[
  {
    "name": "workload-cluster-1",
    "namespace": "default",
    "status": "running",
    "plan": "dev",
    "controlplane": "1/1",
    "workers": "1/1",
    "kubernetes": "v1.27.5+vmware.1",
    "roles": [],
    "tkr": "v1.27.5---vmware.1-tkg.1",
    "labels": {
      "cluster.x-k8s.io/cluster-name": "workload-cluster-1",
      "run.tanzu.vmware.com/tkr": "v1.27.5---vmware.1-tkg.1",
      "tkg.tanzu.vmware.com/cluster-name": "workload-cluster-1",
      "topology.cluster.x-k8s.io/owned": ""
    }
  },
  {
    "name": "workload-cluster-2",
    "namespace": "default",
    "status": "running",
    "plan": "dev",
    "controlplane": "1/1",
    "workers": "1/1",
    "kubernetes": "v1.27.5+vmware.1",
    "roles": [],
    "tkr": "v1.27.5---vmware.1-tkg.1",
    "labels": {
      "tanzuKubernetesRelease": "v1.27.5---vmware.1-tkg.1",
      "tkg.tanzu.vmware.com/cluster-name": "workload-cluster-2"
    }
  }
]
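As an example of a bulk operation driven by the exported file, the following minimal sketch reads the JSON shown above and builds one tanzu cluster kubeconfig get command per running cluster. The --namespace and --export-file options are the ones described in Retrieve Workload Cluster kubeconfig. The NAME-kubeconfig output file naming and the function name are hypothetical choices for illustration. The sketch only builds the command strings and does not run them.

```python
from __future__ import annotations

import json
import shlex


def kubeconfig_commands(clusters_json: str, status: str = "running") -> list[str]:
    """Build a 'tanzu cluster kubeconfig get' command for each cluster in `status`."""
    clusters = json.loads(clusters_json)
    commands = []
    for cluster in clusters:
        if cluster["status"] != status:
            continue  # skip clusters that are creating, updating, failed, and so on
        name, namespace = cluster["name"], cluster["namespace"]
        argv = [
            "tanzu", "cluster", "kubeconfig", "get", name,
            f"--namespace={namespace}",
            # Hypothetical naming scheme: one exported file per cluster.
            "--export-file", f"{name}-kubeconfig",
        ]
        # shlex.join quotes any argument that the shell would otherwise split.
        commands.append(shlex.join(argv))
    return commands
```

To use it, read clusters.json (for example, kubeconfig_commands(open("clusters.json").read())) and review the printed commands before running them. Printing the commands first, rather than executing them directly, keeps a bulk change easy to inspect. Add --admin to the argument list only for clusters without identity management.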

Export as a YAML File

To export cluster details as YAML, run tanzu cluster list with the --output option, specifying yaml:

tanzu cluster list --output yaml > clusters.yaml

The clusters.yaml file contains the cluster information as YAML:

- name: workload-cluster-1
  namespace: default
  status: running
  plan: dev
  controlplane: 1/1
  workers: 1/1
  kubernetes: v1.27.5+vmware.1
  roles: []
  tkr: v1.27.5---vmware.1-tkg.1
  labels:
    cluster.x-k8s.io/cluster-name: workload-cluster-1
    run.tanzu.vmware.com/tkr: v1.27.5---vmware.1-tkg.1
    tkg.tanzu.vmware.com/cluster-name: workload-cluster-1
    topology.cluster.x-k8s.io/owned: ""
- name: workload-cluster-2
  namespace: default
  status: running
  plan: dev
  controlplane: 1/1
  workers: 1/1
  kubernetes: v1.27.5+vmware.1
  roles: []
  tkr: v1.27.5---vmware.1-tkg.1
  labels:
    tanzuKubernetesRelease: v1.27.5---vmware.1-tkg.1
    tkg.tanzu.vmware.com/cluster-name: workload-cluster-2

Export Details for Multiple Clusters

For information about how to save the details of multiple management clusters, including their context and kubeconfig files, see Managing Your Management Clusters.

Retrieve Workload Cluster kubeconfig

After you create a workload cluster, you can obtain its context and kubeconfig settings by running the tanzu cluster kubeconfig get command, specifying the name of the cluster. By default, the command adds the cluster’s kubeconfig settings to your current kubeconfig file.

tanzu cluster kubeconfig get my-cluster

Important: If identity management is not configured on the cluster, you must specify the --admin option.

To export kubeconfig to a file, specify the --export-file option.

tanzu cluster kubeconfig get my-cluster --export-file my-cluster-kubeconfig

Important: Deleting your kubeconfig file prevents you from accessing all the clusters that you have deployed from the Tanzu CLI.

If identity management and role-based access control (RBAC) are configured on the cluster, users with standard, non-admin kubeconfig credentials must authenticate with an external identity provider to log in to the cluster.

- For information about identity management and RBAC in Tanzu Kubernetes Grid with a standalone management cluster, see Identity and Access Management.

- For information about identity and access management for TKG 2.x on vSphere with Supervisor, see About Identity and Access Management for TKG 2 Clusters on Supervisor.

Admin kubeconfig

To generate a standalone admin kubeconfig file with embedded credentials, add the --admin option. This kubeconfig file grants its user full access to the cluster’s resources and lets them access the cluster without logging in to an identity provider.

tanzu cluster kubeconfig get my-cluster --admin

You should see the following output:

You can now access the cluster by running 'kubectl config use-context my-cluster-admin@my-cluster'
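To see which contexts a kubeconfig file contains, for example after adding both admin and standard credentials, you can inspect the JSON that kubectl config view -o json prints (add --kubeconfig PATH-TO-FILE to inspect an exported file). The following minimal sketch summarizes each context's cluster and user. The admin_style flag is a hypothetical heuristic that only matches the CLUSTER-admin@CLUSTER naming shown in the output above. It does not inspect the credentials themselves.

```python
from __future__ import annotations

import json


def summarize_contexts(config_json: str) -> dict[str, dict[str, object]]:
    """Map each context in 'kubectl config view -o json' output to its details."""
    config = json.loads(config_json)
    current = config.get("current-context", "")
    summary: dict[str, dict[str, object]] = {}
    # 'contexts' can be missing or null in an empty kubeconfig.
    for entry in config.get("contexts") or []:
        name = entry["name"]
        context = entry.get("context", {})
        cluster = context.get("cluster", "")
        summary[name] = {
            "cluster": cluster,
            "user": context.get("user", ""),
            # Heuristic only: matches the CLUSTER-admin@CLUSTER naming in the
            # example output. It does not look at the credentials.
            "admin_style": name == f"{cluster}-admin@{cluster}",
            "current": name == current,
        }
    return summary
```

By default, kubectl config view redacts embedded credentials, so this inspection does not print secrets.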

Standard kubeconfig

If identity management is configured on the target cluster, you can generate a standard, non-admin kubeconfig that requires the user to authenticate with your external identity provider and grants them access to cluster resources based on their assigned roles. In this case, run tanzu cluster kubeconfig get without the --admin option.

tanzu cluster kubeconfig get CLUSTER-NAME --namespace=NAMESPACE

Where CLUSTER-NAME and NAMESPACE are the cluster and namespace that you are targeting.

You should see the following output:

You can now access the cluster by running 'kubectl config use-context ...'

To save the configuration information in a standalone kubeconfig file, for example, to distribute it to developers, specify the --export-file option. This kubeconfig file requires the user to authenticate with an external identity provider and grants access to cluster resources based on their assigned roles.

tanzu cluster kubeconfig get CLUSTER-NAME --namespace=NAMESPACE --export-file PATH-TO-FILE

Important: By default, unless you specify the --export-file option to save the kubeconfig for a cluster to a specific file, the credentials for all clusters that you deploy from the Tanzu CLI are added to a shared kubeconfig file. If you delete the shared kubeconfig file, you can no longer access any of those clusters.
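Because losing the shared kubeconfig file cuts off access to every cluster, it can be worth copying it before you edit or clean it up. The following minimal sketch makes a timestamped copy. It assumes that the shared file is the one kubectl uses by default, that is, the first path in the KUBECONFIG environment variable, or ~/.kube/config if KUBECONFIG is unset. The function names and the backup naming are hypothetical.

```python
from __future__ import annotations

import os
import shutil
from datetime import datetime
from pathlib import Path


def default_kubeconfig_path() -> Path:
    """Return the first path in $KUBECONFIG, else ~/.kube/config."""
    env = os.environ.get("KUBECONFIG")
    if env:
        # KUBECONFIG may list several files; this sketch backs up only the first.
        return Path(env.split(os.pathsep)[0])
    return Path.home() / ".kube" / "config"


def backup_kubeconfig(source: Path | None = None, backup_dir: Path | None = None) -> Path:
    """Copy the kubeconfig file to a timestamped backup and return its path."""
    source = source or default_kubeconfig_path()
    backup_dir = backup_dir or source.parent
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Second-level timestamp: two backups in the same second overwrite each other.
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_dir / f"{source.name}.backup-{stamp}"
    shutil.copy2(source, target)  # copy2 also preserves the file's metadata
    return target
```

The backup contains the same credentials as the original, so store it with the same file permissions and care.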

Examine the Deployed Cluster

After you have added the credentials to your kubeconfig, you can connect to the cluster by using kubectl.

- Get the available contexts:

  kubectl config get-contexts

- Point kubectl to your cluster. For example:

  kubectl config use-context my-cluster-admin@my-cluster

- Use kubectl to see the status of the nodes in the cluster:

  kubectl get nodes

  For example, if you deployed my-dev-cluster with the dev plan, you see the following output.

  NAME                                       STATUS   ROLES            AGE    VERSION
  my-dev-cluster-control-plane-9qx88-p88cp   Ready    control-plane    2d2h   v1.27.5+vmware.1
  my-dev-cluster-md-0-infra-n62lq-hq4k9      Ready    <none>           2d2h   v1.27.5+vmware.1

  Because networking with Antrea is enabled by default in workload clusters, the nodes in all clusters are in the Ready state without requiring any additional configuration.

- Use kubectl to see the status of the pods running in the cluster:

  kubectl get pods -A

  The following example shows the pods running in the kube-system, secretgen-controller, and tkg-system namespaces of the my-dev-cluster cluster on Azure.

  NAMESPACE              NAME                                                               READY   STATUS    RESTARTS        AGE
  kube-system            antrea-agent-ck7lv                                                 2/2     Running   4 (3h7m ago)    2d2h
  kube-system            antrea-agent-fpq79                                                 2/2     Running   4 (3h7m ago)    2d2h
  kube-system            antrea-controller-66d7978fd7-nkzgb                                 1/1     Running   4 (3h7m ago)    2d2h
  kube-system            coredns-77d74f6759-b68ht                                           1/1     Running   2 (3h7m ago)    2d2h
  kube-system            coredns-77d74f6759-qfp8b                                           1/1     Running   2 (3h7m ago)    2d2h
  kube-system            csi-azuredisk-controller-5957dc576c-42tsd                          6/6     Running   12 (3h7m ago)   2d2h
  kube-system            csi-azuredisk-node-5hmkw                                           3/3     Running   6 (3h7m ago)    2d2h
  kube-system            csi-azuredisk-node-k7fr7                                           3/3     Running   6 (3h7m ago)    2d2h
  kube-system            csi-azurefile-controller-5d48b98756-rmrxp                          5/5     Running   10 (3h7m ago)   2d2h
  kube-system            csi-azurefile-node-brnf2                                           3/3     Running   6 (3h7m ago)    2d2h
  kube-system            csi-azurefile-node-vqghv                                           3/3     Running   6 (3h7m ago)    2d2h
  kube-system            csi-snapshot-controller-7ffbf75bd9-5m64r                           1/1     Running   2 (3h7m ago)    2d2h
  kube-system            etcd-my-dev-cluster-control-plane-9qx88-p88cp                      1/1     Running   2 (3h7m ago)    2d2h
  kube-system            kube-apiserver-my-dev-cluster-control-plane-9qx88-p88cp            1/1     Running   2 (3h7m ago)    2d2h
  kube-system            kube-controller-manager-my-dev-cluster-control-plane-9qx88-p88cp   1/1     Running   2 (3h7m ago)    2d2h
  kube-system            kube-proxy-hqhfx                                                   1/1     Running   2 (3h7m ago)    2d2h
  kube-system            kube-proxy-s6wwf                                                   1/1     Running   2 (3h7m ago)    2d2h
  kube-system            kube-scheduler-my-dev-cluster-control-plane-9qx88-p88cp            1/1     Running   2 (3h7m ago)    2d2h
  kube-system            metrics-server-545546cdfd-qhbxc                                    1/1     Running   2 (3h7m ago)    2d2h
  secretgen-controller   secretgen-controller-7b7cbc6d9d-p6qww                              1/1     Running   2 (3h7m ago)    2d2h
  tkg-system             kapp-controller-868f9469c5-j58pn                                   2/2     Running   5 (3h7m ago)    2d2h
  tkg-system             tanzu-capabilities-controller-manager-b46dcfc7f-7dzd9              1/1     Running   4 (3h7m ago)    2d2h
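For a scripted version of the node and pod checks above, kubectl get nodes -o json and kubectl get pods -A -o json return the same information as structured JSON. The following minimal sketch reports nodes whose Ready condition is not "True", and pods that are not in the Running phase or that have a container that is not ready. The function names are hypothetical. Pods that finished successfully, such as completed Jobs in the Succeeded phase, are also reported, so adjust the rule if your clusters run such workloads.

```python
from __future__ import annotations

import json


def not_ready_nodes(nodes_json: str) -> list[str]:
    """Names of nodes whose Ready condition is not 'True' ('kubectl get nodes -o json')."""
    result = []
    for node in json.loads(nodes_json)["items"]:
        conditions = node.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True" for c in conditions
        )
        if not ready:
            result.append(node["metadata"]["name"])
    return result


def unhealthy_pods(pods_json: str) -> list[str]:
    """'namespace/name' of pods that are not Running or have unready containers."""
    result = []
    for pod in json.loads(pods_json)["items"]:
        status = pod.get("status", {})
        statuses = status.get("containerStatuses", [])
        # A pod with no container statuses yet (for example, Pending) counts as not ready.
        all_ready = bool(statuses) and all(s.get("ready") for s in statuses)
        if status.get("phase") != "Running" or not all_ready:
            meta = pod["metadata"]
            result.append(f"{meta['namespace']}/{meta['name']}")
    return result
```

An empty list from both functions corresponds to the all-Ready, all-Running output shown in the examples above.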

Access a Workload Cluster as a Standard User (Standalone Management Cluster)

This section describes how a standard, non-admin user can log in to a workload cluster deployed by a standalone management cluster. This workflow differs from how a cluster administrator who created the cluster’s management cluster can access the workload cluster.

Prerequisites

Before you perform this task, ensure that:

- You have a Docker application that is running on your system. If your system runs Microsoft Windows, set the Docker mode to Linux and configure Windows Subsystem for Linux.

  - On a Linux machine, use apt instead of snap to install Docker from a CLI.

- You have obtained the following from the cluster administrator:

  - Management cluster endpoint details
  - The name of the workload cluster that you want to access
  - A kubeconfig file for the workload cluster, as explained in Retrieve Workload Cluster kubeconfig

Access the Workload Cluster

-

On the Tanzu CLI, run the following command:

tanzu context create --endpoint https://MANAGEMENT-CLUSTER-CONTROL-PLANE-ENDPOINT:PORT --name SERVER-NAMEWhere:

- By default,

PORTis6443. If the cluster administrator setCLUSTER_API_SERVER_PORTorVSPHERE_CONTROL_PLANE_ENDPOINT_PORTwhen deploying the cluster, use the port number defined in the variable. SERVER-NAMEis the name of your management cluster server.

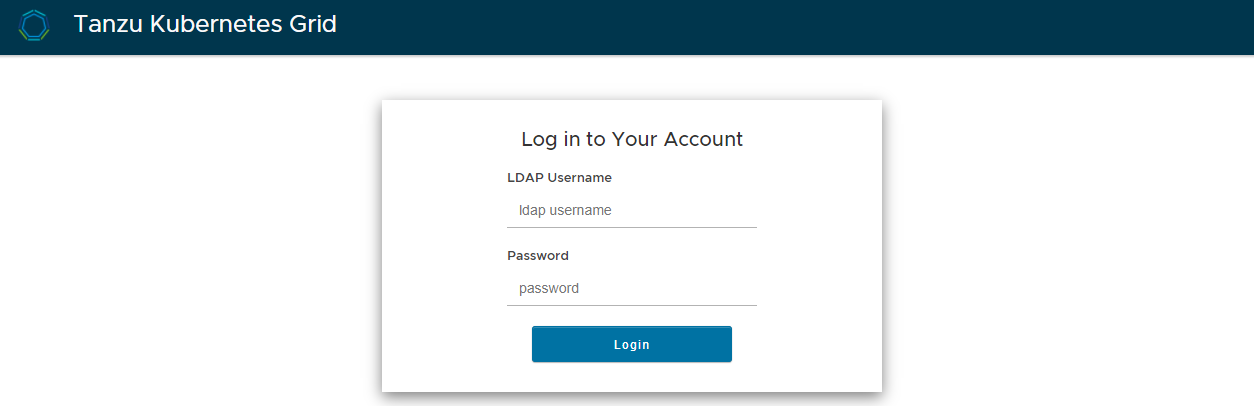

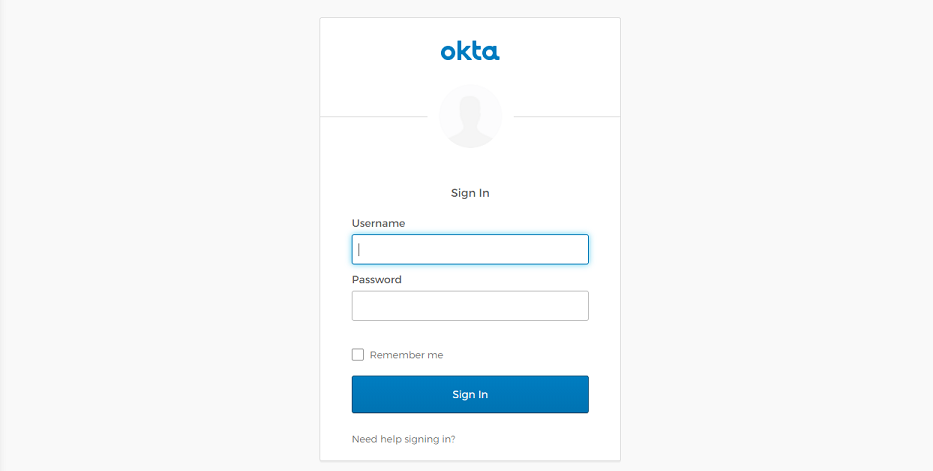

If identity management is configured on the management cluster, the login screen for the identity management provider (LDAP or OIDC) opens in your default browser.

LDAPS:

OIDC:

- By default,

-

Log in to the identity management provider.

-

Run the following command to switch to the workload cluster:

kubectl config use-context CONTEXT --kubeconfig="MY-KUBECONFIG"Where

CONTEXTandMY-KUBECONFIGare the cluster context andkubeconfigfile obtained from the cluster administrator.
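The port rule in the first step can be captured in a small helper if you script the login. The following minimal sketch builds the argument list for tanzu context create from the endpoint host and the server name. It defaults PORT to 6443 unless you pass the value of CLUSTER_API_SERVER_PORT or VSPHERE_CONTROL_PLANE_ENDPOINT_PORT that the cluster administrator gave you. The function name and the port range check are hypothetical additions. The helper does not read those variables from your environment, because they are set in the administrator's cluster configuration.

```python
from __future__ import annotations

# Default API server port, as stated in the first step.
DEFAULT_API_SERVER_PORT = 6443


def context_create_args(endpoint: str, server_name: str, port: int | None = None) -> list[str]:
    """Build the argument list for 'tanzu context create'.

    Pass `port` only if the cluster administrator set CLUSTER_API_SERVER_PORT or
    VSPHERE_CONTROL_PLANE_ENDPOINT_PORT. Otherwise, the default 6443 is used.
    """
    if port is None:
        port = DEFAULT_API_SERVER_PORT
    if not 1 <= port <= 65535:
        raise ValueError(f"invalid port: {port}")
    return [
        "tanzu", "context", "create",
        "--endpoint", f"https://{endpoint}:{port}",
        "--name", server_name,
    ]
```

Passing the list to subprocess.run(args, check=True) starts the same command as the first step, including any browser-based login, so run it interactively rather than in an unattended job.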

In your subsequent logins to the Tanzu CLI, you will see an option to choose your Tanzu Kubernetes Grid environment from a list that pops up after you enter tanzu context use.