This topic describes the Azure reference architecture for VMware Tanzu Operations Manager and any runtime products, including VMware Tanzu Application Service for VMs (TAS for VMs) and VMware Tanzu Kubernetes Grid Integrated Edition (TKGI). This architecture builds on the common base architectures described in Platform Architecture and Planning Overview.

For additional requirements and installation instructions for running Tanzu Operations Manager on Azure, see Installing Tanzu Operations Manager on Azure.

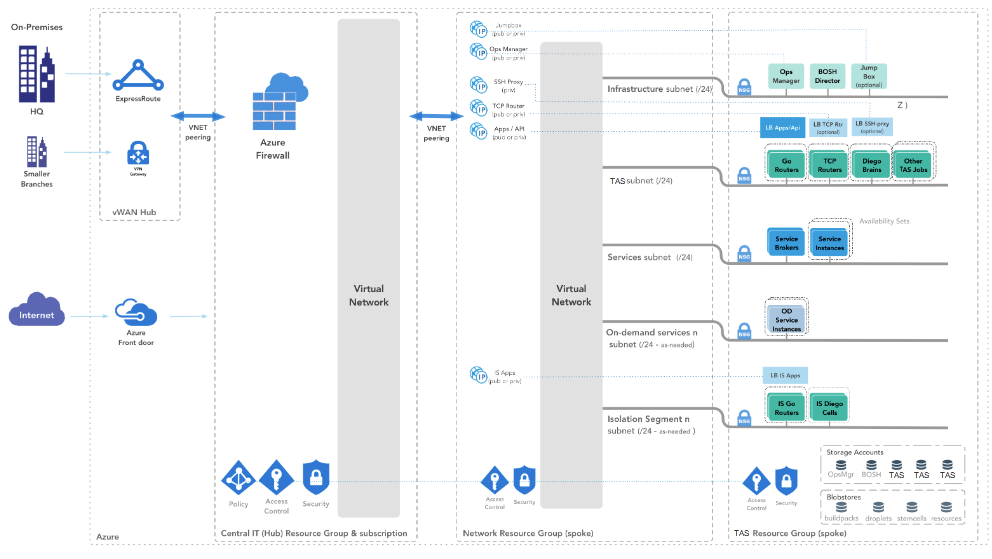

Azure Virtual Data Center (VDC) uses a hub and spoke model for extending on-premises data centers. For more information about VDC, see the Azure Virtual Datacenter e-book.

When you install Tanzu Operations Manager, you can use this model to place resources in resource groups. For example, a Tanzu Operations Manager installation contains:

- Hub:

  - A central IT resource group included in Azure VDC setup. This is where you define firewall, access control, and security policies.

- Spokes:

  - A Network resource group for all network resources.

  - TAS for VMs or TKGI resource groups for all TAS for VMs or TKGI resources.

Reference architecture diagram

The following diagram illustrates the reference architecture for Tanzu Operations Manager on Azure using the hub and spoke model.

The following sections provide guidance about the resources that you use for running Tanzu Operations Manager on Azure.

Networking

The following sections provide guidance about networking resources.

General requirements and recommendations

The following are general requirements and recommendations related to networking when running Tanzu Operations Manager on Azure:

- You must enable virtual network peering between hub and spoke resource groups. For more information, see the Microsoft Azure documentation.

- You must have separate subscriptions for each resource group. This includes:

  - The central network resource group. This is the hub.

  - Each workspace where workloads run. These are the spokes.

- Use ExpressRoute for a dedicated connection from an on-premises data center to VDC. For more information, see the ExpressRoute documentation. As a backup in case the ExpressRoute connection fails, you can use Azure VPN Gateways, which pass encrypted data into VDC over the internet.

- Use a central firewall in the hub resource group and a network appliance to prevent data infiltration and exfiltration.

- You can place network resources, such as DNS and NTP servers, either on-premises or on Azure.
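The subscription requirement above can be checked mechanically before you install. The following is a minimal sketch, not part of any Tanzu or Azure tooling: the `ResourceGroup` type, the `check_layout` function, and all group and subscription names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceGroup:
    name: str
    subscription_id: str  # hypothetical identifier, not a real Azure ID
    role: str             # "hub" or "spoke"


def check_layout(groups):
    """Return a list of problems found in a hub-and-spoke layout."""
    problems = []

    # The model described above has one central hub.
    hubs = [g for g in groups if g.role == "hub"]
    if len(hubs) != 1:
        problems.append(f"expected exactly one hub, found {len(hubs)}")

    # Each resource group must live in its own subscription.
    seen = {}
    for g in groups:
        other = seen.get(g.subscription_id)
        if other is not None:
            problems.append(
                f"{g.name} shares subscription {g.subscription_id} with {other}"
            )
        else:
            seen[g.subscription_id] = g.name
    return problems


layout = [
    ResourceGroup("central-it", "sub-hub", "hub"),
    ResourceGroup("network", "sub-net", "spoke"),
    ResourceGroup("tas", "sub-tas", "spoke"),
]
print(check_layout(layout))  # prints []
```

An empty list means the layout has one hub and no shared subscriptions. The check does not verify virtual network peering, which you configure in Azure itself.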

DNS

For your domains, use a sub-zone of an Azure DNS zone.

Azure DNS supports DNS delegation, which allows sub-level domains to be hosted within Azure. For example, a company domain of example.com has a Tanzu Operations Manager zone in Azure DNS of pcf.example.com.

Azure DNS does not support recursion. To properly configure Azure DNS:

- Create an `NS` record with your registrar that points to the four name servers supplied by your Azure DNS Zone configuration.

- Create the required wildcard `A` records for the TAS for VMs app and system domains, and any other records desired for your Tanzu Operations Manager deployments.

You do not need to make any configuration changes in Tanzu Operations Manager to support Azure DNS.
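As an illustration of the two steps above, the following sketch lists the records for the `pcf.example.com` zone from the earlier example. It only builds a plan as data and calls no Azure API. The `dns_plan` function, the `sys` and `apps` subdomain labels, the name server host names, and the load balancer address are all assumptions made for this example; use the values from your own Azure DNS zone and load balancers.

```python
def dns_plan(zone, name_servers, lb_ip, system_label="sys", apps_label="apps"):
    """Build (type, name, value) tuples for delegating and populating a zone."""
    # NS records are created with the registrar (the parent zone) and
    # delegate the sub-zone to the Azure DNS name servers.
    records = [("NS", zone, ns) for ns in name_servers]

    # Wildcard A records are created inside the Azure DNS zone and point
    # the system and app domains at the load balancer address.
    for label in (system_label, apps_label):
        records.append(("A", f"*.{label}.{zone}", lb_ip))
    return records


# Placeholder name servers; Azure supplies the real four for your zone.
azure_name_servers = [f"ns{i}.azure-dns.example" for i in range(1, 5)]

for record in dns_plan("pcf.example.com", azure_name_servers, "203.0.113.10"):
    print(record)
```

The address `203.0.113.10` comes from a range reserved for documentation and stands in for the IP address of your load balancer.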

Load balancing

Use Standard Azure Load Balancers (ALBs). To allow internet ingress, you must create the ALBs with public IP addresses.

During installation, you configure ALBs for TAS for VMs Gorouters. For more information, see Create Load Balancers in Deploying Tanzu Operations Manager on Azure. When applicable, TCP routers and SSH Proxies also require load balancers.

The TAS for VMs system and app domains must resolve to your ALBs and have either a private or a public IP address assigned. You set these domains in the Domains pane of the TAS for VMs tile.

Networks

Tanzu Operations Manager requires the networks defined in the base reference architectures for TAS for VMs and TKGI. For more information, see the Networks sections in Platform Architecture and Planning Overview.

TAS for VMs on a single resource group

If shared network resources do not exist in an Azure subscription, you can use a single resource group to deploy Tanzu Operations Manager and define network constructs.

High Availability (HA)

Tanzu Operations Manager on Azure supports both availability zones (AZs) and Availability Sets.

Use AZs instead of Availability Sets in all Azure regions where they are available. AZs solve the placement group pinning issue associated with Availability Sets and give you greater control over high-availability configuration across multiple Azure services.

For additional guidance depending on your use case, see the following table:

| Foundation type | Guidance |

|---|---|

| New foundation | If you do not have strict requirements, select AZ-enabled regions. Examples of strict requirements include existing ExpressRoute connections from non-AZ enabled regions to customer data centers and app-specific latency requirements. |

| Existing foundation in AZ-enabled region | Migrate to a new Tanzu Operations Manager foundation so that you can use AZs. Existing foundations that use Availability Sets, especially those using older families of VMs such as DS_v2, risk running out of capacity as they scale. |

| Existing foundation not in AZ-enabled region | Evaluate your requirements to determine the cost/benefit of migrating to an AZ-enabled region. |

For more information about availability modes in Azure, see the following:

Availability zones

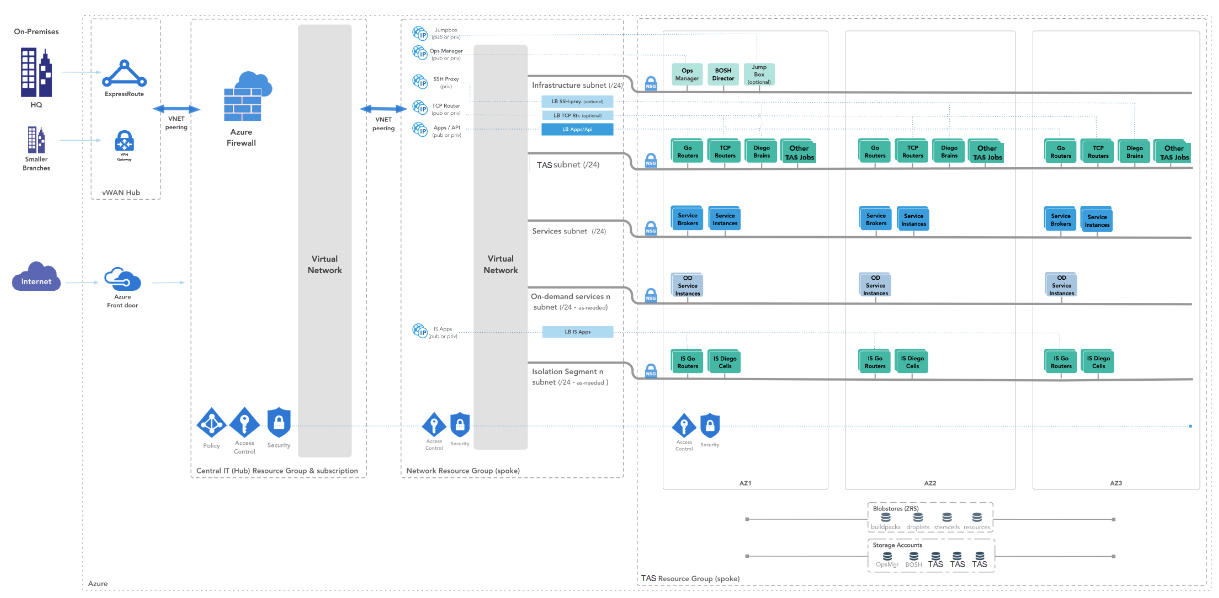

AZs are an Azure primitive that supports HA within a region. They are physically separate data centers in the same region, each with separate power, cooling, and network.

The following diagram illustrates TAS for VMs deployed on Azure using AZs.

AZs have the following advantages:

- VMs and other zonal services deployed to independent zones provide greater physical separation than Fault Domains and avoid the placement group pinning issue associated with Availability Sets.

- Zonal services are effectively in separate Update Domains as updates are rolled out to one zone at a time.

- AZs are visible and configurable. Two zonal services placed in the same logical zone are also placed in the same physical zone. This allows services of different types to take advantage of the highly available qualities that a zone provides.

Availability sets

Availability Sets were the first Azure primitive to support high availability within a region.

Availability Sets are implemented at the hardware rack level, which can cause issues when deploying Tanzu Operations Manager on Azure. For more information, see Placement group pinning issues.

Availability Sets have the following properties:

- They are a logical concept that ensures multiple VMs in the same set are spread evenly across three Fault Domains and either 5 or 20 Update Domains.

- When a hardware issue occurs, VMs on independent Fault Domains are guaranteed not to share power supply or networking and therefore maintain availability of a subset of VMs in the set.

- When updates are rolled out to a cluster, VMs in independent Update Domains are not updated at the same time, ensuring that downtime caused by an update does not affect the availability of the set.
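The even spread across Fault Domains and Update Domains can be pictured with a simplified round-robin model. This is only an illustrative sketch under that assumption; Azure performs the actual assignment, and the `assign_domains` function is hypothetical.

```python
from collections import Counter


def assign_domains(vm_count, fault_domains=3, update_domains=5):
    """Assign each VM a (fault_domain, update_domain) pair, round-robin."""
    return [(i % fault_domains, i % update_domains) for i in range(vm_count)]


placement = assign_domains(6)
print(placement)
# prints [(0, 0), (1, 1), (2, 2), (0, 3), (1, 4), (2, 0)]

# Count VMs per Fault Domain to confirm the even spread.
fd_counts = Counter(fd for fd, _ in placement)
print(dict(fd_counts))  # prints {0: 2, 1: 2, 2: 2}

# If Fault Domain 0 has a hardware failure, the VMs elsewhere keep running.
survivors = [p for p in placement if p[0] != 0]
print(len(survivors))  # prints 4
```

With six VMs and three Fault Domains, losing one Fault Domain leaves four VMs available, which is the subset that the set is designed to preserve.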

Placement group pinning issues

In Availability Set HA mode, VMs for a particular job are pinned to a placement group determined when the first VM in the Availability Set is provisioned. This is because BOSH places VMs for each job in the same Availability Set.

Placement group pinning causes issues when:

- Migrating jobs from one family of VMs to another, if they are backed by different hardware, such as `DS_v2` and `DS_v3`

- Scaling up if there is no capacity remaining in the placement group

Storage

For storage, VMware recommends:

- For the storage account type, use the premium performance tier.

- Configure storage accounts for Zone Redundant Storage (ZRS) in AZ-enabled regions. In non-AZ enabled regions, Locally Redundant Storage (LRS) is sufficient.

- Configure VMs to use managed disks instead of manually-managed VHDs on storage accounts. Managed disks are an Azure feature that handles the correct distribution of VHDs over storage accounts to maintain high IOPS and high availability for the VMs.

- Use five storage accounts. Each foundation on Azure requires five storage accounts: one for BOSH, one for Tanzu Operations Manager, and three for TAS for VMs. Each account comes with a set amount of disk space. Azure storage accounts have an IOPS limit of about 20,000 per account, which generally corresponds to a limit of about 20 BOSH job VMs per account.
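The figure of about 20 VMs per storage account lends itself to a quick capacity estimate. The following back-of-the-envelope sketch assumes that the limit applies to VMs whose disks live in the account and that it is an approximation, not a hard Azure quota; the `accounts_needed` function and the `VMS_PER_ACCOUNT` constant are hypothetical names.

```python
import math

# Approximate ratio taken from the guidance above: about 20,000 IOPS per
# account corresponds to roughly 20 VMs per account.
VMS_PER_ACCOUNT = 20


def accounts_needed(vm_count):
    """Estimate how many storage accounts a given number of VMs requires."""
    if vm_count < 0:
        raise ValueError("vm_count must not be negative")
    return math.ceil(vm_count / VMS_PER_ACCOUNT)


# The three TAS for VMs accounts cover roughly 3 * 20 = 60 VMs.
print(accounts_needed(60))  # prints 3
print(accounts_needed(61))  # prints 4
```

Under these assumptions, a deployment that grows past roughly 60 TAS for VMs instances would exceed what three accounts are expected to sustain.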

SQL Server

The Internal MySQL database provided by TAS for VMs is sufficient for production use.

Blobstore storage account

Use Azure Blob Storage as the external file storage option for Tanzu Operations Manager. This provides redundancy for high-availability deployments of Tanzu Operations Manager and unlimited scaling. Azure Blob Storage provides fully-redundant hot, cold, or archival storage in local, regional, or global offerings.

Tanzu Operations Manager requires a bucket for the BOSH blobstore.

TAS for VMs requires the following buckets:

- Buildpacks

- Droplets

- Packages

- Resources

These buckets require an associated role for read-write access.

Identity management

Use unique managed identities to deploy Tanzu Operations Manager, BOSH Director, Microsoft Azure Service Broker for VMware Tanzu, and any other tiles that require independent credentials. Ensure that each managed identity is scoped to the least privilege necessary to operate.

Azure uses managed identities to handle service authorization. For more information, see the Microsoft Azure documentation.