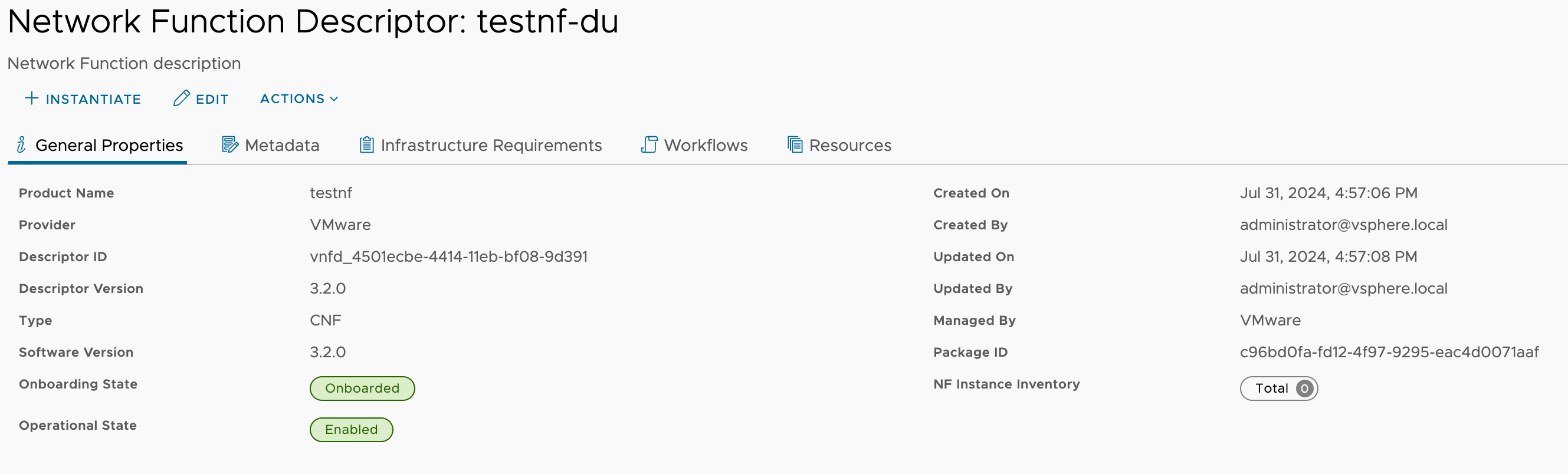

This topic describes how to instantiate the testnf-du CNF.

Once the prerequisites are satisfied, log in to the TCA GUI and follow the steps below to instantiate the testnf-du CNF. For the architecture of the testnf-du-flexran CNF, see the Overview page.

Prerequisites

(Optional) Prepare a values.yaml file for the testnf-du Helm chart.

If you want to enable ipvlan and macvlan for the testnf pod, set the following annotation.

    annotations:
      k8s.v1.cni.cncf.io/networks: ipvlan,macvlan,sriov-pass

By default, NetworkAttachmentDefinitions (NADs) that use host-local IPAM are included. To override the defaults, specify the nads section explicitly, as follows.

    nads:
      - apiVersion: "k8s.cni.cncf.io/v1"
        kind: NetworkAttachmentDefinition
        metadata:
          name: ipvlan
          annotations:
            k8s.v1.cni.cncf.io/resourceName: vlan1
        spec:
          config: '{
            "cniVersion": "0.3.0",
            "name": "ipvlan",
            "plugins": [
              {
                "type": "ipvlan",
                "master": "vlan1",
                "ipam": {
                  "type": "host-local",
                  "ranges": [
                    [{
                      "subnet": "192.167.1.0/24",
                      "rangeStart": "192.167.1.1",
                      "rangeEnd": "192.167.1.255"
                    }]
                  ]
                }
              }
            ]
            }'
      - apiVersion: "k8s.cni.cncf.io/v1"
        kind: NetworkAttachmentDefinition
        metadata:
          name: macvlan
          annotations:
            k8s.v1.cni.cncf.io/resourceName: vlan2
        spec:
          config: '{
            "cniVersion": "0.3.0",
            "name": "macvlan",
            "plugins": [
              {
                "type": "macvlan",
                "master": "vlan2",
                "ipam": {
                  "type": "host-local",
                  "ranges": [
                    [{
                      "subnet": "192.167.2.0/24",
                      "rangeStart": "192.167.2.1",
                      "rangeEnd": "192.167.2.255"
                    }]
                  ]
                }
              }
            ]
            }'
      - apiVersion: "k8s.cni.cncf.io/v1"
        kind: NetworkAttachmentDefinition
        metadata:
          name: sriov-pass
          annotations:
            k8s.v1.cni.cncf.io/resourceName: intel.com/sriovpass
        spec:
          config: '{
            "cniVersion": "0.3.0",
            "name": "sriov-pass-network",
            "plugins": [
              {
                "type": "host-device",
                "ipam": {
                  "type": "host-local",
                  "ranges": [
                    [{
                      "subnet": "192.167.3.0/24",
                      "rangeStart": "192.167.3.1",
                      "rangeEnd": "192.167.3.255"
                    }]
                  ]
                }
              },
              {
                "type": "sbr"
              },
              {
                "type": "tuning",
                "sysctl": {
                  "net.core.somaxconn": "500"
                },
                "promisc": true
              }
            ]
            }'
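When you override the nads section, each network named in the k8s.v1.cni.cncf.io/networks annotation should have a NAD with the same metadata name. The following is a minimal sketch of such a consistency check. It assumes the annotation and the nads section live in the same values file, and it uses a trimmed sample file (names only) created in a temporary location purely for illustration; the variable names are hypothetical.

```shell
#!/bin/sh
# Sketch: check that each network in the pod annotation has a NAD entry.
# The sample file below is a trimmed, hypothetical stand-in for values.yaml.
VALUES_FILE=$(mktemp)
cat > "$VALUES_FILE" <<'EOF'
annotations:
  k8s.v1.cni.cncf.io/networks: ipvlan,macvlan,sriov-pass
nads:
  - metadata:
      name: ipvlan
  - metadata:
      name: macvlan
  - metadata:
      name: sriov-pass
EOF

# Extract the comma-separated network list from the annotation line.
networks=$(sed -n 's|^ *k8s.v1.cni.cncf.io/networks: *||p' "$VALUES_FILE")

missing=""
for net in $(printf '%s' "$networks" | tr ',' ' '); do
  # A NAD entry is recognised here by its "name: <net>" line.
  if ! grep -q "^ *name: ${net}\$" "$VALUES_FILE"; then
    missing="$missing $net"
  fi
done

if [ -z "$missing" ]; then
  echo "all annotated networks have a NAD entry"
else
  echo "networks without a NAD entry:$missing"
fi
```

To run the check against your own file, point VALUES_FILE at it instead of generating the sample. The check does not apply when you rely on the default NADs and omit the nads section.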

If multiple node pools have the same configuration, you might need to specify nodeSelector explicitly, as follows:

    nodeSelector:
      key1: value1
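Before setting nodeSelector, it can help to confirm which nodes carry the label. The following sketch lists the matching nodes with kubectl. The key1 and value1 names are the placeholders from this topic, and the script assumes that kubectl is configured for the workload cluster; if kubectl is not installed, it only prints the command it would run.

```shell
#!/bin/sh
# Sketch: list the nodes that a nodeSelector of key1=value1 would match.
SELECTOR_KEY=key1       # placeholder label key from this topic
SELECTOR_VALUE=value1   # placeholder label value from this topic
selector="${SELECTOR_KEY}=${SELECTOR_VALUE}"

if command -v kubectl >/dev/null 2>&1; then
  # -l filters by label; -o name prints one node/<name> line per match.
  kubectl get nodes -l "$selector" -o name \
    || echo "kubectl could not reach the cluster"
else
  echo "kubectl not found; would run: kubectl get nodes -l $selector -o name"
fi
```

If the command lists nodes from more than one node pool, choose a label that is unique to the pool you want.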

To deploy in an air-gapped environment, specify the correct image path, as follows.

    image:
      repository: airgap.fqdn.com/path
      # name: testnf-du-lite

(Optional) Prepare a values.yaml file for the testnf-verify Helm chart.

To deploy in an air-gapped environment, specify the correct image path, as follows.

    image:
      repository: airgap.fqdn.com/path
      # name: testnf-verify
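Because both charts take the same kind of image override, you can generate the two files from a single registry path. This is a rough sketch only: the output file names and the temporary directory are hypothetical, and airgap.fqdn.com/path is the placeholder used in this topic, not a real registry.

```shell
#!/bin/sh
# Sketch: write an air-gap image override for each chart from one registry path.
REGISTRY_PATH="airgap.fqdn.com/path"   # placeholder; replace with your registry
OUT_DIR=$(mktemp -d)                   # hypothetical output location

for chart in testnf-du testnf-verify; do
  # One small values file per chart, containing only the image override.
  cat > "$OUT_DIR/${chart}-values.yaml" <<EOF
image:
  repository: $REGISTRY_PATH
EOF
done

ls "$OUT_DIR"
```

Keeping the registry path in one variable avoids the two files drifting apart when the registry changes.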

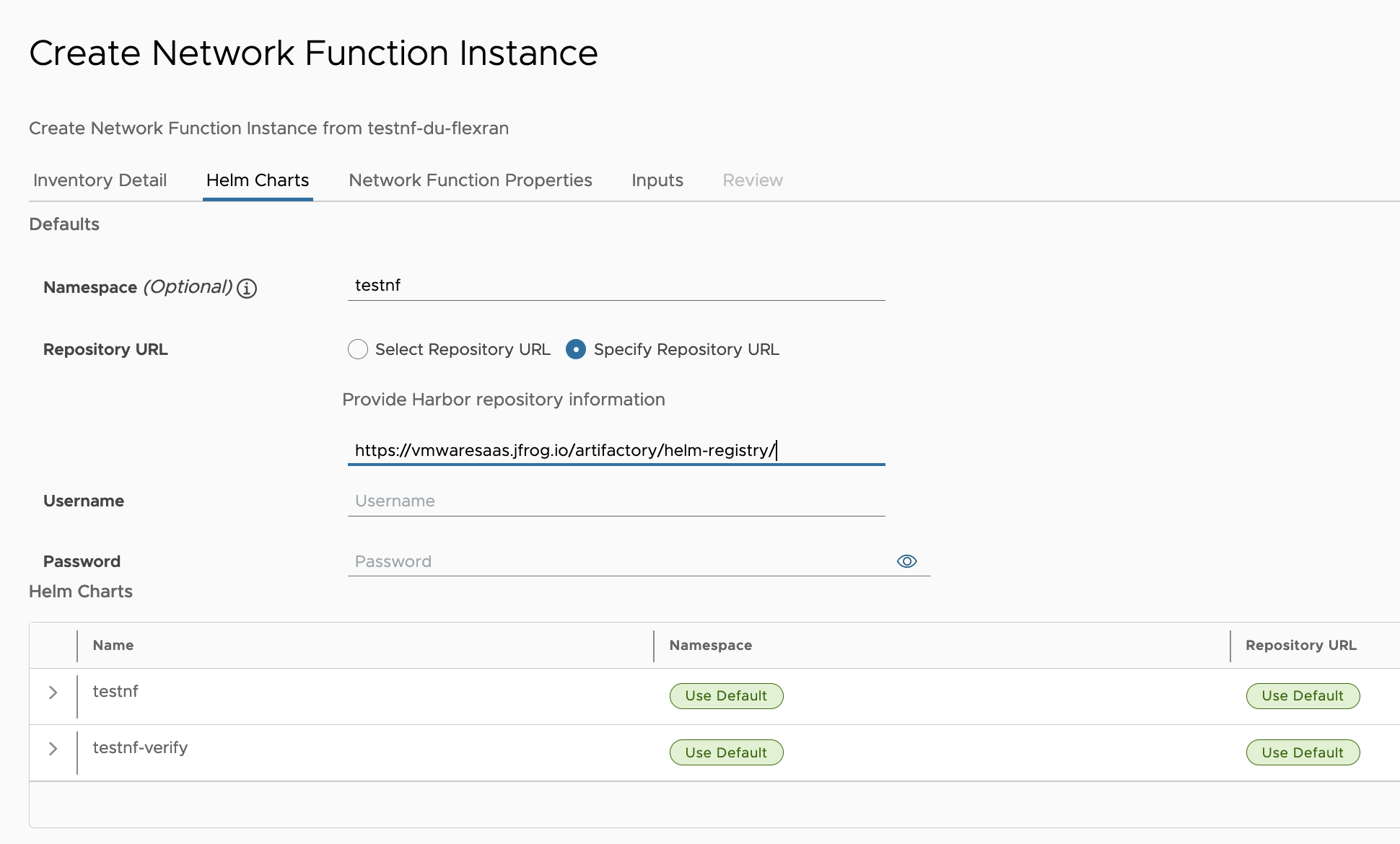

(Optional) Prepare Harbor

There are two ways to specify the Helm chart location. To simplify the configuration, you can use https://vmwaresaas.jfrog.io/artifactory/helm-registry/ as the default URL. However, if you want to host the testnf-du Helm chart in your own Harbor registry, for example for an air-gapped environment, you need to prepare Harbor, upload the testnf-du Helm chart to Harbor, associate Harbor with the workload cluster, and consume the Harbor repository during network function instantiation.
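If you plan to upload the chart to your own Harbor, you first need a local copy of it. The following sketch downloads the chart from the default URL with the helm CLI. It assumes that the chart is published under the name testnf-du and that the machine can reach the default URL; neither is confirmed by this topic. The upload step is not shown because it depends on how your Harbor project is set up.

```shell
#!/bin/sh
# Sketch: download the chart archive from the default repository URL.
CHART_REPO_URL="https://vmwaresaas.jfrog.io/artifactory/helm-registry/"
CHART_NAME="testnf-du"     # assumed chart name
DEST_DIR=$(mktemp -d)      # hypothetical download location

if command -v helm >/dev/null 2>&1; then
  # --repo locates the chart in the given repository without "helm repo add".
  helm pull "$CHART_NAME" --repo "$CHART_REPO_URL" --destination "$DEST_DIR" \
    || echo "helm pull failed; check the chart name and network access"
else
  echo "helm not found; would run: helm pull $CHART_NAME --repo $CHART_REPO_URL"
fi

ls "$DEST_DIR"
```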

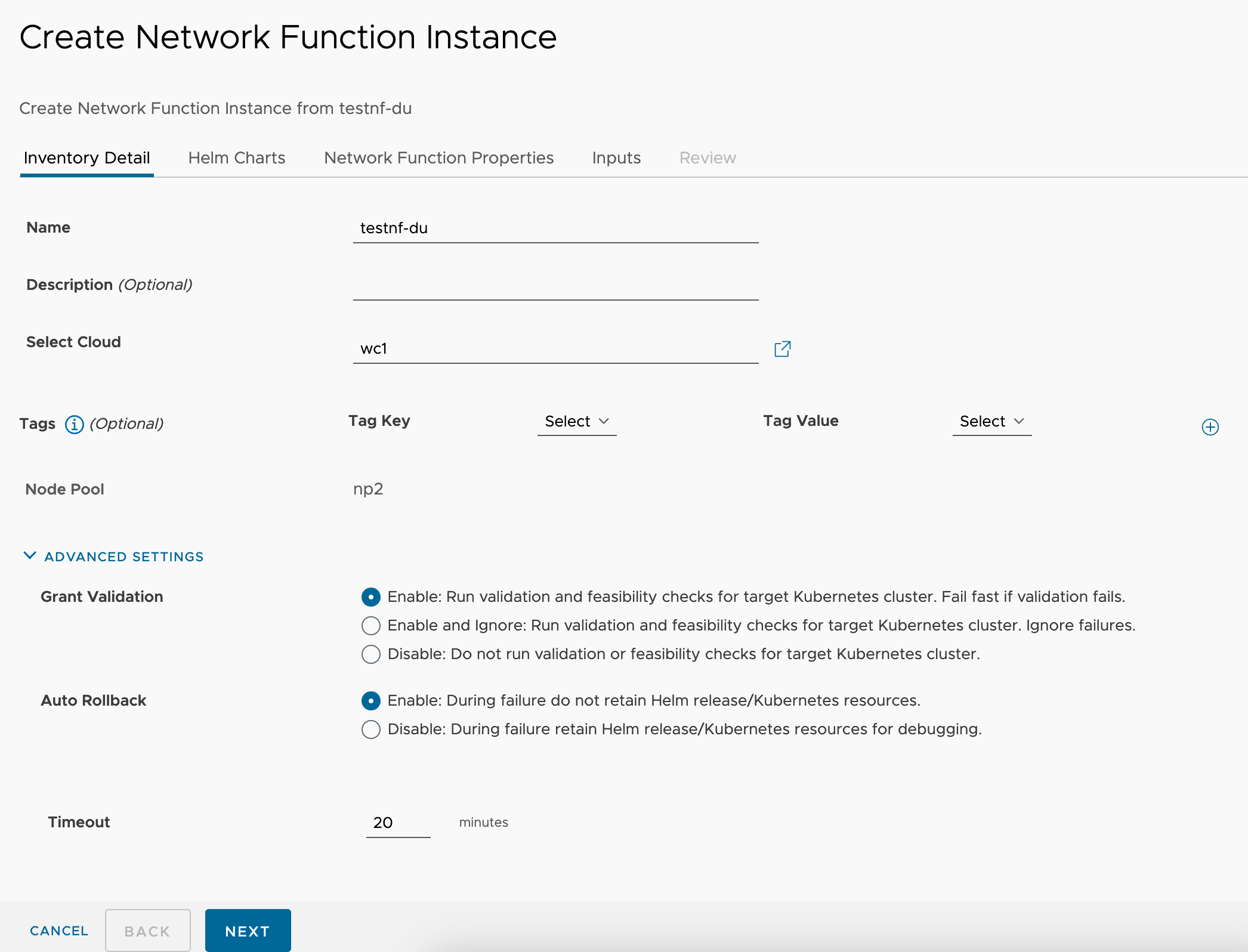

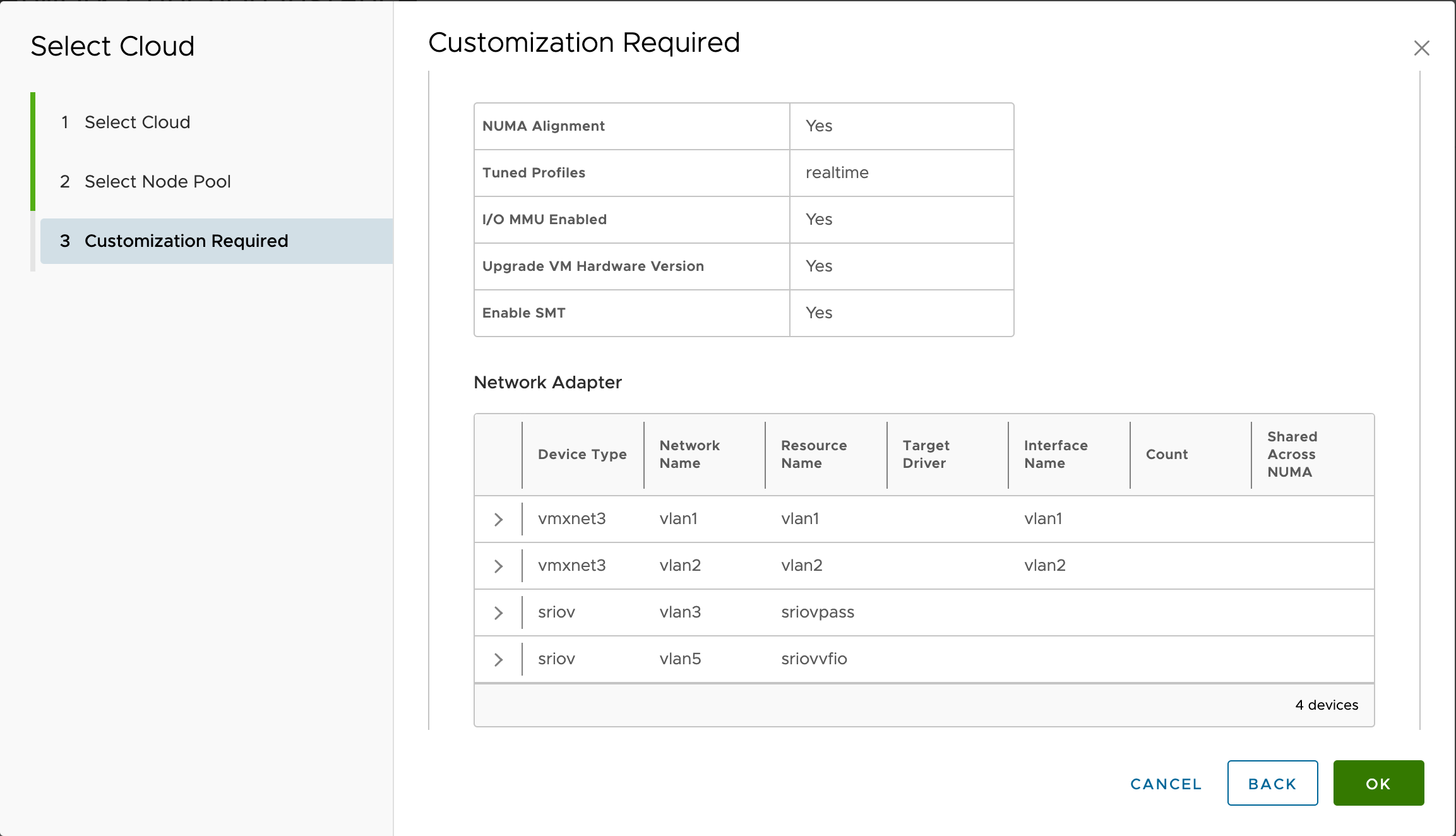

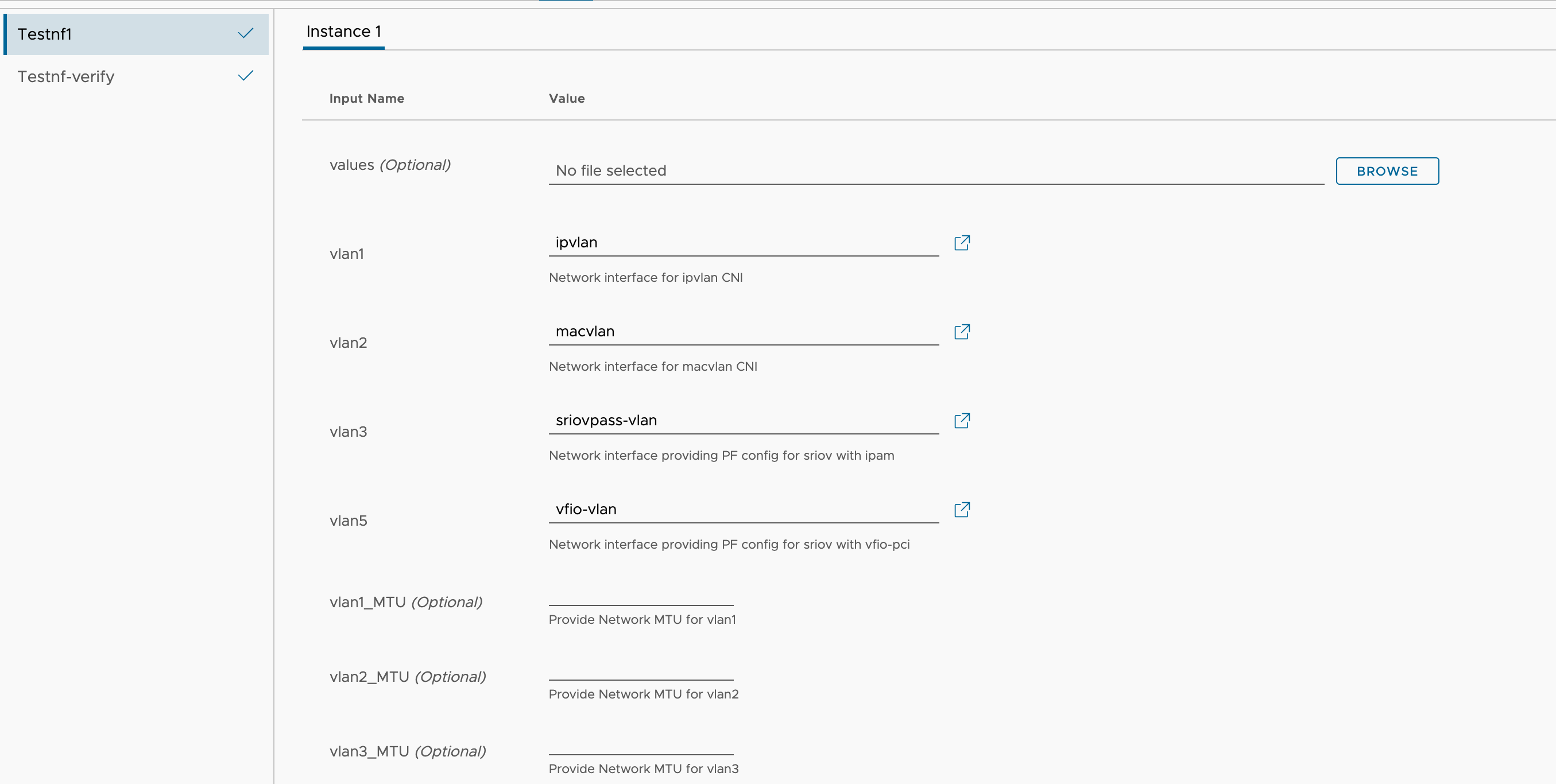

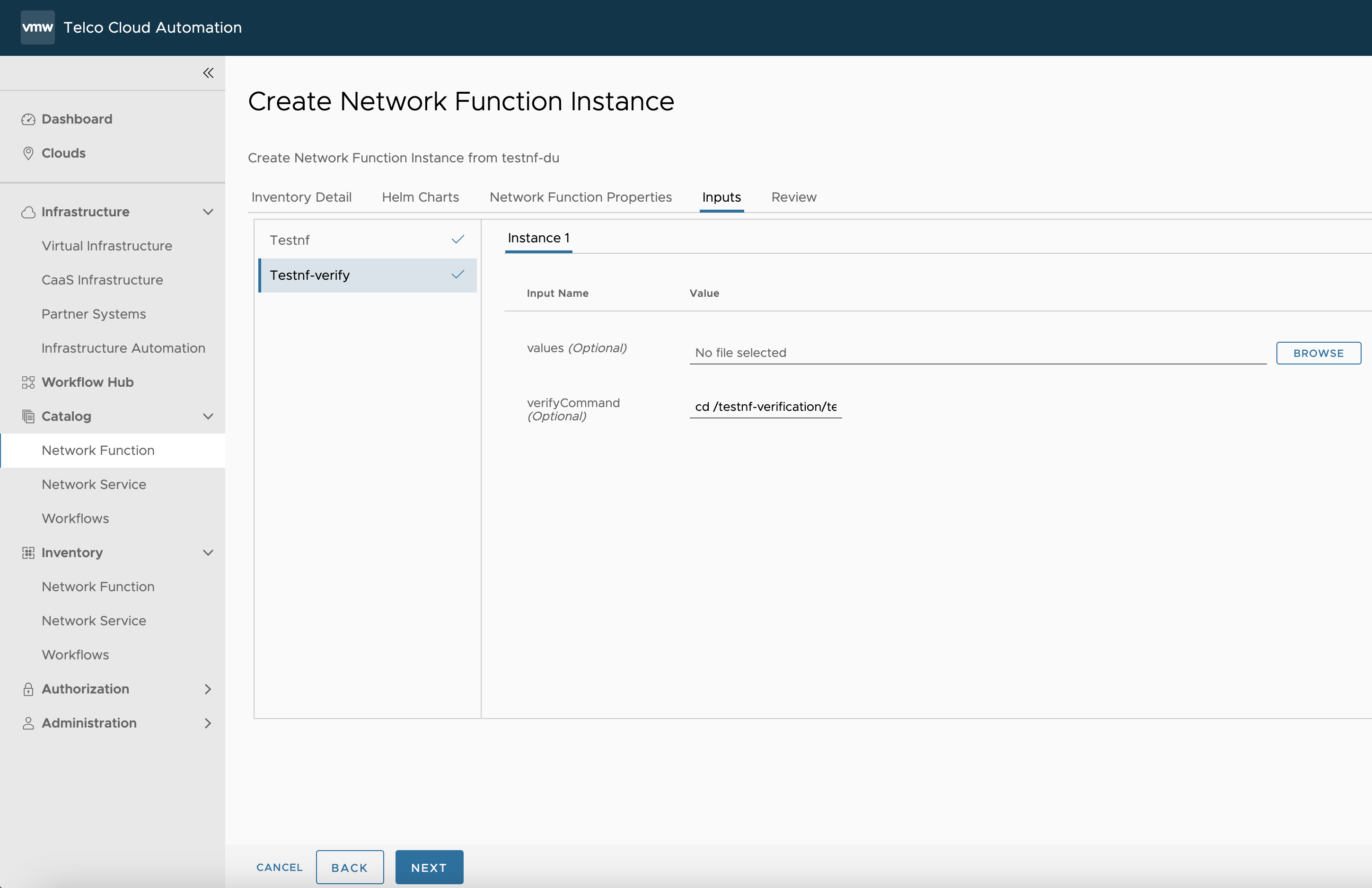

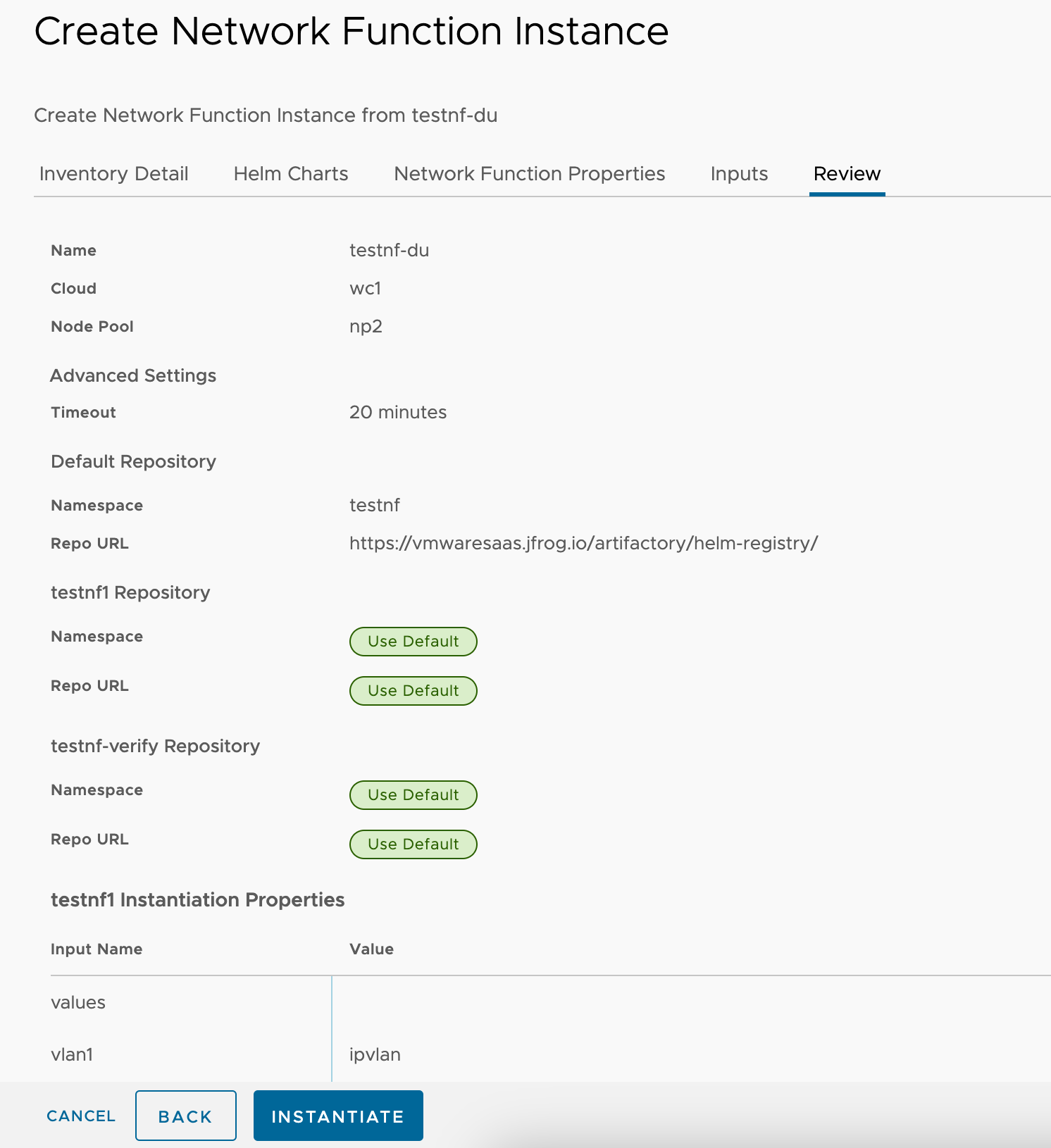

Procedure

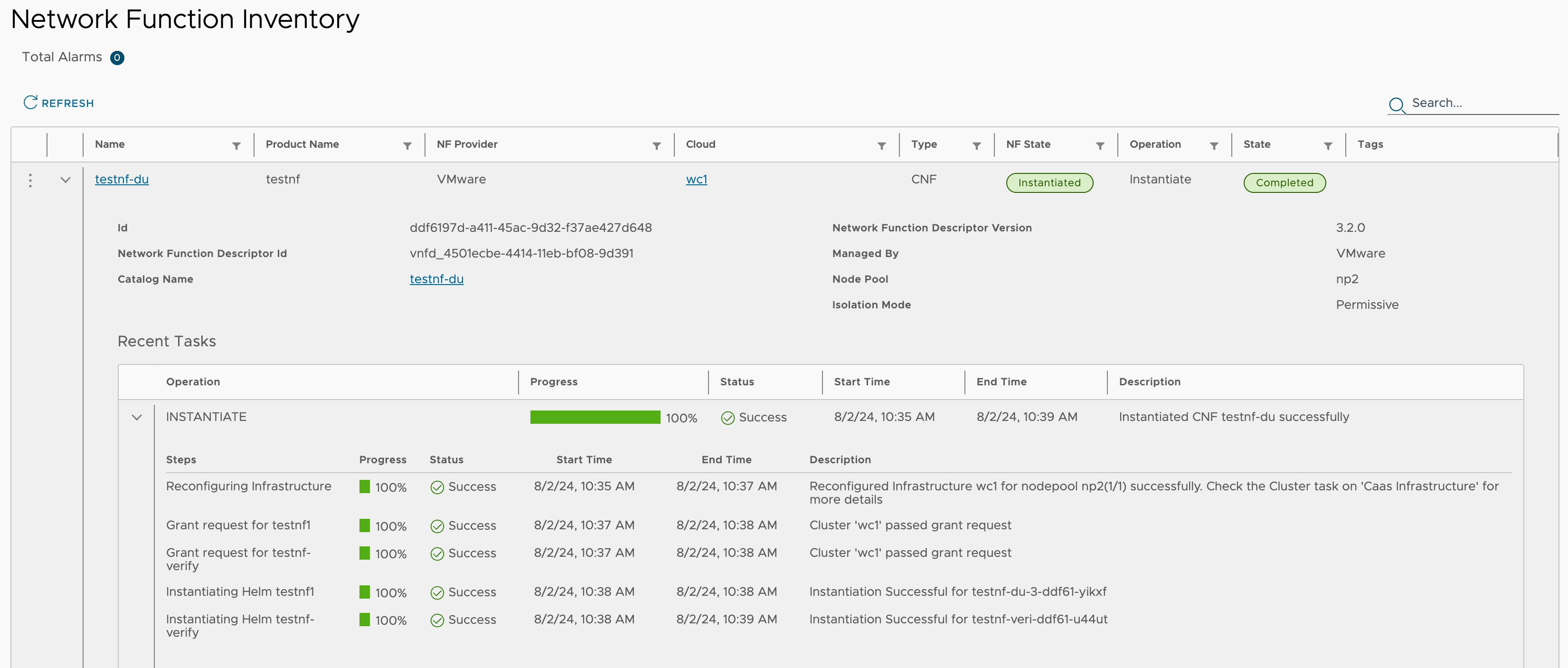

Results

After you complete the steps above, testnf-du is instantiated, and post-instantiation workflows run to ensure that the pod, node, and VM customizations are realized.

What to do next

When the testnf-du CNF is ready, you can open a bash shell in the testnf-du pod to run DPDK apps such as testpmd and pktgen, or performance tools such as iperf. For example:

    capv@wc0-master-control-plane-xlwrz [ ~ ]$ POD_NS=testnf
    capv@wc0-master-control-plane-xlwrz [ ~ ]$ POD_NAME=$(kubectl get pods --namespace $POD_NS -l "app.kubernetes.io/name=testnf-du" -o jsonpath="{.items[0].metadata.name}")
    capv@wc0-master-control-plane-xlwrz [ ~ ]$ kubectl -n $POD_NS exec -it $POD_NAME -- bash
    root [ / ]# testpmd
    EAL: Detected 8 lcore(s)
    EAL: Detected 1 NUMA nodes
    EAL: Multi-process socket /var/run/dpdk/rte/mp_socket
    EAL: Selected IOVA mode 'PA'
    EAL: Probing VFIO support...
    EAL: VFIO support initialized
    ...
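Before you open a shell in the pod, you might want to confirm that the pod is Ready and that it requests the expected networks. The following sketch reuses the namespace and label from the example above. The 300-second timeout is an arbitrary choice, and the script assumes that kubectl is configured for the workload cluster.

```shell
#!/bin/sh
# Sketch: wait for the testnf-du pod, then print its requested networks.
POD_NS=testnf
POD_LABEL="app.kubernetes.io/name=testnf-du"

if command -v kubectl >/dev/null 2>&1; then
  # Block until the pod reports Ready, or give up after the timeout.
  kubectl wait --namespace "$POD_NS" --for=condition=Ready pod \
    -l "$POD_LABEL" --timeout=300s \
    || echo "pod did not become Ready"

  # Dots inside the annotation key are escaped for jsonpath.
  kubectl get pods --namespace "$POD_NS" -l "$POD_LABEL" \
    -o jsonpath='{.items[0].metadata.annotations.k8s\.v1\.cni\.cncf\.io/networks}' \
    || echo "could not read the pod annotation"
else
  echo "kubectl not found; skipping the readiness check"
fi
```

If the values file set the annotation shown in the prerequisites, the second command is expected to print the same comma-separated network list.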