This section describes how the VNF Components (VNF-Cs), specifically the Data plane VNF-C, the Control plane VNF-C, and the Operations, Administration, and Management (OAM) VNF-C, can leverage N-VDS capabilities.

VNF Workloads Using N-VDS (S) and N-VDS (E)

The Data plane VNF-C requires more vCPUs than the other components to support high data rates for a large number of subscribers. To ensure optimal performance, its vCPUs are mapped to physical CPU cores, and the Data plane VNF-C uses most of the CPU cores on each NUMA node. The N-VDS Enhanced mode uses vertical NUMA alignment to ensure that the pNIC, the logical cores, and the vCPUs are all on the same NUMA node. The Control plane and OAM VMs have a small resource footprint and do not require data plane acceleration.
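
The vertical NUMA alignment rule can be expressed as a simple placement check. The following Python sketch assumes a hypothetical `DataPlaneVnfcPlacement` record that lists the NUMA node of the pNIC, of each N-VDS (E) logical core, and of each host core backing a vCPU. It illustrates the rule only and is not an NSX or vSphere API.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DataPlaneVnfcPlacement:
    """Hypothetical placement record for one Data plane VNF-C on a host."""
    name: str
    pnic_numa: int                  # NUMA node of the pNIC backing the N-VDS (E) uplink
    lcore_numas: Tuple[int, ...]    # NUMA node of each N-VDS (E) logical core
    vcpu_numas: Tuple[int, ...]     # NUMA node of the host core each vCPU is mapped to


def is_vertically_aligned(p: DataPlaneVnfcPlacement) -> bool:
    """True only if the pNIC, logical cores, and vCPUs share a single NUMA node."""
    numa_nodes = {p.pnic_numa, *p.lcore_numas, *p.vcpu_numas}
    return len(numa_nodes) == 1


aligned = DataPlaneVnfcPlacement("dp-vnfc-1", 0, (0, 0), (0, 0, 0, 0))
split = DataPlaneVnfcPlacement("dp-vnfc-2", 1, (1, 1), (1, 1, 0, 0))
print(is_vertically_aligned(aligned))  # True
print(is_vertically_aligned(split))    # False
```

In the second example, two of the vCPUs land on NUMA node 0 while the pNIC and logical cores are on node 1, so traffic would cross the NUMA boundary.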

The Data plane VNF-C is deployed in a cluster that is dedicated to data plane components and occupies most of the CPU resources on one NUMA node of the host. The number of vCPUs that the Data plane VNF-C uses must not exceed the number of CPU cores available on that NUMA node, and some CPU cores are left free for the virtualization layer.
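
The sizing constraint reduces to simple arithmetic per NUMA node. In this Python sketch, the core counts and the number of cores reserved for the virtualization layer are hypothetical inputs; the actual reservation depends on the host hardware and the N-VDS (E) configuration.

```python
def max_dataplane_vcpus(cores_per_numa: int, reserved_cores: int) -> int:
    """Upper bound on vCPUs for a Data plane VNF-C on one NUMA node.

    `reserved_cores` is a hypothetical count of cores left free for the
    virtualization layer on that NUMA node.
    """
    if not 0 <= reserved_cores < cores_per_numa:
        raise ValueError("reserved_cores must leave at least one core for the VNF-C")
    return cores_per_numa - reserved_cores


def fits_on_numa(vnfc_vcpus: int, cores_per_numa: int, reserved_cores: int) -> bool:
    """True if the VNF-C's vCPUs do not exceed the cores available to it."""
    return vnfc_vcpus <= max_dataplane_vcpus(cores_per_numa, reserved_cores)


# Example: a hypothetical 20-core NUMA node with 2 cores kept free.
print(max_dataplane_vcpus(20, 2))  # 18
print(fits_on_numa(16, 20, 2))     # True
print(fits_on_numa(20, 20, 2))     # False
```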

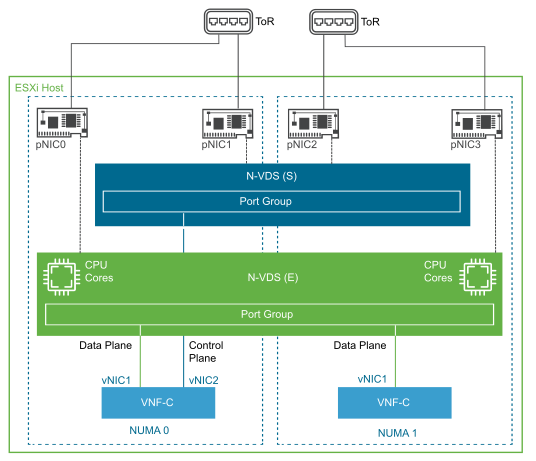

After the VNF-Cs are distributed on the NUMA nodes of a host, the components are connected to the virtual networking elements as shown in the following figure:

N-VDS in Standard and Enhanced Data Path modes can operate side by side on the same host. vNICs that require accelerated performance can be connected directly to N-VDS (E), whereas vNICs used for internal communication, OAM, or control plane traffic can use N-VDS (S). The Control plane and Data plane VNF-Cs communicate with each other over East-West connectivity.
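
The split between the two switch modes amounts to a mapping from each vNIC's traffic role to a switch. The following Python sketch uses hypothetical role names and vNIC identifiers; it only models the placement decision and does not configure NSX.

```python
from enum import Enum


class Switch(Enum):
    STANDARD = "N-VDS (S)"
    ENHANCED = "N-VDS (E)"


# Hypothetical traffic roles; only data plane vNICs need acceleration.
ROLE_TO_SWITCH = {
    "data": Switch.ENHANCED,
    "control": Switch.STANDARD,
    "oam": Switch.STANDARD,
    "internal": Switch.STANDARD,
}


def switch_for_vnic(role: str) -> Switch:
    """Return the N-VDS mode that a vNIC with the given traffic role attaches to."""
    try:
        return ROLE_TO_SWITCH[role]
    except KeyError:
        raise ValueError(f"unknown traffic role: {role!r}") from None


# Hypothetical vNICs of a Data plane and a Control plane VNF-C.
vnics = {
    "dp-vnfc-1/eth0": "oam",
    "dp-vnfc-1/eth1": "data",
    "cp-vnfc-1/eth0": "control",
}
for vnic, role in vnics.items():
    print(f"{vnic} -> {switch_for_vnic(role).value}")
```

Rejecting unknown roles, rather than defaulting them to N-VDS (S), keeps an unclassified data plane vNIC from silently losing acceleration.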

Because the VNF plays a vital role in the CSP network, it must also provide a high level of availability. To achieve a resilient network, the NUMA node on which the Data plane VNF-C is installed has two physical NICs configured as redundant uplinks of an N-VDS (E), preferably with such a pair on each NUMA node. The N-VDS (E) uplinks are bonded using a teaming policy that increases availability. The Data plane VNF-C also benefits from host resiliency by leveraging anti-affinity rules.
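
Both resiliency measures can be verified during a design review. The following Python sketch uses a hypothetical placement map of Data plane VNF-C replicas to hosts and a hypothetical list of NUMA nodes for the N-VDS (E) uplink pNICs. It does not call vSphere DRS or NSX APIs and does not model the teaming policy itself.

```python
from collections import Counter
from typing import Dict, List


def anti_affinity_violations(placement: Dict[str, str]) -> List[str]:
    """Return hosts that carry more than one Data plane VNF-C replica.

    `placement` maps a (hypothetical) replica name to the host it runs on.
    """
    replicas_per_host = Counter(placement.values())
    return sorted(host for host, n in replicas_per_host.items() if n > 1)


def has_redundant_uplinks(uplink_numas: List[int], vnfc_numa: int, minimum: int = 2) -> bool:
    """True if at least `minimum` N-VDS (E) uplink pNICs sit on the VNF-C's NUMA node."""
    local_uplinks = sum(1 for numa in uplink_numas if numa == vnfc_numa)
    return local_uplinks >= minimum


placement = {"dp-vnfc-a": "esxi-01", "dp-vnfc-b": "esxi-02", "dp-vnfc-c": "esxi-02"}
print(anti_affinity_violations(placement))               # ['esxi-02']
print(has_redundant_uplinks([0, 0, 1, 1], vnfc_numa=0))  # True
print(has_redundant_uplinks([0, 1], vnfc_numa=0))        # False
```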

The benefits of this approach are:

- Network traffic separation: This approach separates the bursty management and control plane traffic from the constant, high-throughput data plane traffic.
- Ease of designing the network: The physical NICs that transport the data plane traffic can use a different path and different physical components than the NICs that transport the management and control plane traffic.
- Benefits of shared resources: N-VDS (E) is configured with dedicated CPU resources that are not accessible to other applications, and these dedicated resources process the data path traffic. The control and management plane traffic, in contrast, is served by N-VDS (S), which uses the shared resources of the host.

For detailed design considerations for accelerated workloads, see the Tuning vCloud NFV for Data Plane Intensive Workloads guide.