VMware Integrated OpenStack networking integrates Neutron services with NSX-T so that you can build rich networking topologies and configure advanced network policies in the cloud.

A Telco VNF deployment requires the following networking services:

L2 services: Enables tenants to create and consume their own L2 networks.

L2 trunk: Enables tenants to create and consume L2 trunk interfaces.

L3 services: Enables tenants to create and consume their own IP subnets and routers. These routers can connect intra-tenant application tiers and can also connect to the external domain through NATed and non-NATed topologies.

Floating IPs: A DNAT rule that maps a routable IP on the external side of the router (External network) to a private IP on the internal side (Tenant network). This floating IP forwards all ports and protocols to the corresponding private IP of the instance (VM) and is typically used in cases where there is IP overlap in tenant space.

DHCP Services: Enables tenants to create their own DHCP address scopes.

Security Groups: Enables tenants to create their own firewall policies (L3 or L4) and apply them directly to an instance or a group of instances.

Load-Balancing-as-a-Service (LBaaS): Enables tenants to create their own load-balancing rules, virtual IPs, and load-balancing pools.

High throughput data forwarding: Enables the configuration of passthrough or DPDK interfaces for data plane intensive VNFs.

Data Plane Separation: Separates the traffic between different Tenants at L2 and L3.
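To illustrate the floating IP behavior described in the list above, the following minimal Python sketch models a floating IP as a one-to-one destination NAT mapping that leaves protocol and port untouched. The `Packet` type, the `FLOATING_IPS` table, and the addresses are hypothetical and serve only as an illustration; this is not how NSX-T implements the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    protocol: str
    dst_port: int


# Hypothetical floating IP table: routable external IP -> private instance IP.
FLOATING_IPS = {"203.0.113.10": "10.0.0.5"}


def apply_dnat(packet: Packet, floating_ips: dict) -> Packet:
    """Rewrite the destination IP if it is a floating IP.

    Protocol and port are forwarded unchanged, which mirrors the
    "all ports and protocols" behavior described in the text.
    """
    private_ip = floating_ips.get(packet.dst_ip)
    if private_ip is None:
        return packet  # not a floating IP, nothing to translate
    return Packet(packet.src_ip, private_ip, packet.protocol, packet.dst_port)
```

Because the mapping is keyed only on the external address, two tenants can reuse the same private address behind different floating IPs.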

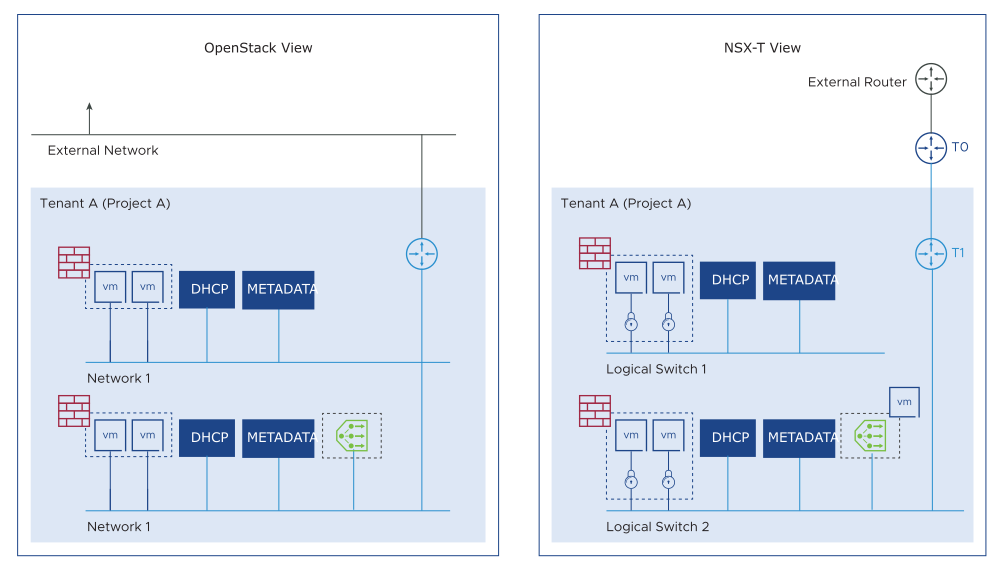

Object Mapping in VMware Integrated OpenStack NSX-T Neutron Plug-in

The NSX-T Neutron plug-in is an open-source project and can be used with any OpenStack implementation. VMware Integrated OpenStack integrates the NSX-T Neutron plug-in natively, out of the box. VMware Integrated OpenStack Neutron services use the NSX-T Neutron plug-in to make API calls to NSX Manager, which is the API provider and management plane of NSX.

The following table summarizes the mapping between standard Neutron objects and their counterparts in the NSX-T Neutron plug-in.

| Neutron Object | Mapping in NSX-T Neutron Plug-in |
|---|---|
| Provider Network | VLAN |
| Tenant Network | Logical switch |
| Metadata Services | Edge Node Service |
| DHCP Server | Edge Node Service |
| Tenant Router | Tier-1 router |
| External Network | Tier-0 router (add VRF) |
| Floating IP | NSX-T Tier-1 NAT |
| Trunk Port | NSX-T Guest VLAN tagging |
| LBaaS | NSX-T Load Balancer through Tier-1 router |
| FWaaS/Security Group | NSX-T MicroSegmentation / Security Group |

Supported Network Topologies

| Use Case | L2 | L3 | OpenStack Network Security Policy |
|---|---|---|---|
| VLAN-Backed Network | VLAN | Physical router | Security Group only |
| Overlay-Backed Network with NSX L3 Services and NAT | Overlay | NSX Edge | FWaaS and Security Group |
| Overlay-Backed Network with NSX L3 Services and No-NAT | Overlay | NSX Edge | FWaaS and Security Group |

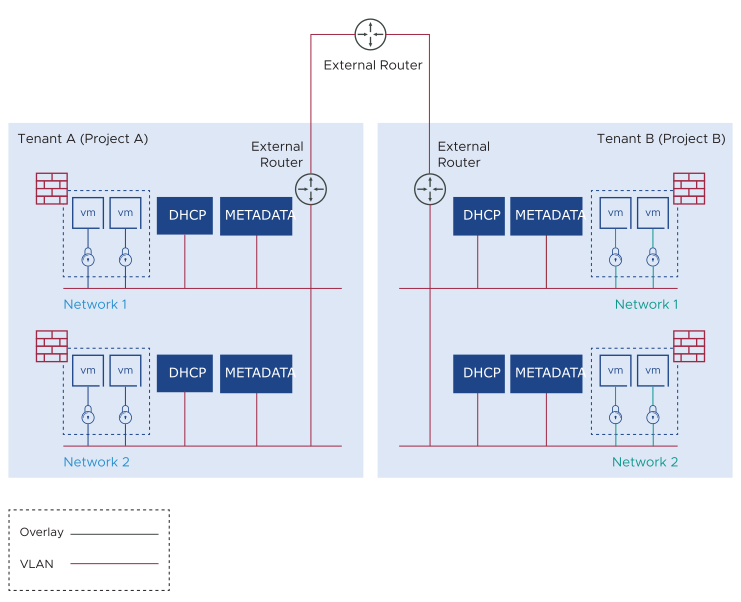

VLAN-Backed Network

A VLAN-backed network is often known as an OpenStack provider network. A VNF booted in the provider network gets its DHCP lease and metadata relay information from NSX-T but relies on the physical network infrastructure for default gateway or first-hop routing services. Because the physical infrastructure handles routing, only security groups are supported in the provider network. LBaaS, FWaaS, NAT, and similar services are implemented in the physical tier, outside of VIO.

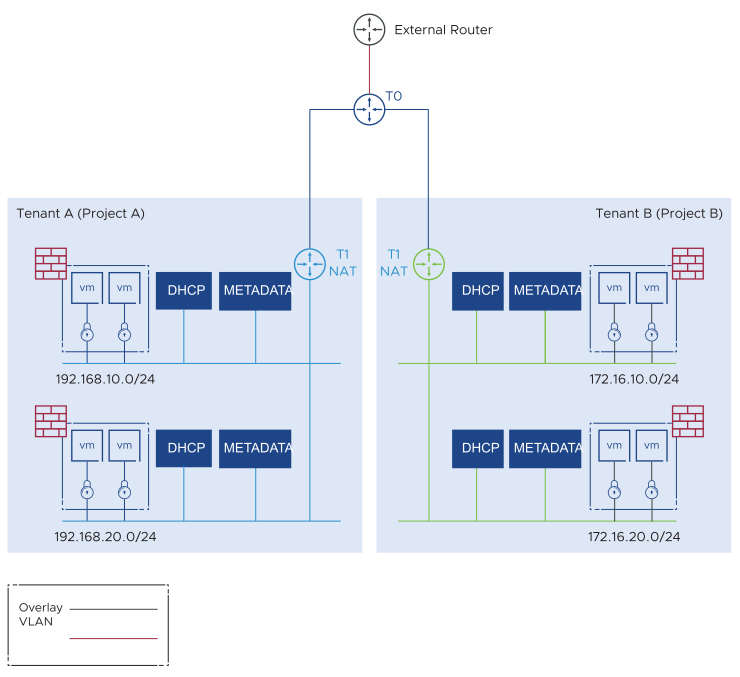

NAT Topology

The NAT topology enables tenants to create and consume private RFC 1918 IP subnets and routers. The NAT topology supports overlapping IP subnets between tenants. Inter-tenant connections and connections to the external domain must be source NATed by the NSX-T Tier-1 gateway backing the OpenStack tenant router.

The NSX-T Tier-0 router maps to the OpenStack external network and can be shared between tenants or dedicated to a single tenant.

A dedicated DHCP server is created in the NSX-T backend for each tenant subnet and segment. Neutron services such as LBaaS, FWaaS, Floating IPs, and so on, are fully supported.
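The design recommendations later in this section state that NAT IP blocks are advertised to the physical router either as /32 routes or as a summary route. As a rough sketch of that trade-off, the following Python snippet uses the standard `ipaddress` module to collapse a set of individual NAT addresses into the smallest set of exactly covering prefixes. The function name and the addresses are illustrative assumptions; the actual advertisement is configured on the Tier-0 router, not computed this way.

```python
import ipaddress


def summarize(nat_ips):
    """Collapse individual NAT addresses into the fewest exactly covering prefixes."""
    hosts = [ipaddress.ip_network(f"{ip}/32") for ip in nat_ips]
    return [str(net) for net in ipaddress.collapse_addresses(hosts)]


# Four contiguous, aligned addresses collapse into a single /30.
contiguous = summarize(["203.0.113.8", "203.0.113.9", "203.0.113.10", "203.0.113.11"])

# A gap in the range leaves more than one prefix to advertise.
sparse = summarize(["203.0.113.8", "203.0.113.9", "203.0.113.12"])
```

Note that `collapse_addresses` never adds addresses that were not in the input, so a manually chosen summary route that covers unused addresses would be shorter than what this sketch returns.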

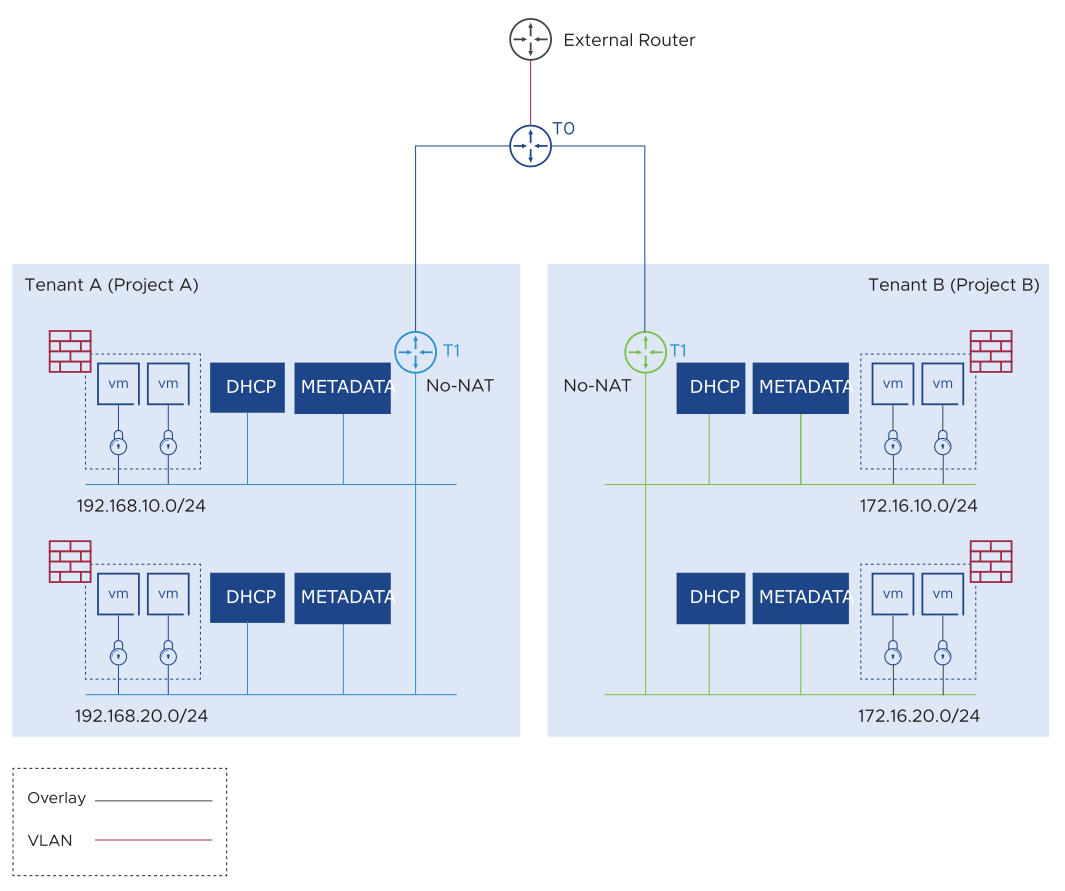

No-NAT Topology

In the No-NAT topology, NSX-T performs L2 switching, security segmentation, L3 routing, load balancing, and other tasks. The source IP addresses of the VMs are preserved for inter-tenant communication and for communication to the external domain. IP subnets cannot overlap as there is no NAT.

Similar to the NAT topology, the NSX-T Tier-0 router maps to the OpenStack external network and can be shared between tenants or dedicated to a single tenant.

A dedicated DHCP server is created in the NSX-T backend for each tenant subnet and segment. Neutron services such as LBaaS, FWaaS, Floating IPs, and so on, are fully supported.
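Because subnets in the No-NAT topology cannot overlap, it can be useful to validate a subnet plan before creating the networks. The following is a minimal sketch that uses the Python standard library to find overlapping CIDRs; the tenant names and subnets are hypothetical.

```python
import ipaddress
from itertools import combinations


def find_overlaps(subnets):
    """Return pairs of (tenant, cidr) entries whose CIDRs overlap.

    `subnets` is an iterable of (tenant_name, cidr_string) tuples.
    """
    parsed = [(tenant, ipaddress.ip_network(cidr)) for tenant, cidr in subnets]
    return [
        ((t1, str(n1)), (t2, str(n2)))
        for (t1, n1), (t2, n2) in combinations(parsed, 2)
        if n1.overlaps(n2)
    ]


plan = [
    ("tenant-a", "10.0.0.0/24"),
    ("tenant-b", "10.0.0.128/25"),  # falls inside tenant-a's range
    ("tenant-c", "10.0.1.0/24"),
]
conflicts = find_overlaps(plan)
```

In the NAT topology the same check is unnecessary between tenants, because each tenant router translates its private addresses before traffic leaves the tenant.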

Passthrough Networking

VMware Integrated OpenStack supports SR-IOV through passthrough networking. You can configure a port to allow SR-IOV passthrough and then create OpenStack instances that use physical network adapters. Starting with VMware Integrated OpenStack 7.0, multiple ports backed by different SR-IOV physical NICs are also supported. Multiple SR-IOV provides vNIC redundancy for a VM by ensuring that the SR-IOV ports are allocated from different physical NICs in the ESXi host. The Multiple SR-IOV redundancy setting is defined as part of the Nova flavor definition.

Passthrough networking can be used for data-intensive traffic, but it cannot use virtualization benefits such as vMotion and DRS. Hence, VNFs employing SR-IOV become static hosts. A special host aggregate can be configured for such workloads.

Port security is not supported for passthrough-enabled ports and is automatically deactivated for the port created.
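As a sketch of what a passthrough port request might look like, the following Python function builds Neutron port-create request bodies with the standard `binding:vnic_type` attribute set to `direct`. It assumes the deployment accepts the standard Neutron value for SR-IOV ports; the function name, port naming scheme, and network ID are hypothetical. Placement of the ports on different physical NICs is governed by the flavor setting described above, not by these bodies.

```python
def redundant_sriov_ports(network_id: str, vm_name: str, count: int = 2) -> list:
    """Build `count` Neutron port-create bodies that request SR-IOV vNICs."""
    return [
        {
            "port": {
                "network_id": network_id,
                "name": f"{vm_name}-sriov-{index}",
                # "direct" is the standard Neutron vNIC type for SR-IOV ports.
                "binding:vnic_type": "direct",
            }
        }
        for index in range(count)
    ]


bodies = redundant_sriov_ports("NETWORK_ID_PLACEHOLDER", "vnf1")
```

Each body would be sent as a separate port-create request, and the resulting ports attached to the instance at boot time.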

Data Plane Isolation with VIO and NSX

VIO uses the NSX-T Tier-0 router to represent OpenStack external networks. An external network can be shared across all OpenStack tenants or dedicated to a tenant through a Neutron RBAC policy.

When an OpenStack tenant router is associated with an external network, the NSX-T Neutron plug-in attaches the OpenStack tenant router (Tier-1) to the associated external network (Tier-0).

If you use the VRF Lite feature in NSX-T, each VRF instance can be defined as a unique OpenStack External Network. Through the Neutron RBAC policy, the external network (VRF instance) can be mapped to specific tenants. VRF maintains dedicated routing instances for each OpenStack tenant and offers data path isolation required for VNFs in a multi-tenant deployment.
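The per-tenant mapping described above can be sketched as a set of Neutron RBAC policy request bodies, one per external network and tenant pair. The `access_as_external` action and the body fields are part of the standard Neutron RBAC API; the mapping dictionary, IDs, and function name below are hypothetical placeholders.

```python
# Hypothetical mapping: VRF-backed external network ID -> project (tenant) ID.
VRF_NETWORK_TO_PROJECT = {
    "ext-net-vrf-a": "project-a",
    "ext-net-vrf-b": "project-b",
}


def external_rbac_bodies(mapping: dict) -> list:
    """Build one Neutron RBAC policy body per external network and tenant pair."""
    return [
        {
            "rbac_policy": {
                "object_type": "network",
                "object_id": network_id,
                # Lets only the target project use the network as external.
                "action": "access_as_external",
                "target_tenant": project_id,
            }
        }
        for network_id, project_id in mapping.items()
    ]


policies = external_rbac_bodies(VRF_NETWORK_TO_PROJECT)
```

With one policy per VRF-backed network, a tenant router can only attach to the external network, and therefore the routing instance, assigned to its project.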

Network Security

Security can be enforced at multiple layers with VMware Integrated OpenStack:

Tenant Security Group: Tenant security groups are defined by tenants to control what traffic can reach the tenant application. A tenant security group has the following characteristics:

Every rule has an ALLOW action, followed by an implicit deny rule at the end.

One or more Security Groups can be associated with an OpenStack Instance.

Tenant Security Groups have lower precedence than Provider Security Groups.

Provider Security Group: Provider security groups are defined and managed only by OpenStack administrators and are primarily used to enforce compliance, for example, by blocking specific destinations or enforcing protocol compliance for a tenant application. A provider security group has the following characteristics:

Every rule has a DENY action.

One or more Provider Security Groups can be associated with an OpenStack Tenant.

Provider Security Groups have higher precedence than Tenant Security Groups.

Provider Security Groups are automatically attached to new tenant Instances.

Firewall-as-a-Service: FWaaSv2 is a perimeter firewall that tenants use to control security policy at the project level. With FWaaSv2, a firewall group is applied at the router port level rather than at the router level (all ports on a router) to define ingress and egress policies.

Port Security: Neutron Port security prevents IP spoofing. Port Security is enabled by default with VIO. Depending on the application, port security settings might need to be updated or deactivated.

Scenario I: A first-hop routing protocol such as VRRP relies on a virtual IP (VIP) address to send and receive traffic. The port security policy must be updated so that traffic to and from the VIP address is allowed.

Scenario II: Port security must be deactivated on ports that connect to virtual routers, because these ports carry transit traffic that is not addressed to the port itself.
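As a rough illustration of how the provider and tenant security group rules described in this section combine, the following Python sketch evaluates provider DENY rules first, then tenant ALLOW rules, and finally falls back to the implicit deny. The `Rule` type and the matching on protocol and port only are simplifying assumptions; real rules also match on direction and address.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    protocol: str
    port: Optional[int] = None  # None matches any port

    def matches(self, protocol: str, port: int) -> bool:
        return self.protocol == protocol and self.port in (None, port)


def evaluate(provider_deny, tenant_allow, protocol: str, port: int) -> str:
    """Return "DENY" or "ALLOW" for a flow, honoring provider precedence."""
    if any(rule.matches(protocol, port) for rule in provider_deny):
        return "DENY"  # provider rules take precedence
    if any(rule.matches(protocol, port) for rule in tenant_allow):
        return "ALLOW"
    return "DENY"  # implicit deny at the end of the tenant rules


provider = [Rule("tcp", 23)]  # administrator blocks telnet
tenant = [Rule("tcp")]        # tenant allows all TCP
```

In this example the tenant rule that allows all TCP does not override the provider rule, so telnet stays blocked while other TCP ports are allowed.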

Octavia Load Balancer Support

The Octavia LBaaS workflow includes the following capabilities:

Create a Load Balancer.

Create a Listener.

Create a Pool.

Create Pool Members.

Create a Health Monitor.

Configure Security Groups to allow Health Monitor to work from Load Balancer to Pool Members.
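The workflow above can be sketched as an ordered list of request bodies. The field names follow the Octavia v2 and Neutron APIs as far as the author of this sketch is aware; the resource names, port number, timer values, and the `monitor_source_cidr` parameter are illustrative assumptions. Identifiers that link the resources (for example, the load balancer ID on the listener) are omitted because they are only known after each object is created.

```python
def octavia_workflow(vip_subnet_id, member_subnet_id, member_ips,
                     monitor_source_cidr, port=80):
    """Return (resource, body) pairs in the order they would be created."""
    steps = [
        ("loadbalancer", {"loadbalancer": {"name": "lb1", "vip_subnet_id": vip_subnet_id}}),
        ("listener", {"listener": {"name": "listener1", "protocol": "HTTP",
                                   "protocol_port": port}}),
        ("pool", {"pool": {"name": "pool1", "protocol": "HTTP",
                           "lb_algorithm": "ROUND_ROBIN"}}),
    ]
    # One member per backend server address.
    steps += [
        ("member", {"member": {"address": ip, "protocol_port": port,
                               "subnet_id": member_subnet_id}})
        for ip in member_ips
    ]
    steps.append(("healthmonitor", {"healthmonitor": {"type": "HTTP", "delay": 5,
                                                      "timeout": 3, "max_retries": 3}}))
    # Security group rule so health checks can reach the members (source CIDR assumed).
    steps.append(("security_group_rule", {"security_group_rule": {
        "direction": "ingress", "protocol": "tcp",
        "port_range_min": port, "port_range_max": port,
        "remote_ip_prefix": monitor_source_cidr}}))
    return steps


steps = octavia_workflow("vip-subnet", "member-subnet",
                         ["10.0.0.11", "10.0.0.12"], "10.0.0.0/24")
```

Keeping the steps in a list makes the dependency order explicit: each object refers to one created before it.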

An Octavia load balancer must be backed by an OpenStack tenant router, and this tenant router must be attached to an OpenStack external network. The following types of VIPs are supported:

VIP using External network subnet pool: A VIP allocated from an external network is designed to be accessed from public networks. To define a VIP that is publicly accessible, do one of the following:

Create the load balancer on an external network.

Create the load balancer on a tenant network and assign a floating IP to the VIP port.

Server pool members can belong to different OpenStack tenant subnets. The load balancer admin must ensure reachability between the VIP network and server pool members.

VIP using Internal Network subnet pool: The load balancer VIP is allocated from the tenant subnet that you selected when creating the load balancer, typically a private IP. Server pool members can belong to different OpenStack subnets. The load balancer admin must ensure reachability between the VIP network and server pool members.

VIO Neutron Design Recommendations

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| When deploying OpenStack provider networks, do not deploy an additional DHCP server on the VLAN backing the provider network. | A DHCP instance is created automatically in NSX when the provider network subnet is created. | None |
| When running with NAT on the OpenStack tenant router, the NSX-T Tier-0 router must be pre-configured to advertise (redistribute) NAT IP blocks, either as /32 routes or as a summary route, to the physical router. | Tier-0 route advertisement updates the physical L3 device with tenant IP reachability information. | None |
| When running with No-NAT on the OpenStack tenant router, the NSX-T Tier-0 router must be pre-configured to advertise connected routes. | Tier-0 route advertisement updates the physical L3 device with tenant IP reachability information. | None |
| When deploying FWaaS, ensure that the tenant quota allows for at least one OpenStack tenant router. | FWaaS requires the presence of a tenant router. | None |
| When deploying LBaaS, ensure that the tenant quota allows for at least one OpenStack tenant router. | The NSX-T Neutron plug-in requires a logical router with the external network attached. | None |
| When SR-IOV is required for VNF workloads, use Multiple SR-IOV to provide vNIC redundancy to VNFs. | SR-IOV NIC redundancy protects the VNF from link-level failure. | The VNF must be configured to switch over between vNICs in case of link failure. |
| Place all SR-IOV workloads in a dedicated ESXi cluster. Deactivate DRS and vMotion for this cluster. | SR-IOV cannot benefit from virtualization features such as vMotion and DRS. | Resource consumption might be imbalanced in the SR-IOV cluster because DRS is not available to rebalance workloads. |
| Create a dedicated Neutron external network, backed by a VRF, for each tenant. | Data path isolation and separate routing instances per tenant. | VRFs must be created manually before VMware Integrated OpenStack can use them. |
| Use allowed_address_pairs to allow traffic to and from a VNF that implements a first-hop routing protocol such as VRRP. | Default Neutron port security permits traffic only to and from the IP address assigned to the vNIC, not the VIP. | None |
| Deactivate port security on attachments that connect to virtual routing appliances. | Default Neutron port security permits traffic only to and from the IP address assigned to the vNIC, not transit traffic. | None |
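The last two recommendations can be sketched as Neutron port-update request bodies. `allowed_address_pairs`, `port_security_enabled`, and `security_groups` are standard Neutron port attributes; the function names and the VIP address are hypothetical.

```python
def vrrp_port_update(vip: str) -> dict:
    """Port-update body that additionally permits traffic for a VRRP virtual IP."""
    return {"port": {"allowed_address_pairs": [{"ip_address": vip}]}}


def virtual_router_port_update() -> dict:
    """Port-update body that turns off port security for a routing appliance.

    Neutron expects a port without port security to carry no security
    groups, so the list is cleared in the same request.
    """
    return {"port": {"port_security_enabled": False, "security_groups": []}}


vrrp_body = vrrp_port_update("10.0.0.100")
router_body = virtual_router_port_update()
```

The first body keeps port security active and only widens the set of permitted addresses, which is why it is preferable whenever the VNF uses a known VIP rather than forwarding arbitrary transit traffic.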