An OpenStack cloud is organized into Regions, Availability Zones, and Host Aggregates. This section describes the high-level design considerations for each category.

OpenStack Region

A Region in VIO is a full OpenStack deployment, including a dedicated VIO control plane, API endpoints, networks, and compute SDDC stack. Unlike other OpenStack services, Keystone and Horizon can be shared across Regions. A shared Keystone service provides unified and consistent user onboarding and management. Similarly, a shared Horizon service gives OpenStack users and administrators a single pane of glass for accessing different Regions.

OpenStack regions can be geographically dispersed. Because each region has independent control and data planes, a failure in one region does not impact the operational state of another. Deploying an application across regions is an effective mechanism for geo-level application redundancy. With a geo-aware load balancer, application owners can control application placement and failover across geographic regions. Instead of relying on active/standby data centers, applications deployed with higher-level automation (Heat templates, Terraform, or a third-party CMP) can dynamically adjust across regions to meet SLA requirements.
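The region-level failover decision described above can be sketched as a preference-ordered selection of the first healthy region. This is a minimal model, assuming hypothetical region names and a hypothetical per-region health signal (for example, the result of probing each region's API endpoint); it does not call any VIO, OpenStack, or load balancer API.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str      # hypothetical region name, e.g. "region-east"
    healthy: bool  # hypothetical result of probing the region's API endpoint


def select_region(preferred: list[str], regions: dict[str, Region]) -> str:
    """Return the first healthy region in the caller's preference order.

    Models the decision a geo-aware load balancer or region-aware automation
    makes; it does not call any OpenStack, VIO, or load balancer API.
    """
    for name in preferred:
        region = regions.get(name)
        if region is not None and region.healthy:
            return name
    raise RuntimeError("no healthy region available")


regions = {
    "region-east": Region("region-east", healthy=False),  # simulated outage
    "region-west": Region("region-west", healthy=True),
}
print(select_region(["region-east", "region-west"], regions))  # prints region-west
```

Because each region has its own control and data planes, the selection only needs a per-region health signal; no cross-region state is shared in this model.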

Keystone Design

Keystone federation is required to manage Keystone services across different regions. VIO supports two configuration models for federated identity. The most common configuration uses Keystone as a Service Provider (SP), with an external Identity Provider (IdP) such as VMware Identity Manager (vIDM) as the identity source and authentication method. The two leading protocols for external identity providers are OpenID Connect (OIDC) and SAML 2.0.

The second type of integration is "Keystone to Keystone" (K2K), where multiple Keystone instances are linked and one of them acts as the identity source for all regions. The central Keystone instance integrates with the required user backends, such as Active Directory (AD), for user onboarding and authentication.

If an identity provider is not available to integrate with VIO, cloud administrators can use an LDAP-based integration.
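In the Service Provider model, Keystone translates the attributes asserted by the external IdP into local users and groups through mapping rules. The sketch below shows a rule in the general shape of Keystone's federation mapping format (`local`/`remote` sections, an `any_one_of` condition) together with a deliberately simplified evaluator. The attribute names, the group name, and the evaluator itself are illustrative assumptions, not Keystone's implementation or a VIO configuration.

```python
from typing import Optional

# A rule in the general shape of Keystone's federation mapping format.
# The assertion attribute names ("email", "groups") and the local group
# "telco-operators" are hypothetical examples.
MAPPING_RULES = [
    {
        "local": [
            {"user": {"name": "{0}"}},
            {"group": {"name": "telco-operators", "domain": {"name": "Default"}}},
        ],
        "remote": [
            {"type": "email"},                              # direct map -> {0}
            {"type": "groups", "any_one_of": ["nfv-ops"]},  # condition only
        ],
    }
]


def apply_mapping(rules: list, assertion: dict) -> Optional[dict]:
    """Simplified evaluator: supports only plain 'type' and 'any_one_of'.

    Keystone's real mapping engine supports more operators and multi-valued
    attributes; this only illustrates how a rule is matched and applied.
    """
    for rule in rules:
        direct_values = []
        matched = True
        for remote in rule["remote"]:
            value = assertion.get(remote["type"])
            if value is None or (
                "any_one_of" in remote and value not in remote["any_one_of"]
            ):
                matched = False
                break
            if "any_one_of" not in remote:
                direct_values.append(value)  # exposed to local rules as {0}, {1}, ...
        if not matched:
            continue
        result = {"user": None, "groups": []}
        for local in rule["local"]:
            if "user" in local:
                result["user"] = local["user"]["name"].format(*direct_values)
            if "group" in local:
                result["groups"].append(local["group"]["name"])
        return result
    return None  # no rule matched; this model cannot map the assertion


print(apply_mapping(MAPPING_RULES, {"email": "alice@example.com", "groups": "nfv-ops"}))
# {'user': 'alice@example.com', 'groups': ['telco-operators']}
```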

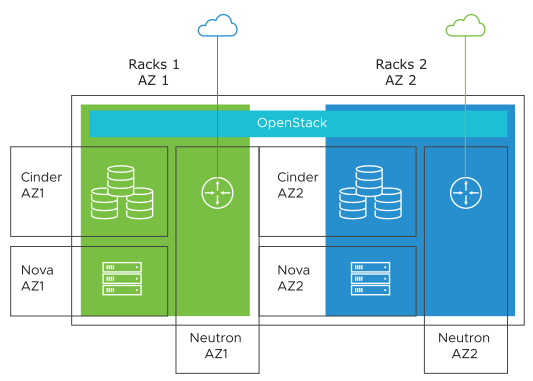

OpenStack Availability Zone

A Nova host aggregate in OpenStack is a grouping of compute resources based on common characteristics such as hardware, software, or location profile. A Nova Availability Zone (AZ) is a special form of host aggregate that is associated with availability. Unlike a regular host aggregate, a compute cluster can be a member of only one Nova AZ. Cloud administrators can create AZs based on availability characteristics such as power, cooling, and rack location. In a Multi-VC deployment, an AZ can be created per vCenter Server instance. To place a VNF in an AZ, OpenStack users specify the AZ preference during VNF instantiation, and the nova-scheduler selects the best match for the request within that AZ.
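The two rules above (a compute cluster belongs to exactly one AZ, and scheduling happens only inside the requested AZ) can be modeled as follows. This is a sketch under stated assumptions: the cluster and AZ names are hypothetical, and the "most free vCPUs" choice is a stand-in for nova-scheduler's filtering and weighing, not its actual algorithm.

```python
from dataclasses import dataclass, field


@dataclass
class AvailabilityZones:
    # Maps a compute cluster (hypothetical name) to the single AZ it belongs to.
    cluster_to_az: dict[str, str] = field(default_factory=dict)

    def add_cluster(self, cluster: str, az: str) -> None:
        existing = self.cluster_to_az.get(cluster)
        if existing is not None and existing != az:
            # A cluster cannot be a member of a second AZ.
            raise ValueError(f"{cluster} is already a member of AZ {existing}")
        self.cluster_to_az[cluster] = az

    def schedule(self, az: str, free_vcpus: dict[str, int], vcpus: int) -> str:
        """Pick the cluster in `az` with the most free vCPUs that fits the request."""
        candidates = [
            cluster
            for cluster, zone in self.cluster_to_az.items()
            if zone == az and free_vcpus.get(cluster, 0) >= vcpus
        ]
        if not candidates:
            raise RuntimeError(f"no valid host found in AZ {az}")
        return max(candidates, key=lambda cluster: free_vcpus[cluster])


azs = AvailabilityZones()
azs.add_cluster("cluster-a", "az-rack1")
azs.add_cluster("cluster-b", "az-rack1")
azs.add_cluster("cluster-c", "az-rack2")
# cluster-c has the most capacity but sits in az-rack2, so it is never considered.
print(azs.schedule("az-rack1", {"cluster-a": 8, "cluster-b": 32, "cluster-c": 64}, 4))  # prints cluster-b
```

With the standard OpenStack CLI, the AZ preference is passed at boot time through the `--availability-zone` option of `openstack server create`.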

Cinder Availability Zone

Because an availability zone is a form of resource partitioning and placement, the concept also applies to Cinder and Neutron. As with Nova, Cinder AZs can be defined based on location, storage backend type, power, and network layout. Beyond placement, in most Telco use cases Cinder volumes must be attached to a VNF instance. Depending on the storage backend topology, an OpenStack administrator must decide whether cross-AZ Cinder volume attachment is allowed. If the storage backend does not extend across AZs, the cross-AZ attach policy must be set to false.
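The decision rule above can be sketched in two steps: derive the policy from the storage topology, then apply it to each attach request. Upstream Nova exposes this policy as the `cross_az_attach` option in the `[cinder]` section of `nova.conf`; how VIO surfaces that option is not shown here. The backend and AZ names are hypothetical, and the functions are a simplified model rather than Nova's code path.

```python
def backends_span_all_azs(backend_azs: dict[str, set[str]]) -> bool:
    """True only if every storage backend is reachable from every AZ."""
    all_azs = set().union(*backend_azs.values())
    return all(azs == all_azs for azs in backend_azs.values())


def can_attach(instance_az: str, volume_az: str, cross_az_attach: bool) -> bool:
    """Allow an attach if cross-AZ attach is enabled or both sides share an AZ."""
    return cross_az_attach or instance_az == volume_az


# Hypothetical Multi-VC topology: one vSAN datastore per vCenter-based AZ.
topology = {"vsan-az1": {"az1"}, "vsan-az2": {"az2"}}
cross_az_attach = backends_span_all_azs(topology)  # False for this topology
print(can_attach("az1", "az2", cross_az_attach))   # prints False
print(can_attach("az1", "az1", cross_az_attach))   # prints True
```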

Neutron Availability Zone

With NSX-T, transport zones dictate which hosts and VMs can use a particular network. A transport zone does this by limiting which hosts and VMs can map to a logical switch. A transport zone can span one or more host clusters.

An NSX-T Data Center environment can contain one or more transport zones based on your requirements. The overlay transport zone is used by both host transport nodes and NSX Edges. The VLAN transport zone is used by the NSX Edge for its VLAN uplinks. A host can belong to multiple transport zones. A logical switch can belong to only one transport zone.
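The membership rules above can be captured in a small data model: a host may appear in several transport zones, a logical switch references exactly one transport zone, and a VM on a host can map to a switch only if that host is in the switch's transport zone. This is an illustrative model with hypothetical names, not the NSX-T API.

```python
from dataclasses import dataclass, field


@dataclass
class TransportZone:
    name: str
    kind: str  # "OVERLAY" or "VLAN"
    hosts: set[str] = field(default_factory=set)


@dataclass
class LogicalSwitch:
    name: str
    transport_zone: TransportZone  # a single field: a switch belongs to exactly one TZ


def host_can_attach(host: str, switch: LogicalSwitch) -> bool:
    """A VM on `host` can map to `switch` only if the host is in the switch's TZ."""
    return host in switch.transport_zone.hosts


overlay = TransportZone("tz-overlay", "OVERLAY", {"esxi-01", "esxi-02"})
vlan = TransportZone("tz-vlan", "VLAN", {"esxi-01"})  # esxi-01 is in both TZs
app_net = LogicalSwitch("ls-app", overlay)
uplink = LogicalSwitch("ls-uplink", vlan)

print(host_can_attach("esxi-02", app_net))  # True
print(host_can_attach("esxi-02", uplink))   # False: esxi-02 is not in tz-vlan
```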

From an OpenStack Neutron perspective, you can create additional Neutron availability zones with NSX-T Data Center by creating a separate overlay transport zone, VLAN transport zone, DHCP server, and metadata proxy server for each availability zone. Neutron availability zones can share an edge cluster or use separate edge clusters.
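The per-AZ composition described above can be checked with a small validator: the overlay transport zone, VLAN transport zone, DHCP server, and metadata proxy must be unique per Neutron AZ, while the edge cluster may be shared. The field names and object names are hypothetical and do not correspond to a specific NSX-T plugin configuration key.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NeutronAZ:
    name: str
    overlay_tz: str
    vlan_tz: str
    dhcp_server: str
    metadata_proxy: str
    edge_cluster: str  # may be shared between AZs


def validate_azs(azs: list[NeutronAZ]) -> list[str]:
    """Return a message for every per-AZ component reused by another AZ."""
    problems = []
    for attr in ("overlay_tz", "vlan_tz", "dhcp_server", "metadata_proxy"):
        seen: dict[str, str] = {}
        for az in azs:
            value = getattr(az, attr)
            if value in seen:
                problems.append(f"{attr} {value!r} shared by {seen[value]} and {az.name}")
            else:
                seen[value] = az.name
    return problems


azs = [
    NeutronAZ("az1", "tz-overlay-1", "tz-vlan-1", "dhcp-1", "mdproxy-1", "edge-cluster-1"),
    NeutronAZ("az2", "tz-overlay-2", "tz-vlan-2", "dhcp-2", "mdproxy-2", "edge-cluster-1"),
]
print(validate_azs(azs))  # [] because sharing an edge cluster is allowed
```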

In a single edge cluster scenario, external networks and their associated floating IP pools can be shared across AZs. In a multiple edge cluster scenario, create non-overlapping subnet pools and assign a unique subnet to each external network.
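For the multiple edge cluster scenario, the non-overlap requirement can be verified before the external networks are created. This sketch uses Python's standard `ipaddress` module; the network names are hypothetical and the CIDRs come from the 203.0.113.0/24 documentation range.

```python
import ipaddress
from itertools import combinations


def find_overlaps(external_subnets: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of external networks whose subnets overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in external_subnets.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(nets), 2)
        if nets[a].overlaps(nets[b])
    ]


subnets = {
    "ext-net-az1": "203.0.113.0/25",
    "ext-net-az2": "203.0.113.128/25",
}
print(find_overlaps(subnets))  # []

subnets["ext-net-az3"] = "203.0.113.64/26"  # falls inside ext-net-az1
print(find_overlaps(subnets))  # [('ext-net-az1', 'ext-net-az3')]
```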

VIO Availability Design Recommendations

| Design Recommendation | Design Justification | Design Implication |
|---|---|---|
| When using Region as a unit of failure domain, ensure that each region maps to an independent set of SDDC infrastructure. | Regions are distinct API entry points. A failure in one region must not impact the availability of another region. | None |
| When using Region as a unit of failure domain, design your application deployment automation to be region-aware so that it can redirect API requests across regions. | Region-aware application deployment is more cloud native and reduces the need for legacy DR-style backup and restore. | Adds complexity to infrastructure and deployment automation. |
| When using the vCenter Server instance as a unit of Availability Zone (Multi-VC), do not allow cross-AZ Cinder volume attachment. | With Multi-VC, all hardware resources must be available locally to the newly created AZ. Note: Using resources in AZ1 to support AZ2 is not a valid scenario. | None |
| When using Neutron AZ as a unit of failure domain, map each Neutron AZ to a separate Edge cluster. | Different edge clusters ensure that at least one NSX Edge is always available, based on the recommendations outlined in the platform design. | |