The Telco Cloud Platform (TCP) tier comprises the Automation and CaaS components of the Telco Cloud. While the Infrastructure tier focuses on IaaS, the Platform tier focuses on CaaS and the automated deployment of CaaS clusters, CNFs, and VNFs.

The major objectives of the Telco Cloud Platform tier are as follows:

CaaS deployment and life cycle management

On-boarding, instantiation, and life cycle management of VNFs and CNFs

Implementing the container repository

The components of the Telco Cloud Platform tier reside in the management domain created as part of the Telco Cloud Infrastructure.

| Component | Description |
|---|---|
| Telco Cloud Automation (Manager and Control-Plane nodes) | Responsible for creation of Kubernetes clusters, onboarding, and lifecycle management of CNFs, VNFs, and Network Services |
| Telco Cloud Automation (Airgap Server) | Deployed in air-gapped environments to create and customize Kubernetes deployments |
| Harbor | Provides the OCI repository for images and helm charts |
| Workload Domain NSX Cluster | Provides SDN functionality for the management cluster such as overlay networks, VRFs, firewalling, and micro-segmentation. Implemented as a cluster of 3 nodes |
| Aria Automation Orchestrator | Runs custom workflows in different languages |

It is not necessary to deploy NSX as part of Telco Cloud Infrastructure and Telco Cloud Platform. A common NSX Manager can be used for the workload domains.

Telco Cloud Automation Architecture

Telco Cloud Automation provides orchestration and management services for Telco clouds.

TCA-Manager: The Telco Cloud Automation Manager is the heart of the Telco Cloud Platform. It manages CaaS cluster deployments, the Network Function and Network Service catalog and inventory, and connectivity to partner systems (SVNFM and Harbor). The TCA Manager also manages user authentication and role-based access.

TCA-Control Plane: The virtual infrastructure in the Telco edge, aggregation, and core sites is connected using the Telco Cloud Automation-Control Plane (TCA-CP). The TCA-CP provides the infrastructure for placing workloads across clouds using TCA. It supports several types of Virtual Infrastructure Manager (VIM), such as VMware vCenter Server, VMware Cloud Director, VMware Integrated OpenStack (VIO), and Kubernetes. Each TCA-CP node supports only one VIM type.

The TCA Manager connects with TCA-CP to communicate with the VIMs. Both TCA Manager and TCA-CP nodes are deployed as an OVA.

To deploy VNFs, the TCA Manager uses a Control Plane for VMware Cloud Director or VIO, although direct VNF deployments to vCenter are also supported. When deploying Kubernetes, the TCA Manager leverages a Control Plane node configured for vCenter. This node is used for new Tanzu Kubernetes Grid deployments.

A dedicated TCA-CP is required for each non-Kubernetes VIM.

SVNFM: Telco Cloud Automation supports the registration of SOL003-based SVNFMs.

NSX Manager: Telco Cloud Automation communicates with NSX Manager through the VIM layer. A single instance of the NSX Manager can support multiple VIM types.

Aria Automation Orchestrator: Aria Automation Orchestrator registers with TCA-CP and runs customized workflows for VNF and CNF onboarding and day-2 life cycle management.

RabbitMQ: RabbitMQ tracks VMware Cloud Director and VMware Integrated OpenStack notifications. It is not required for Telco Cloud Platform when using Kubernetes-based VIMs only.
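The placement rules in the component descriptions above (one VIM type per TCA-CP node, a dedicated TCA-CP for each non-Kubernetes VIM, and RabbitMQ only when VMware Cloud Director or VIO is present) can be sketched as a small planning helper. This is an illustrative model only: the `Vim` class, the VIM names, and the `tca-cp-<name>` naming scheme are assumptions made for the example and are not part of the Telco Cloud Automation API.

```python
from dataclasses import dataclass
from enum import Enum


class VimType(Enum):
    VCENTER = "vcenter"
    CLOUD_DIRECTOR = "vmware-cloud-director"
    VIO = "vmware-integrated-openstack"
    KUBERNETES = "kubernetes"


@dataclass(frozen=True)
class Vim:
    name: str          # hypothetical site-specific VIM name
    vim_type: VimType


# VIM types whose notifications are tracked through RabbitMQ, per the text above.
RABBITMQ_VIM_TYPES = {VimType.CLOUD_DIRECTOR, VimType.VIO}


def plan_control_planes(vims: list[Vim]) -> dict[str, str]:
    """Assign a dedicated (hypothetically named) TCA-CP to each non-Kubernetes VIM.

    Kubernetes VIMs are skipped because they are reached through a TCA-CP
    that is configured for vCenter rather than through their own node.
    """
    return {
        vim.name: f"tca-cp-{vim.name}"
        for vim in vims
        if vim.vim_type is not VimType.KUBERNETES
    }


def needs_rabbitmq(vims: list[Vim]) -> bool:
    """RabbitMQ is only needed when Cloud Director or VIO is among the VIMs."""
    return any(vim.vim_type in RABBITMQ_VIM_TYPES for vim in vims)


vims = [
    Vim("core-vc01", VimType.VCENTER),
    Vim("core-vcd01", VimType.CLOUD_DIRECTOR),
    Vim("edge-k8s01", VimType.KUBERNETES),
]
plan = plan_control_planes(vims)
print(plan)
print("RabbitMQ required:", needs_rabbitmq(vims))
```

In this example, the two non-Kubernetes VIMs each receive their own TCA-CP entry, and RabbitMQ is flagged as required because a VMware Cloud Director VIM is present.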

Telco Cloud Automation Persona

The following key stakeholders are involved in the end-to-end service management, life cycle management, and operations of the Telco cloud native solution:

| Persona | Role |
|---|---|
| CNF Vendor / Partners | CNF vendors supply HELM charts and container images |
| | Read access to NF Catalog |
| CNF Deployer / Operator | Read access to NF Catalog |
| | Responsible for CNF LCM through Telco Cloud Automation (ability to self-manage CNF) |
| CNF Developer / Operator | Develops CNF CSAR by working with vendors, including defining Dynamic Infrastructure Provisioning requirements. |
| | Maintains CNF catalog |
| | Responsible for CNF LCM through TCA |
| | Updates Harbor with HELM and Container images |
| Tanzu Kubernetes Cluster admin | Kubernetes Cluster Admin for one or more Tanzu Kubernetes clusters |
| | Creates and maintains Tanzu Kubernetes Cluster template. |
| | Deploys Kubernetes clusters to pre-assigned Resource Pool |
| | Assigns Kubernetes clusters to tenants |
| | Assigns Developers and Deployers for CNF deployment through TCA |
| | Works with CNF Developer to supply CNF dependencies such as Container images, OS packages, and so on. |
| | Performs worker node dimensioning |
| | Deploys CNF monitoring/logging tools such as Prometheus and fluentd. |
| | API and CLI access to Kubernetes clusters associated with CNF deployment |
| Telco Cloud Automation Admin / System Admin | Onboards Telco Cloud Automation users and partners |
| | Adds new VIM Infrastructure and associates it with TCA-CP |
| | Infrastructure monitoring through Aria Operations. |
| | Creates and maintains vCenter Resource Pools for Tanzu Kubernetes clusters. |
| | Creates and maintains Tanzu Kubernetes Cluster templates. |
| | Deploys and maintains the Harbor repository |
| | Deploys and maintains Tanzu Kubernetes bootstrap process |
| | Performs TCA, TCA-CP, Harbor, infrastructure upgrades, and infrastructure monitoring |

Tanzu Kubernetes Grid

VMware Tanzu Standard for Telco provisions and performs life cycle management of Tanzu Kubernetes clusters.

A Tanzu Kubernetes cluster is an opinionated installation of the Kubernetes open-source software that is built and supported by VMware. With Tanzu Standard for Telco, administrators provision Tanzu Kubernetes clusters through Telco Cloud Automation and consume them in a declarative manner that is familiar to Kubernetes operators and developers.

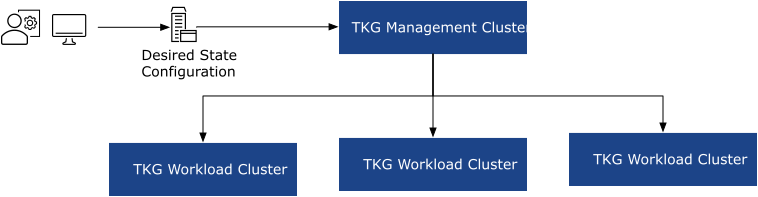

Within the Telco Cloud, the desired state of Tanzu Kubernetes Grid deployments is configured as a cluster template and the template is used to create the initial management cluster through an internal bootstrap. After the management cluster is created, the cluster template is passed to the Tanzu Kubernetes management cluster to instantiate the Tanzu Kubernetes workload clusters using the Cluster-API (CAPI) for vSphere.

The following diagram shows different hosts and components of the Tanzu Kubernetes Grid architecture:

Cluster API takes the declarative, Kubernetes-style APIs used for application deployments and makes similar APIs available for cluster creation, configuration, and management. It uses native Kubernetes manifests and APIs to manage the bootstrapping and lifecycle management (LCM) of Kubernetes clusters.

The Cluster API relies on a pre-defined cluster YAML specification that describes the desired state of the cluster, including attributes such as the VM class and size, the node pools, and the total number of nodes in the Kubernetes cluster.
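To make the idea of a declarative cluster specification concrete, the following sketch models a desired state with a control plane and node pools and derives the total node count from it. The field names (`vm_class`, `replicas`, and so on) and the cluster and pool names are assumptions chosen for illustration. They do not reproduce the actual Cluster API or Tanzu Kubernetes Grid schema.

```python
from dataclasses import dataclass, field


@dataclass
class NodePool:
    name: str
    vm_class: str   # hypothetical sizing label, for example "large"
    replicas: int   # number of worker nodes in this pool


@dataclass
class ClusterSpec:
    """Desired state of one cluster, in the spirit of a declarative YAML spec."""
    name: str
    control_plane_replicas: int
    node_pools: list[NodePool] = field(default_factory=list)

    def total_nodes(self) -> int:
        # Control plane nodes plus the workers of every node pool.
        return self.control_plane_replicas + sum(p.replicas for p in self.node_pools)

    def pool_summary(self) -> dict[str, int]:
        # Worker count per node pool, keyed by pool name.
        return {p.name: p.replicas for p in self.node_pools}


spec = ClusterSpec(
    name="workload-01",
    control_plane_replicas=3,
    node_pools=[
        NodePool("cu-pool", "large", 4),
        NodePool("du-pool", "xlarge", 2),
    ],
)
print(spec.total_nodes())
print(spec.pool_summary())
```

The point of the sketch is that the specification only states what the cluster should look like. How the nodes are created is left to the controllers that consume it.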

Tanzu Kubernetes Grid - Control Plane components

The Kubernetes control plane runs as pods on the Kubernetes Control Plane nodes. The Kubernetes Control Plane consists of the following components:

Etcd: Etcd is a simple, distributed key-value store that stores the Kubernetes cluster configuration, data, API objects, and service discovery details. For security reasons, etcd must be accessible only from the Kubernetes API server.

Kube-API-Server: The Kubernetes API server is the central management entity that receives all API requests for managing Kubernetes objects and resources. The API server serves as the frontend to the cluster and is the only cluster component that communicates with the etcd key-value store.

For redundancy and availability, place a load balancer in front of the control plane nodes. The load balancer performs health checks on the API server so that external clients such as kubectl connect to a healthy API server even when the cluster is degraded.

Kube-Controller-Manager: The Kubernetes controller manager is a daemon that embeds the core control loops shipped with Kubernetes. A control loop is a non-terminating loop that regulates the state of the system. In Kubernetes, a controller is a control loop that watches the shared state of the cluster through the API server and moves the current state to the desired state.

Kube-Scheduler: The Kubernetes scheduler tracks the total resources available in a Kubernetes cluster and the workload allocated to each worker node in the cluster. It watches the API server for newly created Pods that have no node assigned. Based on the operational service requirements, the scheduler assigns each Pod to the node that best fits its resource requirements.
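The control-loop behavior described for the controller manager above can be illustrated with a minimal reconciliation sketch. It is not Kubernetes code: the state is modeled as a plain dictionary of hypothetical workload names to replica counts, and the loop stops once the states match so that the example terminates, whereas a real controller keeps watching indefinitely.

```python
def reconcile(desired: dict[str, int], current: dict[str, int]) -> bool:
    """Move `current` one step toward `desired`; return True if a change was made."""
    for name, want in desired.items():
        have = current.get(name, 0)
        if have < want:
            current[name] = have + 1   # scale up by one replica
            return True
        if have > want:
            current[name] = have - 1   # scale down by one replica
            return True
    for name in list(current):
        if name not in desired:
            del current[name]          # remove workloads that are no longer desired
            return True
    return False


def run_control_loop(desired: dict[str, int], current: dict[str, int],
                     max_iterations: int = 100) -> int:
    """Reconcile until converged (or the iteration cap is hit); return the step count."""
    steps = 0
    while steps < max_iterations and reconcile(desired, current):
        steps += 1
    return steps


desired = {"web": 3, "db": 1}
current = {"web": 1, "cache": 2}
steps = run_control_loop(desired, current)
print(steps, current)
```

Each call to `reconcile` compares the observed state with the desired state and applies a single corrective action, which is the same observe, compare, and act pattern that Kubernetes controllers follow through the API server.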

Virtual Infrastructure Management

Telco Cloud Automation administrators can onboard, view, and manage the entire Telco Cloud through the Telco Cloud Automation console. Details about each cloud such as Cloud Name, Cloud URL, Cloud Type, Tenant Name, Connection Status, and Tags can be displayed as an aggregate list or graphically based on the geographic location.

Telco Cloud Automation administrators use roles, permissions, and tags to provide resource separation for the VIM, Users (Internal or external), and Network Functions.
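One way to picture how roles, permissions, and tags combine to separate resources is the following sketch. It assumes a simple model in which a role grants a set of actions and is limited to resources that carry all of the role's tags. The class names, action names, and tags are hypothetical and do not describe the Telco Cloud Automation permission model in detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str                    # for example "vim" or "network-function"
    tags: frozenset[str]


@dataclass(frozen=True)
class Role:
    name: str
    permissions: frozenset[str]  # actions the role may perform
    tag_filter: frozenset[str]   # a resource must carry all of these tags


def is_allowed(role: Role, action: str, resource: Resource) -> bool:
    """Allow the action only if the role grants it and the resource tags match."""
    return action in role.permissions and role.tag_filter <= resource.tags


edge_vim = Resource("edge-vim-01", "vim", frozenset({"edge", "vendor-a"}))
core_vim = Resource("core-vim-01", "vim", frozenset({"core"}))

deployer = Role(
    name="vendor-a-deployer",
    permissions=frozenset({"read", "instantiate"}),
    tag_filter=frozenset({"vendor-a"}),
)

print(is_allowed(deployer, "instantiate", edge_vim))
print(is_allowed(deployer, "instantiate", core_vim))
print(is_allowed(deployer, "delete", edge_vim))
```

Here the deployer can instantiate on the edge VIM because it carries the `vendor-a` tag, but is denied on the core VIM (tag mismatch) and denied the `delete` action (permission not granted).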

CaaS Subsystem

The CaaS subsystem allows Telco Cloud operators to create and manage Kubernetes clusters for cloud-native 5G and RAN workloads. VMware Telco Cloud Platform uses VMware Tanzu Standard for Telco to create Kubernetes clusters.

Telco Cloud Automation administrators create the Kubernetes management and workload cluster templates to reduce repetitive tasks associated with Kubernetes cluster creation and to standardize cluster sizing and capabilities. A template can include groups of worker nodes defined as node pools, control plane and worker node sizing, container networking, storage class, and CPU manager policies.

Kubernetes clusters can be deployed using the templates or directly from the Telco Cloud Automation UI or API. In addition to the base cluster deployment, Telco Cloud Automation supports various PaaS add-ons that can be deployed during cluster creation or at a later stage.

| Add-on Category | Add-on | Description |
|---|---|---|
| CNI | Antrea | Antrea CNI |
| | Calico | Calico CNI |
| CSI | vSphere-CSI | Allows the use of vSphere datastores for PVs |
| | NFS | NFS Client mounts NFS shares to worker nodes |
| Monitoring | Prometheus | Publishes metrics |
| | Fluent-bit | Formats and publishes logs |
| Networking | Whereabouts | Used for cluster-wide IPAM |
| | Multus | Allows more than a single interface to a pod |
| | NSX ALB / Ingress | Integrates LB or Ingress objects with VMware NSX Advanced Load Balancer |
| System | Cert-Manager | Provisions certificates |
| | Harbor | Integrates harbor add-on to Tanzu Kubernetes clusters |
| | Systemsettings | Used for password and generic logging |
| TCA-Core | nodeconfig | Used as part of dynamic infrastructure provisioning |
| Tools | Velero | Performs backup and restore of Kubernetes namespaces and objects. |

The CaaS subsystem access control is backed by RBAC. The Kubernetes Cluster administrators have full access to Kubernetes clusters, including direct SSH access to Kubernetes nodes and API access through the Kubernetes configuration. CNF developers and deployers can have deployment access to Kubernetes clusters through the TCA console in a restricted role.

Cloud-Native Networking

In Kubernetes networking, each Pod has a unique IP that is shared by all the containers in that Pod. The IP of a Pod is routable from all the other Pods, regardless of the nodes they are on. Kubernetes is agnostic to reachability. L2, L3 or overlay networks can be used as long as the traffic reaches the desired pod on any node.

CNI is a container networking specification adopted by Kubernetes to support pod-to-pod networking. The specification treats a container as a Linux network namespace. The container runtime allocates a network namespace to the container and passes the CNI parameters to the network driver (plug-in). The network driver then attaches the container to a network and reports the assigned IP address back to the container runtime. Multiple plug-ins can run at the same time, with the container joining networks driven by different plug-ins.

Telco workloads require a separation of the control plane and data plane. A strict separation between the Telco traffic and the Kubernetes control plane requires multiple network interfaces to provide service isolation or routing. Supporting workloads that need multiple interfaces in a Pod requires an additional plug-in: a CNI meta plug-in (also called a CNI multiplexer) that attaches multiple interfaces to a Pod.

Primary CNI: The CNI plug-in that serves pod-to-pod networking is called the primary or default CNI. It provides the network interface that every Pod is created with. For network functions, the primary interface is managed by the primary CNI.

Secondary CNI: Each additional network attachment created through the meta plug-in is handled by a secondary CNI. SR-IOV or VDS (Enhanced Data Path) NICs configured for pass-through are managed by secondary CNIs.

While there are several container networking technologies and different ways of deploying them, Telco Cloud and Kubernetes admins want to eliminate manual CNI provisioning and configuration in containerized environments and reduce the overall complexity. Calico or Antrea is often used as the primary CNI plug-in. MACVLAN and host-device can be used as secondary CNIs together with a CNI meta plug-in such as Multus.
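As an illustration of the primary and secondary CNI split, the following sketch builds a Multus `NetworkAttachmentDefinition` for a MACVLAN secondary interface with Whereabouts IPAM, plus a Pod manifest that requests it through the Multus networks annotation. The attachment name, parent interface (`eth1`), address range, and image reference are placeholder assumptions. In a Telco Cloud Automation deployment these objects would typically be driven by the CNF package rather than written by hand.

```python
import json


def macvlan_attachment(name: str, master: str, ip_range: str) -> dict:
    """Build a Multus NetworkAttachmentDefinition for a MACVLAN secondary network."""
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",
        "master": master,      # parent interface on the worker node (assumed name)
        "mode": "bridge",
        "ipam": {"type": "whereabouts", "range": ip_range},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        # Multus expects the CNI configuration as a JSON string.
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_with_secondary_network(pod_name: str, image: str, attachment: str) -> dict:
    """Build a Pod manifest that asks Multus for one extra interface."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": pod_name,
            "annotations": {"k8s.v1.cni.cncf.io/networks": attachment},
        },
        "spec": {"containers": [{"name": pod_name, "image": image}]},
    }


nad = macvlan_attachment("n6-net", "eth1", "192.0.2.0/24")
pod = pod_with_secondary_network("upf-0", "registry.example.com/cnf/upf:1.0", "n6-net")
print(json.dumps(nad, indent=2))
print(json.dumps(pod, indent=2))
```

The Pod still receives its default interface from the primary CNI (for example Antrea or Calico). The annotation only adds the secondary MACVLAN interface on top of it.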

Airgap Server

In the non-air-gapped design, VMware Telco Cloud Automation uses external repositories for Harbor and for the Photon OS packages needed to implement the VM and NodeConfig operators, install new kernel builds, or add packages to the nodes. Internet access is required to pull these additional components.

The Airgap server is a Photon OS VM that is deployed as part of the Telco Cloud. It is registered as a partner system within the platform and is used in air-gapped (internet-restricted) environments.

The airgap server operates in two modes:

Restricted mode: This mode uses a proxy server between the Airgap server and the internet. In this mode, the Airgap server is deployed in the same segment as the Telco Cloud Automation VMs in a one-armed mode design.

Air-gapped mode: In this mode, the airgap server is created and migrated to the air-gapped environment. The airgap server has no external connectivity requirements. You can upgrade the airgap server through a new Airgap deployment or an upgrade patch.

Harbor

5G (Core and RAN) consists of CNFs delivered as container images and Helm charts by 5G network vendors. Container images and Helm charts must be stored in an OCI-compliant registry that is highly available and easily accessible. Public or cloud-based registries often lack the security and compliance features needed to operate and maintain carrier-class deployments. Harbor addresses these challenges by providing trust, compliance, performance, and interoperability.

VNF and CNF Management

Virtual Network Function (VNF) and Cloud-native Network Function (CNF) management encompasses onboarding, designing, and publishing of the SOL001-compliant CSAR packages to the TCA Network Function catalog. Telco Cloud Automation maintains the CSAR configuration integrity and provides a Network Function Designer for CNF developers to update and release new NF iterations in the Network Function catalog.

Network Function Designer is a visual design tool within VMware Telco Cloud Automation. It generates SOL001-compliant TOSCA descriptors based on the 5G Core or RAN deployment requirements. A TOSCA descriptor consists of CNF instantiation parameters and operational characteristics for life cycle management.

Network functions from each vendor have their unique infrastructure requirements. A CNF developer specifies infrastructure requirements within the CSAR package to instantiate and operate a 5G Core or RAN CNF.

VMware Telco Cloud Automation customizes worker node configurations based on the application requirements by using the VMware Telco NodeConfig Operator. The NodeConfig and VMconfig Operators are Kubernetes operators that manage the node OS, VM customization, and performance tuning. Instead of static resource pre-allocation during the Kubernetes cluster instantiation, the operators defer resource binding of expensive network resources such as SR-IOV VFs, DPDK package installation, and Huge Pages to the CNF instantiation. This allows the control and configuration of each cluster to be bound to the application requirements. This customization is automated by Telco Cloud Automation in a zero-touch process.
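The late-binding idea described above, in which expensive node resources are configured only when a CNF that needs them is instantiated, can be sketched as follows. The field names (`hugepages_1g`, `sriov_vfs`, `packages`) and the two classes are hypothetical stand-ins. They are not the CSAR schema or the NodeConfig Operator API.

```python
from dataclasses import dataclass, field


@dataclass
class NodePoolState:
    """Current customization of a node pool (hypothetical fields)."""
    hugepages_1g: int = 0
    sriov_vfs: int = 0
    packages: set[str] = field(default_factory=set)


@dataclass(frozen=True)
class InfraRequirements:
    """Infrastructure requirements declared by a CNF (hypothetical fields)."""
    hugepages_1g: int = 0
    sriov_vfs: int = 0
    packages: frozenset[str] = frozenset()


def bind_at_instantiation(pool: NodePoolState, req: InfraRequirements) -> list[str]:
    """Apply only what the CNF needs and the pool lacks; return the changes made."""
    changes = []
    if req.hugepages_1g > pool.hugepages_1g:
        changes.append(f"hugepages_1g: {pool.hugepages_1g} -> {req.hugepages_1g}")
        pool.hugepages_1g = req.hugepages_1g
    if req.sriov_vfs > pool.sriov_vfs:
        changes.append(f"sriov_vfs: {pool.sriov_vfs} -> {req.sriov_vfs}")
        pool.sriov_vfs = req.sriov_vfs
    for pkg in sorted(req.packages - pool.packages):
        changes.append(f"install package: {pkg}")
    pool.packages |= req.packages
    return changes


pool = NodePoolState()  # cluster created with no pre-allocated resources
req = InfraRequirements(hugepages_1g=8, sriov_vfs=2, packages=frozenset({"dpdk"}))

first = bind_at_instantiation(pool, req)
second = bind_at_instantiation(pool, req)  # nothing left to change
print(first)
print(second)
```

The second call returns an empty list because the pool already satisfies the requirements, which mirrors the intent that node customization follows application needs rather than being pre-allocated at cluster creation.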

Access policies for CNF Catalogs and Inventory are based on roles and permissions. TCA administrators create custom policies in Telco Cloud Automation to offer self-managed capabilities required by the CNF developers and deployers.