VMware Telco Cloud Automation allows Telco Cloud Administrators to perform various activities around Network Functions and Services.

VMware Telco Cloud Automation (TCA) is a unified orchestrator. It onboards and orchestrates workloads seamlessly from VM and container-based infrastructures for an adaptive service-delivery foundation.

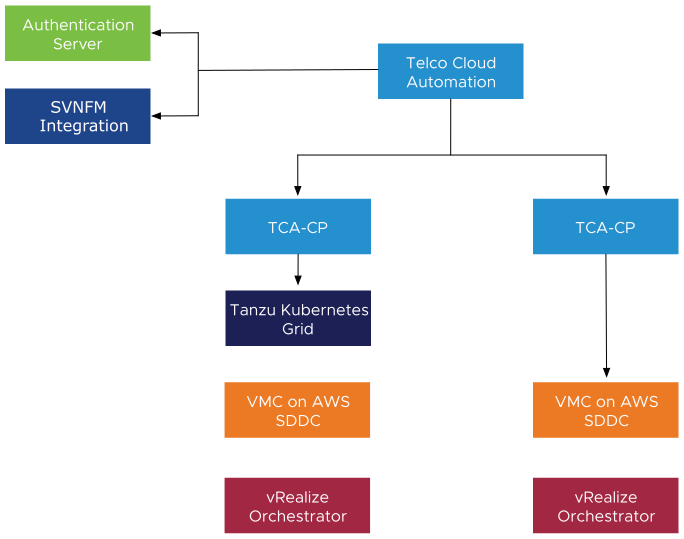

TCA Architecture

TCA provides orchestration and management services for Telco clouds.

- TCA-Control Plane

  The VMC on AWS SDDC is connected to TCA via the TCA-Control Plane (TCA-CP). TCA-CP provides infrastructure for placing workloads. TCA connects with TCA-CP to communicate with the SDDCs and Tanzu Kubernetes clusters. TCA-CP is deployed as an OVA. A dedicated instance of TCA-CP is required for each SDDC.

- Authentication Server

  The SDDC vCenter Server is used to authenticate users. Users can be from external identity providers such as LDAP or Active Directory.

- SVNFM

  Any SOL 003 SVNFM can be registered with TCA.

- vRealize Orchestrator

  vRealize Orchestrator (vRO) registers with TCA-CP and is used to run customized workflows for CNF onboarding and day 2 life cycle management.
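The requirement that each SDDC has its own dedicated TCA-CP instance can be expressed as a simple inventory check. The following is a minimal sketch only: the function name, the dictionary layout, and the appliance and SDDC names are hypothetical and are not part of any TCA API.

```python
def find_mapping_violations(tca_cp_to_sddc: dict[str, str], sddcs: list[str]) -> list[str]:
    """Report SDDCs that do not have exactly one dedicated TCA-CP.

    tca_cp_to_sddc maps a (hypothetical) TCA-CP appliance name to the SDDC it serves.
    """
    problems = []
    for sddc in sddcs:
        owners = sorted(cp for cp, target in tca_cp_to_sddc.items() if target == sddc)
        if not owners:
            problems.append(f"{sddc}: no TCA-CP deployed")
        elif len(owners) > 1:
            problems.append(f"{sddc}: multiple TCA-CP instances {owners}")
    return problems


# Illustrative inventory with one correct pairing, one duplicate, and one gap.
inventory = {
    "tca-cp-01": "sddc-east",
    "tca-cp-02": "sddc-west",
    "tca-cp-03": "sddc-west",
}
for problem in find_mapping_violations(inventory, ["sddc-east", "sddc-west", "sddc-south"]):
    print(problem)
```

Because the inventory is keyed by TCA-CP name, each appliance can point to only one SDDC, so the check only needs to look for SDDCs with zero or several appliances.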

| Design Decision | Design Justification | Design Implication |
|---|---|---|
| Create a new Resource Pool for the TCA and vRO appliances with a reservation of 12 CPUs and 36 GB of RAM. Note: CPU reservations are specified in MHz or GHz. To reserve 12 CPUs, multiply the physical ESXi host's CPU clock speed by 12. | Ensures that the TCA and vRO appliances can continue to run and manage the environment, even under contention. | Reservations reserve capacity even when it is not used. |
| Deploy a single instance of the TCA manager to manage all TCA-CP endpoints. | | None. |
| Deploy a TCA-CP node for each SDDC. | TCA-CP has a one-to-one mapping with vCenter Server, and each SDDC is managed by a dedicated vCenter Server. | Can lead to a large number of TCA-CP appliances to manage. |
| Deploy a vRealize Orchestrator that is shared across all TCA-CP and SDDC pairings. | A consolidated vRO deployment reduces the number of vRO nodes to deploy and manage. | Requires vRO to be highly available if multiple TCA-CP endpoints depend on a shared deployment. |
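The CPU reservation note in the first design decision is a simple multiplication. As a worked sketch, assuming a host with a 2.6 GHz (2600 MHz) clock speed, which is an illustrative value and not a figure from this design:

```python
def cpu_reservation_mhz(cpu_count: int, host_clock_mhz: int) -> int:
    """Return the resource pool CPU reservation in MHz for cpu_count CPUs."""
    if cpu_count <= 0 or host_clock_mhz <= 0:
        raise ValueError("cpu_count and host_clock_mhz must be positive")
    return cpu_count * host_clock_mhz


# Assumed example host: 2600 MHz per physical core.
reservation = cpu_reservation_mhz(12, 2600)
print(f"{reservation} MHz ({reservation / 1000:.1f} GHz)")
```

Substitute the actual clock speed of the ESXi hosts in the target cluster when sizing the reservation.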

vRealize Orchestrator uses vCenter Server for authentication. Because VMC on AWS is a managed service, users do not have the permissions required to register vRealize Orchestrator with the vCenter Server managed by VMC. To work around this issue, deploy a standalone vCenter Server as a user workload and configure vRealize Orchestrator to use it as its authentication source.

CaaS Infrastructure

The CaaS subsystem allows Telco Cloud Platform operators to create and manage Kubernetes clusters and cloud-native workloads. VMware Telco Cloud Platform uses VMware Tanzu Standard for Telco to create Kubernetes clusters.

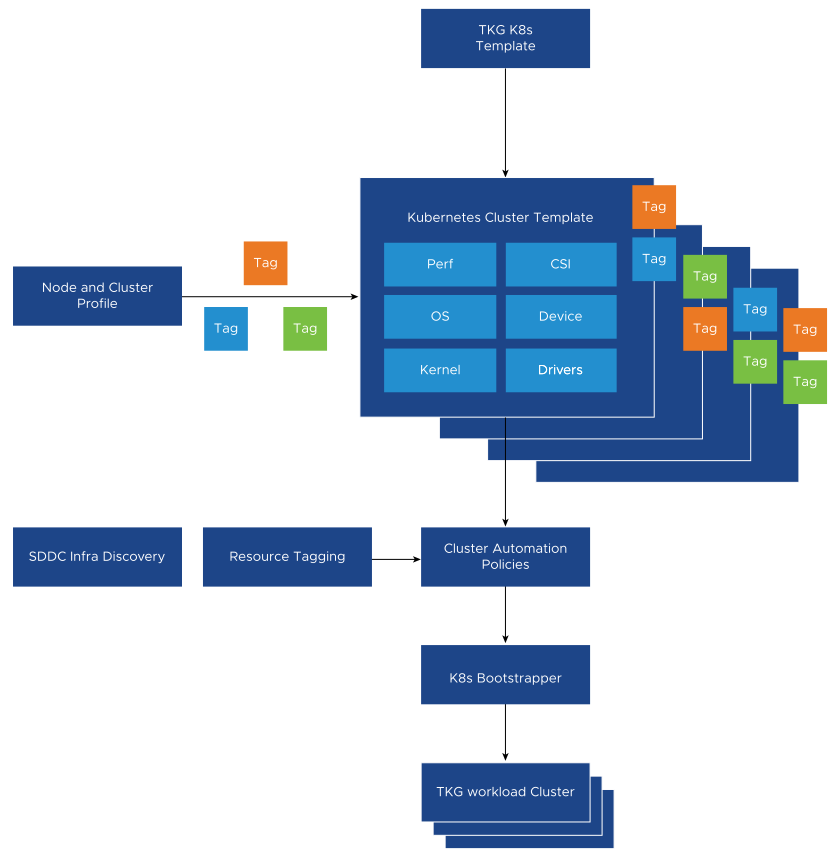

Using VMware Telco Cloud Automation, TCA admins can create Telco management and workload cluster templates to reduce the repetitive parts of Kubernetes cluster creation while standardizing cluster sizing and capabilities. A typical template can include the grouping of worker nodes into node pools, control plane and worker node sizing, container networking, storage class, and CPU affinity policies.
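To make the contents of a template concrete, the following sketch models the elements listed above as plain Python data classes. It is an illustration only: the class names, field names, and values are assumptions and do not reflect the actual TCA template schema.

```python
from dataclasses import dataclass, field


@dataclass
class NodePool:
    """Hypothetical node pool: a group of identically sized nodes."""
    name: str
    replicas: int
    cpu: int
    memory_gb: int
    labels: dict[str, str] = field(default_factory=dict)
    cpu_affinity_policy: str = "none"  # illustrative value, not a TCA setting


@dataclass
class ClusterTemplate:
    """Hypothetical cluster template covering the elements named in the text."""
    name: str
    cluster_type: str  # "management" or "workload"
    control_plane: NodePool
    worker_pools: list[NodePool]
    cni: list[str]
    storage_class: str

    def total_worker_cpu(self) -> int:
        """Sum of CPUs across all worker nodes in every pool."""
        return sum(pool.replicas * pool.cpu for pool in self.worker_pools)


template = ClusterTemplate(
    name="workload-small",
    cluster_type="workload",
    control_plane=NodePool("control-plane", replicas=3, cpu=4, memory_gb=16),
    worker_pools=[
        NodePool("general", replicas=3, cpu=8, memory_gb=32, labels={"profile": "general"}),
        NodePool("dataplane", replicas=2, cpu=16, memory_gb=64,
                 labels={"profile": "dataplane"}, cpu_affinity_policy="static"),
    ],
    cni=["primary-cni", "multus"],  # placeholder CNI names
    storage_class="default",
)
print(template.total_worker_cpu())
```

Capturing sizing in one structure like this is what lets a template standardize clusters: every cluster deployed from it inherits the same pools, sizes, and policies.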

After the TCA Admin publishes the Kubernetes Cluster templates, the TKG admin can consume those templates by deploying and managing various types of Kubernetes clusters.

The CaaS subsystem access control is fully backed by RBAC. The TKG admins have full access to Kubernetes clusters under their management, including direct console access to Kubernetes nodes and API. CNF developers and deployers can gain deployment access to the Kubernetes cluster through the TCA console.

| Design Decision | Design Justification | Design Implication |
|---|---|---|
| When creating the Tanzu Standard for Telco Management Cluster template, define a single network label for all nodes across the cluster. | Tanzu Standard for Telco management cluster nodes require only a single NIC per node. | None. |
| Create unique Kubernetes Cluster templates for each required system profile. | Cluster templates serve as a blueprint for Kubernetes cluster deployments. They minimize repetitive tasks, enforce best practices, and define guard rails for infrastructure management. | Kubernetes templates must be maintained to align with the latest CNF requirements. |
| When creating workload Cluster templates, define only the network labels required for Tanzu Standard for Telco management. | | None. |
| When creating workload Cluster templates, enable Multus CNI for clusters that host Pods requiring multiple NICs. | | Multus is an upstream plugin and follows the community support model. |
| When defining workload and management Cluster templates, create a single node pool for the Kubernetes Control Plane nodes. | TCA supports only a single Control Plane node group per cluster. | None. |
| When defining a workload Cluster template for a cluster that is designed to host CNFs with different performance profiles, create a separate node pool for each profile. Define unique node labels to distinguish node members from other node pools. | | Too many node pools might lead to resource underutilization. |
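Several of the design decisions above can be checked mechanically before a template is published. The sketch below is one possible way to do that, assuming a template is represented as a plain dictionary. The keys (`node_pools`, `role`, `labels`, `cni`, `needs_multi_nic_pods`) are hypothetical and are not the TCA template format.

```python
def check_workload_template(template: dict) -> list[str]:
    """Return findings where a (hypothetical) template dict deviates from the design decisions."""
    findings = []
    pools = template.get("node_pools", [])

    # Decision: a single node pool for the Kubernetes Control Plane nodes.
    control_plane = [p for p in pools if p.get("role") == "control-plane"]
    if len(control_plane) != 1:
        findings.append(f"expected 1 control plane node pool, found {len(control_plane)}")

    # Decision: unique node labels distinguish worker node pools from each other.
    workers = [p for p in pools if p.get("role") == "worker"]
    if len(workers) > 1:
        seen = {}
        for pool in workers:
            key = tuple(sorted(pool.get("labels", {}).items()))
            if not key:
                findings.append(f"worker pool {pool['name']!r} has no node labels")
            elif key in seen:
                findings.append(f"worker pools {seen[key]!r} and {pool['name']!r} share identical labels")
            else:
                seen[key] = pool["name"]

    # Decision: enable Multus CNI when Pods require multiple NICs.
    if template.get("needs_multi_nic_pods") and "multus" not in template.get("cni", []):
        findings.append("Multus CNI is not enabled but Pods require multiple NICs")
    return findings


candidate = {
    "cni": ["primary-cni"],  # placeholder CNI name
    "needs_multi_nic_pods": True,
    "node_pools": [
        {"name": "cp", "role": "control-plane"},
        {"name": "np-1", "role": "worker", "labels": {"profile": "dpdk"}},
        {"name": "np-2", "role": "worker", "labels": {"profile": "dpdk"}},
    ],
}
for finding in check_workload_template(candidate):
    print(finding)
```

The check does not limit the number of node pools. Whether a given count causes underutilization depends on the workloads and is left to the designer.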

CNF Management Overview

Cloud-native Network Function (CNF) management encompasses the onboarding, design, and publishing of SOL001-compliant CSAR packages to the TCA Network Function catalog. TCA maintains the CSAR configuration integrity and provides a Network Function Designer that CNF developers use to update and release newer iterations in the Network Function catalog.

Network Function Designer is a visual design tool within VMware Telco Cloud Automation. It generates SOL001-compliant TOSCA descriptors based on the deployment requirements. A TOSCA descriptor consists of instantiation parameters and operational behaviors of the CNF for life cycle management.

Network functions from different vendors have their own unique sets of infrastructure requirements. A CNF developer can include in the CSAR package the infrastructure requirements needed to instantiate and operate a CNF. VMware Telco Cloud Automation attempts to customize the worker node configuration based on those requirements through the VMware Telco NodeConfig Operator. The NodeConfig Operator is a Kubernetes operator that handles node OS customization, performance tuning, and upgrades. Instead of statically pre-allocating resources during Kubernetes cluster instantiation, the NodeConfig Operator defers resource binding to CNF instantiation. When new workloads are instantiated through TCA, TCA automatically assigns more resources to the Kubernetes cluster to accommodate them.
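The idea of deferring resource binding to CNF instantiation can be illustrated with a toy model. This is not how the NodeConfig Operator is implemented. The class, function, and requirement keys below are invented for illustration, and conflict handling between CNFs is deliberately omitted.

```python
from dataclasses import dataclass, field


@dataclass
class NodePoolState:
    """Toy model of a node pool and the customizations bound to it so far."""
    name: str
    applied: dict[str, str] = field(default_factory=dict)


def instantiate_cnf(pool: NodePoolState, requirements: dict[str, str]) -> dict[str, str]:
    """Bind only the requirements the pool does not already satisfy and return them.

    A differing value simply overwrites the old one; real conflict handling is out of scope.
    """
    delta = {key: value for key, value in requirements.items() if pool.applied.get(key) != value}
    pool.applied.update(delta)
    return delta


# The pool starts with nothing pre-allocated; each CNF adds what it needs.
pool = NodePoolState("np-dataplane")
first = instantiate_cnf(pool, {"hugepages": "1G", "kernel": "realtime"})
second = instantiate_cnf(pool, {"hugepages": "1G", "sriov_vfs": "2"})
print(first)
print(second)
```

In this model the second CNF only triggers the one customization that the first CNF had not already bound, which is the benefit of late binding over pre-allocating every possible resource at cluster creation.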

Access policies for CNF Catalogs and Inventory are role- and permission-based. Custom policies can be created in TCA to offer the self-managed capabilities required by CNF Developers and Deployers.