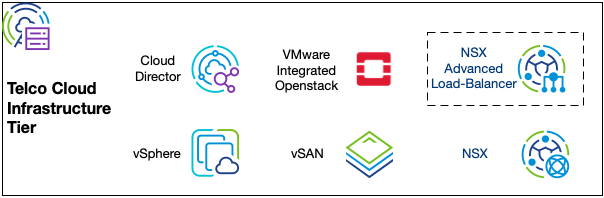

The Telco Cloud Infrastructure (TCI) Tier is the foundation for the Telco Cloud and is used by all Telco Cloud derivatives including Telco Cloud Infrastructure, Telco Cloud Platform 5G Core, and Telco Cloud Platform RAN.

The Telco Cloud Infrastructure Tier focuses on the following major objectives:

- Provide the Software Defined Infrastructure
- Provide the Software Defined Storage
- Provide the Software Defined Networking
- Implement the Virtual Infrastructure Manager (VIM)

The physical tier focuses on infrastructure (servers and network switches), while the infrastructure tier focuses on the applications that form the Telco Cloud foundations.

In the Telco Cloud infrastructure tier, access to the underlying physical infrastructure is controlled and allocated to the management and network function workloads. The Infrastructure tier consists of hypervisors on the physical hosts and the hypervisor management components across the virtual management layer, network and storage layers, business continuity and security areas.

The Infrastructure tier is divided into pods, which are classified as domains (such as the Management domain and the Compute domain).

Management Pod

The Management pod is crucial for the day-to-day operations and observability capabilities of the Telco Cloud. The Management pod hosts the management and operational applications from all tiers of the Telco Cloud. Applications such as Telco Cloud Automation, which is part of the Platform tier, reside in the management domain.

The following table lists the components associated with the entire Telco Cloud. Some components are specific to Telco Cloud Infrastructure and VNF deployments (such as VMware Cloud Director). Other components are exclusive to Telco Cloud Platform for 5G Core and RAN stacks and CNF deployments (such as VMware Telco Cloud Automation and its associated components).

| Component | Description |
|---|---|
| Management vCenter Server | Controls the management workload domain |
| Resource vCenter Servers | Controls one or more workload domains |
| Management NSX Cluster | Provides SDN functionality for the management cluster such as overlay networks, VRFs, firewalling, and micro-segmentation. Implemented as a cluster of 3 nodes |
| Resource NSX Clusters | Provides SDN functionality for one or more resource domains such as overlay networks, VRFs, firewalling, and micro-segmentation. Implemented as a cluster of 3 nodes |
| VMware Cloud Director Cells (VIM) | Provides tenancy and control for VNF based workloads |
| VMware Integrated OpenStack (VIM) | Provides tenancy and control for VNF based workloads |
| NSX Advanced Load Balancer management and edge nodes | Load Balancer controller and service edges provide L4 load balancing and L7 ingress services |
| Aria Operations Cluster | Collects metrics and determines the health of the Telco Cloud |
| Aria Operations for Logs Cluster | Collects logs for troubleshooting the Telco Cloud |
| VMware Telco Cloud Manager and Control-Plane Nodes | ETSI SOL-based NFVO, G-VNFM, and CaaS management platform are used to design and orchestrate the deployment of Kubernetes clusters and onboard / lifecycle manage Cloud-Native Network Functions |
| Aria Operations for Networks | Collects multi-tier networking metric and flow telemetry for network-level troubleshooting |
| VMware Site Recovery Manager | Facilitates implementation of BCDR plans |
| Aria Automation Orchestrator | Runs custom workflows in different programming or scripting languages |
| VMware Telco Cloud Service Assurance | Performs monitoring and closed-loop remediation for 5G Core and RAN deployments |
| Telco Cloud Automation Airgap servers | Facilitates Tanzu Kubernetes Grid deployments in air-gapped environments with no Internet connectivity |

For architectural reasons, some deployments might choose to deploy specific components outside of the Management cluster. This approach can be used for a distributed management domain as long as the required constraints are met, that is, a latency of no more than 150 ms between the ESXi hosts and the various management components.

Each component has its own latency and connectivity constraints. Additional components can be hosted in the Management cluster, for example, the RabbitMQ or NFS VMs used by VMware Cloud Director. Additional elements such as a directory service and NTP can also be hosted in the management domain if required.

To ensure a responsive management domain, do not oversubscribe the management domain in terms of CPU or memory. Sizing must also account for management domain failover, so that all components can run within a single management domain if one management location fails.
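The sizing rule above can be sketched as a simple capacity check. This is a minimal illustration, not a VMware sizing tool: the `Location` model, the function name, and the capacity figures are all hypothetical, and the check assumes capacity and demand can each be summarized as a single CPU and memory number.

```python
from dataclasses import dataclass


@dataclass
class Location:
    """Usable management capacity at one site or availability zone (hypothetical model)."""
    name: str
    cpu_ghz: int
    memory_gb: int


def fits_after_any_single_failure(locations: list[Location],
                                  demand_cpu_ghz: int,
                                  demand_memory_gb: int) -> bool:
    """Return True if the full management demand still fits, without
    oversubscription, after any one location is lost."""
    if not locations:
        return False
    total_cpu = sum(loc.cpu_ghz for loc in locations)
    total_mem = sum(loc.memory_gb for loc in locations)
    return all(
        total_cpu - loc.cpu_ghz >= demand_cpu_ghz
        and total_mem - loc.memory_gb >= demand_memory_gb
        for loc in locations
    )


# Illustrative numbers only.
sites = [Location("site-a", 200, 1024), Location("site-b", 200, 1024)]
print(fits_after_any_single_failure(sites, 150, 768))  # True
print(fits_after_any_single_failure(sites, 250, 768))  # False
```

With a single location the check always fails for non-zero demand, which matches the intent: failover needs somewhere to fail over to.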

The management domain can be deployed in a single availability zone (single rack), across multiple availability zones, and across regions in an active/active or active/standby site. The configuration varies according to the availability and failover requirements.

Workload / Compute Pods

The compute pods or far/near-edge pods host the network functions. Some additional components from the management domain can also be placed in the workload domain, for example, the Cloud Proxies that Aria Operations uses for remote data collection.

The workload domain is derived from one or more compute pods. A compute pod is synonymous with a vSphere cluster. The pod can include a minimum of two hosts (four when using vSAN) and a maximum of 96 hosts (64 when using vSAN). The compute pod deployment in a workload domain is aligned with a rack.

Configuration maximums can change. For information about current maximums, see VMware Configuration Maximums.
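As a minimal sketch, the pod size limits quoted above can be encoded as a validation helper. The function name and structure are illustrative assumptions, the limits are copied from this section and may differ in current releases, and the 2-node vSAN option for far edge sites is deliberately not modeled.

```python
# Host-count limits quoted in this section, keyed by "uses vSAN".
# These values can change; check the current configuration maximums.
MIN_HOSTS = {False: 2, True: 4}
MAX_HOSTS = {False: 96, True: 64}


def check_pod_size(host_count: int, uses_vsan: bool) -> list[str]:
    """Return a list of problems; an empty list means the size is within limits."""
    problems = []
    if host_count < MIN_HOSTS[uses_vsan]:
        problems.append(
            f"{host_count} hosts is below the minimum of {MIN_HOSTS[uses_vsan]}"
        )
    if host_count > MAX_HOSTS[uses_vsan]:
        problems.append(
            f"{host_count} hosts is above the maximum of {MAX_HOSTS[uses_vsan]}"
        )
    return problems


print(check_pod_size(16, uses_vsan=True))   # []
print(check_pod_size(96, uses_vsan=True))   # ['96 hosts is above the maximum of 64']
```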

The only distinction between compute pods and far/near-edge pods is the pod size. The far/near edge pods are smaller and distributed throughout the network, whereas the compute pods are larger and co-located within a single data center.

In addition to the pod, the RAN environment is a combination of far/near edge pods and single-host cell sites.

Far edge sites can deploy a 2-node vSAN with an external witness for small vSAN-based deployments, but this configuration is not considered for compute workload pods.

The distribution and quantity of cell sites are higher than those of far/near-edge or compute pods. Due to factors such as cost, power, cooling, and space, the cell site has fewer active components (single servers) in a restricted environment.

Each workload domain can host up to 2,500 ESXi hosts and 40,000 VMs. The hosts can be arranged in any combination of compute or far/near-edge pods (clusters) or individual cell sites.

Cell sites are added as standalone hosts in vSphere, and they do not have cluster features such as HA, DRS, and vSAN.

To maintain room for growth and to avoid overloading a component, do not plan to use more than 75-80% of any component's configuration maximum.
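Applied to the workload domain maximums quoted above (2,500 ESXi hosts and 40,000 VMs), the 75-80% guidance works out as follows. This is a small arithmetic sketch; the helper names are illustrative, and the maximums should be replaced with current values.

```python
# Workload domain maximums as quoted in this section (subject to change).
HOST_MAX = 2500
VM_MAX = 40000


def planning_ceiling(maximum: int, percent: int = 75) -> int:
    """Largest count that stays within the given percentage of a maximum."""
    return maximum * percent // 100


def within_headroom(hosts: int, vms: int, percent: int = 75) -> bool:
    """True if both counts stay at or below the planning ceiling."""
    return (hosts <= planning_ceiling(HOST_MAX, percent)
            and vms <= planning_ceiling(VM_MAX, percent))


print(planning_ceiling(HOST_MAX, 75), planning_ceiling(HOST_MAX, 80))  # 1875 2000
print(planning_ceiling(VM_MAX, 75), planning_ceiling(VM_MAX, 80))      # 30000 32000
print(within_headroom(hosts=1900, vms=25000, percent=75))              # False
```

In other words, a workload domain planned to this guidance tops out at roughly 1,875 to 2,000 hosts and 30,000 to 32,000 VMs.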

The Edge pod is deployed as an additional component in the workload domain. The Edge pod provides North/South routing from the Telco Cloud to the IP Core and other external networks.

The deployment architecture of an edge pod is similar to the workload domain pods when using ESXi as the hypervisor. This edge pod is different from NSX Bare Metal edge pods.

Virtual Infrastructure Management

The Telco Cloud Infrastructure tier includes a common resource orchestration platform called the Virtual Infrastructure Manager (VIM) for traditional VNF workloads. The two types of VIM are as follows:

- VMware Cloud Director (CD)
- VMware Integrated OpenStack (VIO)

The VIM interfaces with other components of the Telco Cloud Infrastructure (vSphere, NSX) to provide a single interface for managing VNF deployments.

VMware Cloud Director

VMware Cloud Director is used for the cloud automation plane. It supports, pools, and abstracts the virtualization platform in terms of virtual data centers. It provides multi-tenancy features and self-service access for tenants through a native graphical user interface or API. The API allows programmable access for both tenant consumption and the provider for cloud management.

The cloud architecture contains management components deployed in the management cluster and resource groups for hosting the tenant workloads. Some of the reasons for the separation of the management and compute resources include:

- Different SLAs such as resource reservations, availability, and performance for user plane and control plane workloads
- Separation of responsibilities between the CSP and NF providers
- Consistent management and scaling of resource groups

The management cluster runs the cloud management components, while vSphere resource pools are used as independent units of compute within the workload domains.

VMware Cloud Director is deployed as one or more cells in the management domain. Some of the common terminologies used in VMware Cloud Director include:

Catalog is a repository of vApp / VM templates and media available to tenants for VNF deployment. Catalogs can be local to an Organization (Tenant) or shared across Organizations.

External Networks provide the egress connectivity for Network Functions. External networks can be VLAN-backed port groups or NSX networks.

Network Pool is a collection of VM networks that can be consumed by the VNFs. Network pools can be Geneve or VLAN / Port-Group backed for Telco Cloud.

Organization is a unit of tenancy within VMware Cloud Director. An organization represents a logical domain and encompasses its own security, networking, access control, network catalogs and resources for consumption by network functions.

Organization Administrator manages users, resources, services, and policy in an organization.

Organization Virtual Data Center (OrgVDC) is a set of compute, storage and networking resources provided for the deployment of a Network Function. Different methods exist for allocating guaranteed resources to the OrgVDC to ensure that appropriate resources are allocated to the Network Functions.

Organization VDC Networks are networking resources that are available within an organization. They can be isolated to a single organization or shared across multiple organizations. Different types of Organization VDC networks are Routed, Isolated, Direct, or Imported network segments.

Provider Virtual Data Center (pVDC) is an aggregate set of resources from a single VMware vCenter. pVDC contains multiple resource pools or vSphere clusters and datastores. OrgVDCs are carved out of the aggregate resources provided by the pVDCs.

vApp is a container for one or more VMs and their connectivity. vApp is a common unit of deployment into an OrgVDC from within VMware Cloud Director.
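The relationship between a pVDC and the OrgVDCs carved out of it can be pictured with a small data model. This is a conceptual sketch only: the class and field names are hypothetical and are not the VMware Cloud Director API, and it treats every OrgVDC as a fully guaranteed allocation rather than modeling Cloud Director's actual allocation methods.

```python
from dataclasses import dataclass, field


@dataclass
class OrgVDC:
    """Resources provided to one Organization for deploying Network Functions."""
    name: str
    organization: str
    cpu_ghz: int
    memory_gb: int


@dataclass
class ProviderVDC:
    """Aggregate resources from a single vCenter (clusters or resource pools)."""
    name: str
    vcenter: str
    cpu_ghz: int
    memory_gb: int
    org_vdcs: list[OrgVDC] = field(default_factory=list)

    def remaining(self) -> tuple[int, int]:
        """CPU and memory not yet handed out to any OrgVDC."""
        used_cpu = sum(o.cpu_ghz for o in self.org_vdcs)
        used_mem = sum(o.memory_gb for o in self.org_vdcs)
        return self.cpu_ghz - used_cpu, self.memory_gb - used_mem

    def carve(self, org_vdc: OrgVDC) -> None:
        """Allocate an OrgVDC, refusing requests that exceed what is left."""
        free_cpu, free_mem = self.remaining()
        if org_vdc.cpu_ghz > free_cpu or org_vdc.memory_gb > free_mem:
            raise ValueError(f"{self.name} cannot fit {org_vdc.name}")
        self.org_vdcs.append(org_vdc)


pvdc = ProviderVDC("pvdc-01", "resource-vcenter-01", cpu_ghz=500, memory_gb=4096)
pvdc.carve(OrgVDC("ims-vdc", "tenant-a", cpu_ghz=200, memory_gb=1024))
print(pvdc.remaining())  # (300, 3072)
```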

VMware Integrated OpenStack (VIO)

VMware Integrated OpenStack integrates with the vCenter Server and NSX components to form a single, open API-based programmable interface.

OpenStack is a cloud framework for creating an Infrastructure-as-a-Service (IaaS) cloud. It provides cloud-style APIs and a plug-in model that activates virtual infrastructure technologies. OpenStack does not provide the virtualization technologies itself; instead, it leverages the underlying hypervisor, networking, and storage from different vendors.

VMware Integrated OpenStack is a VMware production-grade OpenStack that consumes industry-leading VMware technologies. It leverages an existing VMware vSphere installation to simplify installation, upgrade, operations, monitoring, and so on. VMware Integrated OpenStack is OpenStack-powered and is validated to provide API compatibility for OpenStack core services.

| OpenStack Core Service | OpenStack Project | Coverage |
|---|---|---|
| Block Storage API and extensions | Cinder | Full |
| Compute Service API and extensions | Nova | Full |
| Identity Service API and extensions | Keystone | Full |
| Image Service API | Glance | Full |
| Networking API and extensions | Neutron | Full |
| Object Store API and extension | Swift | Tech Preview |
| Load Balancer | Octavia | Full |
| Metering and Data Collection Service API | Ceilometer (Aodh, Panko, Gnocchi) | Full |
| Key Manager Service | Barbican | Full |

The VMware Integrated OpenStack architecture aligns with the pod/domain architecture:

- Management Pod: OpenStack Control Plane and OpenStack Life Cycle Manager are deployed in the Management Pod.
- Edge Pod: OpenStack Neutron, DHCP, NAT, Metadata Proxy services reside in the Edge Pod.
- Compute Pod: Tenant VMs and VNFs provisioned by OpenStack reside in the Compute Pod.

The core services of VMware Integrated OpenStack run as containers in an internally deployed VMware Tanzu Kubernetes Grid cluster. This cluster is deployed using the VIO Lifecycle Manager on top of Photon OS VMs.

In a production-grade environment, the deployment of VMware Integrated OpenStack consists of:

Kubernetes Control Plane VMs are responsible for Life Cycle Management (LCM) of the VIO control plane. The control plane is responsible for the Kubernetes control processes, the Helm charts, the cluster API interface for Kubernetes LCM, and the VIO Lifecycle Manager web interface.

VIO Controller Nodes host the OpenStack components and are responsible for integrating the OpenStack APIs with the individual VMware components.

OpenStack services are the interface between OpenStack users and vSphere infrastructure. Incoming OpenStack API requests are translated into vSphere system calls by each service.

For redundancy and availability, OpenStack services run at least two identical Pod replicas. Depending on the load, Cloud administrators can scale the number of pod replicas up or down per service. As OpenStack services scale out horizontally, API requests are load-balanced natively across Kubernetes Pods for even distribution.

| OpenStack Service | VMware Component | Driver |
|---|---|---|
| Nova | vCenter Server | VMware vCenter Driver |
| Glance | vCenter Server | VMware VMDK Driver |
| Cinder | vCenter Server | VMware VMDK Driver |
| Neutron | NSX Manager | VMware NSX Driver / Plug-in |

Nova Compute Pods

In VMware Integrated OpenStack, a vSphere cluster represents a single Nova compute node. This differs from traditional OpenStack deployments, where each hypervisor (or server) is represented as an individual Nova compute node.

The Nova process represents an aggregated view of compute resources. Each vSphere cluster represents a single Nova compute node. Clusters can come from one or more vCenter Servers.

All incoming requests to OpenStack are handled by the Nova Driver, which is integrated with the VMware vCenter Driver through the Nova compute pods.
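The difference between the two models can be illustrated with a short sketch. The inventory layout, the names, and the `vcenter/cluster` naming format are hypothetical and do not reflect how VMware Integrated OpenStack actually names compute nodes. The point is only the counting rule: one compute node per vSphere cluster rather than one per host.

```python
# Hypothetical inventory: vCenter Server -> {vSphere cluster: ESXi host count}
inventory = {
    "vcenter-01": {"compute-pod-a": 16, "compute-pod-b": 32},
    "vcenter-02": {"far-edge-pod-1": 4},
}


def vio_compute_nodes(inv: dict[str, dict[str, int]]) -> list[str]:
    """One Nova compute node per vSphere cluster, across all vCenter Servers."""
    return [
        f"{vcenter}/{cluster}"
        for vcenter, clusters in inv.items()
        for cluster in clusters
    ]


def per_host_compute_node_count(inv: dict[str, dict[str, int]]) -> int:
    """Node count if every hypervisor were its own compute node (traditional model)."""
    return sum(sum(clusters.values()) for clusters in inv.values())


print(len(vio_compute_nodes(inventory)))       # 3
print(per_host_compute_node_count(inventory))  # 52
```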

RabbitMQ Pod

RabbitMQ is the default message queue used by all VIO services. It is an intermediary for messaging, providing applications with a common platform to send and receive messages. All core services of OpenStack connect to the RabbitMQ message bus. Messages are placed in a queue and cleared only after they are acknowledged.

The VIO RabbitMQ implementation runs in active-active-active mode in a cluster environment with queues mirrored between three RabbitMQ Pods.

Each OpenStack service is configured with the IP addresses of the RabbitMQ cluster members, and one node is designated as primary. If the primary node is not reachable, the RabbitMQ client uses one of the remaining nodes. Because queues are mirrored, messages are consumed identically regardless of the node to which a client connects. RabbitMQ provides high scalability.
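The failover behavior described above can be sketched without any messaging library. This is an illustration of the idea only, not the client logic that the OpenStack services use: the function name is hypothetical, the `connect` callable stands in for a real client connection, and trying the remaining nodes in list order is an assumption.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def connect_with_failover(nodes: Sequence[str],
                          connect: Callable[[str], T]) -> tuple[str, T]:
    """Try the primary (first) node, then each remaining node in order.

    Returns the node that accepted the connection and the connection object.
    """
    last_error = None
    for node in nodes:
        try:
            return node, connect(node)
        except ConnectionError as exc:
            last_error = exc  # remember the failure and try the next node
    raise ConnectionError("no RabbitMQ node reachable") from last_error


def fake_connect(node: str) -> str:
    """Stand-in for a real connection attempt; the primary is down."""
    if node == "rabbitmq-0":
        raise ConnectionError(f"{node} unreachable")
    return f"connection to {node}"


node, conn = connect_with_failover(
    ["rabbitmq-0", "rabbitmq-1", "rabbitmq-2"], fake_connect
)
print(node)  # rabbitmq-1
```

Because the queues are mirrored across the three pods, it does not matter to the caller which node ends up serving the connection.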